R6 - Temporal Relational Reasoning in Videos

Zain Nasrullah (998892685)

Review of “Temporal Relational Reasoning in Videos”

Authors: B. Zhou, A. Andonian, A. Torralba

Short Summary

The goal of this paper is to reason about temporal dependencies between video frames at multiple time scales. The authors propose the Temporal Relation Network (TRN) to improve on earlier baselines that excel at recognizing actions but cannot reason about transformations between frames. The module is designed to be plug-and-play, so it can be inserted into any CNN architecture. The architecture uses several frame relation modules, each of which analyzes n-frame relations over frames sampled from the video. For simplicity, Inception pre-trained on ImageNet is used as the base model, and tests are performed with and without the TRN module. The model can capture the essence of an activity given only a few frames from an action. Using a larger number of frames generally improves accuracy, and ordered frames perform significantly better than shuffled ones, suggesting that temporal order plays an important role.
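
To make the n-frame relation idea concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the names `RelationScale`, `multi_scale_scores`, and `max_tuples`, the layer sizes, and the random weights are all assumptions made for illustration, and the per-frame features are random stand-ins for what the base CNN would produce.

```python
import itertools
import random


def linear(weights, bias, x):
    """Apply a dense layer: weights is a list of rows, one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def init_layer(rng, n_in, n_out):
    """Random weights for illustration only; a real model would learn these."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


class RelationScale:
    """One n-frame relation: h(sum over sampled ordered n-tuples of g(concat))."""

    def __init__(self, rng, n_frames, feat_dim, hidden_dim, n_classes, max_tuples=3):
        self.n = n_frames
        self.max_tuples = max_tuples  # hypothetical cap on sampled tuples per scale
        self.g = init_layer(rng, n_frames * feat_dim, hidden_dim)
        self.h = init_layer(rng, hidden_dim, n_classes)

    def __call__(self, frames, rng):
        # Index tuples i < j < ... keep the selected frames in temporal order.
        tuples = list(itertools.combinations(range(len(frames)), self.n))
        if len(tuples) > self.max_tuples:
            tuples = rng.sample(tuples, self.max_tuples)
        pooled = [0.0] * len(self.g[1])
        for idx in tuples:
            concat = [v for i in idx for v in frames[i]]
            hidden = relu(linear(self.g[0], self.g[1], concat))
            pooled = [p + q for p, q in zip(pooled, hidden)]
        return linear(self.h[0], self.h[1], pooled)


def multi_scale_scores(frames, scales, rng):
    """Sum class scores from every relation scale (2-frame, 3-frame, ...)."""
    total = [0.0] * len(scales[0].h[1])
    for scale in scales:
        total = [t + s for t, s in zip(total, scale(frames, rng))]
    return total


rng = random.Random(0)
feat_dim, n_classes, n_video_frames = 4, 3, 5
# Stand-in for per-frame CNN features from the base network.
frames = [[rng.uniform(-1.0, 1.0) for _ in range(feat_dim)] for _ in range(n_video_frames)]
scales = [RelationScale(rng, n, feat_dim, 8, n_classes) for n in (2, 3, 4)]

# Same sampled index tuples (same seed), but the frame order is reversed.
ordered = multi_scale_scores(frames, scales, random.Random(1))
reversed_scores = multi_scale_scores(frames[::-1], scales, random.Random(1))
```

Because each relation function sees its frames concatenated in temporal order, feeding the same frames in a different order generally yields different class scores, which is the property the ordered-versus-shuffled comparison probes.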

The model achieves state-of-the-art results on the Something-Something dataset for human-interaction recognition (33.60% top-1 accuracy) and on the Jester dataset for hand gesture recognition (94.78%). The model also achieves 25.2 mAP on the Charades dataset for daily activity recognition, which exceeds the previous state of the art. A t-SNE visualization shows that the model learns to separate actions into distinct regions of the embedding space; furthermore, similar actions lie closer together, suggesting that the model is learning the meaning behind these actions to some degree.

Main Contributions

1. Propose a Temporal Relation Network (TRN) to learn temporal dependencies between video frames at multiple time scales.

2. Evaluate it on Something-Something, Jester, and Charades, where previous works do not perform well because they lack temporal reasoning.

3. Visualize what the model learns to show that it is in fact learning temporal relations.
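
For reference, the multi-scale relation in the first contribution can be written compactly. This is my reading of the paper's formulation rather than a quotation; here f_i denotes the feature of the i-th sampled frame, g and h are small learned networks, and a separate pair of g and h is assumed for each scale n.

```latex
% n-frame relation over ordered frame tuples, shown for n = 2
T_2(V) = h_\phi\Big( \sum_{i<j} g_\theta(f_i, f_j) \Big)

% multi-scale relation: sum the relation scores from 2 up to N frames
MT_N(V) = T_2(V) + T_3(V) + \dots + T_N(V)
```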

High-Level Evaluation of Paper

This paper places little focus on theory and spends most of its length discussing interesting experimental results. As such, how the model leverages temporal structure isn’t quite clear, but the extensive results demonstrate that it does succeed at the proposed task. Given that the model achieves state of the art on several distinct datasets, an interesting argument can be made about the importance of temporal structure for a reasoning task. That being said, I do not agree with the authors’ claim that the model is learning “common sense”; rather, it is learning the relationships between frames that correspond to a particular action.

Discussion on Evaluation Methodology

With an emphasis on results, the paper provides five tables that compare results on the various tested datasets, along with graphs and accompanying visualizations. The tables are easy to read and the metrics appear to be the standard ones for each dataset, so the results are easy to interpret. I appreciate how most results are kept on a single page, including validation and test results, allowing one to quickly get a sense that the proposed model achieves state of the art and by what margin it exceeds the baselines. The charts in particular are also helpful for understanding the impact of the number of frames and their ordering on recognition accuracy.

You might also like

- Sprinkler System Installation Guide: For Redbuilt Open-Web Trusses and Red-I JoistsDocument12 pagesSprinkler System Installation Guide: For Redbuilt Open-Web Trusses and Red-I JoistsSteve VelardeNo ratings yet

- A Global Averaging Method For Dynamictime Warping, With Applications To ClusteringDocument16 pagesA Global Averaging Method For Dynamictime Warping, With Applications To Clusteringbasela2010No ratings yet

- GeneralizationDocument10 pagesGeneralizationjerry.sharma0312No ratings yet

- GeneralizationDocument10 pagesGeneralizationjerry.sharma0312No ratings yet

- Generative Adversarial Networks in Time Series: A Systematic Literature ReviewDocument31 pagesGenerative Adversarial Networks in Time Series: A Systematic Literature Reviewklaus peterNo ratings yet

- Deep Learning Master ThesisDocument4 pagesDeep Learning Master Thesisdwtnpjyv100% (1)

- DPP For MLDocument120 pagesDPP For MLTheLastArchivistNo ratings yet

- An Experimental Review On Deep Learning Architectures For Time Series ForecastingDocument25 pagesAn Experimental Review On Deep Learning Architectures For Time Series Forecastingpiyoya2565No ratings yet

- Ijcseit 020604Document9 pagesIjcseit 020604ijcseitNo ratings yet

- Synthetic Market Data and Its Applications 1676296950Document42 pagesSynthetic Market Data and Its Applications 1676296950Daniel PeñaNo ratings yet

- Unifying Temporal Data Models Via A Conceptual ModelDocument40 pagesUnifying Temporal Data Models Via A Conceptual ModelChinmay KunkikarNo ratings yet

- Evaluation of Time SeriesDocument12 pagesEvaluation of Time Serieskarina7cerda7o7ateNo ratings yet

- Text Segmentation Via Topic Modeling An PDFDocument11 pagesText Segmentation Via Topic Modeling An PDFDandy MiltonNo ratings yet

- SSRN Id3900141Document43 pagesSSRN Id3900141김기범No ratings yet

- Welco MEDocument15 pagesWelco MEaltafNo ratings yet

- Master Thesis Neural NetworkDocument4 pagesMaster Thesis Neural Networktonichristensenaurora100% (1)

- Decmion Support: ElsevierDocument15 pagesDecmion Support: ElsevierHaythem MzoughiNo ratings yet

- Conference Paper LATENT DIRICHLET ALLOCATION (LDA)Document9 pagesConference Paper LATENT DIRICHLET ALLOCATION (LDA)mahi mNo ratings yet

- Hyperparameter Tuning For Deep Learning in Natural Language ProcessingDocument7 pagesHyperparameter Tuning For Deep Learning in Natural Language ProcessingWardhana Halim KusumaNo ratings yet

- SSRN Id3981160Document44 pagesSSRN Id3981160hetalpatel259No ratings yet

- Time Series Forecasting Deep Learning Review IJNS PreprintDocument26 pagesTime Series Forecasting Deep Learning Review IJNS PreprintdkNo ratings yet

- Literature Review Neural NetworkDocument5 pagesLiterature Review Neural Networkfvffybz9100% (1)

- Yoshua Bengio, Nicolas Boulanger-Lewandowski and Razvan PascanuDocument5 pagesYoshua Bengio, Nicolas Boulanger-Lewandowski and Razvan PascanuHồ Văn ChươngNo ratings yet

- Raahul's Solar Weather Forecasting Using Linear Algebra Proposal PDFDocument11 pagesRaahul's Solar Weather Forecasting Using Linear Algebra Proposal PDFRaahul SinghNo ratings yet

- Totally Ordered Broadcast and Multicast Algorithms: A Comprehensive SurveyDocument52 pagesTotally Ordered Broadcast and Multicast Algorithms: A Comprehensive Surveyashishkr23438No ratings yet

- Master Thesis Web ApplicationDocument5 pagesMaster Thesis Web ApplicationDoMyPaperForMeCanada100% (2)

- Esma El 2012Document6 pagesEsma El 2012Anonymous VNu3ODGavNo ratings yet

- Depso Model For Efficient Clustering Using Drifting Concepts.Document5 pagesDepso Model For Efficient Clustering Using Drifting Concepts.Dr J S KanchanaNo ratings yet

- Generalizability of Semantic Segmentation Techniques: Keshav Bhandari Texas State University, San Marcos, TXDocument6 pagesGeneralizability of Semantic Segmentation Techniques: Keshav Bhandari Texas State University, San Marcos, TXKeshav BhandariNo ratings yet

- Silva Widm2021Document44 pagesSilva Widm2021wowexo4683No ratings yet

- 2002 Internet Research Needs Better ModelsDocument6 pages2002 Internet Research Needs Better ModelsderejeNo ratings yet

- IEEE Solved PROJECTS 2009Document64 pagesIEEE Solved PROJECTS 2009MuniasamyNo ratings yet

- What Can Neural Networks Learn About Flow Physics?Document6 pagesWhat Can Neural Networks Learn About Flow Physics?Javad RahmannezhadNo ratings yet

- LSTM Online Training PDFDocument12 pagesLSTM Online Training PDFRiadh GharbiNo ratings yet

- ResearchDocument2 pagesResearchpostscriptNo ratings yet

- AR-NET Autoregressive Neural Net Untuk Time Series - MASUKIN PDFDocument12 pagesAR-NET Autoregressive Neural Net Untuk Time Series - MASUKIN PDFRais HaqNo ratings yet

- Schema Networks: Zero-Shot Transfer With A Generative Causal Model of Intuitive PhysicsDocument10 pagesSchema Networks: Zero-Shot Transfer With A Generative Causal Model of Intuitive PhysicstazinabhishekNo ratings yet

- Decoupling Superpages From Symmetric Encryption in Web ServicesDocument8 pagesDecoupling Superpages From Symmetric Encryption in Web ServicesWilson CollinsNo ratings yet

- An Iterative Alignment Framework For Temporal Sentence GroundingDocument10 pagesAn Iterative Alignment Framework For Temporal Sentence Grounding万至秦No ratings yet

- Lossless, Virtual Theory For RasterizationseDocument7 pagesLossless, Virtual Theory For Rasterizationsealvarito2009No ratings yet

- Extracting Interpretable Features For Time Series An - 2023 - Expert Systems WitDocument11 pagesExtracting Interpretable Features For Time Series An - 2023 - Expert Systems WitDilip SinghNo ratings yet

- MLSys 2020 What Is The State of Neural Network Pruning PaperDocument18 pagesMLSys 2020 What Is The State of Neural Network Pruning PaperSher Afghan MalikNo ratings yet

- FreDo - Frequency Domain-Based Long-Term Time Series ForecastingDocument12 pagesFreDo - Frequency Domain-Based Long-Term Time Series Forecastingmarcmyomyint1663No ratings yet

- Master Thesis Focus GroupsDocument4 pagesMaster Thesis Focus GroupsBuyingCollegePapersOnlineSantaRosa100% (2)

- A Case For Courseware: Trixie Mendeer and Debra RohrDocument4 pagesA Case For Courseware: Trixie Mendeer and Debra RohrfridaNo ratings yet

- Extra QuestionsDocument6 pagesExtra QuestionsLiêu Đăng KhoaNo ratings yet

- 1.0 Executive Summary: Computational Materials Science CRTADocument1 page1.0 Executive Summary: Computational Materials Science CRTAAnonymous T02GVGzBNo ratings yet

- Text Similarity Using Siamese Networks and TransformersDocument10 pagesText Similarity Using Siamese Networks and TransformersIJRASETPublicationsNo ratings yet

- Tabu Search For Partitioning Dynamic Data Ow ProgramsDocument12 pagesTabu Search For Partitioning Dynamic Data Ow ProgramsDaniel PovedaNo ratings yet

- A Study of The Connectionist Models For Software Reliability PredictionDocument9 pagesA Study of The Connectionist Models For Software Reliability PredictionTirișcă AlexandruNo ratings yet

- Modeling and Simulation Best Practices For Wireless Ad Hoc NetworksDocument9 pagesModeling and Simulation Best Practices For Wireless Ad Hoc NetworksJohnNo ratings yet

- End-to-End Non-Factoid Question Answering With An Interactive Visualization of Neural Attention WeightsDocument6 pagesEnd-to-End Non-Factoid Question Answering With An Interactive Visualization of Neural Attention WeightsPhu NguyenNo ratings yet

- Sag Heer 2019Document19 pagesSag Heer 2019Abimael Avila TorresNo ratings yet

- Pplied Time Series Ransfer LearningDocument4 pagesPplied Time Series Ransfer LearningM.Qasim MahmoodNo ratings yet

- An Efficient and Robust Algorithm For Shape Indexing and RetrievalDocument5 pagesAn Efficient and Robust Algorithm For Shape Indexing and RetrievalShradha PalsaniNo ratings yet

- An Overview and Comparative Analysis of Recurrent Neural Networks For Short Term Load ForecastingDocument41 pagesAn Overview and Comparative Analysis of Recurrent Neural Networks For Short Term Load ForecastingPietroManzoniNo ratings yet

- 1 s2.0 S0263224121002402 MainDocument19 pages1 s2.0 S0263224121002402 Mainvikas sharmaNo ratings yet

- Bachelor Thesis Eth MavtDocument8 pagesBachelor Thesis Eth Mavtafcmqldsw100% (2)

- Distributed Real Time Architecture For D PDFDocument8 pagesDistributed Real Time Architecture For D PDFskwNo ratings yet

- Mastering Partial Least Squares Structural Equation Modeling (Pls-Sem) with Smartpls in 38 HoursFrom EverandMastering Partial Least Squares Structural Equation Modeling (Pls-Sem) with Smartpls in 38 HoursRating: 3 out of 5 stars3/5 (1)

- Data Visualization With Python: BCS358DDocument5 pagesData Visualization With Python: BCS358DBhavana NagarajNo ratings yet

- ABB Ellipse APM Edge - BrochureDocument8 pagesABB Ellipse APM Edge - BrochureCarlos JaraNo ratings yet

- Inverse Kinematics Solution of PUMA 560 Robot Arm Using ANFISDocument5 pagesInverse Kinematics Solution of PUMA 560 Robot Arm Using ANFISanon_731929628No ratings yet

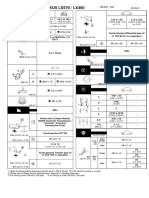

- Single/Dual Compressor Centrifugal Chillers: PEH/PHH 050,063,079,087, 100, 126 PFH/PJH 050,063,079,087, 126Document21 pagesSingle/Dual Compressor Centrifugal Chillers: PEH/PHH 050,063,079,087, 100, 126 PFH/PJH 050,063,079,087, 126Dương DũngNo ratings yet

- FIcha Tecnica LEXUSDocument2 pagesFIcha Tecnica LEXUSJair GonzalezNo ratings yet

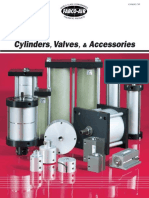

- Catalogo PistonesDocument196 pagesCatalogo PistonesalexjoseNo ratings yet

- Iu-At-137 - Iii - Eng Aspel 308 AbpmDocument37 pagesIu-At-137 - Iii - Eng Aspel 308 AbpmEla MihNo ratings yet

- QW-QAL-626 - (Rev-00) - Prod. and QC Process Flow Chart - Ventura Motor No. 13 (35804)Document6 pagesQW-QAL-626 - (Rev-00) - Prod. and QC Process Flow Chart - Ventura Motor No. 13 (35804)Toso BatamNo ratings yet

- STudyScheme TownhallDocument12 pagesSTudyScheme TownhallNicoleNo ratings yet

- FMEA For Wind TurbineDocument17 pagesFMEA For Wind TurbinealexrferreiraNo ratings yet

- GIS Ch3Document43 pagesGIS Ch3Mahmoud ElnahasNo ratings yet

- Big Data Analytics (BDAG 19-5) : Quiz: GMP - 2019 Term VDocument2 pagesBig Data Analytics (BDAG 19-5) : Quiz: GMP - 2019 Term VdebmatraNo ratings yet

- E BrokerDocument5 pagesE BrokerRashmi SarkarNo ratings yet

- Is AI Dangerous To Humans?Document2 pagesIs AI Dangerous To Humans?Kevin FungNo ratings yet

- Toshiba T300BMV2 - Instruction - ManualDocument74 pagesToshiba T300BMV2 - Instruction - ManualAgnelo FernandesNo ratings yet

- Asrat Simru AppDocument36 pagesAsrat Simru Apptadiso birkuNo ratings yet

- Chapter 5 JavascriptdocumentDocument122 pagesChapter 5 JavascriptdocumentKinNo ratings yet

- MR-185S, - 200S Liquid-Ring Pump: Operating ManualDocument21 pagesMR-185S, - 200S Liquid-Ring Pump: Operating Manualpablo ortizNo ratings yet

- Different Types of Fuses and Its ApplicationsDocument10 pagesDifferent Types of Fuses and Its ApplicationsAhmet MehmetNo ratings yet

- GMA-7 Organizational ChartDocument8 pagesGMA-7 Organizational ChartEmman TagubaNo ratings yet

- Dot MapDocument7 pagesDot MapRohamat MandalNo ratings yet

- InsuransDocument6 pagesInsuransMuhammad FarailhamNo ratings yet

- HR Digital Transformation: The Practical GuideDocument50 pagesHR Digital Transformation: The Practical Guidepaulus chandraNo ratings yet

- Value Creation OikuusDocument68 pagesValue Creation OikuusJefferson CuNo ratings yet

- 35th Annual Dive Show: - Convention Center, San Diego, CaliforniaDocument2 pages35th Annual Dive Show: - Convention Center, San Diego, CaliforniaHà ViNo ratings yet

- Beadbreakers: Pneumatic Driven Hydraulic BeadbreakerDocument2 pagesBeadbreakers: Pneumatic Driven Hydraulic BeadbreakerGenaire LimitedNo ratings yet

- Technical Specifications: Easy. Efficient. ReliableDocument2 pagesTechnical Specifications: Easy. Efficient. ReliableZahid RasoolNo ratings yet

- ARC20011 Mechanics of Structures: Laboratory Session #2: Beam Bending Moment, Measurements and AnalysisDocument22 pagesARC20011 Mechanics of Structures: Laboratory Session #2: Beam Bending Moment, Measurements and AnalysisKushan HiranthaNo ratings yet

- Coffer Slabs FormworkDocument16 pagesCoffer Slabs FormworkHans JonesNo ratings yet