Professional Documents

Culture Documents

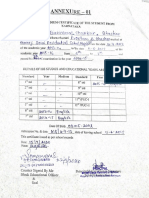

En - K-Nearest Neighbors

Uploaded by

Dhanaraj Bhaskar0 ratings0% found this document useful (0 votes)

9 views3 pagesOriginal Title

en_K-Nearest Neighbors

Copyright

© © All Rights Reserved

Available Formats

TXT, PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

© All Rights Reserved

Available Formats

Download as TXT, PDF, TXT or read online from Scribd

0 ratings0% found this document useful (0 votes)

9 views3 pagesEn - K-Nearest Neighbors

Uploaded by

Dhanaraj BhaskarCopyright:

© All Rights Reserved

Available Formats

Download as TXT, PDF, TXT or read online from Scribd

You are on page 1of 3

Hello and welcome!

In this video, we’ll be covering the k-nearest neighbors algorithm.

So let’s get started.

Imagine that a telecommunications provider has segmented its customer base by

service

usage patterns, categorizing the customers into four groups.

If demographic data can be used to predict group membership, the company can

customize

offers for individual prospective customers.

This is a classification problem.

That is, given the dataset, with predefined labels, we need to build a model to be

used

to predict the class of a new or unknown case.

The example focuses on using demographic data, such as region, age, and marital

status, to

predict usage patterns.

The target field, called custcat, has four possible values that correspond to the

four

customer groups, as follows: Basic Service, E-Service, Plus Service, and Total

Service.

Our objective is to build a classifier, for example using the rows 0 to 7, to

predict

the class of row 8.

We will use a specific type of classification called K-nearest neighbor.

Just for sake of demonstration, let’s use only two fields as predictors -

specifically,

Age and Income, and then plot the customers based on their group membership.

Now, let’s say that we have a new customer, for example, record number 8 with a

known

age and income.

How can we find the class of this customer?

Can we find one of the closest cases and assign the same class label to our new

customer?

Can we also say that the class of our new customer is most probably group 4 (i.e.

total

service) because its nearest neighbor is also of class 4?

Yes, we can.

In fact, it is the first-nearest neighbor.

Now, the question is, “To what extent can we trust our judgment, which is based on

the

first nearest neighbor?”

It might be a poor judgment, especially if the first nearest neighbor is a very

specific

case, or an outlier, correct?

Now, let’s look at our scatter plot again.

Rather than choose the first nearest neighbor, what if we chose the five nearest

neighbors,

and did a majority vote among them to define the class of our new customer?

In this case, we’d see that three out of five nearest neighbors tell us to go for

class

3, which is ”Plus service.”

Doesn’t this make more sense?

Yes, in fact, it does!

In this case, the value of K in the k-nearest neighbors algorithm is 5.

This example highlights the intuition behind the k-nearest neighbors algorithm.

Now, let’s define the k-nearest neighbors.

The k-nearest-neighbors algorithm is a classification algorithm that takes a bunch

of labelled points

and uses them to learn how to label other points.

This algorithm classifies cases based on their similarity to other cases.

In k-nearest neighbors, data points that are near each other are said to be

“neighbors.”

K-nearest neighbors is based on this paradigm: “Similar cases with the same class

labels

are near each other.”

Thus, the distance between two cases is a measure of their dissimilarity.

There are different ways to calculate the similarity, or conversely, the distance

or

dissimilarity of two data points.

For example, this can be done using Euclidian distance.

Now, let’s see how the k-nearest neighbors algorithm actually works.

In a classification problem, the k-nearest neighbors algorithm works as follows:

1. Pick a value for K.

2. Calculate the distance from the new case (holdout from each of the cases in the

dataset).

3. Search for the K observations in the training data that are ‘nearest’ to the

measurements

of the unknown data point.

And 4, predict the response of the unknown data point using the most popular

response value from

the K nearest neighbors.

There are two parts in this algorithm that might be a bit confusing.

First, how to select the correct K; and second, how to compute the similarity

between cases,

for example, among customers?

Let’s first start with second concern, that is, how can we calculate the similarity

between

two data points?

Assume that we have two customers, customer 1 and customer 2.

And, for a moment, assume that these 2 customers have only one feature, Age.

We can easily use a specific type of Minkowski distance to calculate the distance

of these

2 customers.

It is indeed, the Euclidian distance.

Distance of x1 from x2 is root of 34 minus 30 to power of 2, which is 4.

What about if we have more than one feature, for example Age and Income?

If we have income and age for each customer, we can still use the same formula, but

this

time, we’re using it in a 2-dimensional space.

We can also use the same distance matrix for multi-dimensional vectors.

Of course, we have to normalize our feature set to get the accurate dissimilarity

measure.

There are other dissimilarity measures as well that can be used for this purpose

but,

as mentioned, it is highly dependent on data type and also the domain that

classification

is done for it.

As mentioned, K in k-nearest neighbors, is the number of nearest neighbors to

examine.

It is supposed to be specified by the user.

So, how do we choose the right K?

Assume that we want to find the class of the customer noted as question mark on the

chart.

What happens if we choose a very low value of K, let’s say, k=1?

The first nearest point would be Blue, which is class 1.

This would be a bad prediction, since more of the points around it are Magenta, or

class 4.

In fact, since its nearest neighbor is Blue, we can say that we captured the noise

in the

data, or we chose one of the points that was an anomaly in the data.

A low value of K causes a highly complex model as well, which might result in over-

fitting

of the model.

It means the prediction process is not generalized enough to be used for out-of-

sample cases.

Out-of-sample data is data that is outside of the dataset used to train the model.

In other words, it cannot be trusted to be used for prediction of unknown samples.

It’s important to remember that over-fitting is bad, as we want a general model

that works

for any data, not just the data used for training.

Now, on the opposite side of the spectrum, if we choose a very high value of K,

such

as K=20, then the model becomes overly generalized.

So, how we can find the best value for K?

The general solution is to reserve a part of your data for testing the accuracy of

the

model.

Once you’ve done so, choose k =1, and then use the training part for modeling, and

calculate

the accuracy of prediction using all samples in your test set.

Repeat this process, increasing the k, and see which k is best for your model.

For example, in our case, k=4 will give us the best accuracy.

Nearest neighbors analysis can also be used to compute values for a continuous

target.

In this situation, the average or median target value of the nearest neighbors is

used to

obtain the predicted value for the new case.

For example, assume that you are predicting the price of a home based on its

feature set,

such as number of rooms, square footage, the year it was built, and so on.

You can easily find the three nearest neighbor houses, of course -- not only based

on distance,

but also based on all the attributes, and then predict the price of the house, as

the

median of neighbors.

This concludes this video. Thanks for watching!

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (589)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (842)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5806)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Research Methods in Applied SettingsDocument451 pagesResearch Methods in Applied SettingsMark SmithNo ratings yet

- Faculty of Engineering and Technology Ramaiah University of Applied SciencesDocument5 pagesFaculty of Engineering and Technology Ramaiah University of Applied SciencesDhanaraj BhaskarNo ratings yet

- Minor Project Pre PresentationDocument18 pagesMinor Project Pre PresentationDhanaraj BhaskarNo ratings yet

- 1-12 THDocument4 pages1-12 THDhanaraj BhaskarNo ratings yet

- Development of Pigeon Pea Crop Reaper Machine: ©M. S. Ramaiah University of Applied SciencesDocument55 pagesDevelopment of Pigeon Pea Crop Reaper Machine: ©M. S. Ramaiah University of Applied SciencesDhanaraj BhaskarNo ratings yet

- SAS Cluster Project ReportDocument24 pagesSAS Cluster Project ReportBiazi100% (1)

- Z-Test: Montilla, Kurt Kubain M. Cabugatan, Hanif S. Mendez, Mariano Ryan JDocument18 pagesZ-Test: Montilla, Kurt Kubain M. Cabugatan, Hanif S. Mendez, Mariano Ryan JHanif De CabugatanNo ratings yet

- Multivariate and Univariate Process Control: Geometry and Shift DirectionsDocument6 pagesMultivariate and Univariate Process Control: Geometry and Shift DirectionsLata DeshmukhNo ratings yet

- Probability: Mathematical Measure of ChanceDocument22 pagesProbability: Mathematical Measure of ChanceRam MohanNo ratings yet

- Non Parametric Density EstimationDocument4 pagesNon Parametric Density Estimationzeze1No ratings yet

- Inferential Stat 1Document124 pagesInferential Stat 1Israel EsmileNo ratings yet

- The Research Process: © Metpenstat I - Fpsiui 1Document38 pagesThe Research Process: © Metpenstat I - Fpsiui 1DrSwati BhargavaNo ratings yet

- Math3302 Fa2021 SyllabusDocument4 pagesMath3302 Fa2021 SyllabusCuong TranNo ratings yet

- Quantitative FinalDocument43 pagesQuantitative FinaldagusclemlaurenceNo ratings yet

- Netspan Counters and KPIs Overview Document - SR17.00 - Standard - Rev 13.2Document42 pagesNetspan Counters and KPIs Overview Document - SR17.00 - Standard - Rev 13.2BIRENDRA BHARDWAJNo ratings yet

- Cbcs Ba-Bsc Hons Sem-5 Mathematics Cc-11 Probability & Statistics-10230Document5 pagesCbcs Ba-Bsc Hons Sem-5 Mathematics Cc-11 Probability & Statistics-10230SDasNo ratings yet

- The Impact of Strategic Planning On Organizational Performance Through Strategy ImplementationDocument6 pagesThe Impact of Strategic Planning On Organizational Performance Through Strategy ImplementationStephen NyakundiNo ratings yet

- Edexcel GCE: Tuesday 19 June 2001 Time: 1 Hour 30 MinutesDocument5 pagesEdexcel GCE: Tuesday 19 June 2001 Time: 1 Hour 30 MinutesRahyan AshrafNo ratings yet

- Chase Cats1dDocument26 pagesChase Cats1dagox194No ratings yet

- An Introduction To The BootstrapDocument7 pagesAn Introduction To The Bootstraphytsang123No ratings yet

- SMU MBA 103 - Statistics For Management Free Solved AssignmentDocument12 pagesSMU MBA 103 - Statistics For Management Free Solved Assignmentrahulverma25120% (2)

- A Pre Study For Developing and Exploring An "Impulsive Buying Scale" For Turkish PopulationDocument12 pagesA Pre Study For Developing and Exploring An "Impulsive Buying Scale" For Turkish PopulationIndrayani Gustami PrayitnoNo ratings yet

- MFIN6201 Empirical Techniques and Application in Finance S12017Document16 pagesMFIN6201 Empirical Techniques and Application in Finance S12017Sam TripNo ratings yet

- Modeling of Activated Sludge With ASM1 Model, Case Study On Wastewater Treatment Plant of South of IsfahanDocument10 pagesModeling of Activated Sludge With ASM1 Model, Case Study On Wastewater Treatment Plant of South of IsfahanAnish GhimireNo ratings yet

- Tisa Random FileDocument23 pagesTisa Random Filedaenieltan2No ratings yet

- International Business ManagementDocument14 pagesInternational Business Managementmapas_salemNo ratings yet

- Proc GLM - Sas User GuideDocument190 pagesProc GLM - Sas User GuideNilkanth ChapoleNo ratings yet

- RM - 10 - Hypothesis TestingDocument18 pagesRM - 10 - Hypothesis Testingrizul SAININo ratings yet

- ISYE 6420 SyllabusDocument1 pageISYE 6420 SyllabusYasin Çagatay GültekinNo ratings yet

- Template - Chapter 3Document5 pagesTemplate - Chapter 3Anony MousNo ratings yet

- Bmgt25 - LM 5 - Forecasting Pt. 2Document41 pagesBmgt25 - LM 5 - Forecasting Pt. 2Ronald ArboledaNo ratings yet

- AI Lab 12 & 13Document8 pagesAI Lab 12 & 13Rameez FazalNo ratings yet

- BDM Curriculum 1665047518017Document2 pagesBDM Curriculum 1665047518017syedNo ratings yet

- Uma SekaranDocument158 pagesUma SekaranSehrish Jabeen67% (3)