
Pyspark RDD Cheat Sheet Python For Data Science

Uploaded by Angel Chirinos


Python For Data Science

PySpark RDD Cheat Sheet

Learn PySpark RDD online at www.DataCamp.com

Spark

PySpark is the Spark Python API that exposes the Spark programming model to Python.

> Initializing Spark

SparkContext

>>> from pyspark import SparkContext
>>> sc = SparkContext(master = 'local[2]')

Inspect SparkContext

>>> sc.version #Retrieve SparkContext version
>>> sc.pythonVer #Retrieve Python version
>>> sc.master #Master URL to connect to
>>> str(sc.sparkHome) #Path where Spark is installed on worker nodes
>>> str(sc.sparkUser()) #Retrieve name of the Spark User running SparkContext
>>> sc.appName #Return application name
>>> sc.applicationId #Retrieve application ID
>>> sc.defaultParallelism #Return default level of parallelism
>>> sc.defaultMinPartitions #Default minimum number of partitions for RDDs

Configuration

>>> from pyspark import SparkConf, SparkContext
>>> conf = (SparkConf()
            .setMaster("local")
            .setAppName("My app")
            .set("spark.executor.memory", "1g"))
>>> sc = SparkContext(conf = conf)

Using The Shell

In the PySpark shell, a special interpreter-aware SparkContext is already created in the variable called sc.

$ ./bin/spark-shell --master local[2]
$ ./bin/pyspark --master local[4] --py-files code.py

Set which master the context connects to with the --master argument, and add Python .zip, .egg or .py files to the runtime path by passing a comma-separated list to --py-files.

> Loading Data

Parallelized Collections

>>> rdd = sc.parallelize([('a',7),('a',2),('b',2)])
>>> rdd2 = sc.parallelize([('a',2),('d',1),('b',1)])
>>> rdd3 = sc.parallelize(range(100))
>>> rdd4 = sc.parallelize([("a",["x","y","z"]),
                           ("b",["p", "r"])])

External Data

Read either one text file from HDFS, a local file system or any Hadoop-supported file system URI with textFile(), or read in a directory of text files with wholeTextFiles().

>>> textFile = sc.textFile("/my/directory/*.txt")
>>> textFile2 = sc.wholeTextFiles("/my/directory/")

> Retrieving RDD Information

Basic Information

>>> rdd.getNumPartitions() #List the number of partitions
>>> rdd.count() #Count RDD instances
3
>>> rdd.countByKey() #Count RDD instances by key
defaultdict(<type 'int'>,{'a':2,'b':1})
>>> rdd.countByValue() #Count RDD instances by value
defaultdict(<type 'int'>,{('b',2):1,('a',2):1,('a',7):1})
>>> rdd.collectAsMap() #Return (key,value) pairs as a dictionary
{'a': 2,'b': 2}
>>> rdd3.sum() #Sum of RDD elements
4950
>>> sc.parallelize([]).isEmpty() #Check whether RDD is empty
True

Summary

>>> rdd3.max() #Maximum value of RDD elements
99
>>> rdd3.min() #Minimum value of RDD elements
>>> rdd3.mean() #Mean value of RDD elements
49.5
>>> rdd3.stdev() #Standard deviation of RDD elements
28.866070047722118
>>> rdd3.variance() #Compute variance of RDD elements
833.25
>>> rdd3.histogram(3) #Compute histogram by bins
([0,33,66,99],[33,33,34])
>>> rdd3.stats() #Summary statistics (count, mean, stdev, max & min)

> Applying Functions

#Apply a function to each RDD element
>>> rdd.map(lambda x: x+(x[1],x[0])).collect()
[('a',7,7,'a'),('a',2,2,'a'),('b',2,2,'b')]
#Apply a function to each RDD element and flatten the result
>>> rdd5 = rdd.flatMap(lambda x: x+(x[1],x[0]))
>>> rdd5.collect()
['a',7,7,'a','a',2,2,'a','b',2,2,'b']
#Apply a flatMap function to each (key,value) pair of rdd4 without changing the keys
>>> rdd4.flatMapValues(lambda x: x).collect()
[('a','x'),('a','y'),('a','z'),('b','p'),('b','r')]

> Selecting Data

Getting

>>> rdd.collect() #Return a list with all RDD elements
[('a', 7), ('a', 2), ('b', 2)]
>>> rdd.take(2) #Take first 2 RDD elements
[('a', 7), ('a', 2)]
>>> rdd.first() #Take first RDD element
('a', 7)
>>> rdd.top(2) #Take top 2 RDD elements
[('b', 2), ('a', 7)]

Sampling

>>> rdd3.sample(False, 0.15, 81).collect() #Return sampled subset of rdd3
[3,4,27,31,40,41,42,43,60,76,79,80,86,97]

Filtering

>>> rdd.filter(lambda x: "a" in x).collect() #Filter the RDD
[('a',7),('a',2)]
>>> rdd5.distinct().collect() #Return distinct RDD values
['a',2,'b',7]
>>> rdd.keys().collect() #Return (key,value) RDD's keys
['a', 'a', 'b']

> Iterating

>>> def g(x): print(x)
>>> rdd.foreach(g) #Apply a function to all RDD elements
('a', 7)
('b', 2)
('a', 2)

> Reshaping Data

Reducing

>>> rdd.reduceByKey(lambda x,y : x+y).collect() #Merge the rdd values for each key
[('a',9),('b',2)]
>>> rdd.reduce(lambda a, b: a + b) #Merge the rdd values
('a',7,'a',2,'b',2)

Grouping by

>>> rdd3.groupBy(lambda x: x % 2).mapValues(list).collect() #Return RDD of grouped values
>>> rdd.groupByKey().mapValues(list).collect() #Group rdd by key
[('a',[7,2]),('b',[2])]

Aggregating

>>> seqOp = (lambda x,y: (x[0]+y,x[1]+1))
>>> combOp = (lambda x,y:(x[0]+y[0],x[1]+y[1]))
#Aggregate RDD elements of each partition and then the results
>>> rdd3.aggregate((0,0),seqOp,combOp)
(4950,100)
#Aggregate values of each RDD key
>>> rdd.aggregateByKey((0,0),seqOp,combOp).collect()
[('a',(9,2)), ('b',(2,1))]
#Aggregate the elements of each partition, and then the results
>>> from operator import add #Addition function used by fold and foldByKey
>>> rdd3.fold(0,add)
4950
#Merge the values for each key
>>> rdd.foldByKey(0, add).collect()
[('a',9),('b',2)]
#Create tuples of RDD elements by applying a function
>>> rdd3.keyBy(lambda x: x+x).collect()

> Mathematical Operations

>>> rdd.subtract(rdd2).collect() #Return each rdd value not contained in rdd2
[('b',2),('a',7)]
#Return each (key,value) pair of rdd2 with no matching key in rdd
>>> rdd2.subtractByKey(rdd).collect()
[('d', 1)]
>>> rdd.cartesian(rdd2).collect() #Return the Cartesian product of rdd and rdd2

> Sort

>>> rdd2.sortBy(lambda x: x[1]).collect() #Sort RDD by given function
[('d',1),('b',1),('a',2)]
>>> rdd2.sortByKey().collect() #Sort (key, value) RDD by key
[('a',2),('b',1),('d',1)]

> Repartitioning

>>> rdd.repartition(4) #New RDD with 4 partitions
>>> rdd.coalesce(1) #Decrease the number of partitions in the RDD to 1

> Saving

>>> rdd.saveAsTextFile("rdd.txt")
>>> rdd.saveAsHadoopFile("hdfs://namenodehost/parent/child",
                         'org.apache.hadoop.mapred.TextOutputFormat')

> Stopping SparkContext

>>> sc.stop()

> Execution

$ ./bin/spark-submit examples/src/main/python/pi.py
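
The aggregate example in this sheet, rdd3.aggregate((0,0),seqOp,combOp), returns (4950,100): a running (sum, count) pair. The plain-Python sketch below imitates that two-step pattern without needing a Spark installation. It is an illustration rather than Spark's own code: the helper name aggregate_sketch and the two-way split of the data are assumptions made for the example, and a real RDD decides its own partitioning.

```python
from functools import reduce

def aggregate_sketch(partitions, zero_value, seq_op, comb_op):
    """Plain-Python imitation of the aggregate pattern over a list of partitions."""
    # Step 1: fold each partition on its own, starting from the zero value.
    per_partition = [reduce(seq_op, part, zero_value) for part in partitions]
    # Step 2: merge the per-partition results, again starting from the zero value.
    return reduce(comb_op, per_partition, zero_value)

# Same lambdas as the cheat sheet: the accumulator is a (sum, count) pair.
seq_op = lambda acc, x: (acc[0] + x, acc[1] + 1)
comb_op = lambda a, b: (a[0] + b[0], a[1] + b[1])

data = list(range(100))
partitions = [data[:50], data[50:]]  # hypothetical split into two partitions

total, count = aggregate_sketch(partitions, (0, 0), seq_op, comb_op)
print((total, count))  # (4950, 100)
print(total / count)   # 49.5
```

Because the zero value is applied once per partition and once more in the final merge, it should be a neutral element for both functions, as (0,0) is here. Dividing the two fields gives 49.5, which matches the rdd3.mean() output listed under Summary.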

Learn Data Skills Online at www.DataCamp.com

You might also like

- PySpark Cheat Sheet PythonDocument1 pagePySpark Cheat Sheet PythonsreedharNo ratings yet

- PySpark RDD Basics PDFDocument1 pagePySpark RDD Basics PDFCOCONo ratings yet

- Python Spark RDD Cheat SheetDocument1 pagePython Spark RDD Cheat Sheetram179No ratings yet

- PySpark RDD Cheat SheetDocument1 pagePySpark RDD Cheat SheetJuan Ignacio NavarreteNo ratings yet

- CS226 06 RDDDocument29 pagesCS226 06 RDDchenna kesavaNo ratings yet

- PySpark Cheat Sheet For RDD OperationsDocument1 pagePySpark Cheat Sheet For RDD OperationsZyad AhmedNo ratings yet

- Chapter 2Document35 pagesChapter 2AceNo ratings yet

- Chapter 2Document35 pagesChapter 2amirNo ratings yet

- PySpark CheatSheet EdurekaDocument1 pagePySpark CheatSheet EdurekaBL PipasNo ratings yet

- Basics of RDDDocument84 pagesBasics of RDDjustin maxtonNo ratings yet

- Task SparkDocument4 pagesTask SparkAzza A. AzizNo ratings yet

- PySpark Transformations TutorialDocument58 pagesPySpark Transformations Tutorialravikumar lanka100% (1)

- SparkDocument12 pagesSparkPRAMOTH KJNo ratings yet

- Big Data Analysis with Scala and Spark Transformations and ActionsDocument17 pagesBig Data Analysis with Scala and Spark Transformations and ActionsddNo ratings yet

- Apache Spark TutorialsDocument9 pagesApache Spark Tutorialsronics123No ratings yet

- librrd API functionsDocument6 pageslibrrd API functionsAymen ChaoukiNo ratings yet

- "Bdafinal2.Txt" '/T': ###Model Building #Decision TreeDocument3 pages"Bdafinal2.Txt" '/T': ###Model Building #Decision Treeanchit005No ratings yet

- Java - Max Index DiffDocument1 pageJava - Max Index DiffficatssolutionsNo ratings yet

- DS Algo Lab - Dynamic & Jagged ArraysDocument4 pagesDS Algo Lab - Dynamic & Jagged ArraysKakashi HatakaNo ratings yet

- Spark RDD: Nikolay Join Us in Telegram T.me/apache - Spark 2019Document28 pagesSpark RDD: Nikolay Join Us in Telegram T.me/apache - Spark 2019RAHUL SANAPNo ratings yet

- Data Wrangling Cheat SheetDocument1 pageData Wrangling Cheat Sheetcuneyt noksanNo ratings yet

- Python For Data Science: Advanced Indexing Data Wrangling in Pandas Cheat Sheet Combining DataDocument1 pagePython For Data Science: Advanced Indexing Data Wrangling in Pandas Cheat Sheet Combining DatalocutoNo ratings yet

- 4 - Action and RDD TransformationsDocument25 pages4 - Action and RDD Transformationsravikumar lankaNo ratings yet

- Building An LLVM BackendDocument65 pagesBuilding An LLVM BackendfranNo ratings yet

- Assignment Group B16Document3 pagesAssignment Group B16mayankNo ratings yet

- CS 61A Scheme Midterm 2 Cheat SheetDocument2 pagesCS 61A Scheme Midterm 2 Cheat SheetPhelim BradleyNo ratings yet

- Spark TutorialDocument17 pagesSpark TutorialGerardo PerezNo ratings yet

- Pyspark EssentialsDocument24 pagesPyspark EssentialsBasudev ChhotrayNo ratings yet

- RRR Bank Nifty LevelsDocument5 pagesRRR Bank Nifty LevelsRaj GoudNo ratings yet

- Spark Transformations and ActionsDocument4 pagesSpark Transformations and ActionsjuliatomvaNo ratings yet

- Apache Spark: CS240A Winter 2016. T YangDocument36 pagesApache Spark: CS240A Winter 2016. T Yangomegapoint077609No ratings yet

- DUCKDB OutlineDocument3 pagesDUCKDB Outlinedearjais3928No ratings yet

- Opencv CheatsheetDocument2 pagesOpencv CheatsheetJ.Simon GoodallNo ratings yet

- Potensial ListrikDocument3 pagesPotensial Listrikbanana twellzNo ratings yet

- Sparkr: Interactive R at Scale: Shivaram Venkataraman Zongheng YangDocument36 pagesSparkr: Interactive R at Scale: Shivaram Venkataraman Zongheng YangAnil PatialNo ratings yet

- OpenCV 2.1 Cheat Sheet (C++) Reference GuideDocument2 pagesOpenCV 2.1 Cheat Sheet (C++) Reference GuidemartinakosNo ratings yet

- Mysql Cheat Sheet: by ViaDocument2 pagesMysql Cheat Sheet: by ViaSri BalajiNo ratings yet

- Graph Analytics with GraphXDocument34 pagesGraph Analytics with GraphXwebdaxterNo ratings yet

- SPARK SQL AND GRAPHX ANALYSISDocument5 pagesSPARK SQL AND GRAPHX ANALYSISbenben08No ratings yet

- groupByKey VS reduceByKeyDocument3 pagesgroupByKey VS reduceByKeysurendra yandraNo ratings yet

- Spark Join2Document14 pagesSpark Join2Kanna BabuNo ratings yet

- AT - Better C Code For ARM DevicesDocument30 pagesAT - Better C Code For ARM DevicesjvgediyaNo ratings yet

- Guwhati MS 22-23Document7 pagesGuwhati MS 22-23stoppo851No ratings yet

- Mysql Cheat Sheet: by ViaDocument2 pagesMysql Cheat Sheet: by ViaDaniel ŘehákNo ratings yet

- Pandas: Learn Python For Data Science InteractivelyDocument1 pagePandas: Learn Python For Data Science InteractivelySofia HerreraNo ratings yet

- EPDDocument2 pagesEPDBhanuNo ratings yet

- Ravi Pyspark RDD Tutorial 1665758938Document20 pagesRavi Pyspark RDD Tutorial 1665758938Sree KrithNo ratings yet

- Institute - Uie Department-Academic Unit-2: Array & StringsDocument16 pagesInstitute - Uie Department-Academic Unit-2: Array & StringsArjun BNo ratings yet

- R Labs ModifiedDocument25 pagesR Labs ModifiedSarah RabeaNo ratings yet

- RMaria DBDocument14 pagesRMaria DBPedroM.F.NakashimaNo ratings yet

- DMSL Assignment 12Document6 pagesDMSL Assignment 12sirefen421No ratings yet

- Xts Cheat Sheet R For Data Science: Export Xts Objects Missing ValuesDocument1 pageXts Cheat Sheet R For Data Science: Export Xts Objects Missing ValuesAngel ChirinosNo ratings yet

- Anatomy of A Figure: Cheat SheetDocument5 pagesAnatomy of A Figure: Cheat SheetAngel ChirinosNo ratings yet

- Cisco Systems Devnet Associate Devasc 200-901 Official Certification Guide PDFDocument1,338 pagesCisco Systems Devnet Associate Devasc 200-901 Official Certification Guide PDFAngel Chirinos50% (6)

- Basic Two (B02) Hoja de Trabajo A Clave de RespuestasDocument8 pagesBasic Two (B02) Hoja de Trabajo A Clave de RespuestasAngel ChirinosNo ratings yet

- Introduction To Linear RegressionDocument6 pagesIntroduction To Linear RegressionAngel ChirinosNo ratings yet

- Clase 3 UBER Sin PilotosDocument3 pagesClase 3 UBER Sin PilotosAngel ChirinosNo ratings yet

- About InvestopediaDocument10 pagesAbout InvestopediaMuhammad SalmanNo ratings yet

- Student ProfileDocument2 pagesStudent Profileapi-571568290No ratings yet

- Competency MappingDocument20 pagesCompetency MappingMalleyboina NagarajuNo ratings yet

- Business Math - Interest QuizDocument1 pageBusiness Math - Interest QuizAi ReenNo ratings yet

- Partial Molar PropertiesDocument6 pagesPartial Molar PropertiesNISHTHA PANDEYNo ratings yet

- Plates of The Dinosaur Stegosaurus - Forced Convection Heat Loos FinsDocument3 pagesPlates of The Dinosaur Stegosaurus - Forced Convection Heat Loos FinsJuan Fernando Cano LarrotaNo ratings yet

- SDS SikarugasolDocument9 pagesSDS SikarugasolIis InayahNo ratings yet

- Unusual Crowd Activity Detection Using Opencv and Motion Influence MapDocument6 pagesUnusual Crowd Activity Detection Using Opencv and Motion Influence MapAkshat GuptaNo ratings yet

- (332-345) IE - ElectiveDocument14 pages(332-345) IE - Electivegangadharan tharumarNo ratings yet

- Nicotin ADocument12 pagesNicotin ACristina OzarciucNo ratings yet

- CBSE Class 8 Mathematics Ptactice WorksheetDocument1 pageCBSE Class 8 Mathematics Ptactice WorksheetArchi SamantaraNo ratings yet

- Understanding and Applying The ANSI/ ISA 18.2 Alarm Management StandardDocument260 pagesUnderstanding and Applying The ANSI/ ISA 18.2 Alarm Management StandardHeri Fadli SinagaNo ratings yet

- Cisco VoipDocument37 pagesCisco VoipLino Vargas0% (1)

- NI Kontakt Vintage Organs Manual EnglishDocument31 pagesNI Kontakt Vintage Organs Manual Englishrocciye100% (1)

- Syntax Score Calculation With Multislice Computed Tomographic Angiography in Comparison To Invasive Coronary Angiography PDFDocument5 pagesSyntax Score Calculation With Multislice Computed Tomographic Angiography in Comparison To Invasive Coronary Angiography PDFMbak RockerNo ratings yet

- Hantavirus Epi AlertDocument4 pagesHantavirus Epi AlertSutirtho MukherjiNo ratings yet

- Teltonika Networks CatalogueDocument40 pagesTeltonika Networks CatalogueazizNo ratings yet

- Creating and Using Virtual DPUsDocument20 pagesCreating and Using Virtual DPUsDeepak Gupta100% (1)

- REST API For Oracle Fusion Cloud HCMDocument19 pagesREST API For Oracle Fusion Cloud HCMerick landaverdeNo ratings yet

- (Nick Lund - Grenadier) Fantasy Warriors Special RulesDocument11 pages(Nick Lund - Grenadier) Fantasy Warriors Special Rulesjasc0_hotmail_it100% (1)

- PK 7Document45 pagesPK 7Hernan MansillaNo ratings yet

- Group5 AssignmentDocument10 pagesGroup5 AssignmentYenew AyenewNo ratings yet

- Seven Tips For Surviving R: John Mount Win Vector LLC Bay Area R Users Meetup October 13, 2009 Based OnDocument15 pagesSeven Tips For Surviving R: John Mount Win Vector LLC Bay Area R Users Meetup October 13, 2009 Based OnMarco A SuqorNo ratings yet

- Lead Creation v. Schedule A - 1st Amended ComplaintDocument16 pagesLead Creation v. Schedule A - 1st Amended ComplaintSarah BursteinNo ratings yet

- Diaphragm Wall PresentationDocument52 pagesDiaphragm Wall PresentationGagan Goswami100% (11)

- Analyzing Memory Bus to Meet DDR Specification Using Mixed-Domain Channel ModelingDocument30 pagesAnalyzing Memory Bus to Meet DDR Specification Using Mixed-Domain Channel ModelingVăn CôngNo ratings yet

- UEM Sol To Exerc Chap 097Document11 pagesUEM Sol To Exerc Chap 097sibieNo ratings yet

- Alternative Price List Usa DypartsDocument20 pagesAlternative Price List Usa DypartsMarcel BaqueNo ratings yet

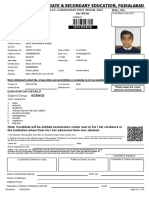

- Roll No. Form No.: Private Admission Form S.S.C. Examination First Annual 2023 9th FRESHDocument3 pagesRoll No. Form No.: Private Admission Form S.S.C. Examination First Annual 2023 9th FRESHBeenish MirzaNo ratings yet

- Man 040 0001Document42 pagesMan 040 0001arturo tuñoque effioNo ratings yet