Hadoop and MapReduce Cheat Sheet

Uploaded by connectbalajir

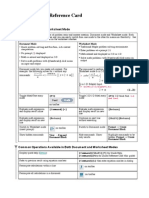

Hadoop & MapReduce Basics

Hadoop

Hadoop is a framework designed to handle large volumes of data, both structured and unstructured.

HDFS

The Hadoop Distributed File System is a framework designed to manage huge volumes of data in a simple and pragmatic way. It runs across a large number of servers, each of which stores part of the file system.

MapReduce

MapReduce is a framework for processing parallelizable problems across huge datasets using a large number of systems, referred to as a cluster. It is a processing technique and programming model for distributed computing based on Java.

Mahout

Apache Mahout is an open-source algebraic framework for data mining that works in distributed environments with simple programming languages.

To secure Hadoop, configure it with the following aspects:

• Authentication:
  • Define users
  • Enable Kerberos in Hadoop
  • Set up a Knox gateway to control access and authentication to the HDFS cluster
• Authorization:
  • Define groups
  • Define HDFS permissions
  • Define HDFS ACLs
• Audit:
  • Enable process execution audit trail
• Data protection:
  • Enable wire encryption with Hadoop

Components of MapReduce

• PayLoad: The applications that implement the Map and Reduce functions and form the core of the job
• MRUnit: Unit test framework for MapReduce
• Mapper: Maps the input key/value pairs to a set of intermediate key/value pairs
• NameNode: Node that manages HDFS
• DataNode: Node where the data resides before processing takes place
• MasterNode: Node where the JobTracker runs and accepts job requests from clients
• SlaveNode: Node where the Map and Reduce programs run
• JobTracker: Schedules jobs and tracks the jobs assigned to the TaskTracker
• TaskTracker: Tracks the task and reports its status to the JobTracker
• Job: A program that executes a Mapper and Reducer across a dataset
• Task: An execution of a Mapper or Reducer on a piece of data
• Task Attempt: A particular instance of an attempt to execute a task on a SlaveNode

Hadoop HDFS List File Commands

| Command | Task |
| --- | --- |
| `hdfs dfs -ls /` | Lists all the files and directories at the given HDFS destination path |
| `hdfs dfs -ls -d /hadoop` | Lists the details of the /hadoop directory entry itself rather than its contents |
| `hdfs dfs -ls -R /hadoop` | Recursively lists all the files in the Hadoop directory and all of its subdirectories |
| `hdfs dfs -ls hadoop/dat*` | Lists all the files in the Hadoop directory whose names start with 'dat' |

HDFS Basic Commands

| Command | Task |
| --- | --- |
| `hdfs dfs -put logs.csv /data/` | Uploads a file from the local file system to HDFS |
| `hdfs dfs -cat /data/logs.csv` | Reads the content of the file |
| `hdfs dfs -chmod 744 /data/logs.csv` | Changes the permissions of the file |
| `hdfs dfs -chmod -R 744 /data/logs.csv` | Changes the permissions of the files recursively |
| `hdfs dfs -setrep -w 5 /data/logs.csv` | Sets the replication factor to 5 |
| `hdfs dfs -du -h /data/logs.csv` | Checks the size of the file |
| `hdfs dfs -mv logs.csv logs/` | Moves the file to a newly created subdirectory |
| `hdfs dfs -rm -r logs` | Removes the directory from HDFS |
| `stop-all.sh` | Stops the cluster |
| `start-all.sh` | Starts the cluster |
| `hadoop version` | Checks the version of Hadoop |
| `hdfs fsck /` | Checks the health of the files |
| `hdfs dfsadmin -safemode leave` | Turns off safe mode on the NameNode |
| `hdfs namenode -format` | Formats the NameNode |
| `hadoop [--config confdir] archive -archiveName NAME -p` | Creates a Hadoop archive |
| `hadoop fs [generic options] -touchz <path> ...` | Creates an empty file in an HDFS directory |
| `hdfs dfs [generic options] -getmerge [-nl] <src> <localdst>` | Concatenates all files in a directory into one file |
| `hdfs dfs -chown -R admin:hadoop /new-dir` | Changes the owner and group recursively |

Hadoop Commands

| Command | Task |
| --- | --- |
| `yarn` | Shows the yarn help |
| `yarn [--config confdir]` | Defines the configuration directory |
| `yarn [--loglevel loglevel]` | Defines the log level, which can be fatal, error, warn, info, debug or trace |
| `yarn classpath` | Shows the Hadoop classpath |
| `yarn application` | Shows and kills Hadoop applications |
| `yarn applicationattempt` | Shows the application attempt |
| `yarn container` | Shows the container information |
| `yarn node` | Shows the node information |
| `yarn queue` | Shows the queue information |

Commands used to interact with MapReduce

| Command | Task |
| --- | --- |
| `hadoop job -submit <job-file>` | Submits the job |
| `hadoop job -status <job-id>` | Shows the map and reduce completion status and all job counters |
| `hadoop job -counter <job-id> <group-name> <countername>` | Prints the counter value |
| `hadoop job -kill <job-id>` | Kills the job |
| `hadoop job -events <job-id> <fromevent-#> <#-of-events>` | Shows the event details received by the JobTracker for the given range |
| `hadoop job -history [all] <jobOutputDir>` | Prints the job details, plus the killed and failed TIP (task-in-progress) details |
| `hadoop job -list [all]` | Displays all the jobs |
| `hadoop job -kill-task <task-id>` | Kills the task |
| `hadoop job -fail-task <task-id>` | Fails the task |
| `hadoop job -set-priority <job-id> <priority>` | Changes the priority of the job |
| `$HADOOP_HOME/bin/hadoop job -kill <JOB-ID>` | Kills the job |
| `$HADOOP_HOME/bin/hadoop job -history <DIR-NAME>` | Shows the history of the jobs |

Important commands used in MapReduce

Usage: mapred [Generic commands] <parameters>

| Parameter | Task |
| --- | --- |
| `-input directory/file-name` | Input location for the mapper |
| `-output directory-name` | Output location for the reducer |
| `-mapper executable or script or JavaClassName` | Mapper executable |
| `-reducer executable or script or JavaClassName` | Reducer executable |
| `-file file-name` | Makes the mapper, reducer, or combiner executable available locally on the computing nodes |
| `-numReduceTasks` | Specifies the number of reducers |
| `-mapdebug` | Script to call when the map task fails |
| `-reducedebug` | Script to call when the reduce task fails |
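The Mapper and Reducer roles listed under the MapReduce components can be tried out locally before anything is submitted to a cluster. The sketch below is a minimal in-memory word count, assuming a single process and a small list of input lines. The function names (`map_words`, `shuffle`, `reduce_counts`, `run_job`) are hypothetical and are not part of any Hadoop API. It only illustrates how input key/value pairs become intermediate pairs, get grouped by key, and are then reduced.

```python
from collections import defaultdict
from itertools import chain


def map_words(line):
    """Mapper (hypothetical name): emit an intermediate (word, 1) pair per word."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key, standing in for the framework's shuffle step."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(key, values):
    """Reducer (hypothetical name): collapse all values for one key into a total."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle, and reduce in sequence over an in-memory dataset."""
    intermediate = chain.from_iterable(map_words(line) for line in lines)
    grouped = shuffle(intermediate)
    # Keys are processed in sorted order so the result is deterministic.
    return dict(reduce_counts(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    sample = ["hadoop stores data", "mapreduce processes data"]
    print(run_job(sample))
    # prints {'data': 2, 'hadoop': 1, 'mapreduce': 1, 'processes': 1, 'stores': 1}
```

On a real cluster the map calls would run as separate tasks on different nodes and the grouping would be handled by the framework. This sketch keeps everything in one process only to make the data flow visible.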
