How to make the best use of Live Sessions

• Please log in on time

• Check your network connection and audio before the class to ensure a smooth session

• All participants are on mute by default. You will be unmuted when requested or as needed

• Use the “Questions” panel in your webinar tool to interact with the instructor at any point during the class

• Ask and answer questions to make your learning interactive

• Keep the support phone number handy (US: 1855 818 0063 (toll free), India: +91 90191 17772) and raise tickets from the LMS in case of any issues with the tool

• Logging off and rejoining usually resolves tool-related issues

Copyright © 2017, edureka and/or its affiliates. All rights reserved.

Big Data & Hadoop Certification Training

Course Outline

• Understanding Big Data and Hadoop

• Hadoop Architecture and HDFS

• Hadoop MapReduce Framework

• Advance MapReduce

• Pig

• Hive

• Advance Hive and HBase

• Advance HBase

• Processing Distributed Data with Apache Spark

• Apache Oozie and Hadoop Project

• Kafka Producer

• Kafka Consumer

• Kafka Operation and Performance Tuning

• Kafka Cluster Architectures & Administering Kafka

• Kafka Monitoring & Stream Processing

• Integration of Kafka with Hadoop & Storm

• Integration of Kafka with Spark & Flume

• Kafka Project

Module 4: Advance MapReduce

Objectives

At the end of this module, you will be able to:

• Implement Counters in MapReduce

• Understand Map and Reduce Side joins

• Test MapReduce Programs

• Implement Distributed Cache Concept in MapReduce

• Implement Custom Input Format in MapReduce

• Implement Sequence Input Format in MapReduce

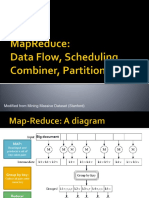

Let’s Revise

Data flow from INPUT DATA across Node 1 and Node 2:

• Input data is distributed to nodes

• Each map task works on a “split” of data

• Mapper outputs intermediate data

• Data is exchanged between nodes in a “shuffle” process

• Intermediate data of the same key goes to the same reducer

• Reducer output is stored
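
The flow on this slide can be sketched in plain Python. This is only an in-memory simulation, not Hadoop code: the function names (`map_task`, `shuffle`, `reduce_task`, `run_job`), the word-count logic, and the character-sum partitioner are all assumptions made for illustration.

```python
from collections import defaultdict

def map_task(split):
    """Emit an intermediate (word, 1) pair for every word in one input split."""
    for line in split:
        for word in line.split():
            yield word.lower(), 1

def shuffle(intermediate_pairs, num_reducers):
    """Route every pair with the same key to the same reducer partition."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in intermediate_pairs:
        # Hypothetical partitioner: character-code sum modulo the reducer count.
        index = sum(map(ord, key)) % num_reducers
        partitions[index][key].append(value)
    return partitions

def reduce_task(partition):
    """Sum the values collected for each key in one partition."""
    return {key: sum(values) for key, values in sorted(partition.items())}

def run_job(splits, num_reducers=2):
    intermediate = [pair for split in splits for pair in map_task(split)]
    return [reduce_task(p) for p in shuffle(intermediate, num_reducers)]

# Two splits stand in for the data held on Node 1 and Node 2.
splits = [["big data hadoop"], ["hadoop map reduce", "map"]]
outputs = run_job(splits)
```

Each element of `outputs` plays the role of one reducer's stored output; no key appears in more than one of them.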

Annie’s Question

Can you use the Map-Reduce algorithm to perform a relational join on two large tables sharing a key? Assume that the two tables are formatted as comma-separated files in HDFS:

a. Yes

b. No

Annie’s Answer

Ans. Yes. Map-Reduce can perform a join using algorithms such as Map-side, Reduce-side, and In-Memory joins.
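
A reduce-side join can be sketched as follows. This is a plain-Python simulation under stated assumptions: the two comma-separated tables, their column layouts, and the function names are hypothetical, and the grouping dictionary stands in for the shuffle.

```python
from collections import defaultdict

# Hypothetical tables: customers are "id,name"; orders are "order_id,customer_id,amount".
customers_csv = ["1,Asha", "2,Ravi", "3,Meera"]
orders_csv = ["101,1,250", "102,3,90", "103,1,40"]

def map_customers(line):
    cust_id, name = line.split(",")
    return cust_id, ("C", name)                      # tag the record with its source table

def map_orders(line):
    order_id, cust_id, amount = line.split(",")
    return cust_id, ("O", (order_id, int(amount)))

def reduce_join(key, tagged_values):
    """All records sharing a join key arrive together; pair them up."""
    names = [v for tag, v in tagged_values if tag == "C"]
    orders = [v for tag, v in tagged_values if tag == "O"]
    return [(key, name, order_id, amount)
            for name in names for order_id, amount in orders]

def reduce_side_join(customers, orders):
    grouped = defaultdict(list)                      # stands in for the shuffle
    mapped = [map_customers(l) for l in customers] + [map_orders(l) for l in orders]
    for key, value in mapped:
        grouped[key].append(value)
    joined = []
    for key in sorted(grouped):
        joined.extend(reduce_join(key, grouped[key]))
    return joined

joined_rows = reduce_side_join(customers_csv, orders_csv)
```

Customer 2 has no orders, so this inner join emits nothing for that key.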

Annie’s Question

What types of algorithms are difficult to express in Map-Reduce?

a. Algorithms that require global, shared state

b. Algorithms that require application of the same mathematical function to large numbers of individual binary records

Annie’s Answer

Ans. The correct option is ‘a’. The Map-Reduce paradigm works in a massively parallel system. Map and Reduce tasks execute in isolation on a chunk of data (input splits), so algorithms that require a global shared state to be maintained aren’t suitable for the Map-Reduce framework.

Annie’s Question

At what stage of Map-Reduce task execution does a reducer's reduce function start?

a. When at least one mapper is ready with its output

b. map() and reduce() start simultaneously

c. After processing for all the map tasks is completed

Annie’s Answer

Ans. The correct option is ‘c’. Reduce tasks work on the output of Map tasks, and the output from all the mappers is required before the reduce function can start. Reducers may begin copying map output earlier, but reduce() itself runs only after every map task has completed.

Annie’s Question

You want to reduce the traffic between mapper and reducer. Your class should implement which interface?

a. Partitioner

b. Combiner

c. Writable

d. WritableComparable

Annie’s Answer

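Ans. The correct option is ‘b’. Combiners are basically mini-reducers that run on each mapper's output. They lessen the workload that is passed on to the reducers. In Hadoop, a combiner is written as a Reducer class and registered on the job with setCombinerClass().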
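
The effect of a combiner on mapper-to-reducer traffic can be illustrated with a small simulation. This is not Hadoop code: `map_words`, `combine`, and `reduce_counts` are hypothetical names, and the record counts below simply count the pairs each simulated mapper would send.

```python
from collections import Counter

def map_words(split):
    """Emit (word, 1) for every word in a split."""
    return [(word, 1) for line in split for word in line.split()]

def combine(pairs):
    """Pre-aggregate one mapper's output locally, like a mini-reducer."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())

def reduce_counts(all_pairs):
    """Final aggregation across the output of all mappers."""
    totals = Counter()
    for word, count in all_pairs:
        totals[word] += count
    return dict(totals)

splits = [["to be or not to be"], ["to do or not to do"]]
map_outputs = [map_words(s) for s in splits]
combined_outputs = [combine(p) for p in map_outputs]

# Number of records that would cross the network in each case.
records_without_combiner = sum(len(p) for p in map_outputs)
records_with_combiner = sum(len(p) for p in combined_outputs)

result_plain = reduce_counts(pair for p in map_outputs for pair in p)
result_combined = reduce_counts(pair for p in combined_outputs for pair in p)
```

The final counts are identical either way because summation is associative and commutative; a combiner is only safe for reduce logic with that property.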

Map and Reduce Side Joins

Map-side join: the large table is fragmented into splits (Split 1 to Split 4), one per map task, and the small table is duplicated to every map task.

Blog: http://www.edureka.in/blog/map-side-vs-join/

Map and Reduce Side Joins

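Map-side join using the Distributed Cache:

a. A MapReduce local task reads the small table data and builds hash table files, which are compressed, archived, and placed in the Distributed Cache

b. Each mapper reads records from the big table data, joins them against the hash table, and writes the output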
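
A map-side join against an in-memory hash table can be sketched like this. It is a simulation only: the department and employee tables, their column layouts, and the function names are assumptions, and the Python dict stands in for the hash table a mapper would load from its cached copy of the small table.

```python
def build_hash_table(small_table_lines):
    """Load the small table into a dict, as each mapper would from its cached copy."""
    table = {}
    for line in small_table_lines:
        dept_id, dept_name = line.split(",")
        table[dept_id] = dept_name
    return table

def map_join(split, hash_table):
    """Join each big-table record against the hash table; no reduce phase is needed."""
    for line in split:
        emp_name, dept_id = line.split(",")
        if dept_id in hash_table:            # inner join: unmatched records are dropped
            yield emp_name, hash_table[dept_id]

# Hypothetical data: a small "dept_id,dept_name" table and a big "employee,dept_id" table.
small_table = ["10,Sales", "20,Engineering"]
big_table_splits = [["Anil,10", "Bina,20"], ["Chen,30", "Dev,20"]]

hash_table = build_hash_table(small_table)
joined = [row for split in big_table_splits for row in map_join(split, hash_table)]
```

The approach depends on the small table fitting in each mapper's memory; when neither table does, a reduce-side join is the usual fallback.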

Demo: Joins in Map-Reduce

Input Format

Input file → Input Split → Record Reader → Mapper → (Intermediates)

Each input file is divided into input splits. The Input Format supplies one Record Reader per input split, and each Record Reader feeds records to one Mapper, which emits intermediate data.
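
How byte-range splits and line-oriented record readers fit together can be sketched as below. This is a simplified simulation, assuming newline-delimited records and the convention that a reader starting mid-file skips its first line while the previous reader finishes the line that crosses the boundary; `compute_splits` and `read_records` are hypothetical names.

```python
def compute_splits(total_bytes, split_size):
    """Return (start, length) byte ranges covering the whole file."""
    return [(start, min(split_size, total_bytes - start))
            for start in range(0, total_bytes, split_size)]

def read_records(data, start, length):
    """Yield (byte_offset, line) records belonging to one split."""
    end = start + length
    pos = start
    if start != 0:
        # The first line here belongs to the previous split's reader, so skip it.
        newline = data.find(b"\n", start)
        pos = len(data) if newline == -1 else newline + 1
    # Read every line that starts at or before the split end, even if it runs past it.
    while pos <= end and pos < len(data):
        newline = data.find(b"\n", pos)
        line_end = len(data) if newline == -1 else newline
        yield pos, data[pos:line_end].decode()
        pos = line_end + 1

data = b"alpha\nbeta\ngamma\ndelta\n"
splits = compute_splits(len(data), 8)
records_per_split = [[line for _, line in read_records(data, s, n)] for s, n in splits]
```

Split boundaries fall in the middle of lines here, yet every record is delivered to exactly one simulated mapper.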

Input Format – Class Hierarchy

• InputFormat<K,V>

    • FileInputFormat<K,V>

        • CombineFileInputFormat<K,V>

        • TextInputFormat

        • KeyValueTextInputFormat

        • NLineInputFormat

        • SequenceFileInputFormat<K,V>

            • SequenceFileAsBinaryInputFormat

            • SequenceFileAsTextInputFormat

            • SequenceFileInputFilter<K,V>

    • ComposableInputFormat<K,V> <<interface>>

        • CompositeInputFormat<K,V>

    • DBInputFormat<T>

Output Format

Reducer → RecordWriter → Output file

Each Reducer writes through the Output Format, which supplies one RecordWriter per Reducer; each RecordWriter produces one output file.

Output Format – Class Hierarchy

• OutputFormat<K,V>

    • FileOutputFormat<K,V>

        • TextOutputFormat<K,V>

        • SequenceFileOutputFormat<K,V>

            • SequenceFileAsBinaryOutputFormat

    • NullOutputFormat<K,V>

    • DBOutputFormat<K,V>

    • FilterOutputFormat<K,V>

        • LazyOutputFormat<K,V>

Demo: Custom Input Format

MRUnit Testing Framework

▪ Provides 4 drivers for separately testing MapReduce code

    • MapDriver

    • ReduceDriver

    • MapReduceDriver

    • PipelineMapReduceDriver

▪ Helps in filling the gap between MapReduce programs and JUnit*

▪ Better control on log messages with JUnit integration

*JUnit is a simple framework to write repeatable tests.
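
The idea of testing the map and reduce steps separately can be shown with Python's standard `unittest` module. This is only an analogue of the approach, not the MRUnit API: the word-count functions and the test class are hypothetical, and the three tests loosely mirror what a map driver, a reduce driver, and a combined map-reduce driver would check.

```python
import unittest

def word_count_map(offset, line):
    """Map step: emit (word, 1) for each word in the line."""
    return [(word, 1) for word in line.split()]

def word_count_reduce(word, counts):
    """Reduce step: sum the counts collected for one word."""
    return [(word, sum(counts))]

class WordCountTest(unittest.TestCase):
    def test_mapper_alone(self):
        self.assertEqual(word_count_map(0, "big data big"),
                         [("big", 1), ("data", 1), ("big", 1)])

    def test_reducer_alone(self):
        self.assertEqual(word_count_reduce("big", [1, 1, 1]), [("big", 3)])

    def test_map_then_reduce(self):
        lines = ["big data", "big hadoop"]
        grouped = {}
        for offset, line in enumerate(lines):
            for word, one in word_count_map(offset, line):
                grouped.setdefault(word, []).append(one)
        output = [pair for word in sorted(grouped)
                  for pair in word_count_reduce(word, grouped[word])]
        self.assertEqual(output, [("big", 2), ("data", 1), ("hadoop", 1)])

def run_suite():
    """Run the tests programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WordCountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping the map and reduce logic in small functions with plain inputs and outputs is what makes this kind of isolated test possible without a running cluster.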

Demo: MRUnit Testing Framework

Counters

▪ Counters are lightweight objects in Hadoop that allow you to keep track of system progress in both the map and reduce stages of processing.

▪ Counters are used to gather information about the data we are analysing, like how many types of records were processed, how many invalid records were found while running the job, etc.
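
Counting valid and invalid records while a job runs can be simulated as follows. This is a sketch, not the Hadoop Counters API: the counter names `VALID_RECORDS` and `INVALID_RECORDS`, the record format, and `parse_records` are assumptions, and summing the per-task `Counter` objects stands in for the framework aggregating counters across tasks.

```python
from collections import Counter

def parse_records(split, counters):
    """Yield (key, number) for well-formed lines and count the rest as invalid."""
    for line in split:
        fields = line.split(",")
        if len(fields) != 2 or not fields[1].isdigit():
            counters["INVALID_RECORDS"] += 1
            continue
        counters["VALID_RECORDS"] += 1
        yield fields[0], int(fields[1])

splits = [["a,1", "b,x", "c,3"], ["d,4", "broken", "e,5"]]

per_task_counters = []
parsed = []
for split in splits:
    task_counters = Counter()            # each simulated map task keeps its own counters
    parsed.extend(parse_records(split, task_counters))
    per_task_counters.append(task_counters)

# Job-level totals are the sum of the per-task counters.
job_counters = sum(per_task_counters, Counter())
```

Bad records are skipped rather than failing the job, and the totals show afterwards how much input was discarded.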

Demo: Counters

Distributed Cache

Distributed Cache is a facility provided by the Map-Reduce framework to cache files (text, archives, jars, etc.) needed by applications.

Files are copied only once per job and should not be modified by the application or externally while the job is executing.

Distributed Cache can be used to distribute simple, read-only data/text files and/or more complex types such as archives, jars, etc. via the JobConf.

Figure: HDFS – Hadoop Distributed Cache, serving four MapReduce tasks.
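
The "copied only once per job" behaviour can be illustrated with a toy local cache. This is a simulation under stated assumptions, not the Hadoop DistributedCache API: `LocalCache`, `run_tasks`, the `stopwords.txt` file, and the temporary directories standing in for HDFS and a worker node are all hypothetical.

```python
import shutil
import tempfile
from pathlib import Path

class LocalCache:
    """Toy per-node cache: copies a shared file into the node directory at most once."""
    def __init__(self, node_dir):
        self.node_dir = Path(node_dir)
        self.copies_made = 0

    def localize(self, shared_file):
        local = self.node_dir / Path(shared_file).name
        if not local.exists():
            shutil.copy(shared_file, local)
            self.copies_made += 1
        return local

def run_tasks(shared_file, node_dir, num_tasks):
    """Run several simulated tasks on one node; each reads the local, read-only copy."""
    cache = LocalCache(node_dir)
    results = []
    for _ in range(num_tasks):
        local_path = cache.localize(shared_file)
        stop_words = set(local_path.read_text().split())
        results.append(len(stop_words))
    return cache.copies_made, results

with tempfile.TemporaryDirectory() as tmp:
    shared = Path(tmp) / "stopwords.txt"      # stands in for a file stored in HDFS
    shared.write_text("a an the\n")
    node_dir = Path(tmp) / "node1"            # stands in for a worker node's local disk
    node_dir.mkdir()
    copies_made, task_results = run_tasks(shared, node_dir, num_tasks=3)
```

Three tasks read the file but only one copy is made, which is why the cached file must not change while the job is executing.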

Demo: Distributed Cache

Sequence File

▪ Hadoop is not restricted to processing plain text data. For custom binary data types, one can use SequenceFile

▪ SequenceFile is a flat file consisting of binary key/value pairs

▪ Used in MapReduce as input/output formats

▪ Output of maps is stored using SequenceFile

▪ Provides

    ▪ A Writer

    ▪ A Reader

    ▪ A Sorter
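
The writer, reader, and sorter roles can be sketched with a toy binary key/value file. This is not the real SequenceFile format or API: the layout below (a 4-byte record length, a 4-byte key length, then key and value bytes) omits the file header and sync markers, and all function names are hypothetical.

```python
import io
import struct

def write_records(stream, records):
    """Writer: append each (key, value) pair as length-prefixed binary."""
    for key, value in records:
        stream.write(struct.pack(">II", len(key) + len(value), len(key)))
        stream.write(key)
        stream.write(value)

def read_records(stream):
    """Reader: yield (key, value) pairs until the stream is exhausted."""
    while True:
        header = stream.read(8)
        if len(header) < 8:
            return
        record_len, key_len = struct.unpack(">II", header)
        key = stream.read(key_len)
        value = stream.read(record_len - key_len)
        yield key, value

def sort_records(records):
    """Sorter: order records by key bytes."""
    return sorted(records, key=lambda kv: kv[0])

records = [(b"k2", b"\x00\x01binary"), (b"k1", b"value one")]
buffer = io.BytesIO()
write_records(buffer, records)
buffer.seek(0)
restored = list(read_records(buffer))
sorted_records = sort_records(restored)
```

Because lengths are stored explicitly, values may contain arbitrary bytes, including newlines and zero bytes, which a plain text format could not hold safely.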

Sequence File

Three different SequenceFile formats:

▪ Uncompressed key/value records

▪ Record compressed key/value records

    • Only ‘values’ are compressed here

▪ Block compressed key/value records

    • Both keys and values are collected in ‘blocks’ separately and compressed

▪ The other objective of using SequenceFile is to 'pack' many small files into a single large SequenceFile for the MapReduce computation, since the design of Hadoop prefers large files (remember that the Hadoop default block size for data is 64 MB in Hadoop 1.x and 128 MB in Hadoop 2.x and later).
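
The difference between record compression and block compression can be made concrete with a size comparison. This is an illustrative sketch only: it uses `zlib` from the Python standard library rather than Hadoop's codecs, the sample records and the block size of 50 records are made up, and the sizes ignore headers, lengths, and sync markers.

```python
import zlib

# Hypothetical data: 200 records with short keys and similar values.
records = [(f"user{i:04d}".encode(), b"status=active;country=IN;plan=basic")
           for i in range(200)]

def uncompressed_size(records):
    return sum(len(k) + len(v) for k, v in records)

def record_compressed_size(records):
    """Record compression: keys are stored as-is, each value is compressed on its own."""
    return sum(len(k) + len(zlib.compress(v)) for k, v in records)

def block_compressed_size(records, block_records=50):
    """Block compression: keys and values of a block are gathered separately, then compressed."""
    total = 0
    for i in range(0, len(records), block_records):
        block = records[i:i + block_records]
        keys = b"".join(k for k, _ in block)
        values = b"".join(v for _, v in block)
        total += len(zlib.compress(keys)) + len(zlib.compress(values))
    return total

sizes = {
    "uncompressed": uncompressed_size(records),
    "record": record_compressed_size(records),
    "block": block_compressed_size(records),
}
```

Block compression can exploit repetition across records, whereas compressing each small value separately leaves little for the compressor to work with and may not shrink it at all.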

Sequence File – Record Compression

File layout: Header | Record | Record | Sync | Record | Record | Record | Sync | Record

Record with no compression: Record length (4 bytes) | Key length (4 bytes) | Key | Value

Record with record compression: Record length (4 bytes) | Key length (4 bytes) | Key | Compressed value

Sequence File – Block Compression

File layout: Header | Sync | Block | Sync | Block | Sync | Block | Sync | Block

Block with block compression: Number of records (1-5 bytes) | Compressed key lengths | Compressed keys | Compressed value lengths | Compressed values

Demo: SequenceFile

Assignment

Practice “Advance MR Codes” present in the LMS in the Cloud Lab

Pre-work

Review the following PIG blogs:

http://www.edureka.in/blog/pig-programming-create-your-first-apache-pig-script/

Agenda for Next Class

• PIG and its need

• Difference between PIG and MapReduce

• PIG features and programming structure

• PIG running modes

• PIG components and data model

• Basic operations in PIG

• UDF in PIG
