Site Audit Reveals 14 Duplicate Title/Description Issues

Uploaded by Atiqul Islam

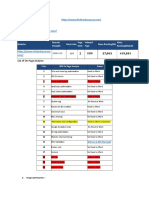

Site Audit: Issues

our plannet

Generated on January 1, 2022

800 BOYLSTON STREET, SUITE 2475, BOSTON, MA 02199

WWW.SEMRUSH.COM


Subdomain: ourplanetinfo.com

Last Update: January 1, 2022

Crawled Pages: 17

ourplanetinfo.com

Errors: 44

14 issues with duplicate title tags

About this issue: Our crawler reports pages that have duplicate title tags only if they are exact matches. Duplicate <title> tags make it difficult for search engines to determine which of a website’s pages is relevant for a specific search query, and which one should be prioritized in search results. Pages with duplicate titles have a lower chance of ranking well and are at risk of being banned. Moreover, identical <title> tags confuse users as to which webpage they should follow.

How to fix: Provide a unique and concise title for each of your pages that contains your most important keywords. For information on how to create effective titles, please see this Google article: https://support.google.com/webmasters/answer/35624.
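The exact-match check described above can be reproduced locally. The following is a minimal sketch, not SEMrush's implementation: it assumes the pages are already available as HTML strings, and the names `TitleParser`, `find_duplicate_titles`, and the sample `pages` mapping are hypothetical. Stripping surrounding whitespace before comparing is also an assumption.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicate_titles(pages):
    """Map each exactly repeated title to the list of URLs that share it."""
    by_title = defaultdict(list)
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        by_title[parser.title.strip()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical sample data: two pages share the title "Home".
pages = {
    "/": "<html><head><title>Home</title></head></html>",
    "/about": "<html><head><title>Home</title></head></html>",
    "/contact": "<html><head><title>Contact us</title></head></html>",
}
duplicates = find_duplicate_titles(pages)
print(duplicates)
```

Each key in the result is a title that needs to be rewritten so that every listed URL ends up with its own wording.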

14 pages have duplicate content issues

About this issue: Webpages are considered duplicates if their content is 85% identical. Having duplicate content may significantly affect your SEO performance. First of all, Google will typically show only one duplicate page, filtering other instances out of its index and search results, and this page may not be the one you want to rank. In some cases, search engines may consider duplicate pages as an attempt to manipulate search engine rankings and, as a result, your website may be downgraded or even banned from search results. Moreover, duplicate pages may dilute your link profile.

How to fix: Here are a few ways to fix duplicate content issues:

1. Add a rel="canonical" link to one of your duplicate pages to inform search engines which page to show in search results.
2. Use a 301 redirect from a duplicate page to the original one.
3. Use a rel="next" and a rel="prev" link attribute to fix pagination duplicates.
4. Instruct GoogleBot to handle URL parameters differently using Google Search Console.
5. Provide some unique content on the webpage.

For more information, please read these articles: https://support.google.com/webmasters/answer/66359?hl=en and https://support.google.com/webmasters/answer/139066?hl=en.
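To get a feel for the 85% threshold, two page texts can be compared with Python's standard `difflib` module. This is only an illustrative sketch: the report does not say how the crawler measures similarity, so the word-level `SequenceMatcher` ratio used here is an assumption, and the function names and sample texts are hypothetical.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.85  # the 85% figure quoted in the report


def similarity(text_a, text_b):
    """Return a similarity ratio between 0 and 1, comparing word by word."""
    return SequenceMatcher(None, text_a.split(), text_b.split()).ratio()


def is_duplicate(text_a, text_b, threshold=DUPLICATE_THRESHOLD):
    """Treat two texts as duplicates when their ratio reaches the threshold."""
    return similarity(text_a, text_b) >= threshold


# Hypothetical page texts: page_a and page_b differ by a single word.
page_a = "our planet is the third planet from the sun and the only one known to support life"
page_b = "our planet is the third planet from the sun and the only one known to harbour life"
page_c = "contact us by email or phone for more information about this site"

print(is_duplicate(page_a, page_b))
print(is_duplicate(page_a, page_c))
```

Pairs flagged this way are candidates for one of the fixes listed above, such as a rel="canonical" link or a 301 redirect.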

Generated on January 1, 2022. The report data is taken from SEMrush.com.


14 pages have duplicate meta descriptions

About this issue: Our crawler reports pages that have duplicate meta descriptions only if they are exact matches. A <meta name="description"> tag is a short summary of a webpage's content that helps search engines understand what the page is about and can be shown to users in search results. Duplicate meta descriptions on different pages mean a lost opportunity to use more relevant keywords. Also, duplicate meta descriptions make it difficult for search engines and users to differentiate between different webpages. It is better to have no meta description at all than to have a duplicate one.

How to fix: Provide a unique, relevant meta description for each of your webpages. For information on how to create effective meta descriptions, please see this Google article: https://support.google.com/webmasters/answer/35624.

1 page returned a 4XX status code

About this issue: A 4xx error means that a webpage cannot be accessed. This is usually the result of broken links. These errors prevent users and search engine robots from accessing your webpages, and can negatively affect both user experience and search engine crawlability. This will in turn lead to a drop in traffic driven to your website. Please be aware that our crawler may detect a working link as broken if your website blocks our crawler from accessing it. This usually happens due to the following reasons:

1. DDoS protection system.
2. Overloaded or misconfigured server.

How to fix: If a webpage returns an error, remove all links leading to the error page or replace them with links to another resource. To identify all pages on your website that contain links to a 4xx page, click "View broken links" next to the error page. If the links reported as 4xx do work when accessed with a browser, you can try either of the following:

1. Contact your web hosting support team.
2. Instruct search engine robots not to crawl your website too frequently by specifying the "crawl-delay" directive in your robots.txt.
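As an illustration of the second option, the sketch below parses a robots.txt body containing a Crawl-delay directive with Python's standard `urllib.robotparser`. The 10-second value and the `ExampleBot` user agent are hypothetical, and support for this directive varies between crawlers, so it should be treated as a hint rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content asking every robot to wait 10 seconds
# between requests.
robots_txt = """\
User-agent: *
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# crawl_delay() returns the delay that applies to the given user agent,
# falling back to the "*" entry when no specific entry exists.
delay = parser.crawl_delay("ExampleBot")
print(delay)
```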

No redirect or canonical to HTTPS homepage from HTTP version: 1

About this issue: If you're running both HTTP and HTTPS versions of your homepage, it is very important to make sure that their coexistence doesn't impede your SEO. Search engines are not able to figure out which page to index and which one to prioritize in search results. As a result, you may experience a lot of problems, including pages competing with each other, traffic loss and poor placement in search results. To avoid these issues, you must instruct search engines to only index the HTTPS version.

How to fix: Do either of the following:

1. Redirect your HTTP page to the HTTPS version via a 301 redirect.
2. Mark up your HTTPS version as the preferred one by adding a rel="canonical" to your HTTP pages.
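The redirect in option 1 is normally configured on the web server, but the rule itself is simple. Here is a minimal sketch of that rule, assuming a hypothetical helper named `https_redirect` that a request handler could call; it is not tied to any particular server or framework.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url):
    """Return (status, location) for an HTTP URL, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Keep host, path, query and fragment; only the scheme changes.
    return 301, urlunsplit(("https",) + tuple(parts[1:]))


print(https_redirect("http://ourplanetinfo.com/"))
print(https_redirect("https://ourplanetinfo.com/"))
```

A 301 status tells search engines the move is permanent, which is why the report recommends it over a temporary redirect.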

0 pages returned 5XX status code

0 pages don't have title tags

0 internal links are broken

0 pages couldn't be crawled


0 pages couldn't be crawled (DNS resolution issues)

0 pages couldn't be crawled (incorrect URL formats)

0 internal images are broken

Robots.txt file has format errors: 0

0 sitemap.xml files have format errors

0 incorrect pages found in sitemap.xml

0 pages have a WWW resolve issue

This page has no viewport tag: 0

0 pages have too large HTML size

0 AMP pages have no canonical tag

0 issues with hreflang values

0 hreflang conflicts within page source code

0 issues with incorrect hreflang links

0 non-secure pages

0 issues with expiring or expired certificate

0 issues with old security protocol


0 issues with incorrect certificate name

0 issues with mixed content

0 redirect chains and loops

0 pages with a broken canonical link

0 pages have multiple canonical URLs

0 pages have a meta refresh tag

0 issues with broken internal JavaScript and CSS files

0 subdomains don’t support secure encryption algorithms

0 sitemap.xml files are too large

0 links couldn't be crawled (incorrect URL formats)

0 structured data items are invalid

0 pages are missing the viewport width value

0 pages have slow load speed


ourplanetinfo.com

Warnings: 364

112 issues with uncompressed JavaScript and CSS files

About this issue: This issue is triggered if compression is not enabled in the HTTP response. Compressing JavaScript and CSS files significantly reduces their size as well as the overall size of your webpage, thus improving your page load time. Uncompressed JavaScript and CSS files make your page load slower, which negatively affects user experience and may worsen your search engine rankings. If your webpage uses uncompressed CSS and JS files that are hosted on an external site, you should make sure they do not affect your page's load time. For more information, please see this Google article: https://developers.google.com/web/fundamentals/performance/optimizing-content-efficiency.

How to fix: Enable compression for your JavaScript and CSS files on your server. If your webpage uses uncompressed CSS and JS files that are hosted on an external site, contact the website owner and ask them to enable compression on their server. If this issue doesn't affect your page load time, simply ignore it.
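To see why compression matters for text assets, the short sketch below gzips a repetitive stylesheet with Python's standard `gzip` module and compares sizes. The sample CSS is made up for illustration. On a real site the web server applies the compression and announces it with a `Content-Encoding` response header, so this only demonstrates the size effect, not the server configuration.

```python
import gzip

# Hypothetical stylesheet: the same rule repeated, as is common in real CSS.
css = ("body { margin: 0; padding: 0; font-family: sans-serif; }\n" * 200).encode("utf-8")

compressed = gzip.compress(css)
saving = 1 - len(compressed) / len(css)

print(f"{len(css)} bytes -> {len(compressed)} bytes ({saving:.0%} smaller)")
```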

112 issues with uncached JavaScript and CSS files

About this issue: This issue is triggered if browser caching is not specified in the response header. Enabling browser caching for JavaScript and CSS files allows browsers to store and reuse these resources without having to download them again when requesting your page. That way the browser will download less data, which will decrease your page load time. And the less time it takes to load your page, the happier your visitors are. For more information, please see this Google article: https://developers.google.com/web/fundamentals/performance/optimizing-content-efficiency.

How to fix: If JavaScript and CSS files are hosted on your website, enable browser caching for them. If JavaScript and CSS files are hosted on a website that you don't own, contact the website owner and ask them to enable browser caching for them. If this issue doesn't affect your page load time, simply ignore it.
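A quick way to audit this yourself is to inspect the response headers of each asset. The sketch below is one possible check, under the assumption that "browser caching is specified" means a positive `Cache-Control: max-age` or an `Expires` header; the report does not spell out the crawler's exact rule, and `has_browser_caching` is a hypothetical helper name.

```python
def has_browser_caching(headers):
    """Return True if the response headers allow a browser to reuse the resource."""
    normalized = {name.lower(): value for name, value in headers.items()}
    directives = [d.strip().lower() for d in normalized.get("cache-control", "").split(",")]

    # no-store forbids keeping any copy of the response.
    if "no-store" in directives:
        return False

    # A positive max-age gives the browser an explicit freshness lifetime.
    for directive in directives:
        name, _, value = directive.partition("=")
        if name == "max-age" and value.isdigit() and int(value) > 0:
            return True

    # Otherwise fall back to the older Expires header.
    return "expires" in normalized


print(has_browser_caching({"Cache-Control": "public, max-age=31536000"}))
print(has_browser_caching({"Content-Type": "text/css"}))
```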

56 issues with unminified JavaScript and CSS files

About this issue: Minification is the process of removing unnecessary lines, white space and comments from the source code. Minifying JavaScript and CSS files makes their size smaller, thereby decreasing your page load time, providing a better user experience and improving your search engine rankings. For more information, please see this Google article: https://developers.google.com/web/fundamentals/performance/optimizing-content-efficiency.

How to fix: Minify your JavaScript and CSS files. If your webpage uses CSS and JS files that are hosted on an external site, contact the website owner and ask them to minify their files. If this issue doesn't affect your page load time, simply ignore it.
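The idea behind minification can be shown with a deliberately naive sketch that strips comments and redundant whitespace from a stylesheet. The function `minify_css` is hypothetical and regex-based, so it can mangle strings or selectors that rely on whitespace; a dedicated minifier is the safer choice for real files.

```python
import re


def minify_css(css):
    """Naively strip comments and redundant whitespace from a stylesheet."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)          # trim around punctuation
    return css.strip()


# Hypothetical input stylesheet.
source = """
/* page layout */
body {
    margin: 0;
    color: #333;
}
"""

minified = minify_css(source)
print(minified)
print(len(source), "->", len(minified), "characters")
```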

14 pages don't have enough text within the title tags

About this issue: Generally, using short titles on webpages is a recommended practice. However, keep in mind that titles containing 10 characters or fewer do not provide enough information about what your webpage is about and limit your page's potential to show up in search results for different keywords. For more information, please see this Google article: https://support.google.com/webmasters/answer/35624.

How to fix: Add more descriptive text inside your page's <title> tag.


14 pages don't have an h1 heading

About this issue: While less important than <title> tags, h1 headings still help define your page’s topic for search engines and users. If an <h1> tag is empty or missing, search engines may place your page lower than they would otherwise. Besides, a lack of an <h1> tag breaks your page’s heading hierarchy, which is not SEO friendly.

How to fix: Provide a concise, relevant h1 heading for each of your pages.

14 images don't have alt attributes

About this issue: Alt attributes within <img> tags are used by search engines to understand the contents of your images. If you neglect alt attributes, you may miss the chance to get a better placement in search results because alt attributes allow you to rank in image search results. Not using alt attributes also negatively affects the experience of visually impaired users and those who have disabled images in their browsers. For more information, please see these articles: Using ALT attributes smartly: https://webmasters.googleblog.com/2007/12/using-alt-attributes-smartly.html and Google Image Publishing Guidelines: https://support.google.com/webmasters/answer/114016?hl=en.

How to fix: Specify a relevant alt attribute inside an <img> tag for each image on your website, e.g., "<img src="mylogo.png" alt="This is my company logo">".
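Images without an alt attribute can be listed with Python's standard `html.parser`. This is a minimal sketch with a hypothetical class name, `MissingAltFinder`; it flags only images where the attribute is absent, because an empty `alt=""` is a legitimate choice for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> tag that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src"))


# Hypothetical markup: the second image lacks an alt attribute.
html = '<img src="mylogo.png" alt="This is my company logo"><img src="banner.png">'

finder = MissingAltFinder()
finder.feed(html)
print(finder.missing)
```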

14 pages have low text-HTML ratio

About this issue: Your text to HTML ratio indicates the amount of actual text you have on your webpage compared to the amount of code. This issue is triggered when your text to HTML ratio is 10% or less. Search engines have begun focusing on pages that contain more content. That's why a higher text to HTML ratio means your page has a better chance of getting a good position in search results. Less code increases your page's load speed and also helps your rankings. It also helps search engine robots crawl your website faster.

How to fix: Split your webpage's text content and code into separate files and compare their size. If the size of your code file exceeds the size of the text file, review your page's HTML code and consider optimizing its structure and removing embedded scripts and styles.
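The comparison described above can be approximated in a few lines. The sketch below measures visible text length against total HTML length in characters, ignoring `<script>` and `<style>` contents. How the crawler actually measures the ratio is not stated in the report, so this character-based definition, the class `TextExtractor`, and the function `text_to_html_ratio` are assumptions.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html):
    """Return visible-text length divided by total HTML length (0.0 to 1.0)."""
    if not html:
        return 0.0
    extractor = TextExtractor()
    extractor.feed(html)
    text = " ".join(" ".join(extractor.chunks).split())  # normalize whitespace
    return len(text) / len(html)


# Hypothetical pages: one dominated by an inline script, one mostly text.
script_heavy = "<script>" + "var x = 1;" * 100 + "</script><p>Hi</p>"
text_heavy = "<p>" + "word " * 50 + "</p>"

for name, page in (("script_heavy", script_heavy), ("text_heavy", text_heavy)):
    ratio = text_to_html_ratio(page)
    print(name, f"{ratio:.1%}", "low" if ratio <= 0.10 else "ok")
```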

14 pages have a low word count

About this issue: This issue is triggered if the number of words on your webpage is less than 200. The amount of text placed on your webpage is a quality signal to search engines. Search engines prefer to provide as much information to users as possible, so pages with longer content tend to be placed higher in search results, as opposed to those with lower word counts. For more information, please view this video: https://www.youtube.com/watch?v=w3-obcXkyA4.

How to fix: Improve your on-page content and be sure to include more than 200 meaningful words.

14 uncompressed pages

About this issue: This issue is triggered if the Content-Encoding entity is not present in the response header. Page compression is essential to the process of optimizing your website. Using uncompressed pages leads to a slower page load time, resulting in a poor user experience and a lower search engine ranking.

How to fix: Enable compression on your webpages for faster load time.

0 external links are broken

0 external images are broken


0 links on HTTPS pages lead to HTTP pages

0 pages have too much text within the title tags

0 pages have duplicate H1 and title tags

0 pages don't have meta descriptions

0 pages have too many on-page links

0 URLs with a temporary redirect

0 pages have too many parameters in their URLs

0 pages have no hreflang and lang attributes

0 pages don't have character encoding declared

0 pages don't have doctype declared

0 pages have incompatible plugin content

0 pages contain frames

0 pages have underscores in the URL

0 outgoing internal links contain nofollow attribute

Sitemap.xml not indicated in robots.txt: 0

Sitemap.xml not found: 0


Homepage does not use HTTPS encryption: 0

0 subdomains don't support SNI

0 HTTP URLs in sitemap.xml for HTTPS site

0 issues with blocked internal resources in robots.txt

0 pages have a JavaScript and CSS total size that is too large

0 pages use too many JavaScript and CSS files

0 link URLs are too long


ourplanetinfo.com

Notices: 3

2 subdomains don't support HSTS

Robots.txt not found: 1

About this issue: A robots.txt file has an important impact on your website's overall SEO performance. This file helps search engines determine what content on your website they should crawl. Utilizing a robots.txt file can cut the time search engine robots spend crawling and indexing your website. For more information, please see this Google article: https://support.google.com/webmasters/answer/6062608.

How to fix: If you don't want specific content on your website to be crawled, creating a robots.txt file is recommended. To check your robots.txt file, use Google's robots.txt Tester in Google Search Console: https://www.google.com/webmasters/tools/robots-testing-tool.
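Before uploading a new robots.txt, its rules can also be checked offline with Python's standard `urllib.robotparser`. The sketch below uses a hypothetical rule set that blocks a made-up `/admin/` directory, and `ExampleBot` is a placeholder user agent. It shows how a parser interprets the file, not what this site's robots.txt should contain.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: allow everything except a made-up /admin/ area.
robots_txt = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

home_allowed = parser.can_fetch("ExampleBot", "https://ourplanetinfo.com/")
admin_allowed = parser.can_fetch("ExampleBot", "https://ourplanetinfo.com/admin/login")

print("homepage crawlable:", home_allowed)
print("admin area crawlable:", admin_allowed)
```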

0 pages have more than one H1 tag

0 pages are blocked from crawling

0 page URLs are longer than 200 characters

0 outgoing external links contain nofollow attributes

0 pages have hreflang language mismatch issues

0 orphaned pages in Google Analytics

0 orphaned pages in sitemaps

0 pages take more than 1 second to become interactive

0 pages blocked by X-Robots-Tag: noindex HTTP header

0 issues with blocked external resources in robots.txt


0 issues with broken external JavaScript and CSS files

0 pages need more than 3 clicks to be reached

0 pages have only one incoming internal link

0 URLs with a permanent redirect

0 resources are formatted as page link

0 links on this page have no anchor text

0 links on this page have non-descriptive anchor text

0 links to external pages or resources returned a 403 HTTP status code

Generated on January 1, 2022 The report data is taken from SEMrush.com 11

You might also like

- SEMrush-Site Audit Issues-Kjbkaraoke Com-12th Jul 2022Document11 pagesSEMrush-Site Audit Issues-Kjbkaraoke Com-12th Jul 2022Akmal Aghora DjambakNo ratings yet

- SemrushDocument13 pagesSemrushThalyta AlencarNo ratings yet

- Semrush-Site Audit Issues-Faevmoto Com-12º Feb. 2024Document12 pagesSemrush-Site Audit Issues-Faevmoto Com-12º Feb. 2024gopallalsharma95No ratings yet

- SEMrush-Site - Audit - Issues-Www - Smifs - Com-8th - Aug - 2022Document12 pagesSEMrush-Site - Audit - Issues-Www - Smifs - Com-8th - Aug - 2022SMIFS LIMITEDNo ratings yet

- Semrush-Site Audit Issues-Www Whitehatjr Com-5 Déc. 2022Document11 pagesSemrush-Site Audit Issues-Www Whitehatjr Com-5 Déc. 2022Raghav sowryaNo ratings yet

- Site Audit: Issues: Generated On November 1, 2021Document11 pagesSite Audit: Issues: Generated On November 1, 2021Ajiteru GbolahanNo ratings yet

- Site Audit Issues at facilio.comDocument13 pagesSite Audit Issues at facilio.comManaswini Rao KakiNo ratings yet

- Semrush-Site Audit Issues-Mobiledokkan-19th May 2023Document9 pagesSemrush-Site Audit Issues-Mobiledokkan-19th May 2023sohag gamingNo ratings yet

- Full Site Audit Report for WWW.BRESOS.COMDocument17 pagesFull Site Audit Report for WWW.BRESOS.COMTarteil TradingNo ratings yet

- SEMrush-Full Site Audit Report-WWW BRESOS COM-6th Dec 2021Document63 pagesSEMrush-Full Site Audit Report-WWW BRESOS COM-6th Dec 2021Tarteil TradingNo ratings yet

- Semrush-Site Audit Full Report-Edumasterprivat Com-28th Nov 2022Document111 pagesSemrush-Site Audit Full Report-Edumasterprivat Com-28th Nov 2022Jaka DarmawanNo ratings yet

- Semrush-Site Audit Issues-Silicon Valley 4 Kids-27th Nov 2023Document10 pagesSemrush-Site Audit Issues-Silicon Valley 4 Kids-27th Nov 2023Avinash NaikwadiNo ratings yet

- Site Audit ReportDocument49 pagesSite Audit ReportNeha GuptaNo ratings yet

- SEMrush-Site Audit: Full Report-Vogue-29th Nov 2019Document55 pagesSEMrush-Site Audit: Full Report-Vogue-29th Nov 2019ampani srikanthNo ratings yet

- SEMrush-Site Audit Issues-Thedailystar Net-6th Nov 2021Document10 pagesSEMrush-Site Audit Issues-Thedailystar Net-6th Nov 2021Julkar Nain ShakibNo ratings yet

- Semrush-Site Audit Issues-Www Conveyz Com Au-22!12!2023Document11 pagesSemrush-Site Audit Issues-Www Conveyz Com Au-22!12!2023conveyzseoNo ratings yet

- Website SEO AuditDocument54 pagesWebsite SEO AuditUmer AshfaqNo ratings yet

- Site Audit ReportDocument61 pagesSite Audit ReportNeha GuptaNo ratings yet

- SEMrush-Full Site Audit Report-Airport Vienna Transfer-17th Sep 2019-1672948779473Document32 pagesSEMrush-Full Site Audit Report-Airport Vienna Transfer-17th Sep 2019-1672948779473CUCULEAC STEFANNo ratings yet

- Semrush-Full Site Audit Report-Express Assignment-7th Feb 2024Document78 pagesSemrush-Full Site Audit Report-Express Assignment-7th Feb 2024postguest1012023No ratings yet

- Full Site Audit Report-Inter Pro Web HostDocument125 pagesFull Site Audit Report-Inter Pro Web Hostram pradeepNo ratings yet

- Semrush-Site Audit Issues-Morelloassociates Com-20th Mar 2024Document9 pagesSemrush-Site Audit Issues-Morelloassociates Com-20th Mar 2024rohitNo ratings yet

- Website SEO Adudit Report ThecopycreatorsDocument21 pagesWebsite SEO Adudit Report ThecopycreatorsNoahNo ratings yet

- Full Site Audit Report: Generated On January 17, 2022Document22 pagesFull Site Audit Report: Generated On January 17, 2022Tarteil TradingNo ratings yet

- Full Site Audit Report: Generated On January 24, 2022Document22 pagesFull Site Audit Report: Generated On January 24, 2022Tarteil TradingNo ratings yet

- Website Audit Report Www4overtimelawyercom 20151103Document30 pagesWebsite Audit Report Www4overtimelawyercom 20151103gmapsextractor48No ratings yet

- Site Audit: REPORT Reveals Major Crawlabilty Issue Blocking Google IndexingDocument6 pagesSite Audit: REPORT Reveals Major Crawlabilty Issue Blocking Google IndexingJesika ChandwadaNo ratings yet

- Site Audit (Details)Document14 pagesSite Audit (Details)Adam AchouihNo ratings yet

- Site Audit (Details)Document22 pagesSite Audit (Details)Adam AchouihNo ratings yet

- Site Audit (Details) - Mckinleyrice - Com - 2020-10-31 PDFDocument65 pagesSite Audit (Details) - Mckinleyrice - Com - 2020-10-31 PDFHemprasad BadgujarNo ratings yet

- SEO Audit Report Highlights Key Issues and Recommendations for Triple Star Commercial Cleaning ServicesDocument18 pagesSEO Audit Report Highlights Key Issues and Recommendations for Triple Star Commercial Cleaning ServicesTop Cooling fanNo ratings yet

- SEO Audit Report For IconicStreams by Ackta LTDDocument14 pagesSEO Audit Report For IconicStreams by Ackta LTDshah zeb razaNo ratings yet

- Digitaljust Fullexport 2021-08-02Document38 pagesDigitaljust Fullexport 2021-08-02Adrian BorleaNo ratings yet

- SEO Audit Report For IconicStreams by Ackta LTDDocument14 pagesSEO Audit Report For IconicStreams by Ackta LTDshah zeb razaNo ratings yet

- 1 Domain 2 IP Address 3 Domain Age 4 Domain Authority 5 Total Number of Indexed Pages in Google 6 Last Caching DateDocument13 pages1 Domain 2 IP Address 3 Domain Age 4 Domain Authority 5 Total Number of Indexed Pages in Google 6 Last Caching DateImperial universitiesNo ratings yet

- The Complete SEO Checklist For 2020 PDFDocument37 pagesThe Complete SEO Checklist For 2020 PDFNikita Shevchenko91% (11)

- SEO Score: 17 FailedDocument12 pagesSEO Score: 17 FailedlinkedNo ratings yet

- Website Report ForDocument13 pagesWebsite Report ForheymuraliNo ratings yet

- Bimal Maruti Audit ReportDocument25 pagesBimal Maruti Audit ReportSumukha KeshavaNo ratings yet

- Bedkingdom - Technical SEO AuditDocument121 pagesBedkingdom - Technical SEO AuditSanjay KumarNo ratings yet

- SEOptimer SEO Report SampleDocument11 pagesSEOptimer SEO Report Sampleedukacija11No ratings yet

- Semrush-Site Audit Compare Crawls-Mobiledokkan-19th May 2023Document7 pagesSemrush-Site Audit Compare Crawls-Mobiledokkan-19th May 2023sohag gamingNo ratings yet

- SEO ReportDocument11 pagesSEO ReportNilesh Kumar ChoudharyNo ratings yet

- SeocheckDocument22 pagesSeocheckjews srinathNo ratings yet

- SeoToolAdda - Check Tweov.com's SEODocument4 pagesSeoToolAdda - Check Tweov.com's SEOMNR SolutionsNo ratings yet

- Site Audit (Details)Document53 pagesSite Audit (Details)Adam AchouihNo ratings yet

- The SEO Pro Checklist For Marketing AgenciesDocument24 pagesThe SEO Pro Checklist For Marketing AgenciesSarah JonesNo ratings yet

- Search Engine Optimization: How to Make your Site Stand Out from the Apocalyptic Horde: Undead Institute, #13From EverandSearch Engine Optimization: How to Make your Site Stand Out from the Apocalyptic Horde: Undead Institute, #13No ratings yet

- Site AuditDocument90 pagesSite AuditKishan LimbaniNo ratings yet

- Ultimate AuditDocument11 pagesUltimate AuditPaul StoresNo ratings yet

- Web Report - Carpetcellar ComDocument9 pagesWeb Report - Carpetcellar ComAlaina LongNo ratings yet

- Darsul QuranDocument13 pagesDarsul QuranGM KibriaNo ratings yet

- Font GeneratorDocument22 pagesFont GeneratorAdeilson SilvaNo ratings yet

- SEO Audit Pear Tree HomeDocument17 pagesSEO Audit Pear Tree HomeTop Cooling fanNo ratings yet

- SEO Campaign Checklist - SEO and WebflowDocument15 pagesSEO Campaign Checklist - SEO and WebflowPavleNo ratings yet

- Project AnalysisDocument5 pagesProject AnalysisHimanshu SharmaNo ratings yet

- ElizaDocument11 pagesElizaNatural Herbs CareNo ratings yet

- Dahbi Morocco Tours Site Audit (Summary)Document2 pagesDahbi Morocco Tours Site Audit (Summary)Adam AchouihNo ratings yet

- SEO Audit ChecklistDocument82 pagesSEO Audit ChecklistlovelNo ratings yet

- Area of Experienceatiqul IslamDocument3 pagesArea of Experienceatiqul IslamAtiqul IslamNo ratings yet

- Area of Experienceatiqul IslamDocument3 pagesArea of Experienceatiqul IslamAtiqul IslamNo ratings yet

- Why Al-Qaeda Are Still A Threat 20 Years After 9/11Document5 pagesWhy Al-Qaeda Are Still A Threat 20 Years After 9/11Atiqul IslamNo ratings yet

- Academic NoteDocument18 pagesAcademic NoteAtiqul IslamNo ratings yet

- Study PaperDocument6 pagesStudy PaperAtiqul IslamNo ratings yet

- Proposal For E - CommerceDocument28 pagesProposal For E - CommerceAtiqul Islam100% (2)

- 01 Ecommerce BlueprintDocument4 pages01 Ecommerce Blueprintkhanh nguyenNo ratings yet

- What Is Ecommerce Comprehensive Guide To Ecommerce Strategy InfographicDocument1 pageWhat Is Ecommerce Comprehensive Guide To Ecommerce Strategy InfographicAtiqul IslamNo ratings yet

- Freelancing & Outsourcing PresentationDocument8 pagesFreelancing & Outsourcing PresentationAtiqul IslamNo ratings yet

- Lec 8 - DOMDocument144 pagesLec 8 - DOMBinh PhamNo ratings yet

- Responsive Web Design Free Code CampDocument18 pagesResponsive Web Design Free Code CampsangiwenmoyoNo ratings yet

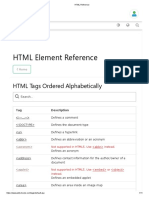

- HTML Elements W3 SchoolsDocument11 pagesHTML Elements W3 SchoolsRuthra DeviNo ratings yet

- Front-End Fundamentals - A Practical Guide To Front-End Web Development.Document137 pagesFront-End Fundamentals - A Practical Guide To Front-End Web Development.shafiq rehmanNo ratings yet

- MixedDocument15 pagesMixedzihadNo ratings yet

- WTA-7th A & B QB-All Modules-2019Document4 pagesWTA-7th A & B QB-All Modules-2019Mukul KumarNo ratings yet

- Wiley - PHP & MySQL - Server-Side Web Development - 978-1-119-14921-7Document2 pagesWiley - PHP & MySQL - Server-Side Web Development - 978-1-119-14921-7FranklinNo ratings yet

- Fundamentals of Internet Programming All in One HandoutDocument60 pagesFundamentals of Internet Programming All in One HandoutGamal BohoutaNo ratings yet

- QuestwebDocument12 pagesQuestwebAniket SinghNo ratings yet

- 12 Things You Must Know When Choosing A B2B Ecommerce PlatformDocument16 pages12 Things You Must Know When Choosing A B2B Ecommerce PlatformJorge GarzaNo ratings yet

- Learn Django Web Development FrameworkDocument10 pagesLearn Django Web Development FrameworkRaghavendra KamsaliNo ratings yet

- 12Document24 pages12pankajjaiswalkumarNo ratings yet

- (Indian Electronic Payment & E-Commerce Brand) : Neeraj Kr. Gupta Asstt. Prof. DVSGI, MeerutDocument7 pages(Indian Electronic Payment & E-Commerce Brand) : Neeraj Kr. Gupta Asstt. Prof. DVSGI, MeerutNeeraj GuptaNo ratings yet

- CSS Tutorial: W3schoolsDocument12 pagesCSS Tutorial: W3schoolsImran HossenNo ratings yet

- JavaScript Calculator & Text EffectsDocument27 pagesJavaScript Calculator & Text EffectsAnubhaw AnandNo ratings yet

- (XXXX) Syllabus - Front-End & Back-End Web Dev. Node - Js Express - Js - HS 260919Document1 page(XXXX) Syllabus - Front-End & Back-End Web Dev. Node - Js Express - Js - HS 260919Joker JrNo ratings yet

- KimiaDocument25 pagesKimiaSeptia Kemala wintaNo ratings yet

- HTML - CSS NotesDocument6 pagesHTML - CSS NotesTyler KennedyNo ratings yet

- E CommerceDocument4 pagesE CommerceKaif ShaikhNo ratings yet

- Organic Tilapia Home (38Document4 pagesOrganic Tilapia Home (38Russe edusmaNo ratings yet

- Daphne Feresin - ResumeDocument1 pageDaphne Feresin - ResumeDaphne FeresinNo ratings yet

- Strategi Pemasaran Agrowisata 1Document11 pagesStrategi Pemasaran Agrowisata 1faisal al sunandiNo ratings yet

- Web TechDocument42 pagesWeb TechJHanviNo ratings yet

- Data BreachDocument5 pagesData Breachallen martin almeidaNo ratings yet

- Pro Material Series: Free End To End Placement Preparation Online Course With Mock Test VisitDocument6 pagesPro Material Series: Free End To End Placement Preparation Online Course With Mock Test VisitAjay kumar TNPNo ratings yet

- 4Document46 pages4Kevin SengNo ratings yet

- Vedic scripture page on worship of weaponsDocument7 pagesVedic scripture page on worship of weaponsShridhar JoshiNo ratings yet

- Shri Sai Computer Institution Fees StructureDocument2 pagesShri Sai Computer Institution Fees StructurepjatinNo ratings yet

- Salesforce JMeter Test PlanDocument60 pagesSalesforce JMeter Test PlanReX_XaR_No ratings yet

- Computer 10 4th MY ANSWERDocument11 pagesComputer 10 4th MY ANSWERMark Jeffrey JuanNo ratings yet