Hidden Markov Model

Uploaded by Kishore Kumar Das


Hidden Markov Model

The Hidden Markov Model (HMM) is a probabilistic model used in machine learning. It is mostly used in speech recognition and, to some extent, for classification tasks. An HMM is characterized by three problems (evaluation, decoding and learning), and solving them yields the most likely classification. This chapter starts with a description of the Markov chain as a sequence labeler and then elaborates on the HMM, which is based on the Markov chain. The algorithms used to solve the three basic problems of evaluation, decoding and learning are discussed. The chapter ends by enumerating the advantages and disadvantages of the HMM.

A sequence classifier or sequence labeler is a model whose job is to assign a label or class to each unit in a sequence. The HMM is a probabilistic sequence classifier: given a sequence of units (words, letters, morphemes, sentences, etc.), its job is to compute a probability distribution over possible label sequences and choose the best label sequence.

According to Han Shu [1997], Hidden Markov Models (HMMs) were initially developed in the 1960s by Baum and Eagon at the Institute for Defense Analyses.

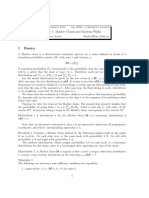

a. Markov Chain

The Hidden Markov Model is one of the most important machine learning models in

speech and language processing. In order to define it properly, we need to first

introduce the Markov chain.

Markov chains and Hidden Markov Models are both extensions of the finite automaton, which operates on a sequence of input observations. According to Jurafsky and Martin [2005], a weighted finite-state automaton is a simple augmentation of the finite automaton in which each arc is associated with a probability indicating how likely that path is to be taken. The probabilities on all the arcs leaving a node must sum to 1.

Chapter 3 : Hidden Markov Model Page 33

According to Sajja [2012], a Markov chain is a special case of a weighted automaton in which the input sequence uniquely determines which states the automaton will go through. Because it cannot represent inherently ambiguous problems, a Markov chain is only useful for assigning probabilities to unambiguous sequences.

A Markov chain is specified by the following components:

$Q = q_1 q_2 \ldots q_N$: a set of states.

$A = [a_{ij}]_{N \times N}$: a transition probability matrix $A$, each $a_{ij}$ representing the probability of moving from state $i$ to state $j$.

$q_0, q_{end}$: a special start state and end state which are not associated with observations.

A Markov chain embodies an important assumption about these probabilities. In a first-order Markov chain, the probability of a particular state depends only on the immediately preceding state. Symbolically:

Markov Assumption: $P(q_i \mid q_1 \ldots q_{i-1}) = P(q_i \mid q_{i-1})$

Since each $a_{ij}$ expresses the probability $P(q_j \mid q_i)$, the laws of probability require that the probabilities of the arcs leaving any given state sum to 1:

$\sum_{j=1}^{N} a_{ij} = 1 \quad \forall i$

$\pi = \pi_1, \pi_2, \ldots, \pi_N$: an initial probability distribution over states. $\pi_i$ is the probability that the Markov chain will start in state $i$. Some states $j$ may have $\pi_j = 0$, meaning that they cannot be initial states.

Each $\pi_i$ expresses the probability $P(q_i \mid START)$, so all the $\pi$ probabilities must sum to 1:

$\sum_{i=1}^{N} \pi_i = 1$
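As an illustration of these definitions, the following minimal Python sketch computes the probability of a state sequence under a first-order Markov chain. The weather states and all numeric values are hypothetical and chosen only for illustration; the helper names `check_stochastic` and `sequence_probability` are likewise assumptions, not part of any library.

```python
# Hypothetical weather chain; every number below is illustrative only.
pi = {"cold": 0.5, "hot": 0.3, "rain": 0.2}   # initial distribution
A = {                                          # A[i][j] = P(next = j | current = i)
    "cold": {"cold": 0.6, "hot": 0.1, "rain": 0.3},
    "hot":  {"cold": 0.2, "hot": 0.6, "rain": 0.2},
    "rain": {"cold": 0.3, "hot": 0.2, "rain": 0.5},
}


def check_stochastic(pi, A, tol=1e-9):
    """Return True if pi and every row of A sum to 1 (within tol)."""
    ok_pi = abs(sum(pi.values()) - 1.0) < tol
    ok_rows = all(abs(sum(row.values()) - 1.0) < tol for row in A.values())
    return ok_pi and ok_rows


def sequence_probability(seq, pi, A):
    """P(q_1 ... q_n) = pi[q_1] * product of A[q_{i-1}][q_i] (Markov assumption)."""
    prob = pi[seq[0]]
    for prev, cur in zip(seq, seq[1:]):
        prob *= A[prev][cur]
    return prob


p = sequence_probability(["hot", "hot", "cold"], pi, A)
print(check_stochastic(pi, A), p)
```

For the sequence hot, hot, cold the result is the product 0.3 × 0.6 × 0.2, which is approximately 0.036.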

b. The Hidden Markov Model

A Markov chain is useful when we need to compute the probability of a sequence of events that we can observe in the world. In many cases, however, the events in which we are interested may not be directly observable. For example, in named entity recognition (NER) the named entity tags are not observed in the world in the way that a sequence of weather conditions (cold, hot, rain) is observed. Only the sequence of words is observed, and the NER system has to infer the correct named entity tags from that word sequence. The named entity tags are said to be hidden because they are not observed.


According to Jurafsky and Martin [2005], a Hidden Markov Model (HMM) allows us to talk about both observed events (like the words that we see in the input) and hidden events (like named entity tags) that we think of as causal factors in our probabilistic model.

i. Definition of HMM

According to Trujillo [1999] and Jurafsky and Martin [2005], an HMM is specified by a set of states Q, a set of transition probabilities A, a set of observation likelihoods B, a defined start state and end state(s), and a set of observation symbols O. The observation symbols are not drawn from the state set Q, which means that observations may be disjoint from states. For example, in NER the sequence of words to be tagged is the observation sequence, whereas the states are the set of named entity classes.

Let us begin with a formal definition of an HMM, focusing on how it differs from a Markov chain. According to Jurafsky and Martin [2005], an HMM is specified by the following components:

$Q = q_1 q_2 \ldots q_N$: a set of states.

$A = [a_{ij}]_{N \times N}$: a transition probability matrix $A$, each $a_{ij}$ representing the probability of moving from state $i$ to state $j$.

$O = o_1 o_2 \ldots o_T$: a sequence of $T$ observations, each one drawn from a vocabulary $V = v_1, v_2, \ldots, v_V$.

$B = b_i(o_t)$: a set of observation likelihoods, also called emission probabilities, each expressing the probability of an observation $o_t$ being generated from a state $i$.

$q_0, q_{end}$: a special start state and end state which are not associated with observations.

An alternative representation that is sometimes used for Markov chains, and hence for HMMs, does not rely on a start or end state. Instead, it represents the distribution over initial and accepting states explicitly:

$\pi = \pi_1, \pi_2, \ldots, \pi_N$: an initial probability distribution over states. $\pi_i$ is the probability that the Markov chain will start in state $i$. Some states $j$ may have $\pi_j = 0$, meaning that they cannot be initial states.

$QA = \{q_x, q_y, \ldots\}$: a set $QA \subset Q$ of legal accepting states.
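The components listed above can be collected into a small data structure. The sketch below is one possible layout, assuming the alternative representation with an explicit initial distribution and no accepting-state set; the class name `HMM`, its field names, the toy NER tags and every probability value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HMM:
    states: list   # Q: hidden states (here, named entity tags)
    vocab: list    # V: observation vocabulary (here, words)
    pi: dict       # pi[i]   = P(first state is i)
    A: dict        # A[i][j] = P(next state j | current state i)
    B: dict        # B[i][o] = P(observation o | state i)

    def validate(self, tol=1e-9):
        """Check that pi and every row of A and B is a probability distribution."""
        def sums_to_one(dist):
            return abs(sum(dist.values()) - 1.0) < tol
        return (sums_to_one(self.pi)
                and all(sums_to_one(self.A[s]) for s in self.states)
                and all(sums_to_one(self.B[s]) for s in self.states))


# Toy model with made-up numbers, for illustration only.
toy = HMM(
    states=["PER", "NAN", "CITY"],
    vocab=["Ram", "lives", "in", "Jaipur"],
    pi={"PER": 0.6, "NAN": 0.3, "CITY": 0.1},
    A={
        "PER":  {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
        "NAN":  {"PER": 0.1, "NAN": 0.6, "CITY": 0.3},
        "CITY": {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
    },
    B={
        "PER":  {"Ram": 0.70, "lives": 0.10, "in": 0.10, "Jaipur": 0.10},
        "NAN":  {"Ram": 0.05, "lives": 0.45, "in": 0.45, "Jaipur": 0.05},
        "CITY": {"Ram": 0.10, "lives": 0.05, "in": 0.05, "Jaipur": 0.80},
    },
)
print(toy.validate())
```

Note that the observations (words) and the states (tags) are disjoint sets here, as the definition allows.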

ii. HMM Assumptions

A first-order Hidden Markov Model instantiates the following simplifying assumptions.

Markov Assumption: As in the case of a first-order Markov chain, the probability of a particular state depends only on the immediately preceding state:

$P(q_i \mid q_1 \ldots q_{i-1}) = P(q_i \mid q_{i-1})$

Output Independence Assumption: The probability of an output observation $o_i$ depends only on the state $q_i$ that produced the observation, and not on any other state or any other observation:

$P(o_i \mid q_1 \ldots q_i, \ldots, q_n, o_1, \ldots, o_i, \ldots, o_n) = P(o_i \mid q_i)$

Stationary Assumption: The state transition probabilities are assumed to be independent of the actual time at which the transitions take place. Mathematically,

$P(q_{t_1+1} = j \mid q_{t_1} = i) = P(q_{t_2+1} = j \mid q_{t_2} = i)$ for any times $t_1$ and $t_2$.

Having described the structure of an HMM, it is logical to introduce the algorithms for computing with it. An influential tutorial by Rabiner [1989] introduced the idea that Hidden Markov Models should be characterized by three fundamental problems: evaluation, decoding and learning. The evaluation problem can be used for isolated (word) recognition. The decoding problem is related to continuous recognition as well as to segmentation. The learning problem must be solved if we want to train an HMM for subsequent use in recognition tasks.

Evaluation: Evaluation asks for the probability that a particular sequence of symbols is produced by a particular model. That is, given an HMM $\lambda = (A, B)$ and an observation sequence $O$, determine the likelihood $P(O \mid \lambda)$. Either of two algorithms can be used for evaluation: the forward algorithm or the backward algorithm. Note that this does not mean the forward-backward algorithm.

iii. Computing Likelihood: The Forward Algorithm

Our first problem is to compute the likelihood of a particular observation sequence. In recognition, we are typically given a new observation sequence and a set of models, and the problem is to find the model that best explains the sequence, or in other words the model that gives the highest likelihood to the data.

Computing Likelihood: Given an HMM $\lambda = (A, B)$ and an observation sequence $O$, determine the likelihood $P(O \mid \lambda)$.

In Hidden Markov Models, each hidden state produces only a single observation. Thus the sequence of hidden states and the sequence of observations have the same length. For example, if there are n words (observations) in a sentence, then there will be n tags (hidden states).

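Given this one-to-one mapping and the Markov assumptions, for a particular hidden state sequence $Q = q_0, q_1, q_2, \ldots, q_n$ and an observation sequence $O = o_1, o_2, \ldots, o_n$, the joint probability of the observation sequence and the state sequence (using a special start state $q_0$ rather than $\pi$ probabilities) is:

$P(O, Q) = P(O \mid Q) \times P(Q) = \prod_{i=1}^{n} P(o_i \mid q_i) \times \prod_{i=1}^{n} P(q_i \mid q_{i-1})$

The computation for our observation sequence (Ram lives in Jaipur) and one possible hidden state sequence PER NAN NAN CITY is as follows:

$P(\text{Ram lives in Jaipur}, \text{PER NAN NAN CITY}) = P(\text{PER} \mid q_0) \times P(\text{NAN} \mid \text{PER}) \times P(\text{NAN} \mid \text{NAN}) \times P(\text{CITY} \mid \text{NAN}) \times P(\text{Ram} \mid \text{PER}) \times P(\text{lives} \mid \text{NAN}) \times P(\text{in} \mid \text{NAN}) \times P(\text{Jaipur} \mid \text{CITY})$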

In order to compute the true total likelihood of Ram lives in Jaipur, however, we need to sum over all possible hidden state sequences. For an HMM with $N$ hidden states and an observation sequence of $T$ observations, there are $N^T$ possible hidden sequences. For real tasks, where $N$ and $T$ are both large, $N^T$ is a very large number, so one cannot compute the total observation likelihood by computing a separate observation likelihood for each hidden state sequence and then summing them up.

Instead of using such an exponential algorithm, we use an efficient algorithm called the forward algorithm, which runs in $O(N^2 T)$ time. The forward algorithm is a dynamic programming algorithm: it computes the observation probability by summing over the probabilities of all possible hidden-state paths that could generate the observation sequence, storing intermediate values so that shared path prefixes are computed only once.
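The following Python sketch illustrates the forward computation on a toy NER model and checks it against the brute-force sum over all hidden state sequences. It assumes the alternative representation with an initial distribution `pi` instead of a start state, and no end state. The function names `forward` and `brute_force` and all probability values are hypothetical and serve only as an illustration.

```python
from itertools import product

# Toy model; every number is made up for illustration.
states = ["PER", "NAN", "CITY"]
pi = {"PER": 0.6, "NAN": 0.3, "CITY": 0.1}
A = {
    "PER":  {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
    "NAN":  {"PER": 0.1, "NAN": 0.6, "CITY": 0.3},
    "CITY": {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
}
B = {
    "PER":  {"Ram": 0.70, "lives": 0.10, "in": 0.10, "Jaipur": 0.10},
    "NAN":  {"Ram": 0.05, "lives": 0.45, "in": 0.45, "Jaipur": 0.05},
    "CITY": {"Ram": 0.10, "lives": 0.05, "in": 0.05, "Jaipur": 0.80},
}


def forward(obs, states, pi, A, B):
    """Return P(O | lambda) using the forward recursion, O(N^2 T)."""
    # alpha[j] = P(o_1 .. o_t, q_t = j | lambda)
    alpha = {j: pi[j] * B[j][obs[0]] for j in states}
    for o in obs[1:]:
        alpha = {
            j: sum(alpha[i] * A[i][j] for i in states) * B[j][o]
            for j in states
        }
    return sum(alpha.values())


def brute_force(obs, states, pi, A, B):
    """Return P(O | lambda) by enumerating all N^T hidden state sequences."""
    total = 0.0
    for path in product(states, repeat=len(obs)):
        p = pi[path[0]] * B[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][obs[t]]
        total += p
    return total


obs = ["Ram", "lives", "in", "Jaipur"]
likelihood = forward(obs, states, pi, A, B)
print(likelihood, brute_force(obs, states, pi, A, B))
```

Both functions return the same value up to floating-point rounding, but the enumeration visits $3^4 = 81$ paths here, while the forward recursion performs only a few dozen multiplications.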

Decoding: Decoding means determining the most likely sequence of states that produced the observation sequence. Thus, given an observation sequence $O$ and an HMM $\lambda = (A, B)$, discover the best hidden state sequence $Q$. For this problem we use the Viterbi algorithm.

iv. Decoding: The Viterbi Algorithm

For any model that contains hidden variables, such as an HMM, the task of determining which sequence of variables is the underlying source of some sequence of observations is called the decoding task. Here, the task of the decoder is to find the best hidden named entity tag sequence. More formally, given as input an HMM $\lambda = (A, B)$ and a sequence of observations $O = o_1, o_2, \ldots, o_T$, find the most probable sequence of states $Q = q_1 q_2 q_3 \ldots q_T$.

One could propose the following procedure to find the best sequence: for each possible hidden state sequence (PER LOC NAN NAN, NAN PER NAN LOC, etc.), run the forward algorithm and compute the likelihood of the observation sequence given that hidden state sequence, and then choose the hidden state sequence with the maximum observation likelihood. This cannot be done in practice, since there is an exponentially large number of state sequences. Instead, the most common decoding algorithm for HMMs is the Viterbi algorithm.

Like the forward algorithm, the Viterbi algorithm processes the observation sequence one observation at a time and fills in a vector of values for each time step. Each cell of the vector, $v_t(j)$, represents the probability that the HMM is in state $j$ after seeing the first $t$ observations and passing through the most likely state sequence $q_1 \ldots q_{t-1}$, given the automaton $\lambda$. The value of each cell $v_t(j)$ is computed recursively by taking the most probable path that could lead to this cell. Formally, each cell expresses the following probability:

$v_t(j) = \max_{q_0, q_1, \ldots, q_{t-1}} P(q_0, q_1 \ldots q_{t-1}, o_1, o_2 \ldots o_t, q_t = j \mid \lambda)$

$v_t(j) = \max_{1 \le i \le N} v_{t-1}(i) \, a_{ij} \, b_j(o_t)$

The three factors that are multiplied in the above equation to extend the previous paths and compute the Viterbi probability at time $t$ are:

$v_{t-1}(i)$: the previous Viterbi path probability from the previous time step.

$a_{ij}$: the transition probability from previous state $q_i$ to current state $q_j$.

$b_j(o_t)$: the state observation likelihood of the observation symbol $o_t$ given the current state $j$.

The Viterbi algorithm is identical to the forward algorithm with two exceptions. First, it takes the max over the previous path probabilities, whereas the forward algorithm takes the sum. Second, it has back pointers, a component that the forward algorithm lacks. This is because, while the forward algorithm only needs to produce an observation likelihood, the Viterbi algorithm must produce a probability and also the most likely state sequence. This best state sequence is computed by keeping track of the path of hidden states that led to each state and then tracing the back pointers from the best final state back to the beginning.
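The recursion and the back pointers described above can be sketched in Python as follows. This is a minimal illustration, assuming an explicit initial distribution `pi` in place of a start state and no end state; the function name `viterbi` and all probability values in the toy model are hypothetical.

```python
# Toy model; every number is made up for illustration.
states = ["PER", "NAN", "CITY"]
pi = {"PER": 0.6, "NAN": 0.3, "CITY": 0.1}
A = {
    "PER":  {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
    "NAN":  {"PER": 0.1, "NAN": 0.6, "CITY": 0.3},
    "CITY": {"PER": 0.1, "NAN": 0.8, "CITY": 0.1},
}
B = {
    "PER":  {"Ram": 0.70, "lives": 0.10, "in": 0.10, "Jaipur": 0.10},
    "NAN":  {"Ram": 0.05, "lives": 0.45, "in": 0.45, "Jaipur": 0.05},
    "CITY": {"Ram": 0.10, "lives": 0.05, "in": 0.05, "Jaipur": 0.80},
}


def viterbi(obs, states, pi, A, B):
    """Return (best state sequence, its joint probability with obs)."""
    # v[j] = probability of the best path that ends in state j at the current time
    v = {j: pi[j] * B[j][obs[0]] for j in states}
    backpointers = []  # one dict per time step t >= 2: state j -> best previous state
    for o in obs[1:]:
        new_v, bp = {}, {}
        for j in states:
            # max instead of the sum used by the forward algorithm
            best_i = max(states, key=lambda i: v[i] * A[i][j])
            new_v[j] = v[best_i] * A[best_i][j] * B[j][o]
            bp[j] = best_i
        v = new_v
        backpointers.append(bp)
    # Trace the back pointers from the best final state to the beginning.
    last = max(states, key=lambda j: v[j])
    path = [last]
    for bp in reversed(backpointers):
        path.append(bp[path[-1]])
    path.reverse()
    return path, v[last]


best_path, best_prob = viterbi(["Ram", "lives", "in", "Jaipur"], states, pi, A, B)
print(best_path, best_prob)
```

With these toy numbers the best tag sequence is PER NAN NAN CITY, with a joint probability of about 0.0098.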


v. Learning in HMM

Learning: Generally, the learning problem is the adjustment of the HMM parameters so that the given set of observations (called the training set) is represented by the model in the best way for the intended application. More explicitly, given an observation sequence $O$ and the set of states in the HMM, learn the HMM parameters $A$ and $B$. The quantity to be optimized during the learning process can therefore differ from application to application. In other words, there may be several optimization criteria for learning, and a suitable one is selected depending on the application.

In essence, learning finds the model that best fits the data. The following three algorithms can be applied to obtain the desired model:

1. MLE (maximum likelihood estimation)

2. Viterbi training (different from Viterbi decoding)

3. Baum-Welch (the forward-backward algorithm)
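When a tagged training corpus is available, the first option (MLE) reduces to counting and normalizing. The sketch below shows this for a tiny hand-made corpus. The function name `mle_estimate`, the corpus and the extra words (Sita, works, Delhi) are hypothetical, and no smoothing is applied, so any unseen transition or word receives probability zero.

```python
from collections import Counter, defaultdict


def mle_estimate(tagged_sentences):
    """Estimate (pi, A, B) by relative frequency from (word, tag) sentences."""
    start_counts = Counter()
    trans_counts = defaultdict(Counter)   # trans_counts[prev_tag][tag]
    emit_counts = defaultdict(Counter)    # emit_counts[tag][word]
    for sentence in tagged_sentences:
        prev_tag = None
        for word, tag in sentence:
            if prev_tag is None:
                start_counts[tag] += 1
            else:
                trans_counts[prev_tag][tag] += 1
            emit_counts[tag][word] += 1
            prev_tag = tag

    def normalize(counter):
        total = sum(counter.values())
        return {key: count / total for key, count in counter.items()}

    pi = normalize(start_counts)
    A = {tag: normalize(c) for tag, c in trans_counts.items()}
    B = {tag: normalize(c) for tag, c in emit_counts.items()}
    return pi, A, B


# Hypothetical two-sentence training corpus.
corpus = [
    [("Ram", "PER"), ("lives", "NAN"), ("in", "NAN"), ("Jaipur", "CITY")],
    [("Sita", "PER"), ("works", "NAN"), ("in", "NAN"), ("Delhi", "CITY")],
]
pi, A, B = mle_estimate(corpus)
print(pi, A["NAN"], B["NAN"])
```

In this corpus the tag NAN is followed by NAN twice and by CITY twice, so the estimated transition probabilities from NAN are 0.5 each. Baum-Welch is needed instead when the training data carry no tags.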

Advantages and Disadvantages of HMM

Advantages:

- The underlying theoretical basis is sound, elegant and easy to understand.

- It is easy to implement and analyze.

- HMM taggers are very simple to train (one just needs to compile counts from the training corpus).

- It performs relatively well (over 90% performance on named entities).

- Statisticians are comfortable with the theoretical base of the HMM.

- It gives the liberty to manipulate the training and verification processes.

- It permits mathematical and theoretical analysis of the results and processes.

- With good design, prior knowledge can be incorporated into the architecture.

- The model can be initialized close to something believed to be correct.

- It avoids the label bias problem.

- It has also proved effective for a number of other tasks, such as speech recognition, handwriting recognition and sign language recognition.

- Because each HMM uses only positive data, HMMs scale well: new words can be added without affecting HMMs that have already been learnt.

Disadvantages:

- In order to define a joint probability over observation and label sequences, an HMM needs to enumerate all possible observation sequences.

- Modeling the probability of assigning a tag to a word can be very difficult if the "words" are complex.

- It is not practical to represent multiple overlapping features and long-term dependencies.

- The number of parameters to be estimated is huge, so a large data set is needed for training.

- It requires a huge amount of training in order to obtain better results.

- HMMs use only positive data for training. In other words, HMM training involves maximizing the observed probabilities for examples belonging to a class, but it does not minimize the probability of observing instances from other classes.

- It adopts the Markovian assumption that the emission and transition probabilities depend only on the current state, which does not map well to many real-world domains.
