ML Session Basic

Uploaded by somukhannan

Standard Deviation - how far data points typically deviate from the mean (average); commonly used to flag outliers.

Variance - the average of the squared differences from the mean; it is the square of the standard deviation (the standard deviation is the square root of the variance).
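The mean, variance and standard deviation can be computed by hand as a quick check. This is a minimal sketch using the population formulas (dividing by n); the function names and the cutoff of k standard deviations for outliers are illustrative assumptions, not part of the notes.

```python
import math

def mean(values):
    return sum(values) / len(values)

def variance(values):
    # Average of the squared differences from the mean (population variance).
    m = mean(values)
    return sum((x - m) ** 2 for x in values) / len(values)

def std_dev(values):
    # Standard deviation is the square root of the variance.
    return math.sqrt(variance(values))

def outliers(values, k=2.0):
    # Assumed rule of thumb: flag points more than k standard deviations from the mean.
    m, s = mean(values), std_dev(values)
    return [x for x in values if abs(x - m) > k * s]

data = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(data), variance(data), std_dev(data))
print(outliers(data + [50]))
```

For real work, Python's statistics module provides pvariance and pstdev for the same population formulas.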

Overfitting - the model learns noise and incidental details of the training data, so its predictions on new data differ more from the true values (it does not generalize).

Underfitting - the model is too simple (not sufficiently complex) to capture the pattern in the data, so it predicts poorly even on the training data.

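Cross Validation Error - the error measured on held-out validation data (for example, averaged over k folds); it is used as an estimate of the test data error.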
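One common way to measure this error is k-fold cross validation: each fold is held out once while the model is fitted on the rest. The sketch below assumes a deliberately trivial model (predict the mean of the training targets) and mean squared error; both choices and all function names are illustrative assumptions.

```python
def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_validation_error(ys, k):
    """Average held-out squared error of a 'predict the training mean' model."""
    fold_errors = []
    for held_out in k_fold_indices(len(ys), k):
        held = set(held_out)
        train = [y for i, y in enumerate(ys) if i not in held]
        prediction = sum(train) / len(train)  # "fit" on the training folds only
        fold_mse = sum((ys[i] - prediction) ** 2 for i in held_out) / len(held_out)
        fold_errors.append(fold_mse)
    return sum(fold_errors) / len(fold_errors)

print(k_fold_indices(5, 2))
print(cross_validation_error([0.0, 0.0, 2.0, 2.0], k=2))
```

In practice the data is usually shuffled before splitting; the contiguous folds here keep the example easy to follow.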

Cost Function -

Bias - low bias and high bias. Bias is the difference between the expected value of the model's prediction and the true value. High bias shows up as high error on the training data.

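Variance - low variance and high variance. Variance is how much the predicted value varies when the model is trained on different data. High variance shows up as high error on the testing data relative to the training data.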

Underfitting - the model is too simple, or the training is insufficient, to learn the pattern in the data.

Mean - Average in statistics

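Gradient Descent Algorithm - an optimization algorithm that repeatedly adjusts the model parameters in the direction that reduces the cost function. The goal is the global optimum, but it can settle in a local optimum.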
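A minimal sketch of gradient descent on a one-variable cost function shows how the starting point decides which optimum is reached. The cost function, learning rate and step count below are hypothetical values chosen for illustration.

```python
def gradient_descent(gradient, start, learning_rate=0.01, steps=1000):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x

def cost(x):
    # Hypothetical cost with two valleys: a shallow (local) one near x = 1.13
    # and a deeper (global) one near x = -1.30.
    return x**4 - 3 * x**2 + x

def cost_gradient(x):
    # Derivative of cost(x).
    return 4 * x**3 - 6 * x + 1

from_right = gradient_descent(cost_gradient, start=2.0)   # ends in the local optimum
from_left = gradient_descent(cost_gradient, start=-2.0)   # ends in the global optimum
print(from_right, cost(from_right))
print(from_left, cost(from_left))
```

Both runs stop where the gradient is close to zero, but only the run started on the left reaches the lower cost.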

Local Optimum

Mid Point

Drop Out - randomly dropping units (hidden and visible) from the neural network during training, to reduce overfitting.
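As a rough sketch, dropout can be applied to a list of activations by zeroing each unit at random. The version below is "inverted" dropout, which also scales the surviving units by 1 / keep probability so the expected sum stays the same; that scaling, the function name and the sample values are assumptions for illustration.

```python
import random

def dropout(activations, drop_prob, rng):
    """Zero each unit with probability drop_prob and rescale the survivors."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

rng = random.Random(0)            # seeded so the run is repeatable
hidden = [0.5, 1.0, 1.5, 2.0]     # hypothetical hidden-layer activations
dropped = dropout(hidden, drop_prob=0.5, rng=rng)
print(dropped)
```

Dropout is applied only during training; at prediction time all units are kept.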

Cost function - measures the error between the model's predictions and the actual values; training aims to minimize it.

SVM is used for Multidimensional Data

Normalization - rescaling values from different ranges so that they all fall between 0 and 1.
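One common way to do this is min-max scaling. The sketch below assumes that method; the function name and the choice to return zeros when every value is identical are illustrative assumptions.

```python
def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: there is no range to scale, so return zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

print(min_max_normalize([10, 20, 30]))
```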

Neural Networks - mimic the neurons and connections of the human brain in order to learn things.

Is a process - a neural network is a series of algorithms that tries to find the relationships in the given data through a learning process.

Back Propagation

Neural network parameters - bias, activation, neurons (features)

Outlier

Vanishing Gradient

Deep Learning - deep learning is a part of machine learning. It mimics the human brain to learn as a human does, using networks of neurons like those in the brain.

Perceptron - the simplest neural network: a single-layer neural network.

In general, a neural network has:

Input Layer

Hidden Layer

Output Layer

Neuron

Weight

Features

How does a neural network work?

The features are sent to the input layer; each feature goes to a separate node in the input layer (x1, x2, x3).

The first layer is the input layer, the second is the hidden layer (there may be several hidden layers), and the third is the output layer.

The lines (weights) connect the neurons in adjacent layers and carry data from one end to the other (w1, w2, w3).

From the input layer to the hidden layer, every feature is connected to every neuron in the hidden layer; the hidden layer neurons are in turn connected to the output layer neurons.

Each neuron in the hidden layer does 2 things: a summation of the weights multiplied by the features, and an activation of that sum.

Sigmoid is an example of an activation function.

Once the weights and features are multiplied and summed, the activation function is applied to the result. A bias is added during the summation; this bias is a learned offset term, not the training error described under Bias above.

The sigmoid activation function outputs a value between 0 and 1.
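The steps above (multiply features by weights, sum, add the bias, apply sigmoid) can be sketched as a small forward pass. The weights, biases and layer sizes below are made-up values for illustration, and the function names are assumptions.

```python
import math

def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def neuron(features, weights, bias):
    # Step 1: weighted sum of the inputs plus the bias term.
    z = sum(w * x for w, x in zip(weights, features)) + bias
    # Step 2: activation of that sum.
    return sigmoid(z)

def layer(features, weight_rows, biases):
    # Every input is connected to every neuron in the layer.
    return [neuron(features, w, b) for w, b in zip(weight_rows, biases)]

x = [1.0, 2.0, 3.0]                                   # features x1, x2, x3
hidden = layer(x, [[0.1, 0.2, 0.3], [-0.3, 0.2, 0.1]], [0.0, 0.1])
output = layer(hidden, [[0.5, -0.5]], [0.0])
print(hidden, output)
```

Training would adjust the weights and biases; this sketch only shows how a prediction is computed from fixed values.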

Multi dimensional data - SVM

Normalization -

Back Propagation

Gradient Algorithm

Hyper Parameter

Vanishing Gradient

Exploding Gradient

Drop out

Overfitting - not generalized --> to avoid it: regularization, Drop out, Data Augmentation

Underfitting

Which layers do we include in a CNN?

Sigmoid function

Softmax - probability

Pooling layer -> pooling, anti-aliasing, max pooling, kernel (3*3 matrix)

Fully Connected Layer

Image Processing - Convolution layer
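To make the convolution and pooling layers concrete, here is a minimal sketch on a tiny 4*4 "image" stored as nested lists. It assumes no padding, a stride of 1 for the convolution, and non-overlapping windows for max pooling. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is cross-correlation. The names and sample values are illustrative.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size*size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in cols]
            for r in rows]

image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
ones_kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]   # a 3*3 kernel of ones

print(convolve2d(image, ones_kernel))
print(max_pool(image))
```

A real CNN learns the kernel values during training; the kernel of ones here just sums each 3*3 neighbourhood.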

Cost function (loss function) - best fit
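As an illustration of "best fit", the sketch below uses mean squared error as the cost function and finds the straight line that minimizes it with the closed-form least-squares formulas. The choice of MSE, the function names and the sample data are assumptions for illustration.

```python
def mean_squared_error(y_true, y_pred):
    # Average of the squared differences between actual and predicted values.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def fit_line(xs, ys):
    """Slope and intercept of the line that minimizes mean squared error."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

xs = [0, 1, 2, 3]
ys = [1, 3, 5, 7]                      # generated from y = 2x + 1
slope, intercept = fit_line(xs, ys)
best = [slope * x + intercept for x in xs]
worse = [1.5 * x + 1 for x in xs]      # a line with a wrong slope

print(slope, intercept)
print(mean_squared_error(ys, best), mean_squared_error(ys, worse))
```

The best-fit line has the lowest cost; any other line, such as the one with slope 1.5, gives a larger value.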

You might also like

- Public Value and Art For All?Document20 pagesPublic Value and Art For All?yolandaniguasNo ratings yet

- PTV Vissim - First Steps ENG PDFDocument34 pagesPTV Vissim - First Steps ENG PDFBeby RizcovaNo ratings yet

- Virgin Galactic Profile & Performance Business ReportDocument10 pagesVirgin Galactic Profile & Performance Business ReportLoic PitoisNo ratings yet

- Lakheri Cement Works: Report On Overhauling (Replacement of Internals) of Vrm-1 Main Gearbox 22-May-09 To 27-Jun-09Document47 pagesLakheri Cement Works: Report On Overhauling (Replacement of Internals) of Vrm-1 Main Gearbox 22-May-09 To 27-Jun-09sandesh100% (1)

- Unit 4Document57 pagesUnit 4HARIPRASATH PANNEER SELVAM100% (1)

- Unit 2 v1.Document41 pagesUnit 2 v1.Kommi Venkat sakethNo ratings yet

- Notes On Introduction To Deep LearningDocument19 pagesNotes On Introduction To Deep Learningthumpsup1223No ratings yet

- Week - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Document7 pagesWeek - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Mrunal BhilareNo ratings yet

- DL Unit 1Document16 pagesDL Unit 1nitinNo ratings yet

- Deep Learning and Its ApplicationsDocument21 pagesDeep Learning and Its ApplicationsAman AgarwalNo ratings yet

- Week 4Document5 pagesWeek 4Mrunal BhilareNo ratings yet

- A Probabilistic Theory of Deep Learning: Unit 2Document17 pagesA Probabilistic Theory of Deep Learning: Unit 2HarshitNo ratings yet

- SVMDocument2 pagesSVMNiraj AnandNo ratings yet

- Machine Learning101Document20 pagesMachine Learning101consaniaNo ratings yet

- Deep Learning PDFDocument55 pagesDeep Learning PDFNitesh Kumar SharmaNo ratings yet

- Institute of Engineering and Technology Davv, Indore: Lab Assingment OnDocument14 pagesInstitute of Engineering and Technology Davv, Indore: Lab Assingment OnNikhil KhatloiyaNo ratings yet

- Unit Iv DMDocument58 pagesUnit Iv DMSuganthi D PSGRKCWNo ratings yet

- Deep Learning Assignment 1 Solution: Name: Vivek Rana Roll No.: 1709113908Document5 pagesDeep Learning Assignment 1 Solution: Name: Vivek Rana Roll No.: 1709113908vikNo ratings yet

- Unit - 2Document24 pagesUnit - 2vvvcxzzz3754No ratings yet

- Deep Learning QuestionsDocument51 pagesDeep Learning QuestionsAditi Jaiswal100% (1)

- Unit 6 MLDocument2 pagesUnit 6 ML091105Akanksha ghuleNo ratings yet

- Ann Cae-3Document22 pagesAnn Cae-3Anurag RautNo ratings yet

- Artificial Neural Network Part-2Document15 pagesArtificial Neural Network Part-2Zahid JavedNo ratings yet

- DL Unit-3Document9 pagesDL Unit-3Kalpana MNo ratings yet

- Deep Learning Interview Questions and AnswersDocument21 pagesDeep Learning Interview Questions and AnswersSumathi MNo ratings yet

- Activation Function - Lect 1Document5 pagesActivation Function - Lect 1NiteshNarukaNo ratings yet

- Neural Networks and Their Statistical ApplicationDocument41 pagesNeural Networks and Their Statistical ApplicationkamjulajayNo ratings yet

- UNIT-1 Foundations of Deep LearningDocument51 pagesUNIT-1 Foundations of Deep Learningbhavana100% (1)

- ShayakDocument6 pagesShayakShayak RayNo ratings yet

- SOS Final SubmissionDocument36 pagesSOS Final SubmissionAyush JadiaNo ratings yet

- Computer Vision NN ArchitectureDocument19 pagesComputer Vision NN ArchitecturePrasu MuthyalapatiNo ratings yet

- Deep LearningDocument12 pagesDeep Learningshravan3394No ratings yet

- Unit 2 DLDocument3 pagesUnit 2 DLAnkit MahapatraNo ratings yet

- 2.building Blocks of Neural NetworksDocument2 pages2.building Blocks of Neural NetworkskoezhuNo ratings yet

- DeepLearing TheoryDocument51 pagesDeepLearing TheorytharunNo ratings yet

- ML3 Unit 4-3Document13 pagesML3 Unit 4-3ISHAN SRIVASTAVANo ratings yet

- Convolutional Neural Networks & ZapierDocument75 pagesConvolutional Neural Networks & ZapierTimer InsightNo ratings yet

- TensorflowDocument25 pagesTensorflowSudharshan VenkateshNo ratings yet

- Unit 5Document8 pagesUnit 5arinkamble1711No ratings yet

- Unit 2 SCDocument6 pagesUnit 2 SCKatyayni SharmaNo ratings yet

- Chapter OneDocument9 pagesChapter OneJiru AlemayehuNo ratings yet

- An Introduction To Neural Networks: Instituto Tecgraf PUC-Rio Nome: Fernanda Duarte Orientador: Marcelo GattassDocument45 pagesAn Introduction To Neural Networks: Instituto Tecgraf PUC-Rio Nome: Fernanda Duarte Orientador: Marcelo GattassGiGa GFNo ratings yet

- Deep Learning - DL-2Document44 pagesDeep Learning - DL-2Hasnain AhmadNo ratings yet

- Unit 2 - Machine LearningDocument19 pagesUnit 2 - Machine LearningGauri BansalNo ratings yet

- Artificial Neural NetworksDocument4 pagesArtificial Neural NetworksZUHAL MUJADDID SAMASNo ratings yet

- Unit IvDocument34 pagesUnit Ivdanmanworld443No ratings yet

- Classification by Backpropagation - A Multilayer Feed-Forward Neural Network - Defining A Network Topology - BackpropagationDocument8 pagesClassification by Backpropagation - A Multilayer Feed-Forward Neural Network - Defining A Network Topology - BackpropagationKingzlynNo ratings yet

- Unit 4Document38 pagesUnit 4Abhinav KaushikNo ratings yet

- Neural Network and Fuzzy LogicDocument46 pagesNeural Network and Fuzzy Logicdoc. safe eeNo ratings yet

- Feed Forward Neural NetworkDocument16 pagesFeed Forward Neural NetworkPandey AshmitNo ratings yet

- Neural NetworksDocument11 pagesNeural Networks16Julie KNo ratings yet

- Deep Learning Unit 2Document30 pagesDeep Learning Unit 2Aditya Pratap SinghNo ratings yet

- An Introduction To Artificial Neural Networks - by Srivignesh Rajan - Towards Data ScienceDocument11 pagesAn Introduction To Artificial Neural Networks - by Srivignesh Rajan - Towards Data ScienceRavan FarmanovNo ratings yet

- Artificial Neural NetworkDocument4 pagesArtificial Neural Networkreshma acharyaNo ratings yet

- Application of Back-Propagation Neural Network in Data ForecastDocument23 pagesApplication of Back-Propagation Neural Network in Data ForecastEngineeringNo ratings yet

- Assignment - 4Document24 pagesAssignment - 4Durga prasad TNo ratings yet

- An Example To Understand A Neural Network ModelDocument39 pagesAn Example To Understand A Neural Network ModelFriday JonesNo ratings yet

- Module1 ECO-598 AI & ML Aug 21Document45 pagesModule1 ECO-598 AI & ML Aug 21Soujanya NerlekarNo ratings yet

- BackpropagationDocument12 pagesBackpropagationali.nabeel246230No ratings yet

- UNIT-2 Foundations of Deep LearningDocument64 pagesUNIT-2 Foundations of Deep LearningbhavanaNo ratings yet

- Deep LearningDocument40 pagesDeep LearningDr. Dnyaneshwar KirangeNo ratings yet

- Neural NetworkDocument58 pagesNeural Networkarshia saeedNo ratings yet

- Unit 2Document18 pagesUnit 2Nitya ShuklaNo ratings yet

- Backpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningFrom EverandBackpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningNo ratings yet

- Percakapan BHS Inggris Penerimaan PasienDocument5 pagesPercakapan BHS Inggris Penerimaan PasienYulia WyazztNo ratings yet

- Database NotesDocument4 pagesDatabase NotesKanishka SeneviratneNo ratings yet

- Explanation Teks: How To Keep The Body HealthyDocument4 pagesExplanation Teks: How To Keep The Body HealthyremybonjarNo ratings yet

- BDU-BIT-Electromechanical Engineering Curriculum (Regular Program)Document187 pagesBDU-BIT-Electromechanical Engineering Curriculum (Regular Program)beselamu75% (4)

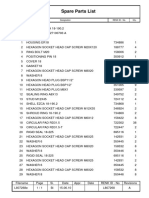

- L807268EDocument1 pageL807268EsjsshipNo ratings yet

- Drug-Induced Sleep Endoscopy (DISE)Document4 pagesDrug-Induced Sleep Endoscopy (DISE)Luis De jesus SolanoNo ratings yet

- EBOOK6131f1fd1229c Unit 3 Ledger Posting and Trial Balance PDFDocument44 pagesEBOOK6131f1fd1229c Unit 3 Ledger Posting and Trial Balance PDFYaw Antwi-AddaeNo ratings yet

- 4 Structure PDFDocument45 pages4 Structure PDFAnil SuryawanshiNo ratings yet

- Assignment 3: Course Title: ECO101Document4 pagesAssignment 3: Course Title: ECO101Rashik AhmedNo ratings yet

- Analogy - 10 Page - 01 PDFDocument10 pagesAnalogy - 10 Page - 01 PDFrifathasan13No ratings yet

- Review of The Householder's Guide To Community Defence Against Bureaucratic Aggression (1973)Document2 pagesReview of The Householder's Guide To Community Defence Against Bureaucratic Aggression (1973)Regular BookshelfNo ratings yet

- HET Neoclassical School, MarshallDocument26 pagesHET Neoclassical School, MarshallDogusNo ratings yet

- Neonatal Phototherapy: Operator ManualDocument16 pagesNeonatal Phototherapy: Operator ManualAbel FencerNo ratings yet

- Teaching Listening and Speaking in Second and Foreign Language Contexts (Kathleen M. Bailey)Document226 pagesTeaching Listening and Speaking in Second and Foreign Language Contexts (Kathleen M. Bailey)iamhsuv100% (1)

- QP English Viii 201920Document14 pagesQP English Viii 201920Srijan ChaudharyNo ratings yet

- Conscious Sedation PaediatricsDocument44 pagesConscious Sedation PaediatricsReeta TaxakNo ratings yet

- Fender Re-Issue 62 Jazzmaster Wiring DiagramDocument1 pageFender Re-Issue 62 Jazzmaster Wiring DiagrambenitoNo ratings yet

- Group1 App 005 Mini PTDocument8 pagesGroup1 App 005 Mini PTAngelito Montajes AroyNo ratings yet

- Garmin GNC 250xl Gps 150xlDocument2 pagesGarmin GNC 250xl Gps 150xltordo22No ratings yet

- IsomerismDocument60 pagesIsomerismTenali Rama KrishnaNo ratings yet

- Test Item Analysis Mathematics 6 - FIRST QUARTER: Item Wisdom Perseverance Gratitude Grace Total MPSDocument6 pagesTest Item Analysis Mathematics 6 - FIRST QUARTER: Item Wisdom Perseverance Gratitude Grace Total MPSQUISA O. LAONo ratings yet

- 2CSE60E14: Artificial Intelligence (3 0 4 3 2) : Learning OutcomesDocument2 pages2CSE60E14: Artificial Intelligence (3 0 4 3 2) : Learning OutcomesB. Srini VasanNo ratings yet

- Excel Subhadip NandyDocument9 pagesExcel Subhadip NandyNihilisticDelusionNo ratings yet

- Phys172 S20 Lab07 FinalDocument8 pagesPhys172 S20 Lab07 FinalZhuowen YaoNo ratings yet

- Unit - 5 SelectionDocument7 pagesUnit - 5 SelectionEhtesam khanNo ratings yet

- Time Value of MoneyDocument11 pagesTime Value of MoneyRajesh PatilNo ratings yet