
Speed-up InceptionV3 inference time up to 18x using Intel Core processor

Fernando Rodrigues Junior · 2018 · 5 min read

In this article you will learn how to speed up your InceptionV3 classification model and run inference on images in near real time using your Intel® Core processor and Intel® OpenVINO.

Let's speed up your inference by up to 18x. Are you ready?

If you have a problem that needs to run in near real time but you don't want to use a dedicated GPU, this article is for you.

Experiments were run on an Intel Core i7-7500U with TensorFlow 1.10 and OpenVINO v2018.3.343.

What is the magic?

It all comes down to model optimization! I used Intel OpenVINO to optimize the model and ran inference with its Inference Engine. It is interesting and easy to set up.

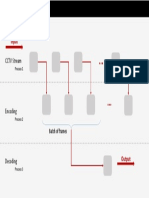

OpenVINO is a toolkit that allows developers to deploy pretrained deep learning models. It has two principal modules: the Model Optimizer and the Inference Engine.

Model Optimizer

A set of command-line tools that imports trained models from many deep learning frameworks, such as Caffe and TensorFlow (it supports over 100 public models).

It performs several tasks to optimize and shrink your model, including:

- Transforming your model into an Intermediate Representation (IR) that the Inference Engine can use.
- Model conversion: fusing operations, applying quantization to reduce data length, and preparing the data with channel reordering.
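Operation fusion is easiest to see on a small case. The NumPy sketch below folds an inference-mode batch-normalization step into the preceding fully connected layer, so two operations become one matrix multiply plus bias. It only illustrates the general idea of fusion; it is not the Model Optimizer's code, and the helpers `dense_then_batchnorm` and `fold_batchnorm` are hypothetical.

```python
import numpy as np


def dense_then_batchnorm(x, W, b, gamma, beta, mean, var, eps=1e-3):
    """Reference: a dense layer followed by inference-mode batch normalization."""
    y = x @ W + b
    return gamma * (y - mean) / np.sqrt(var + eps) + beta


def fold_batchnorm(W, b, gamma, beta, mean, var, eps=1e-3):
    """Fold the batch-norm parameters into new dense weights and bias."""
    scale = gamma / np.sqrt(var + eps)   # one scale factor per output unit
    W_folded = W * scale                 # scales each output column of W
    b_folded = (b - mean) * scale + beta
    return W_folded, b_folded


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))              # batch of 4 inputs with 8 features
W = rng.normal(size=(8, 3))
b = rng.normal(size=3)
gamma, beta = rng.normal(size=3), rng.normal(size=3)
mean, var = rng.normal(size=3), rng.uniform(0.5, 2.0, size=3)

W_f, b_f = fold_batchnorm(W, b, gamma, beta, mean, var)
fused = x @ W_f + b_f                    # one operation instead of two
reference = dense_then_batchnorm(x, W, b, gamma, beta, mean, var)
print(np.allclose(fused, reference))     # True
```

Because the batch-norm statistics are frozen at inference time, the fused layer gives the same result while skipping a full pass over the activations.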

You can find more information about the supported models in the OpenVINO documentation.

Inference Engine

An API-based library that runs inference on the platform of your choice: CPU, GPU, VPU, or FPGA.

- Execute different layers on different devices (for example, a GPU and selected layers on a CPU)

Please check the OpenVINO documentation to set up your SDK installation accordingly: https://software.intel.com/en-us/articles/OpenVINO-Install-Linux

Note that OpenVINO requires a 6th-generation or newer processor.

Let's get started!

In this experiment we are going to use an interesting pre-trained Keras model from Kaggle for protein classification (https://www.kaggle.com/kmader/rgb-transfer-learning-with-inceptionv3-for-protein/).

In order to use OpenVINO, there are some steps we need to go through:

1. Freeze the model if it is not in protobuf format (.pb)

2. Convert the model to an Intermediate Representation (IR)

3. Set up the Inference Engine code to run the IR

Freeze the model if it is not in protobuf format yet (.pb)

In this case, our model was saved in HDF5 (.h5) format, so we first need to load the model and save it as a TensorFlow checkpoint (.ckpt). It is also important to know the name of the output node, because we need it to freeze the graph.

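from keras.models import load_model
from keras import backend as K
import tensorflow as tf

# Load the trained Keras model and save its TensorFlow session as a checkpoint
model = load_model("model.h5")
sess = K.get_session()
saver = tf.train.Saver()
saver.save(sess, "./model.ckpt")

# Print the output node name, needed to freeze the graph
print(model.output.op.name)

The result is: dense_2/Sigmoid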

Now that we have the checkpoint files, we can freeze the graph using TensorFlow's freeze_graph.py script, which is generally located at:

your_python_installation_path/lib/python3.6/site-packages/tensorflow/python/tools/freeze_graph.py

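# Freeze the model
python your_python_installation_path/lib/python3.6/site-packages/tensorflow/python/tools/freeze_graph.py \
--input_meta_graph=model.ckpt.meta \
--input_checkpoint=model.ckpt \
--input_binary=true \
--output_node_names=dense_2/Sigmoid \
--output_graph=keras_frozen.pb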

Convert the model to an Intermediate Representation (IR)

You can generate the IR files with the TensorFlow Model Optimizer script (mo_tf.py) from the OpenVINO SDK. It is important to set the input shape according to your model topology. Here we are working with InceptionV3, so the input shape is [1, 299, 299, 3].

python3 /opt/intel/computer_vision_sdk/deployment_tools/model_optimizer/mo_tf.py \
--input_model keras_frozen.pb \
--input_shape [1,299,299,3] \
--data_type FP32

# The following files will be created:
keras_frozen.bin
keras_frozen.xml

# You can optimize your model even further by using data type FP16
# (check that your accuracy is not reduced too much!)
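If you want both precisions side by side, a small script can assemble the two Model Optimizer runs. This is a minimal sketch, assuming the mo_tf.py path from the command above and the Model Optimizer's `--output_dir` option to keep the FP32 and FP16 IRs in separate folders; `mo_command` and `convert_all` are hypothetical helpers. With `dry_run=True` it only prints the commands.

```python
import shlex
import subprocess

# Path taken from the article; adjust it to your own OpenVINO installation.
MO_TF = "/opt/intel/computer_vision_sdk/deployment_tools/model_optimizer/mo_tf.py"


def mo_command(model_pb, input_shape, data_type, output_dir):
    """Build the mo_tf.py argument list for one precision (FP32 or FP16)."""
    shape = "[" + ",".join(str(d) for d in input_shape) + "]"
    return [
        "python3", MO_TF,
        "--input_model", model_pb,
        "--input_shape", shape,
        "--data_type", data_type,
        "--output_dir", output_dir,
    ]


def convert_all(model_pb, input_shape, dry_run=True):
    """Create one IR per precision, each in its own directory (fp32/, fp16/)."""
    commands = [mo_command(model_pb, input_shape, dt, dt.lower())
                for dt in ("FP32", "FP16")]
    for cmd in commands:
        print(shlex.join(cmd))             # show the exact shell command
        if not dry_run:
            subprocess.run(cmd, check=True)
    return commands


cmds = convert_all("keras_frozen.pb", (1, 299, 299, 3))
```

Passing the arguments as a list avoids shell quoting problems with the brackets in the input shape; `shlex.join` (Python 3.8+) is only used to print a copy-pasteable version.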


Set up the Inference Engine code to run the IR

There are many Inference Engine (IE) samples in the OpenVINO SDK that show how to load the IR files and run inference. You can find them here:

/opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/inference_engine/samples/python_samples

One important step is preprocessing. OpenVINO uses the channels-first data layout (CHW), so you will probably need to transpose your image array (and add a batch dimension) before feeding it into the Inference Engine. Here is an example preprocessing method:

import numpy as np
from PIL import Image

def pre_process_image(imagePath):
    # Model input format
    n, c, h, w = [1, 3, 299, 299]
    image = Image.open(imagePath)
    # PIL expects the target size as (width, height)
    processedImg = image.resize((w, h), resample=Image.BILINEAR)
    # Normalize to keep data between 0 and 1
    processedImg = np.array(processedImg) / 255.0
    # Change data layout from HWC to CHW
    processedImg = processedImg.transpose((2, 0, 1))
    # Add the batch dimension: (1, 3, 299, 299)
    processedImg = processedImg.reshape((n, c, h, w))
    return image, processedImg, imagePath
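The transpose matters more than it might seem: reshaping an HWC array straight to NCHW gives the right shape but scrambles the pixels. The small NumPy check below demonstrates this on a synthetic 2 x 3 image; `hwc_to_nchw` is a hypothetical helper that mirrors the last two steps of `pre_process_image`.

```python
import numpy as np


def hwc_to_nchw(img_hwc):
    """Convert one H x W x C image into a 1 x C x H x W batch."""
    chw = img_hwc.transpose((2, 0, 1))     # move the channel axis to the front
    return chw.reshape((1,) + chw.shape)   # add the batch dimension


# A tiny 2 x 3 RGB image whose values encode (row, col, channel)
h, w, c = 2, 3, 3
img = np.array([[[100 * y + 10 * x + ch for ch in range(c)]
                 for x in range(w)] for y in range(h)])

nchw = hwc_to_nchw(img)
print(nchw.shape)                          # (1, 3, 2, 3)
print(nchw[0, 2, 1, 0] == img[1, 0, 2])    # True: channel 2, row 1, col 0

# A plain reshape keeps the right shape but mixes up the pixels
wrong = img.reshape((1, c, h, w))
print(np.array_equal(wrong, nchw))         # False
```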

Finally, let's get into the inference itself.

Note that you can run on your Intel CPU, GPU, MYRIAD (Movidius), or FPGA just by changing the device variable! You will be amazed at how fast your algorithm runs.

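from openvino.inference_engine import IENetwork, IEPlugin
import numpy as np

model_xml = "keras_frozen.xml"
model_bin = "keras_frozen.bin"

# Plugin initialization for the specified device
# Devices: GPU, CPU, MYRIAD
device = "GPU"
plugin = IEPlugin(device=device, plugin_dirs=None)

# Read IR
net = IENetwork.from_ir(model=model_xml, weights=model_bin)
input_blob = next(iter(net.inputs))

# Load network to the plugin
exec_net = plugin.load(network=net)
del net

# Run inference (fileName is the path of the image to classify)
image, processedImg, imagePath = pre_process_image(fileName)
res = exec_net.infer(inputs={input_blob: processedImg})

# Access the results and get the index of the highest confidence score
res = res['dense_2/Sigmoid']
idx = np.argsort(res[0])[-1]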
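To check that FP16 does not cost too much accuracy, run the same images through the FP32 and FP16 IRs and compare the score arrays. This sketch assumes you have collected the 'dense_2/Sigmoid' outputs of both runs into arrays of shape (number of images, number of classes); `compare_outputs` is a hypothetical helper, and the random arrays below only simulate FP16 rounding rather than a real FP16 run.

```python
import numpy as np


def compare_outputs(ref_scores, test_scores):
    """Compare two score arrays of shape (num_images, num_classes)."""
    ref = np.asarray(ref_scores, dtype=np.float32)
    test = np.asarray(test_scores, dtype=np.float32)
    max_abs_diff = float(np.max(np.abs(ref - test)))
    # Same idea as np.argsort(res[0])[-1], applied to every image at once
    top1_agreement = float(np.mean(ref.argmax(axis=1) == test.argmax(axis=1)))
    return max_abs_diff, top1_agreement


# Synthetic stand-ins for the outputs of an FP32 run and an FP16 run
rng = np.random.default_rng(42)
fp32_scores = rng.uniform(size=(100, 10)).astype(np.float32)
fp16_scores = fp32_scores.astype(np.float16).astype(np.float32)  # simulated FP16 rounding

diff, agreement = compare_outputs(fp32_scores, fp16_scores)
print(f"max |diff| = {diff:.5f}, top-1 agreement = {agreement:.2%}")
```

A small maximum difference with near-100% top-1 agreement suggests FP16 is safe for your data; a drop in agreement is the signal to stay with FP32.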

That's it! Now you are ready to enjoy fast inference. If you have some extra time, compare the stock TensorFlow version with the OpenVINO one. Here are some results I got running this model.

Figure: InceptionV3 Inference - Core i7-7500U. Throughput (images/second) for TensorFlow + CPU (FP32), OpenVINO + CPU (FP32), OpenVINO + iGPU (FP32), and OpenVINO + iGPU (FP16).
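To reproduce a comparison like this on your own hardware, time both pipelines the same way: run a few untimed warm-up inferences, then count images per second over a fixed number of runs. This is a minimal sketch; `images_per_second` is a hypothetical helper, the callables in the comments are placeholders, and the speed-up is simply the ratio of the two throughputs. Keep in mind that the Keras/TensorFlow model expects NHWC input, while the Inference Engine expects the NCHW array produced by `pre_process_image`.

```python
import time


def images_per_second(infer_fn, inputs, warmup=5, runs=50):
    """Measure the throughput of infer_fn, called once per input image."""
    for i in range(warmup):                 # warm-up runs are not timed
        infer_fn(inputs[i % len(inputs)])
    start = time.perf_counter()
    for i in range(runs):
        infer_fn(inputs[i % len(inputs)])
    elapsed = time.perf_counter() - start
    return runs / elapsed if elapsed > 0 else float("inf")


# Example with a dummy workload; swap in your own inference callables, e.g.
#   lambda img: exec_net.infer(inputs={input_blob: img})   # OpenVINO (NCHW input)
#   lambda img: keras_model.predict(img)                   # stock TensorFlow (NHWC, hypothetical name)
dummy_inputs = [list(range(1000))] * 4
throughput = images_per_second(lambda x: sum(v * v for v in x), dummy_inputs)
print(f"{throughput:.1f} images/second")
```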

For more information, check the OpenVINO documentation.

Follow me on LinkedIn: https://www.linkedin.com/in/fernando-rodrigt 7390b991
