2022 - Week - 1 - Supplement of Conditional Probability

Uploaded by seungnam kim

Supplement of conditional probability

1. Probability space

Consider two sets, each partitioned into mutually exclusive events:

$$\text{mind} = \{\text{happy}, \text{sad}\} = \{M_h, M_s\}$$

and

$$\text{weather} = \{\text{sunny}, \text{rainy}, \text{foggy}\} = \{W_s, W_r, W_f\}$$

2. The intersection (or joint) probability

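Probability table (the last column and the last row hold the marginals $P(M)$ and $P(W)$)

| | sunny | rainy | foggy | $P(M)$ |
|---|---|---|---|---|
| happy | 0.5 | 0.05 | 0.05 | 0.6 |
| sad | 0.2 | 0.15 | 0.05 | 0.4 |
| $P(W)$ | 0.7 | 0.2 | 0.1 | |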

For example, $P(M_h \cap W_s) = 0.5$ and $P(M_s \cap W_f) = 0.05$.

1) Check the legitimacy

$$1 = P(M_h) + P(M_s) = \sum_{i=1}^{3} P(M_h \cap W_i) + \sum_{i=1}^{3} P(M_s \cap W_i)$$
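The legitimacy check can be sketched in a few lines of Python. This is only an illustration: the dictionary `joint` and the helper `marginal_mind` are hypothetical names, and the numbers are copied from the probability table.

```python
# Joint probabilities P(mind ∩ weather), copied from the probability table.
joint = {
    ("happy", "sunny"): 0.5, ("happy", "rainy"): 0.05, ("happy", "foggy"): 0.05,
    ("sad", "sunny"): 0.2, ("sad", "rainy"): 0.15, ("sad", "foggy"): 0.05,
}

def marginal_mind(mind):
    """Sum the joint probabilities over all weather events for one mind state."""
    return sum(p for (m, _), p in joint.items() if m == mind)

p_happy = marginal_mind("happy")
p_sad = marginal_mind("sad")
total = p_happy + p_sad

# Rounded for display because floating-point sums are not exact.
print(f"P(M_h) = {p_happy:.2f}, P(M_s) = {p_sad:.2f}, total = {total:.2f}")
```

A total of 1 (up to floating-point rounding) confirms that the table is a legitimate probability assignment.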

2) Conditional probability

You observe the weather and use it to infer your state of mind:

$$P(M_h \mid W_s) = \frac{P(M_h \cap W_s)}{P(W_s)} = \frac{0.5}{0.7}$$
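As a rough numerical check, the same division can be carried out for both mind states. The names `joint_sunny` and `mind_given_sunny` are made up for this sketch; the values come from the "sunny" column of the probability table.

```python
# Column "sunny" of the joint table: P(M ∩ W_s) for each mind state M.
joint_sunny = {"happy": 0.5, "sad": 0.2}

# Marginal P(W_s) is the column sum.
p_sunny = sum(joint_sunny.values())

# P(M | W_s) = P(M ∩ W_s) / P(W_s)
mind_given_sunny = {m: p / p_sunny for m, p in joint_sunny.items()}

print(f"P(happy | sunny) = {mind_given_sunny['happy']:.3f}")  # 0.714
print(f"P(sad | sunny)   = {mind_given_sunny['sad']:.3f}")    # 0.286
```

The two conditional probabilities add up to 1, as they must once the weather is fixed to sunny.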

You are confined to a room and cannot see outside. Given that you are happy, predict the weather:

$$P(W_s \mid M_h) = \frac{P(W_s \cap M_h)}{P(M_h)} = \frac{0.5}{0.6}$$

$$P(W_r \mid M_h) = \frac{P(W_r \cap M_h)}{P(M_h)} = \frac{0.05}{0.6}$$

$$P(W_f \mid M_h) = \frac{P(W_f \cap M_h)}{P(M_h)} = \frac{0.05}{0.6}$$
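The three ratios can be evaluated exactly with Python's `fractions` module. This is a minimal sketch; `joint_happy` and `weather_given_happy` are illustrative names, and the inputs are the "happy" row of the probability table.

```python
from fractions import Fraction

# Row "happy" of the joint table: P(W ∩ M_h) for each weather event W.
joint_happy = {
    "sunny": Fraction("0.5"),
    "rainy": Fraction("0.05"),
    "foggy": Fraction("0.05"),
}

# Marginal P(M_h) is the row sum (3/5, i.e. 0.6).
p_happy = sum(joint_happy.values())

# P(W | M_h) = P(W ∩ M_h) / P(M_h) for every weather event W.
weather_given_happy = {w: p / p_happy for w, p in joint_happy.items()}

for w, p in weather_given_happy.items():
    print(f"P({w} | happy) = {p}")  # 5/6, 1/12, 1/12

print("sum =", sum(weather_given_happy.values()))  # sum = 1
```

Sunny is the most probable weather given a happy mind, and the three conditional probabilities sum to exactly 1.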

Check the legitimacy of the marginal probability

$$P(B_j) = \sum_{i=1}^{m} P(A_i \cap B_j) = \sum_{i=1}^{m} P(B_j \mid A_i)\, P(A_i)$$
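As a worked instance, assume the mind events play the role of $A_i$ and sunny weather plays the role of $B_j$ (this assignment is a choice made here for illustration). Using the values in the probability table:

$$P(W_s) = P(W_s \mid M_h)\,P(M_h) + P(W_s \mid M_s)\,P(M_s) = \frac{0.5}{0.6}\cdot 0.6 + \frac{0.2}{0.4}\cdot 0.4 = 0.5 + 0.2 = 0.7$$

This agrees with the "sunny" column total in the table.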

%% Kim’s comment :

Bayes' rule is very important nowadays and is indispensable in ML. We should be familiar with this rule.

$$P(A_i \mid B) = \frac{P(B \mid A_i)\, P(A_i)}{P(B)}$$

The interpretation may be

- $P(A_i \mid B)$: the probability of $A_i$ given $B$, i.e., the estimate of $A_i$ after $B$ has been measured == posterior probability

- $P(A_i)$: the original probability of event $A_i$, before any measurement == prior probability

- Since

$$P(B) = \sum_{j=1}^{n} P(B \mid A_j)\, P(A_j)$$

$P(B)$ is a marginal probability. It is constant with respect to the event $A_i$ (e.g., the probability of sunny weather in the example above).

- $P(B \mid A_i)$ may be called the conditional marginal probability, i.e., it is a marginal over $B$ conditioned on $A_i$.
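To see the rule at work on the mind/weather example, the sketch below takes the mind events as $A_i$ and sunny weather as $B$; that mapping and the variable names (`prior`, `likelihood_sunny`, `evidence`, `posterior`) are assumptions made for illustration. The inputs are derived from the probability table.

```python
from fractions import Fraction

# Prior P(M_i): the row marginals of the probability table.
prior = {"happy": Fraction("0.6"), "sad": Fraction("0.4")}

# P(W_s | M_i) = P(W_s ∩ M_i) / P(M_i), using the "sunny" column of the table.
likelihood_sunny = {
    "happy": Fraction("0.5") / prior["happy"],
    "sad": Fraction("0.2") / prior["sad"],
}

# P(W_s) by the sum over all mind states; it does not depend on which M_i we ask about.
evidence = sum(likelihood_sunny[m] * prior[m] for m in prior)

# Bayes' rule: P(M_i | W_s) = P(W_s | M_i) P(M_i) / P(W_s)
posterior = {m: likelihood_sunny[m] * prior[m] / evidence for m in prior}

print(evidence)                             # 7/10
print(posterior["happy"], posterior["sad"])  # 5/7 2/7
```

The posterior for "happy" is 5/7, which equals the 0.5/0.7 obtained directly from the joint table.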

You might also like

- STAT 400 Midterm 1 Cheat SheetDocument4 pagesSTAT 400 Midterm 1 Cheat SheetterencezhaoNo ratings yet

- BS EN 50310-2010 BondingDocument40 pagesBS EN 50310-2010 Bondingruhuna01380% (5)

- Assistant Director (Environment) BPS-17Document23 pagesAssistant Director (Environment) BPS-17nomanNo ratings yet

- Catalogo de Pecas Carregadeira Pneus Volvo L120e PDFDocument1,172 pagesCatalogo de Pecas Carregadeira Pneus Volvo L120e PDFPedro Matheus90% (10)

- Theories of Ore GenesisDocument10 pagesTheories of Ore GenesisIrwan EPNo ratings yet

- Oil & Fat AnalysisDocument59 pagesOil & Fat AnalysisNurshaqina SufianNo ratings yet

- Basic Statistics and Probability TheoryDocument45 pagesBasic Statistics and Probability TheoryEduard DănilăNo ratings yet

- The Negative Binomial Distribution: Univariate DistributionsDocument6 pagesThe Negative Binomial Distribution: Univariate Distributionsbig clapNo ratings yet

- Answer Sheet To Prob 2Document3 pagesAnswer Sheet To Prob 2Julian BaybayonNo ratings yet

- Review: Mostly Probability and Some StatisticsDocument35 pagesReview: Mostly Probability and Some StatisticsBadazz doodNo ratings yet

- Lect1 ITCDocument3 pagesLect1 ITCNabanit SarkarNo ratings yet

- Lec4 MissingDocument12 pagesLec4 MissingdayoNo ratings yet

- Bs Lect 10Document18 pagesBs Lect 10jasonnumahnalkelNo ratings yet

- Lecture4 DONT USEDocument13 pagesLecture4 DONT USELamiaNo ratings yet

- EUBS QBM Lecture Notes 1Document2 pagesEUBS QBM Lecture Notes 1Rajdeep SinghNo ratings yet

- Lec 5 STA 403 Non Parametric MethodsDocument4 pagesLec 5 STA 403 Non Parametric MethodsKibetNo ratings yet

- Project STT 311Document79 pagesProject STT 311Joseph AkanbiNo ratings yet

- Probability Theory and Random Processes - Lecture-03Document12 pagesProbability Theory and Random Processes - Lecture-03mail2megp6734No ratings yet

- A Review of Probability:: 1 ConceptsDocument2 pagesA Review of Probability:: 1 ConceptsrakeshNo ratings yet

- CS229 - Probability Theory Review: Taide Ding, Fereshte KhaniDocument37 pagesCS229 - Probability Theory Review: Taide Ding, Fereshte Khanisid sNo ratings yet

- hw2 Sol PDFDocument10 pageshw2 Sol PDFHadeel hdeelNo ratings yet

- Probability Theory and Its ApplicationsDocument9 pagesProbability Theory and Its ApplicationsElianaNo ratings yet

- Lecture 2 Probabilistic RoboticsDocument35 pagesLecture 2 Probabilistic Roboticsf2020019015No ratings yet

- Eigenvalue of Pq-Laplace System Along The Powers oDocument10 pagesEigenvalue of Pq-Laplace System Along The Powers osoumyajit ghoshNo ratings yet

- Chapter 2: Belief, Probability, and Exchangeability: Lecture 1: Probability, Bayes Theorem, DistributionsDocument17 pagesChapter 2: Belief, Probability, and Exchangeability: Lecture 1: Probability, Bayes Theorem, Distributionsxiuxian liNo ratings yet

- IntroductionDocument35 pagesIntroductionzakizadehNo ratings yet

- An Overview of Bayesian EconometricsDocument30 pagesAn Overview of Bayesian Econometrics6doitNo ratings yet

- Conditional ProbabilityDocument5 pagesConditional ProbabilityAlejo valenzuelaNo ratings yet

- Statistical Data Analysis: PH4515: 1 Course StructureDocument5 pagesStatistical Data Analysis: PH4515: 1 Course StructurePhD LIVENo ratings yet

- 02 ProbIntro 2020 AnnotatedDocument44 pages02 ProbIntro 2020 AnnotatedEureka oneNo ratings yet

- Artificial Intelligence: Adina Magda FloreaDocument36 pagesArtificial Intelligence: Adina Magda FloreaPablo Lorenzo Muños SanchesNo ratings yet

- Statistics 1 Revision SheetDocument9 pagesStatistics 1 Revision SheetZira GreyNo ratings yet

- Chapter 4 - ProbabilityDocument2 pagesChapter 4 - ProbabilitySayar HeinNo ratings yet

- Solution Manual For Introductory Statistics 9th by MannDocument25 pagesSolution Manual For Introductory Statistics 9th by MannKatelynWebsterikzj100% (46)

- Statistical Inference III: Mohammad Samsul AlamDocument25 pagesStatistical Inference III: Mohammad Samsul AlamMd Abdul BasitNo ratings yet

- ML Cheat SheetDocument74 pagesML Cheat SheetSasi sasi100% (1)

- Lecture 5Document6 pagesLecture 5Martin WolffeNo ratings yet

- Probability-The Science of Uncertainty and DataDocument4 pagesProbability-The Science of Uncertainty and DataAlmighty59No ratings yet

- Week 1: Review of Probability and StatisticsDocument64 pagesWeek 1: Review of Probability and StatisticsSam SungNo ratings yet

- 2008 MOP Blue Polynomials-IDocument3 pages2008 MOP Blue Polynomials-IWeerasak BoonwuttiwongNo ratings yet

- Handout Part III Riemannian ManifoldsDocument61 pagesHandout Part III Riemannian Manifolds效法羲和No ratings yet

- Uncertainty: CSE-345: Artificial IntelligenceDocument30 pagesUncertainty: CSE-345: Artificial IntelligenceFariha OisyNo ratings yet

- SC MX I I Math 202324 ProbabilityDocument12 pagesSC MX I I Math 202324 Probabilitypythoncurry17No ratings yet

- Exercise 2Document13 pagesExercise 2Филип ЏуклевскиNo ratings yet

- STAT 112 Session 1B - MDocument36 pagesSTAT 112 Session 1B - MProtocol WonderNo ratings yet

- STAT 516 Course Notes Part 0: Review of STAT 515: 1 ProbabilityDocument21 pagesSTAT 516 Course Notes Part 0: Review of STAT 515: 1 ProbabilityMariam LortkipanidzeNo ratings yet

- Lec 2Document27 pagesLec 2DharamNo ratings yet

- Prob BackgroundDocument23 pagesProb BackgroundLnoe torresNo ratings yet

- SCR 1Document4 pagesSCR 1Salbani ChakraborttyNo ratings yet

- Quantum Operations: FIXME: Insert Three Figures From Matthias SlidesDocument3 pagesQuantum Operations: FIXME: Insert Three Figures From Matthias SlidesDaniel Sebastian PerezNo ratings yet

- Problem Set 9 SolutionsDocument5 pagesProblem Set 9 Solutionsglowygamingno1No ratings yet

- 22 Dependent & Independent Events (Notes)Document2 pages22 Dependent & Independent Events (Notes)mohamad.elali01No ratings yet

- Indirectutility PDFDocument2 pagesIndirectutility PDFSalman HamidNo ratings yet

- Indirect UtilityDocument2 pagesIndirect UtilityMd IstiakNo ratings yet

- Indirectutility PDFDocument2 pagesIndirectutility PDFRashidAliNo ratings yet

- Indirectutility PDFDocument2 pagesIndirectutility PDFMaimoona GhaniNo ratings yet

- SOR1211 - ProbabilityDocument17 pagesSOR1211 - ProbabilityMatthew CurmiNo ratings yet

- Geng5507 Stat Tutsheet 1 SolutionsDocument8 pagesGeng5507 Stat Tutsheet 1 SolutionsKay KaiNo ratings yet

- Basic Probability Theory: Lect04.ppt S-38.145 - Introduction To Teletraffic Theory - Spring 2005Document50 pagesBasic Probability Theory: Lect04.ppt S-38.145 - Introduction To Teletraffic Theory - Spring 2005Mann OtNo ratings yet

- Bayesian Basics DIWDocument44 pagesBayesian Basics DIWStevensNo ratings yet

- Introduction To Mobile RoboticsDocument36 pagesIntroduction To Mobile RoboticsAhmad RamadhaniNo ratings yet

- Poisson Probability DistributionDocument4 pagesPoisson Probability DistributionAbdul TukurNo ratings yet

- Lecture2 PDFDocument126 pagesLecture2 PDFmoraesNo ratings yet

- Stat 311: HW 4, Chapters 4 & 5, Solutions: Fritz ScholzDocument3 pagesStat 311: HW 4, Chapters 4 & 5, Solutions: Fritz ScholzjohnNo ratings yet

- The Equidistribution Theory of Holomorphic Curves. (AM-64), Volume 64From EverandThe Equidistribution Theory of Holomorphic Curves. (AM-64), Volume 64No ratings yet

- Bliley P/N: Nvg47A1282: Rohs Compliant ProductDocument3 pagesBliley P/N: Nvg47A1282: Rohs Compliant ProductGabrielitoNo ratings yet

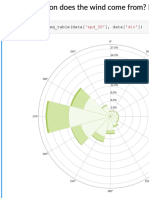

- Predicting The Wind: Data Science in Wind Resource AssessmentDocument61 pagesPredicting The Wind: Data Science in Wind Resource AssessmentFlorian RoscheckNo ratings yet

- WjjwjwnsDocument30 pagesWjjwjwnsElaiza SolimanNo ratings yet

- AmmaDocument3 pagesAmmaABP SMART CLASSESNo ratings yet

- Assignment For Diwali BreakDocument16 pagesAssignment For Diwali BreakArshNo ratings yet

- Pyrolysis of Poultry Litter Fractions For Bio-Char and Bio-Oil ProductionDocument8 pagesPyrolysis of Poultry Litter Fractions For Bio-Char and Bio-Oil ProductionMauricio Escobar LabraNo ratings yet

- Biscuits and Cookies: Sunil KumarDocument13 pagesBiscuits and Cookies: Sunil KumarAlem Abebe AryoNo ratings yet

- DCU RPG - ErrataDocument6 pagesDCU RPG - Erratasergio rodriguezNo ratings yet

- 1a Utilitarianism and John Stuart Mill 5.7 Quantity vs. Quality of PleasureDocument10 pages1a Utilitarianism and John Stuart Mill 5.7 Quantity vs. Quality of Pleasuredili ako iniNo ratings yet

- Passive VoiceDocument4 pagesPassive Voicenguyen minh chanhNo ratings yet

- Classical HomocystinuriaDocument34 pagesClassical Homocystinuriapriyanshu mathurNo ratings yet

- ABB Wire Termination Catalogue EN CANDocument254 pagesABB Wire Termination Catalogue EN CANAlvinNo ratings yet

- Winsor Pilates - Tips, and Some Exercises To DoDocument8 pagesWinsor Pilates - Tips, and Some Exercises To DoudelmarkNo ratings yet

- P5812P0671 IntGL Cat Vertmax Set21 ENG LRDocument32 pagesP5812P0671 IntGL Cat Vertmax Set21 ENG LRglobalcosta42No ratings yet

- SHREKDocument59 pagesSHREKleonidsitnikNo ratings yet

- Application Note CORR-4 PDFDocument15 pagesApplication Note CORR-4 PDFaneesh19inNo ratings yet

- Schneider Sustainability Deck 05.23.23Document16 pagesSchneider Sustainability Deck 05.23.23SchneiderNo ratings yet

- Polaroid FLM1911Document30 pagesPolaroid FLM1911videosonNo ratings yet

- Prostructure SuiteDocument8 pagesProstructure SuiteArshal AzeemNo ratings yet

- 966f Interactivo Esquema ElectricDocument8 pages966f Interactivo Esquema ElectricJavierNo ratings yet

- Piping TableDocument59 pagesPiping TableExsan Othman100% (2)

- LogPerAntenna For RDFDocument4 pagesLogPerAntenna For RDFDoan HoaNo ratings yet

- Wcfs2019 FlyerDocument10 pagesWcfs2019 FlyerZhi Yung TayNo ratings yet

- Chapter-12 - Aldehydes-Ketones-and-Carboxylic-Acids Important QuestionDocument13 pagesChapter-12 - Aldehydes-Ketones-and-Carboxylic-Acids Important QuestionPonuNo ratings yet

- A Wee Drop of Amber NectarDocument2 pagesA Wee Drop of Amber NectarJuan Pablo Olano CastilloNo ratings yet