Volume 8, Issue 5, May – 2023 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Character-Based Video Summarization

Abhijith Suresh
Information Technology
Rajagiri School of Engineering & Technology
Kakkanad, Kerala

Aevin Tom Anish
Information Technology
Rajagiri School of Engineering & Technology
Kakkanad, Kerala

Simon Alexander
Information Technology
Rajagiri School of Engineering & Technology
Kakkanad, Kerala

Sreelakshmi S
Information Technology
Rajagiri School of Engineering & Technology
Kakkanad, Kerala

Tinku Soman Jacob
Asst. Professor, Information Technology
Rajagiri School of Engineering & Technology
Kakkanad, Kerala

Abstract:- The landscape of video production and consumption on social media platforms has changed significantly with the widespread use of the internet and reasonably priced video capture devices. By producing a brief description of each video, video summarization helps viewers quickly understand its content. However, standard paging techniques can place a severe burden on computer systems, while manual extraction can be time-consuming and miss a large amount of content. Videos on social media platforms are also typically accompanied by rich textual content, such as subtitles or bullet-screen comments, which provides a multi-view description of the video. To that end, a novel framework for jointly modelling visual and textual information is proposed. Characters are first located indiscriminately using detection techniques and then identified by a re-identification module to extract probable key-frames, which are aggregated into a summary. The subtitles and bullet-screen comments are also used as multi-source textual information to create a final text summary of the target character from the input video.

Keywords:- Video, Summary, Subtitles, Bullet-Screen, Frames.

I. INTRODUCTION

The widespread use of the internet and affordable video capturing devices has dramatically changed the landscape of video creation and consumption on social media platforms. The purpose of video summaries varies considerably depending on the application scenario. For sports, viewers want to see moments that are critical to the outcome of a game, whereas for surveillance, video summaries need to contain scenes that are unusual and noteworthy. The application scenarios grow as more videos are created; e.g., there are new types of videos such as video game live streaming and video blogs (vlogs). This has led to a new problem in video summarization, as different types of videos have different characteristics and viewers have particular demands for summaries. Such a variety of applications has stimulated heterogeneous research in this field.

Unfortunately, manual summarization can be time-consuming, with a high missing rate. Fans are often keen on collecting the clips of their favourite star from movies or TV series, but printed summaries of content are hard to create and tedious to read, whereas video summaries offer a visual treat. Therefore, adequate techniques to automatically extract character-oriented summaries are urgently required. The character-oriented video summarization task is quite different from traditional video summarization. An ordinary video summary is expected to consist of the important or interesting clips of a long video, whereas a character-oriented video summary should consist of the clips in which a specific character appears. Thus, character-oriented summarization requires a person search, that is, identifying the clips of a specific person in a long video. The person search task has been tackled by, for example, joint modelling of detection and re-identification, or memory-guided models. However, these prior techniques are designed for a different scenario, e.g. surveillance video analysis, and mainly address person search with a relatively static pose and background, or even similar clothing. In character-oriented summarization, both the background and the poses of characters change constantly, and characters wear different clothing in different scenes, which greatly increases the difficulty. Current person search techniques therefore need to be enhanced further. At the same time, videos on social media platforms are usually accompanied by rich textual information, which may benefit the understanding of media content. In particular, with the so-called "bullet-screen comments" (i.e., comments flying across the screen like bullets), namely the time-synchronized feedback of massive numbers of users, a more comprehensive or even subjective description can be achieved, which results in more explicit cues to capture the characters.

To that end, this project proposes a novel framework for jointly modelling visual and textual information for character-oriented summarization. Specifically, we first locate characters indiscriminately through detection methods, then identify these characters via the re-identification module to extract potential key-frames, and finally aggregate the frames into a summary. Moreover, multi-source textual information, i.e., the subtitles and bullet-screen comments, is utilized. Experiments on real-world datasets validate that this solution outperforms several state-of-the-art baselines and further reveal some rules of the semantic matching between characters and textual information.

II. LITERATURE SURVEY

A. CNN and HEVC Video Coding Features for Static Video Summarization
This paper addresses video summarization by automatically selecting keyframes, which is the more common of the two main techniques for summarizing videos. Examining all frames within a video for selection can be slow and waste time and computational resources on redundant or similar frames. Additionally, it is important to reduce the feature space to accelerate the process and ensure that only meaningful features are considered. To address these issues, the study utilizes machine learning techniques, such as convolutional neural networks and random forests, which have gained popularity in recent years for image and video processing tasks.

With the advancement of the High Efficiency Video Coding (HEVC) standard, the deep learning community often overlooks the potential of the information contained in the HEVC video bitstream. This research aims to address this gap by using low-level HEVC features in combination with CNN features to aid video summarization. The study also introduces a new method for reducing the dimension of the feature space obtained from CNNs using stepwise regression, as well as a novel approach for eliminating similar frames based on HEVC features.

B. A memory network approach for story-based temporal summarization of 360 videos
This paper proposes a method for creating a summary of a 360-degree video based on the story being told in the video. The method uses a memory network to analyze the content and structure of the video and select keyframes that are important for telling the story. The selected keyframes are then assembled into a summary video that tells the story in a condensed form.

The authors propose using their method for summarizing 360-degree videos that are too long or contain too much excess motion to be easily interpretable. They also suggest that their method could be used to create summaries of other types of videos, such as traditional flat videos or live-streaming videos. Overall, the proposal put forward in this paper is a method for creating summaries of 360-degree videos that focus on the content of the video and the story being told while maintaining a coherent narrative structure.

C. Scene Summarization via Motion Normalization
This paper proposes a method for creating a summary of a 360-degree video based on the story being told in the video. The method uses a memory network to analyze the content and structure of the video and select keyframes that are important for telling the story. The selected keyframes are then assembled into a summary video that tells the story in a condensed form.

The authors propose using their method for summarizing 360-degree videos that are too long or contain too much excess motion to be easily interpretable. They also suggest that their method could be used to create summaries of other types of videos, such as traditional flat videos or live-streaming videos. Overall, the proposal put forward in this paper is a method for creating summaries of 360-degree videos that focus on the content of the video and the story being told while maintaining a coherent narrative structure.

D. Similarity Based Block Sparse Subset Selection for Video Summarization
This paper proposes a method for creating a summary of a 360-degree video based on the story being told in the video. The method uses a memory network to analyze the content and structure of the video and select keyframes that are important for telling the story. The selected keyframes are then assembled into a summary video that tells the story in a condensed form.

The authors propose using their method for summarizing 360-degree videos that are too long or contain too much excess motion to be easily interpretable. They also suggest that their method could be used to create summaries of other types of videos, such as traditional flat videos or live-streaming videos. Overall, the proposal put forward in this paper is a method for creating summaries of 360-degree videos that focus on the content of the video and the story being told while maintaining a coherent narrative structure.

E. HSA-RNN: Hierarchical structure-adaptive RNN for video summarization
This paper proposes a method for creating a summary of a 360-degree video based on the story being told in the video. The method uses a memory network to analyze the content and structure of the video and select keyframes that are important for telling the story. The selected keyframes are then assembled into a summary video that tells the story in a condensed form.

The authors propose using their method for summarizing 360-degree videos that are too long or contain too much excess motion to be easily interpretable. They also suggest that their method could be used to create summaries of other types of videos, such as traditional flat videos or live-streaming videos. Overall, the proposal put forward in this paper is a method for creating summaries of 360-degree videos that focus on the content of the video and the story being told while maintaining a coherent narrative structure.

III. METHODS

A. Identification
The detection module seeks to identify all character-containing regions of interest (RoIs). It uses the Faster R-CNN detector, which is known for its capacity to find objects of various sizes in a variety of scenarios. Additionally, to address overfitting and inference-time mismatch and to improve performance, the Cascade R-CNN detector is used. The detectors can be swapped as necessary. At this stage, all characters are located without distinguishing between them: the detection module only determines whether or not a character is present in an RoI. Sufficient character-oriented frame training data ensures the classifier's performance. As shown in Figure 3.4, Faster R-CNN uses a Region Proposal Network (RPN) to create region proposals. Every proposal goes through RoI pooling, which extracts a fixed-length feature vector from it; the vector is subsequently classified using Fast R-CNN. The results provide class scores and bounding boxes for the detected objects. Because it is trained specifically for the detection task, the RPN delivers better region proposals than generic approaches. The RPN and Fast R-CNN share convolutional layers, making it possible to combine them into a single network and streamline training. The RPN generates candidate proposals by sliding an n x n window over the feature map; the proposals are then filtered according to their objectness score. The Faster R-CNN model for person detection in frames is trained for the proposed task using a customised dataset.

B. Re-Identification
The system's re-identification module uses the FaceNet technique, which generates precise face embeddings using deep learning architectures such as ZF-Net or the Inception network. 1x1 convolutions are introduced to reduce the number of parameters. These models L2-normalise the output embeddings. The architecture is then trained using the triplet loss function, which increases the distance between embeddings of different identities while decreasing the squared distance between embeddings of the same identity. The convolutional neural network (CNN) extracts features from the input face image through its convolutional, pooling, and fully connected layers; its output is a feature vector that represents the input face. The triplet loss function trains the network to map input faces to a high-dimensional embedding space where faces of the same identity are grouped together and faces of different identities are far apart. The embedding layer maps the CNN output into this embedding space. These embeddings are used for facial recognition tasks, including identification and verification.

C. Abstractive Summarization
Abstractive summarization is a natural language processing method that goes beyond simply copying important phrases to provide a succinct and useful summary of a given text. Using an LSTM-based encoder-decoder model is one method for abstractive summarization. The neural network design known as LSTM, or long short-term memory, consists of four neural network layers and memory units known as cells. Information is stored in the cells, and memory operations are carried out via gates. The forget gate, input gate, and output gate are the three gates shown in Figure 36.

Information that is no longer necessary in the cell state is deleted via the forget gate. It takes two inputs, h_{t-1} (the output of the preceding cell) and x_t (the input at the current time step), which are multiplied by weight matrices and combined with biases. A sigmoid activation function is applied to the result, giving values between 0 and 1: information is discarded where the output is close to 0 and retained for future use where it is close to 1.

The input gate enriches the cell state with important information. In a manner similar to the forget gate, it regulates the information using the sigmoid function and filters the values to be remembered. The tanh function produces a vector of candidate values from h_{t-1} and x_t, ranging from -1 to 1; the vector and the regulated values are then multiplied to obtain the useful information.

The output gate takes the pertinent information from the current cell state and outputs it. The tanh function is first applied to the cell state to produce a vector. The information is then regulated by the sigmoid function and filtered using the inputs h_{t-1} and x_t. Finally, the vector is multiplied with the regulated values before being passed on as output to the next cell.

D. System Architecture
First, the input video is passed to the identification module, where each frame may contain several regions of interest (RoIs), each RoI being a bounding box that contains a specific character. By definition, {RoI} = ∅ indicates that no character appears in the frame. Next comes the re-identification module, which takes a user query specifying the character to be summarized. In the re-identification module, the FaceNet module is used to generate feature difference maps for similarity estimation between the query image and the images in the gallery. Multi-source textual information is leveraged to enhance the distinction in the re-ID module. The first step is document vectorization, where each document (subtitle or bullet-screen comment) is vectorized for semantic embedding. Subtitles can be easily vectorized given their strong logic and regularity. Bullet-screen comments, on the other hand, are generated by massive numbers of users and may contain short text with informal expressions or even slang. To vectorize them, a character-level LSTM is used.

Subtitles are often direct descriptions of character status and behaviors from the first-person perspective, with relatively formal expression. In contrast, bullet-screen comments are subjective comments from the third-person perspective, with informal expressions or even slang. Thus, it is necessary to select the appropriate source of textual information based on the visual features of a given frame, so that the selected textual information better reflects the identity of the character.

After the distinction step in the re-ID module, the potential key-frames containing the target character C are obtained. Following the strict definition of character-oriented summarization, all the key-frames are simply spliced together to form the summary video. However, since viewers may prefer fluent videos with a continuous story, some frames without the target character are tolerated where they connect two extracted clips. Additionally, the subtitles of the key-frames are processed using an LSTM to generate the final written summary.

Fig 1. System Architecture

IV. DATASET

Two prominent datasets are used: the COCO (Common Objects in Context) dataset and the SAMSum dataset. In computer vision research, the COCO dataset is frequently used for tasks including object detection, segmentation, and captioning. It has about 330,000 images, more than 200,000 of which are annotated, covering 80 object categories, and each captioned image has five descriptive captions. Modern algorithms for object detection and segmentation have been trained and tested using this dataset.

The images themselves and the related annotations are the two primary parts of the COCO dataset. A hierarchical directory structure is used to organize the images, with distinct folders for the train, validation, and test sets. The annotations are stored in JSON format, and each annotation record refers to a specific image. The records include the image file name and size (width and height), the object class (such as "person" or "car"), the bounding box coordinates (x, y, width, height), the segmentation mask (in polygon or RLE format), and keypoints with their locations, if available. Each captioned image in the collection also has five captions that describe the scene.

The SAMSum dataset, on the other hand, is made up of around 16,000 textual exchanges that resemble messenger conversations. English-speaking linguists were tasked with creating dialogues that would accurately match the subjects and lengths of their own real-life messenger exchanges. The collection includes slang terms, emoticons, typos, and a variety of styles and registers, including casual, semi-formal, and formal English. After the dialogues were created, they were annotated with succinct summaries written in the third person, intended to give a quick summary of the topic.

In conclusion, the COCO dataset serves as a complete resource for image identification tasks, while the SAMSum dataset provides a broad collection of conversational interactions accompanied by third-person summaries, enabling studies on conversation synthesis.

V. RESULTS AND DISCUSSION

Our approach yielded promising outcomes in both text summary generation and summary video creation. The text summaries captured vital information about each character, encompassing their roles, actions, and relationships. The abstract summary videos, in turn, showcased pivotal moments involving the identified characters, providing a concise overview of the video content. The combination of textual clues and re-identification proved to be an effective strategy for character-based identification and summary generation. By integrating natural language processing techniques, we extracted pertinent information from the textual clues, thereby enhancing the quality of the generated text summaries. Furthermore, our re-identification module tracked characters across frames, so that their crucial moments were included in the summary video.

Nonetheless, challenges persist in our methodology. The accuracy of the re-identification module depends heavily on the quality of the video footage and the complexity of the scenes. Factors such as noisy or low-resolution videos, as well as crowded scenes with occlusions, can degrade the module's performance. Consequently, future research should prioritize refining the re-identification algorithms and exploring advanced video processing techniques to address these challenges.

In conclusion, our approach combining textual clues and re-identification for character-based identification and summary generation exhibited promising results. The text summaries and abstract summary videos derived from this methodology provide valuable insights into the video content, enabling applications in various domains, such as video analysis, content summarization, and film studies.

Fig 2 Target Character
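As an illustration of the re-identification step described under B. Re-Identification, the following minimal Python sketch shows the triplet loss on L2-normalised embeddings together with a distance-based identity check. It is not the authors' implementation: the function names, the margin of 0.2, and the matching threshold of 1.0 are assumptions chosen for illustration, and the toy two-dimensional vectors stand in for real FaceNet embeddings.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length, as is done for FaceNet-style embeddings."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalise a zero vector")
    return [x / norm for x in vec]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The margin value is an illustrative assumption.
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


def is_same_identity(query: Sequence[float], candidate: Sequence[float],
                     threshold: float = 1.0) -> bool:
    """Hypothetical matching rule: unit-length embeddings closer than
    `threshold` (in squared distance) are treated as the same character."""
    return squared_distance(l2_normalize(query), l2_normalize(candidate)) < threshold


if __name__ == "__main__":
    anchor = l2_normalize([1.0, 0.0])
    same = l2_normalize([0.9, 0.1])    # embedding of the same identity
    other = l2_normalize([0.0, 1.0])   # embedding of a different identity
    print(triplet_loss(anchor, same, other))  # well-separated triplet
    print(triplet_loss(anchor, other, same))  # violated triplet
```

A well-separated triplet contributes no loss, while a triplet whose negative is closer than its positive is penalised; during training this pushes embeddings of the same identity together and those of different identities apart.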

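The splicing rule described under D. System Architecture, where key-frames are joined into clips and short stretches without the target character are tolerated, could be sketched as follows. This is a hedged illustration rather than the authors' code: `splice_keyframes` and its `max_gap` parameter are hypothetical names, and the paper does not state how many bridging frames are tolerated.

```python
from typing import Iterable, List, Tuple


def splice_keyframes(keyframes: Iterable[int], max_gap: int = 2) -> List[Tuple[int, int]]:
    """Merge key-frame indices into inclusive (start, end) clips.

    Two runs are joined when at most `max_gap` frames without the target
    character lie between them; those bridging frames stay in the clip.
    """
    if max_gap < 0:
        raise ValueError("max_gap must be non-negative")
    clips: List[Tuple[int, int]] = []
    for idx in sorted(set(keyframes)):
        if clips and idx - clips[-1][1] - 1 <= max_gap:
            # Close enough to the previous clip: extend it over the gap.
            clips[-1] = (clips[-1][0], idx)
        else:
            # First key-frame, or the gap is too large: start a new clip.
            clips.append((idx, idx))
    return clips


if __name__ == "__main__":
    detected = [1, 2, 3, 6, 7, 20, 21]  # frames in which the target was found
    print(splice_keyframes(detected, max_gap=0))  # strict definition
    print(splice_keyframes(detected, max_gap=2))  # tolerate short gaps
```

With `max_gap=0` the sketch follows the strict definition and keeps only frames containing the target character; a larger value trades strictness for a more fluent summary video.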

Fig 3 Text Summarization

As creating and watching videos is more active than ever, video summarization now has the potential to have a larger impact on real-world services and people's daily lives. A printed summary of any particular character or instance is tiring to read, so a visual summary is valuable because it is easy to understand. Video summarization will be useful for creating a compact summary of a viewer's favorite character in a movie or sitcom, or even for analyzing a particular character for literature.

In this paper, a novel framework to jointly model the visual and textual cues for character-oriented summarization is proposed. Video summarization will help people to understand a story or a movie up to a particular point, helping them follow the continuity of the story if they have missed any portions. In addition, textual information may help to describe the target characters more completely, and even provide clues that could hardly be revealed by visual features. This also inspires us to fully utilize the potential of time-sync textual information, with the help of a speech-to-text converter to obtain textual cues even if the video does not have any textual information.

Experiments on real-world data sets validated that the solution outperformed several state-of-the-art baselines on both search and summarization tasks, demonstrating the effectiveness of the framework on the character-oriented video summarization problem. Problems with manual summarization, such as excess time consumption and a high missing rate, were addressed.

REFERENCES

[1]. O. Issa and T. Shanableh, "CNN and HEVC Video Coding Features for Static Video Summarization," IEEE Access, vol. 10, pp. 72080-72091, 2022, doi: 10.1109/ACCESS.2022.3188638.
[2]. S. Lee, J. Sung, Y. Yu, and G. Kim, "A memory network approach for story-based temporal summarization of 360 videos," in Proc. IEEE/CVF Conf. Comput. Vision Pattern Recognit., 2018, pp. 1410-1419.
[3]. S. Wehrwein, K. Bala, and N. Snavely, "Scene Summarization via Motion Normalization," IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 4, pp. 2495-2501, Apr. 2021, doi: 10.1109/TVCG.2020.2993195.
[4]. M. Ma, S. Mei, S. Wan, Z. Wang, D. D. Feng, and M. Bennamoun, "Similarity-Based Block Sparse Subset Selection for Video Summarization," IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 10, pp. 3967-3980, Oct. 2021, doi: 10.1109/TCSVT.2020.3044600.
[5]. B. Zhao, X. Li, and X. Lu, "HSA-RNN: Hierarchical structure-adaptive RNN for video summarization," in Proc. IEEE/CVF Conf. Comput. Vision Pattern Recognit., 2018, pp. 7405-7414.
[6]. Y. Shen, T. Xiao, H. Li, S. Yi, and X. Wang, "End-to-end deep Kronecker-product matching for person re-identification," in Proc. IEEE/CVF Conf. Comput. Vision Pattern Recognit., 2018, pp. 6886-6895.
[7]. S. Ren, K. He, R. B. Girshick, and J. Sun, "Faster R-CNN: Towards real-time object detection with region proposal networks," IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 6, pp. 1137-1149, Jun. 2017.
[8]. P. Varini, G. Serra, and R. Cucchiara, "Personalized Egocentric Video Summarization of Cultural Tour on User Preferences Input," IEEE Transactions on Multimedia, vol. 19, no. 12, pp. 2832-2845, Dec. 2017, doi: 10.1109/TMM.2017.2705915.
[9]. A. Sharghi, J. S. Laurel, and B. Gong, "Query-focused video summarization: Dataset, evaluation, and a memory network based approach," in Proc. IEEE Conf. Comput. Vision Pattern Recognit., 2017, pp. 2127-2136.
[10]. M. Gygli, H. Grabner, and L. V. Gool, "Video summarization by learning submodular mixtures of objectives," in Proc. IEEE Conf. Comput. Vision Pattern Recognit., 2015, pp. 3090-3098.

IJISRT23MAY2469 www.ijisrt.com 3508

You might also like

- Monster ENCyclopedia LamiaDocument37 pagesMonster ENCyclopedia LamiaknackkayNo ratings yet

- Tome of Heroes (Kobold Press)Document321 pagesTome of Heroes (Kobold Press)Max cko82% (34)

- Spells From Baldurs GateDocument46 pagesSpells From Baldurs GateKristiaanVilesNo ratings yet

- They Came From Beyond The StarsDocument22 pagesThey Came From Beyond The StarsJarad Kendrick100% (2)

- Avalon Solo Adventure System - Core RulesDocument61 pagesAvalon Solo Adventure System - Core RulesBrian Lai100% (5)

- Traveller - The Third Imperium - Sword WorldsDocument126 pagesTraveller - The Third Imperium - Sword WorldsBakr Abbahou50% (2)

- All Unity Source CodeDocument16 pagesAll Unity Source CodeMohamed AlashramNo ratings yet

- Unearthed Arcana - Awakened MysticDocument7 pagesUnearthed Arcana - Awakened MysticRenato AlvesNo ratings yet

- Sarguro's Compendium - Monk (U - Kaiburr - Kath-Hound)Document7 pagesSarguro's Compendium - Monk (U - Kaiburr - Kath-Hound)Erick Renan100% (1)

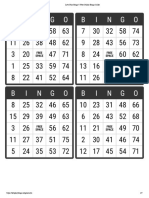

- B I N G O B I N G O: Free Space Free SpaceDocument7 pagesB I N G O B I N G O: Free Space Free SpaceChris LoweNo ratings yet

- SIMOC, Secondary 1 ContestDocument8 pagesSIMOC, Secondary 1 ContestjimmyNo ratings yet

- Software Development: BCS Level 4 Certificate in IT study guideFrom EverandSoftware Development: BCS Level 4 Certificate in IT study guideRating: 3.5 out of 5 stars3.5/5 (2)

- Image Caption Generation Using Deep Learning: Department of Electronics & Instrumentation Engineering NIT Silchar, AssamDocument21 pagesImage Caption Generation Using Deep Learning: Department of Electronics & Instrumentation Engineering NIT Silchar, AssamPotato GamingNo ratings yet

- Video Captioning Using Neural NetworksDocument13 pagesVideo Captioning Using Neural NetworksIJRASETPublicationsNo ratings yet

- Open-Source Revolution : Google’s Streaming Dense Video Captioning ModelDocument8 pagesOpen-Source Revolution : Google’s Streaming Dense Video Captioning ModelMy SocialNo ratings yet

- Semantic Text Summarization of Long Videos: March 2017Document10 pagesSemantic Text Summarization of Long Videos: March 2017AnanyaNo ratings yet

- Stephen W. Smoliar, Hongliang Zhang - Content Based Video Indexing and Retrieval (1994)Document11 pagesStephen W. Smoliar, Hongliang Zhang - Content Based Video Indexing and Retrieval (1994)more_than_a_dreamNo ratings yet

- Video Text Detection by Tong Lu, Shivakumara Palaiahnakote, Chew Lim TanDocument272 pagesVideo Text Detection by Tong Lu, Shivakumara Palaiahnakote, Chew Lim TanHomeabcTalibovlarNo ratings yet

- Summarization of Web Videos by Tag Localization and Key Shot IdentificationDocument17 pagesSummarization of Web Videos by Tag Localization and Key Shot IdentificationBala KrishnanNo ratings yet

- Narrative Dataset: Towards Goal-Driven Narrative Generation: Karen Stephen Rishabh Sheoran Satoshi YamazakiDocument6 pagesNarrative Dataset: Towards Goal-Driven Narrative Generation: Karen Stephen Rishabh Sheoran Satoshi YamazakiSomePersonNo ratings yet

- Automatic Image and Video Caption Generation With Deep Learning: A Concise Review and Algorithmic OverlapDocument15 pagesAutomatic Image and Video Caption Generation With Deep Learning: A Concise Review and Algorithmic OverlapSaket SinghNo ratings yet

- Image Captionbot For Assistive TechnologyDocument3 pagesImage Captionbot For Assistive TechnologyInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Synopsis On: Video SummarizationDocument11 pagesSynopsis On: Video SummarizationGaurav YadavNo ratings yet

- Video Database: Role of Video Feature ExtractionDocument5 pagesVideo Database: Role of Video Feature Extractionmulayam singh yadavNo ratings yet

- A Straightforward Framework For Video Retrieval Using CLIPDocument10 pagesA Straightforward Framework For Video Retrieval Using CLIPmultipurposeNo ratings yet

- A Survey of An Adaptive Weighted Spatio-Temporal Pyramid Matching For Video RetrievalDocument3 pagesA Survey of An Adaptive Weighted Spatio-Temporal Pyramid Matching For Video Retrievalseventhsensegroup100% (1)

- Localizing Text On VideosDocument13 pagesLocalizing Text On VideosAbhijeet ChattarjeeNo ratings yet

- Video Summarization Using Deep Semantic FeaturesDocument16 pagesVideo Summarization Using Deep Semantic FeaturessauravguptaaaNo ratings yet

- VideoMiningBook Chapter7 PDFDocument40 pagesVideoMiningBook Chapter7 PDFSwathi VishwanathNo ratings yet

- AI-based Video Summarization Using FFmpeg and NLPDocument6 pagesAI-based Video Summarization Using FFmpeg and NLPInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Content Based Video Retrieval ThesisDocument6 pagesContent Based Video Retrieval Thesishcivczwff100% (2)

- Project Report Video SummarizationDocument20 pagesProject Report Video SummarizationRohit VermaNo ratings yet

- Key-Shots Based Video Summarization by Applying Self-Attention MechanismDocument7 pagesKey-Shots Based Video Summarization by Applying Self-Attention Mechanismrock starNo ratings yet

- 4950-Article Text-8015-1-10-20190709Document8 pages4950-Article Text-8015-1-10-20190709고다윤 / 학생 / 컴퓨터공학부No ratings yet

- Image Captioning Generator Using Deep Machine LearningDocument3 pagesImage Captioning Generator Using Deep Machine LearningEditor IJTSRDNo ratings yet

- Automated Video Summarization Using Speech TranscriptsDocument12 pagesAutomated Video Summarization Using Speech TranscriptsBryanNo ratings yet

- Gray Scale Image Captioning Using CNN and LSTMDocument8 pagesGray Scale Image Captioning Using CNN and LSTMIJRASETPublicationsNo ratings yet

- Dynamic Summarization of Videos Based On Descriptors in Space-Time Video Volumes and Sparse AutoencoderDocument11 pagesDynamic Summarization of Videos Based On Descriptors in Space-Time Video Volumes and Sparse AutoencoderDavid DivadNo ratings yet

- Image Description Generator Using Deep LearningDocument7 pagesImage Description Generator Using Deep LearningIJRASETPublicationsNo ratings yet

- Video DetectionDocument4 pagesVideo DetectionJournalNX - a Multidisciplinary Peer Reviewed JournalNo ratings yet

- Video Liveness VerificationDocument4 pagesVideo Liveness VerificationEditor IJTSRDNo ratings yet

- 19L038 - Deep Learning - Assignment PresentationDocument24 pages19L038 - Deep Learning - Assignment Presentation19L147 - VIBOOSITHASRI N SNo ratings yet

- BTP ReportDocument4 pagesBTP Reportkshalika734No ratings yet

- Action Event Retrieval From Cricket Video Using Audio Energy Feature For Event SummarizationDocument8 pagesAction Event Retrieval From Cricket Video Using Audio Energy Feature For Event SummarizationIAEME PublicationNo ratings yet

- Automatic Text Segmentation and Text Recognition For Video IndexingDocument15 pagesAutomatic Text Segmentation and Text Recognition For Video IndexingSyam AppalaNo ratings yet

- A Survey On Video Coding Optimizations Using Machine LearningDocument5 pagesA Survey On Video Coding Optimizations Using Machine LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Video and Text Summarisation Using NLPDocument3 pagesVideo and Text Summarisation Using NLPInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Final Mini ProjectDocument50 pagesFinal Mini ProjectHarika RavitlaNo ratings yet

- Css OBDocument14 pagesCss OBOmkar Bankar obNo ratings yet

- Fin Irjmets1649174683Document5 pagesFin Irjmets1649174683Karunya ChavanNo ratings yet

- Image Captioning Generator Using CNN and LSTMDocument8 pagesImage Captioning Generator Using CNN and LSTMIJRASETPublicationsNo ratings yet

- Viprovoq: Towards A Vocabulary For Video Quality Assessment in The Context of Creative Video ProductionDocument9 pagesViprovoq: Towards A Vocabulary For Video Quality Assessment in The Context of Creative Video ProductionS SeriesNo ratings yet

- JPSP - 2022 - 115Document6 pagesJPSP - 2022 - 115Karunya ChavanNo ratings yet

- Unsupervised Video Summarization Framework Using Keyframe Extraction and Video SkimmingDocument6 pagesUnsupervised Video Summarization Framework Using Keyframe Extraction and Video SkimmingLaith QasemNo ratings yet

- Superframes, A Temporal Video SegmentationDocument6 pagesSuperframes, A Temporal Video SegmentationAp MllNo ratings yet

- Video Summarization and Retrieval Using Singular Value DecompositionDocument12 pagesVideo Summarization and Retrieval Using Singular Value DecompositionIAGPLSNo ratings yet

- A Survey On Visual Content-Based Video Indexing and RetrievalDocument23 pagesA Survey On Visual Content-Based Video Indexing and RetrievalGS Naveen KumarNo ratings yet

- Live Video Streaming With Low LatencyDocument4 pagesLive Video Streaming With Low LatencyInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Video Summarization Using Deep Neural Networks: A SurveyDocument26 pagesVideo Summarization Using Deep Neural Networks: A SurveyLaith QasemNo ratings yet

- Accepted Manuscript: J. Vis. Commun. Image RDocument47 pagesAccepted Manuscript: J. Vis. Commun. Image RANSHY SINGHNo ratings yet

- Age and Gender DetectionDocument4 pagesAge and Gender DetectionInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Implementation of P-N Learning Based Compression in Video ProcessingDocument3 pagesImplementation of P-N Learning Based Compression in Video Processingkoduru vasanthiNo ratings yet

- An Optimization Theoretic Framework For Video Transmission With Minimal Total Distortion Over Wireless NetworkDocument5 pagesAn Optimization Theoretic Framework For Video Transmission With Minimal Total Distortion Over Wireless NetworkEditor InsideJournalsNo ratings yet

- Pattern Recognition Letters: Chengyuan Zhang, Yunwu Lin, Lei Zhu, Anfeng Liu, Zuping Zhang, Fang HuangDocument7 pagesPattern Recognition Letters: Chengyuan Zhang, Yunwu Lin, Lei Zhu, Anfeng Liu, Zuping Zhang, Fang HuangMina MohammadiNo ratings yet

- Graphical Password ReportDocument20 pagesGraphical Password ReportOmkar SangoteNo ratings yet

- Face Recognition For Criminal DetectionDocument7 pagesFace Recognition For Criminal DetectionIJRASETPublicationsNo ratings yet

- Rank Pooling For Action RecognitionDocument4 pagesRank Pooling For Action RecognitionAnonymous 1aqlkZNo ratings yet

- PGCON Paper FinalDocument4 pagesPGCON Paper FinalArbaaz ShaikhNo ratings yet

- Applsci 11 05260 v2Document17 pagesApplsci 11 05260 v2Tania ChakrabortyNo ratings yet

- Video Content Representation With Grammar For Semantic RetrievalDocument6 pagesVideo Content Representation With Grammar For Semantic Retrievaleditor_ijarcsseNo ratings yet

- CVIU Hema 1-S2.0-S1077314222000650-MainDocument13 pagesCVIU Hema 1-S2.0-S1077314222000650-Mainhemalatha7No ratings yet
