
Published by : International Journal of Engineering Research & Technology (IJERT)

http://www.ijert.org ISSN: 2278-0181

Vol. 10 Issue 09, September-2021

Facial Emotion and Object Detection for Visually Impaired & Blind Persons Based on Deep Neural Network Concept

Daniel D

PG Student, Department of Computer Science, Impact College of Engineering & Applied Sciences, Bangalore, India

Rekha M S

Assistant Professor, Department of Computer Science, Impact College of Engineering & Applied Sciences, Bangalore, India

Abstract— Across the world there are more than millions of 2. The wearable devices: Raspberry pi along with

visually impaired and blind persons. Many techniques are used computer vision technology the content around the

in assisting them such as smart devices, human guides and Braille blind is noticed and informed through local language.

literature for reading and writing purpose. Technologies like

image processing, computer vision are used in developing these 3. Smart specs: It detects the printed text and produces

assisting devices. The main aspire of this paper is to detecting the the sound output through TTS method.

face and the emotion of the person detected as well as identifying

4. Navigation System: An IoT based system is designed

the object around the visually impaired person and alerting them

through audio output.

using Raspberry Pi and ultrasonic sensor in the waist

belt which identifies the object and obstacles and

Keywords—Neural network, datasets, Opencv, pyttsx, yolo, produces the audio signals.

bounding box

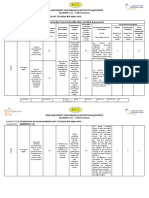

III. PROBLEM DESCRIPTION

I. INTRODUCTION

According to survey in the current world, more than 10

millions of entire populations are visually challenged. Many Datasets Dividing into Pre-

efforts are carried out in order to help and guide visually Collection training and processing

impaired and blind persons to lead their normal daily life. testing set data

Language plays an important role in every one’s life for

communicating with each other but visually impaired and

blind persons communicate through a special language know Build

Test Model Train Model

as Braille most of the schools came up in providing knowledge CNN

about Braille which makes visually challenged to read and Model

write without others help. Other better way of communication

is audible sound through which visually challenged can

understand the things that are happening around them.

Many assisting techniques such as assisting devices and Predict Output

applications are developed using the trending technologies

such as image processing, Raspberry Pi, Computer Graphics

and vision for guiding and providing assistance to visually Figure 1: problem description

impaired and blind in leading day to day life.

Objectives:

The important aspect of this paper is identifying the person in The Objectives of the proposed system are:

front of the visually challenged along with their facial 1. Studying existing systems.

emotions and also identifying the object that is around the 2. Working with image acquisition system.

blind or visually impaired persons and alerting them with the 3. Face and emotion recognition.

audible sound. 4. Object identification.

II. EXISITING SYSTEM 5. Classifying object using CNN

There exits various system in order to assist the visually 6. Finding the position of an object in the input frame.

challenged such as 7. Providing the sound alert about the position of the

object identified.

1. Braille literature: It is used to educate the visually

challenged to read and write without third persons

The processing frame work includes blocks such as

help.

➢ A block responsible for basic preprocessing steps as

required by the module objectives.

IJERTV10IS090108 www.ijert.org 209

(This work is licensed under a Creative Commons Attribution 4.0 International License.)


➢ A block for identifying face, emotion and object.

➢ A block for providing voice output.

The steps involved in the process are as follows.

Step 1: The dataset is collected from different sources.

Step 2: The collected dataset is divided into two parts: 75% for training and 25% for testing.

Step 3: The data is pre-processed for all the captured images.

Step 4: The model is built using a CNN.

Step 5: Once training and testing are done, the output is produced as an audio alert.

IV. IMPLEMENTATION

The modules used in designing the deep neural network model are:

1. Dataset collection

2. Dataset splitting

3. Network training

4. Evaluation

Dataset Collection:

This is the basic stage in the construction of a deep neural network. The images are used along with their labels, which are assigned based on the known and unknown image categories. The number of images should be the same for both categories.

Dataset splitting:

The collected dataset is divided into a training set and a testing set. The training set is used to learn what the different categories of images look like from the input images. After training, the performance is evaluated on the testing set.
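The 75% training and 25% testing division described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the code used in this work: the function name `split_dataset`, the file names, the fixed random seed and the 20-images-per-category dataset are all assumptions made for the example.

```python
import random


def split_dataset(samples, train_fraction=0.75, seed=42):
    """Shuffle labelled samples and split them into training and testing sets."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    shuffled = list(samples)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle keeps the split reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical dataset: (image file name, label) pairs, 20 per category,
# so that both categories have the same number of images.
dataset = [(f"known_{i:02d}.jpg", "known") for i in range(20)]
dataset += [(f"unknown_{i:02d}.jpg", "unknown") for i in range(20)]

train_set, test_set = split_dataset(dataset)
print(len(train_set), len(test_set))  # 30 10
```

Shuffling before cutting keeps both categories represented in each part; a stratified split would guarantee equal proportions, which this simple sketch does not.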

Network Training:

The main idea of training the network is for it to learn to recognize the labeled data categories. When the model makes a mistake on a training image, its parameters are adjusted automatically to correct it.
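The idea that a mistake is corrected automatically during training can be illustrated with a very small example. The sketch below is an assumption-laden stand-in: a single-layer perceptron replaces the CNN, the two-element feature vectors are made up, and the names `train_perceptron` and `predict` are hypothetical. It only shows the principle that weights change when a prediction disagrees with the label.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Learn weights for a two-class linear classifier, updating only on mistakes."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in zip(samples, labels):
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            predicted = 1 if activation > 0 else 0
            error = label - predicted  # 0 when correct, +1 or -1 on a mistake
            if error != 0:
                mistakes += 1
                # Move the weights towards the correct answer for this sample.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every training sample is now classified correctly
            break
    return weights, bias


def predict(weights, bias, features):
    """Return 1 ("known") or 0 ("unknown") for one feature vector."""
    return 1 if sum(w * x for w, x in zip(weights, features)) + bias > 0 else 0


# Toy, made-up feature vectors: label 1 = "known", label 0 = "unknown".
samples = [[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]]
labels = [1, 1, 0, 0]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, s) for s in samples]
print(predictions)
```

A CNN applies the same principle with many more parameters and a gradient-based update rather than this simple rule.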

Evaluation:

The model has to be evaluated. The predictions of the model are tabulated and compared with the ground-truth labels of the different categories to form a report.
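A report of this kind can be sketched as follows. The example is hedged: the choice of precision, recall and accuracy as the reported figures is an assumption (the measures are not named here), the function name `evaluation_report` is hypothetical, and the labels are made up for illustration rather than taken from the experiments.

```python
from collections import Counter


def evaluation_report(true_labels, predicted_labels):
    """Return per-category precision and recall plus overall accuracy."""
    # Tabulate how often each (ground truth, prediction) pair occurs.
    pairs = Counter(zip(true_labels, predicted_labels))
    categories = sorted(set(true_labels) | set(predicted_labels))
    report = {}
    for category in categories:
        true_positive = pairs[(category, category)]
        predicted_total = sum(n for (_, p), n in pairs.items() if p == category)
        actual_total = sum(n for (t, _), n in pairs.items() if t == category)
        report[category] = {
            "precision": true_positive / predicted_total if predicted_total else 0.0,
            "recall": true_positive / actual_total if actual_total else 0.0,
        }
    correct = sum(n for (t, p), n in pairs.items() if t == p)
    report["accuracy"] = correct / len(true_labels)
    return report


# Made-up labels for illustration only; these are not results from this paper.
truth = ["known", "known", "known", "unknown", "unknown", "unknown"]
predicted = ["known", "known", "unknown", "unknown", "unknown", "known"]

report = evaluation_report(truth, predicted)
for name, scores in report.items():
    print(name, scores)
```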

Figure 2: System architecture (blocks: capturing image, image processing, extraction, loading models, face emotion and object identification, output in text form, pyttsx3, audible sound form)

Figure 3: Face and emotion detection (flow: run program, initiate camera, detect person, compare and classify, known / unknown, name of emotion)

Step for execution:

Step1: Create the face recognizer and obtain the images of the faces that have to be recognized.

Step2: The collected face images have to be converted to numerical data.


Step3: The Python script is written using various libraries such as OpenCV, NumPy, etc.

Step4: Finally, the face and emotion of the person are recognized.
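The conversion of a face image to numerical data, and the known / unknown decision, can be illustrated with a deliberately simple sketch. It is not the recognizer used in this work: a nearest-neighbour comparison on raw pixel values stands in for the trained model, and the function names, the 2x2 "faces", the person names and the 0.2 threshold are all assumptions chosen for the example.

```python
def face_to_vector(gray_face):
    """Flatten a grayscale face crop (rows of 0-255 pixels) into values in [0, 1]."""
    return [pixel / 255.0 for row in gray_face for pixel in row]


def mean_abs_distance(a, b):
    """Average absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def identify(face_vector, enrolled, threshold=0.2):
    """Return the closest enrolled name, or "unknown" if nothing is close enough."""
    best_name, best_distance = "unknown", float("inf")
    for name, reference in enrolled.items():
        distance = mean_abs_distance(face_vector, reference)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= threshold else "unknown"


# Tiny 2x2 "faces" with made-up pixel values; real face crops are far larger.
enrolled = {
    "person_a": face_to_vector([[200, 190], [180, 210]]),
    "person_b": face_to_vector([[40, 60], [50, 30]]),
}

first = identify(face_to_vector([[198, 192], [183, 205]]), enrolled)
second = identify(face_to_vector([[120, 125], [118, 122]]), enrolled)
print(first)   # person_a
print(second)  # unknown
```

The threshold is what separates the known and unknown categories: a face that is not close to any enrolled reference is reported as unknown instead of being forced onto the nearest name.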

Object Identification:

Step1: Download the files yolov3.weights, yolov3.cfg and coco.names (the model weights, the network configuration and the class names).

Step2: Parameters such as nmsThreshold, inpWidth and inpHeight are initialized. Each bounding box is predicted together with a confidence score.

Step3: The model and the class names are loaded. The CPU is set as the target and OpenCV as the DNN backend.

Step4: In this stage the image is read, the frames are saved and the output bounding boxes are detected.

Step5: The frames are processed and passed to the blobFromImage function, which converts each frame into a blob, the input format expected by the neural network.

Step6: The getUnconnectedOutLayers() function returns the indices of the network's output layers, which are used to look up the names of those layers.

Step7: The network output is post-processed. Each bounding box is represented by a vector whose first 5 elements are the centre x, centre y, width, height and a confidence score, followed by the per-class scores.

Step8: The boxes that remain after non-maximum suppression are drawn on the input frame. The object is identified and announced as audio output through pyttsx.

V. RESULTS

A window with various buttons, as shown in Figure 4, pops up when the Python script is executed. It provides buttons for capturing the face, training on the captured face, recognizing the face and emotion, and detecting objects.

Figure 4: Window with various buttons

Once the face is captured, the system asks for the user name and trains on the face data as shown in Figure 5.

Figure 5: Training the face data

The following figures show the recognition of the face and different emotions.

Figure 6: Face recognized with happy emotion

Figure 7: Face recognized with sad emotion
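The post-processing in Steps 7 and 8 of the object identification procedure (keeping confident boxes and then applying non-maximum suppression) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the script used in this work: the function names, the 0.5 confidence threshold, the 0.4 NMS threshold and the detection rows are invented for the example, and the per-class scores are ignored for brevity.

```python
def to_corner_box(center_x, center_y, width, height):
    """Convert a centre-based box to (left, top, right, bottom)."""
    return (center_x - width / 2, center_y - height / 2,
            center_x + width / 2, center_y + height / 2)


def iou(a, b):
    """Intersection over union of two (left, top, right, bottom) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(rows, conf_threshold=0.5, nms_threshold=0.4):
    """Drop low-confidence rows, then apply greedy non-maximum suppression."""
    candidates = [(conf, to_corner_box(cx, cy, w, h))
                  for cx, cy, w, h, conf in rows if conf >= conf_threshold]
    candidates.sort(key=lambda item: item[0], reverse=True)  # most confident first
    kept = []
    for conf, box in candidates:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(box, kept_box) <= nms_threshold for _, kept_box in kept):
            kept.append((conf, box))
    return kept


# Made-up rows: (centre x, centre y, width, height, confidence) in pixels.
rows = [
    (100, 100, 80, 80, 0.90),
    (104, 102, 80, 80, 0.75),   # nearly the same box as the first row
    (300, 200, 60, 120, 0.60),
    (50, 300, 40, 40, 0.20),    # below the confidence threshold
]

kept = filter_detections(rows)
for conf, box in kept:
    print(conf, box)
```

Of the four rows, the second is suppressed because it overlaps the more confident first box, and the fourth is discarded for low confidence, so two boxes remain to be drawn.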


The identified object is reported together with its position, as shown in Figure 8. The quit button exits the window.

Figure 8: Identified object with its position
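One possible way to turn a bounding box into a spoken position is sketched below. How the position is derived is not specified here, so dividing the frame into left, centre and right thirds is purely an assumption, as are the function names and the example values. The `speak` helper uses the pyttsx3 calls `init`, `say` and `runAndWait`, and falls back to printing when pyttsx3 is not installed.

```python
def horizontal_position(box_left, box_width, frame_width):
    """Describe where a bounding box sits in the frame: left, center or right."""
    box_center = box_left + box_width / 2
    if box_center < frame_width / 3:
        return "left"
    if box_center < 2 * frame_width / 3:
        return "center"
    return "right"


def build_alert(label, box_left, box_width, frame_width):
    """Compose the sentence that would be spoken for one detected object."""
    position = horizontal_position(box_left, box_width, frame_width)
    if position == "center":
        return f"{label} in the center"
    return f"{label} on the {position}"


def speak(text):
    """Speak the text with pyttsx3 if it is installed; otherwise print it."""
    try:
        import pyttsx3
    except ImportError:
        print(text)
        return
    engine = pyttsx3.init()
    engine.say(text)
    engine.runAndWait()


# Hypothetical detection: a chair whose box starts at x=500 in a 640-pixel-wide frame.
message = build_alert("chair", box_left=500, box_width=100, frame_width=640)
print(message)  # chair on the right
```

Calling `speak(message)` would voice the alert on a machine with a working speech driver.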

VI. CONCLUSION

In this paper we have designed a system using deep neural network techniques. First, the face recognizer captures face images, which are divided into training and testing sets based on the known and unknown label categories. The face data is trained, and the face and emotion of the person are recognized. Objects are detected using YOLO (You Only Look Once): the object is captured, a bounding box is predicted with a confidence score, and the identified object and its position are reported to the user in audio form. With this, visually impaired and blind persons can easily learn the facial emotion of the person in front of them and the position of the objects around them.

