Third Eye for Blind Person

Conference Paper · June 2021

© June 2021| IJIRT | Volume 8 Issue 1 | ISSN: 2349-6002

Third Eye for Blind Person

Ritik Singh¹, Rishi Kumar Kamat², Faraj Chisti³

¹,²,³ Computer Engineering, ABES Institute of Technology, India

Abstract - Third Eye for the Blind is an advancement that draws on multiple disciplines, such as software engineering and hardware design, and helps visually impaired people move with speed and confidence by recognizing nearby objects, persons, and obstacles with the help of ultrasonic waves and notifying them with a beep sound and audio assistance. Visually impaired people have used the conventional white cane for a long time; although it is effective, it still has considerable weaknesses. The proposed device is a wearable technology for blind people. It is built around the Raspberry Pi 4 module, a small computer, fitted with ultrasonic sensors and a camera. Using the sensor, visually impaired users can detect the objects around them, and the camera helps identify those objects, which helps them travel effectively. When the sensors detect an object, the device notifies the user through the headset. The camera recognizes the name of the person or object, and the name is output through the headset so that the user knows what is in front of them. In this way the device is automated [1]. Accordingly, it will be of great use to blind people and help them travel between places.

Index Terms - Beep sound, Raspberry Pi 4 module, voice assistant, camera module, speech recognition, object detection, Pi operating system, Pi camera.

I.INTRODUCTION

In this modern era of technology, smartphones have become one of the most common consumer devices. [1] A smartphone plays a very important role in human life. Smartphones make life easier with functions such as communicating with others through voice calls, emails, and messages, browsing the internet, and taking photos. With a smartphone, all of these take only seconds. For example, you just dial a person's contact number from your phone and wait until he or she responds. But this convenience is available only to people who do not have a disability.

Blind people can live a normal life and do things according to their lifestyle, but they face many more difficulties than people without disabilities. One of the biggest problems for a visually impaired person, especially one who is totally blind, is that they cannot use a smartphone.

[6] No gadgets are available in the market that can be worn like a garment and that offer such low cost and simplicity [6]. If this improvised device is used on a large scale, with changes to the prototype, it is expected to benefit the community of visually impaired people [1]. The aim of this project, the Third Eye for the Blind, is to design a product that is particularly helpful to people who are visually impaired and who frequently need to depend on others. [1] Third Eye for the Blind is an innovation that helps visually impaired people move around and travel between different places with speed and confidence by detecting nearby obstacles with the help of a wearable band that emits ultrasonic waves and notifies them through the built-in voice assistant.

II.EXISTING SYSTEM

Many new technologies have been developed for visually impaired people, but they are hard to operate and not very accurate. For example, an object detection system detects only the object and its direction; it does not tell the distance between the person and the object. Some devices can also look up contacts and send messages to another person, but they can be hard for a visually impaired person to operate, since the user cannot tell how to select the person to be called or messaged. The features of the existing systems made to date are:

IJIRT 151872 INTERNATIONAL JOURNAL OF INNOVATIVE RESEARCH IN TECHNOLOGY 1115

1. The system makes two different types of sounds depending on the situation. The major drawback of this system is that a blind person may not be able to tell the sounds apart, and even if he or she can, it takes some time to identify the obstacle ahead from the sound.

2. An object detection sensor has also been introduced in newer devices. It can detect the object, but it cannot specify the distance between the person and the object.

3. To overcome the above limitation, J. M. Benjamin [3][1] proposed a laser cane that detects in three directions. [1] The directions are 45 degrees above the ground, parallel to the ground, and at a sharp depth. This laser works only when an object or obstacle comes within its range, and the range is very short, so it can be used only indoors and not outdoors.

4. Now, voice assistants are being introduced that only tell the user the output of the sensors, such as the distance, the direction, and the object's name.[1]

None of the above systems meets the exact needs of blind people.[1] To overcome the limitations mentioned above, this project aims to build a better and more reliable system for blind people. With the latest technology it will be cheaper and easily wearable, with all the functionality needed to help visually impaired people perform most daily tasks with ease.

III.PROPOSED SYSTEM

In this system we are developing a navigation system for blind people. It is very easy to use and works as a navigator that helps blind people find their way.

In this system the ultrasonic sensor detects the object and produces a sound (a beep), the camera scans the object and predicts what it is using the object detection technique, and the speech component converts the object name into sound so that the user can learn what the object is through the headset.

The names and data of objects and persons are stored in the module; if the data is not present, the device simply says that no image data is present and gives a beep sound.[2]

In this system we use the following hardware and software components. We also add extra features such as a distance measurement technique to identify how far the object is from the user, and a voice assistant for various additional functions.

Software and Techniques

We can use the following software.

• Pi operating system
• Object detection technique
• Distance measurement technique
• Speech recognition

Hardware Components

• Raspberry Pi 4 module
• Ultrasonic sensor
• Headset
• Pi cam (camera)
• 5 mm LED: Red
• Slide switch
• Female header
• Male header
• Jumper wire
• Power bank
• 1kΩ resistor
• 2kΩ resistor
• Some elastics and stickers

Let us look at the components in brief:

OBJECT DETECTION USING TENSORFLOW:

The TensorFlow Object Detection API is a framework for creating deep learning networks that solve object detection problems.

[4] This technique can be very useful for assisting blind and elderly people if deployed on their mobile phone. [4] Objects are detected through the smartphone's camera and identified, and the result is reported back audibly to the user, thus helping blind people navigate and perform daily tasks with greater ease.

[4] To detect an object of your choice, we need to follow these steps:

Data Generation: [4] Gather images of similar objects.

Image Annotation: Label the object with a bounding box.

API Installation: Install the TensorFlow Object Detection API.

Train and validate model: Use the annotated images.

Freeze the model: [4] To enable mobile deployment.

Deploy and run: [4] In a mobile or virtual environment.

Algorithms for object detection can be split into two groups:

1. Algorithms based on classification

They are implemented in two stages.

a. First, they select regions of interest in an image.

b. Second, they classify these regions using a convolutional neural network.

2. Algorithms based on regression

Instead of selecting interesting parts of an image, they predict classes and bounding boxes for the whole image in one run of the algorithm. [2] The two best-known examples from this group are YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector).

DISTANCE MEASUREMENT

Using the ultrasonic sensor we can measure the distance between the object and the user. The object can be anything, such as a vehicle, chair, person, or table.[3] When distances are computed from the camera image, the result is a relative measure, since the picture can be taken from different angles and perspectives.

[3] To draw the lines between the objects we need to import some modules:

    from PIL import Image, ImageDraw
    import itertools
    import math
    from itertools import compress

The main idea is to complete the following steps:

• Get every detected object from TensorFlow [3].
• Filter them by class and score, keeping objects that match with a score above 50%.
• Calculate the centroid (center of the box) of each box.
• [3] Calculate permutations between all the centroids.
• Calculate the distance between the centroids in each permutation.
• [3] Apply a threshold to the permutations based on the distance.
• Draw the lines.
• Show the picture.

To calculate the centroid of the box:

    def calc_centroid(bounding_box):
        # Box order assumed to be (ymin, xmin, ymax, xmax); returns (x, y).
        return (((bounding_box[3] - bounding_box[1]) / 2) + bounding_box[1],
                ((bounding_box[2] - bounding_box[0]) / 2) + bounding_box[0])

To calculate the permutations between centroids:

    def calc_perm(detection_centroids):
        # Keep each pair once by skipping pairs already stored in reverse order.
        permutations = []
        for current_permutation in itertools.permutations(detection_centroids, 2):
            if current_permutation[::-1] not in permutations:
                permutations.append(current_permutation)
        return permutations

To calculate the distance (using Euclidean distance):

    def calc_cent_distances(cent1, cent2):
        return math.sqrt((cent2[0] - cent1[0]) ** 2 + (cent2[1] - cent1[1]) ** 2)

To calculate the distance for a group of permutations:

    def calc_all_distances(centroids):
        # Each item is a pair of centroids as produced by calc_perm.
        distances = []
        for centroid in centroids:
            distances.append(calc_cent_distances(centroid[0], centroid[1]))
        return distances

To draw the lines (scaling the normalized centroids to pixel coordinates using the image width and height):

    def nor_centroids(centroids, img_width, img_height):
        newCentroids = []
        for centroid in centroids:
            newCentroids.append((centroid[0] * img_width, centroid[1] * img_height))
        return newCentroids

SPEECH RECOGNITION:

A blind person cannot use the keyboard on an Android smartphone, and even if he or she is able to type, it will take more time than it takes a sighted person. So, to take input from the user we will use the speech recognition module, a Python module that converts speech into text. Based on that text, the system takes actions and controls the other modules according to the user's command.
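The text-to-action step of the speech recognition module could be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the command phrases follow the voice commands this paper lists for the speech recognizer, while the handler functions (`turn_on`, `distance_to`, `save_face`, `who_is_in_front`, `call`) and their return strings are hypothetical placeholders for the project's real modules.

```python
import re


# Hypothetical handlers standing in for the project's real modules.
def turn_on(module_name):
    return f"activating {module_name}"


def distance_to(object_name):
    return f"measuring distance to {object_name}"


def save_face(person_name):
    return f"saving face as {person_name}"


def who_is_in_front():
    return "identifying person in front"


def call(target):
    return f"calling {target}"


# Each recognized phrase pattern is paired with the handler it triggers.
COMMANDS = [
    (re.compile(r"^turn on (.+)$"), turn_on),
    (re.compile(r"^distance between me and (.+)$"), distance_to),
    (re.compile(r"^save face of (.+)$"), save_face),
    (re.compile(r"^who is in front of me$"), who_is_in_front),
    (re.compile(r"^call (.+)$"), call),
]


def dispatch(text):
    """Route recognized text to the first matching handler, or return None."""
    cleaned = " ".join(text.lower().split())
    for pattern, handler in COMMANDS:
        match = pattern.match(cleaned)
        if match:
            return handler(*match.groups())
    return None
```

Keeping the phrase patterns in one table means a new voice command only needs one more entry, and text that matches no pattern returns `None`, so the caller can ask the user to repeat the command.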

We will use the CMU Sphinx engine because it can recognize audio without an internet connection, through the call:

    recognize_sphinx()

To access the microphone with SpeechRecognition we will use the PyAudio package.
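A minimal sketch of offline transcription with the SpeechRecognition package is shown here, assuming that `SpeechRecognition`, `PyAudio`, and `pocketsphinx` are installed and that a default microphone is available. The function names `normalize` and `listen_once` and the 5-second timeout are illustrative choices, not taken from the paper.

```python
def normalize(text):
    """Lowercase and collapse whitespace so later matching is predictable."""
    return " ".join(text.lower().split())


def listen_once(timeout=5):
    """Record one phrase from the default microphone and transcribe it offline."""
    # Imported lazily so normalize() can be used on machines without audio support.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:  # Microphone access relies on PyAudio
        recognizer.adjust_for_ambient_noise(source)
        audio = recognizer.listen(source, timeout=timeout)
    try:
        # recognize_sphinx runs CMU Sphinx locally (needs pocketsphinx)
        return normalize(recognizer.recognize_sphinx(audio))
    except sr.UnknownValueError:
        return ""  # the speech could not be understood
```

If no speech starts within the timeout, `listen` raises `sr.WaitTimeoutError`, which a caller would likely want to catch and answer with a beep or a spoken prompt.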

This module will be directly connected to the other modules of the project (object detection, distance measurement), which can be invoked through the speech recognizer, for example:

Turn ON “module name” – activates the module whose name is spoken after “Turn ON”.

Distance between me and “the object name” – gives the distance between the user and the object named by the user.

Save Face of “the person’s name” – saves the person’s face under the specified name.

Who is in front of me – tells the user who is standing or sitting in front of him or her.

Call “person’s name or mobile number” – calls the mobile number. If several numbers exist under the same person’s name, it will ask for the mobile number.

Additionally, messaging and emailing features can be added and accessed through the speech recognizer. If the user receives a mail or message, the content is treated as text and read out at the user’s command; an audio file is played at the user’s command.

1. RASPBERRY PI 4 MODULE: [5]

The Raspberry Pi 4 is a recent, low-cost, credit-card-sized computer that plugs into a computer monitor or TV and uses a standard keyboard and mouse. [4] It is a capable little device that enables people of all ages to explore computing and to learn how to program in languages like Scratch and Python.

All over the world, people use the Raspberry Pi to learn programming skills, build hardware projects, do home automation, and even in industrial applications.

[5] The Raspberry Pi is a very cheap computer that runs Linux, but it also provides a set of GPIO (general-purpose input/output) pins that allow you to control electronic components for physical computing and explore the Internet of Things (IoT).

Figure1: Raspberry Pi 4 Module

2. ULTRASONIC SENSOR:

The ultrasonic sensor consists of a transmitter, a receiver, and a transceiver. [1] The transmitter converts an electrical signal into sound waves. The receiver converts the sound waves back into an electrical signal. [1] It also contains crystal oscillators, which perform the stabilization operation in the ultrasonic sensor.

Figure2: Ultrasonic sensor

3. HEADSET:

A headset is a hardware device that connects to a telephone or computer, allowing the user to talk and listen while keeping their hands free.

Headsets are commonly used in technical support and customer service centers, where they allow the employee to talk to a customer while typing information into a computer.

Figure3: Headset
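For the ultrasonic sensor (item 2), the distance to an obstacle follows from the echo's round-trip time: the pulse travels out and back, so the one-way distance is speed × time / 2. The sketch below illustrates that arithmetic under stated assumptions: a speed of sound of about 343 m/s, and the 1kΩ and 2kΩ resistors from the hardware list acting as a voltage divider on a 5 V echo signal (the paper lists these resistors but does not state their role). The function names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at roughly 20 degrees C


def echo_time_to_cm(round_trip_seconds, speed=SPEED_OF_SOUND_M_PER_S):
    """Convert an echo's round-trip time to a one-way distance in centimetres."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the total path.
    return speed * round_trip_seconds / 2 * 100


def divider_output(v_in, r_top, r_bottom):
    """Voltage at the midpoint of a two-resistor divider (assumed echo wiring)."""
    return v_in * r_bottom / (r_top + r_bottom)
```

For example, a round trip of 10 ms corresponds to about 171.5 cm, and `divider_output(5.0, 1000, 2000)` gives about 3.33 V, close to the 3.3 V logic level of the Raspberry Pi's GPIO pins.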

4. PI CAMERA: THE RASPBERRY PI WHICH CONTROLS THE

The Pi camera module is a portable light weight OVERALL OPERATION OF THIS PROPOSED

camera that supports Raspberry Pi. It communicates SYSTEM

with Pi using the MIPI camera serial interface

protocol.

It is normally used in image processing, machine

learning or in surveillance projects.

The Camera Module can be used to take high-

definition video, as well as stills photographs. It

supports 1080p30, 720p60 and VGA90 video modes,

as well as still capture. It attaches via a 15cm ribbon

cable to the CSI port on the Raspberry Pi.

V.CONCLUSION AND FUTURE

ENHANCEMENT

As of now, the whole idea of this project is made in a

simple glove, which also means that there is less

feature available since various other types of modules

Figure4: Pi camera cannot be installed in a small glove. In future, the

modules can be installed in other wearables like shoes,

Ultrasonic Distance Sensors glasses, hat etc. which we can access with the help of

our main module giving the gadget some extra features

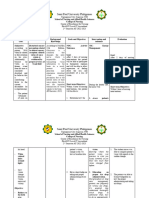

IV.IMPLEMENTATION or the whole venture can be made as a coat, with the

goal that the gadget doesn’t need to be wear one by

DISTANCE MEASUREMENT USING one. Furthermore, the better sensors installation can

ULTRASONIC SENSOR IN RASPBERRY PI. make it more fast and reliable. These are some updates

which can be made in future also the software's can be

upgraded with the supporting functionalities of the

new modules.

In future we will also add so many features like gesture

control for deaf and dump they are also contact with

blind people because they are not contact with blind e

people.

We will also add smart navigation system by this blind

OBJECT DETECTION USING PI CAMERA IN people are navigate easily and go whether they want

RASPBERRY PI. because they cannot able to see maps.

REFERENCES

[1] vpmthane.net/polywebnew/notice/industryproc.pdf

[2] www.ijeat.org/wpcontent/uploads/papers/v9i1s4/A10901291S419.pdf

[3] medium.com/@drojasug/measuring-social-distancing-using-tensorflow-object-detection-api-7c54badb5092

[4] becominghuman.ai/third-eye-in-your-hand-850b77e1d45a

[5] medium.com/@codebugged/the-era-of-credit-card-sized-computer-5087d7bf6e09

[6] wthtjsjs.cn/gallery/8-whjj-july-5520.pdf

[7] www.raspberrypi.org/help/what-is-a-raspberry-pi/

[8] medium.com/@AnandAI/excellent-work-can-i-ask-you-one-question-13cdc040e549

[9] www.analyticsvidhya.com/blog/2020/04/build-your-own-object-detection-model-using-tensorflow-api/

[10] laserstechs.wordpress.com/2017/11/09/for-home-use-check-cosmetic-lasers-for-sale-online/

[11] S. Shoval, I. Ulrich, J. Borenstein. “NavBelt and the GuideCane”, IEEE Transactions on Robotics & Automation. 2003;10(1):9-20.

[12] D. Yuan, R. Manduchi. “Dynamic Environment Exploration Using a Virtual White Cane”, Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), University of California.

[13] J. M. Benjamin, N. A. Ali, A. F. Schepis. “A laser cane for the blind”, Proceedings of San Diego Medical Symposium, 1973, 443-450.

[14] S. Sabarish. “Navigation Tool for Visually Challenged using Microcontroller”, International Journal of Engineering and Advanced Technology (IJEAT), 2013; 2(4):139-143.

[15] wthtjsjs.cn/gallery/8-whjj-july-5520.pdf

[16] Pooja Sharma, S. L. Shimi, S. Chatterji. “A Review on Obstacle Detection and Vision”, International Journal of Science and Research Technology. 2015; 4(1):1-11.

[17] A. A. Tahat. “A wireless ranging system for the blind long-cane utilizing a smart-phone”, in Proceedings of the 10th International Conference on Telecommunications (ConTEL '09), IEEE, Zagreb, Croatia, June 2009, 111-117.

[18] Smart walking stick by Mohammed H. Rana and Sayemil (2013).

[19] The electronic travelling aid for blind navigation and monitoring by Mohan M.S Madulika (2013).

[20] Multi-dimensional walking aid by Olakanmi. O. Oladayo (2014).

[21] 3D ultrasonic stick for the blind by the Osama Bader AlBarm (2014).

[22] Mo, J. P. Lewis, and U. Neumann, “Smart Canvas: A gesture driven intelligent drawing desk system”, in Proc. ACM IUI, 2009, pp. 239-243.

[23] S. Bharathi, A. Ramesh, S. Vivek, Effective navigation for visually impaired by wearable obstacle avoidance system. International Conference on Computing, Electronics and Electrical Technologies (ICCEET), 2012.

[24] K. Xiangxin, W. Yuanlong, L. Mincheol, Vision based guidedog robot system for visually impaired in urban system. 13th International Conference on Control, Automation and Systems (ICCAS), 2013.

[25] Suprabha Potphode, Sneha Kumbhar, Prashant Mhargude, Parvin Kinika. Third Eye for Blind People Using Ultrasonic Vibrating Gloves with Image Processing. e-ISSN: 2395-0056, Volume: 07 Issue: 03 | Mar 2020, www.irjet.net, p-ISSN: 2395-0072.

AUTHOR PROFILE

Ms. Faraj Chisti is working as an assistant professor at ABES Institute of Technology, Ghaziabad. Her areas of interest are machine learning, artificial intelligence, and software engineering.

Ritik Singh is currently pursuing his B.Tech (4th year) degree in Information Technology at ABESIT, Ghaziabad, affiliated with AKTU Lucknow, Uttar Pradesh. His areas of interest are backend development, algorithms, and operating systems.

Rishi Kumar Kamat is currently pursuing his B.Tech (4th year) degree in Information Technology at ABESIT, Ghaziabad, affiliated with AKTU Lucknow, Uttar Pradesh. His area of interest is backend development.
