
FEATURE

Looking Inside the Magical Black Box

A Systems Theory Guide to Managing AI

VOLUME 1 | 2023 ISACA JOURNAL 39

SIMONA AICH

Is an undergraduate student at Ludwigshafen University of Business and Society (Ludwigshafen, Germany) in a cooperative study program with SAP SE, a market leader for enterprise resource planning (ERP) software. She has completed internships in various departments, including consulting and development. Her research interests include the impact of algorithms, a topic she plans to pursue in her master’s degree studies.

GERALD F. BURCH | PH.D.

Is an assistant professor at the University of West Florida (Pensacola, Florida, USA). He teaches courses in information systems and business analytics at both the graduate and undergraduate levels. His research has been published in the ISACA® Journal. He can be reached at gburch@uwf.edu.

Artificial intelligence (AI) algorithms show tremendous potential in guiding decisions; therefore, many enterprises have implemented AI techniques. AI investments reached approximately US$68 billion in 2020.1 The problem is that there are many unknowns, and 65 percent of enterprises cannot explain how their AI tools work.2 Decision makers may place too much trust in the unknown components inside what is referred to as the AI magical black box and, therefore, may unintentionally expose their enterprises to ethical, social and legal threats.

Despite what many believe about this new technology being objective and neutral, AI-based algorithms often merely repeat past practices and patterns. AI can simply automate the status quo.3 In fact, AI-based systems sometimes make problematic, discriminatory or biased decisions4 because they often replicate the problematic, discriminatory or biased processes that were in place before AI was introduced. With the widespread use of AI, this technology affects most of humanity; therefore, it may be time to take a systemwide view to ensure that this technology can be used to make ethical, unbiased decisions.

Systems Theory

Many disciplines have encouraged a systems theory approach to studying phenomena.5 This approach states that inputs are introduced into a system and processes convert these inputs into outputs (figure 1).

FIGURE 1

Systems Theory View of AI

Inputs → Processes → Outputs

An algorithm is defined as a standard procedure that involves a number of steps, which, if followed correctly, can be relied upon to lead to the solution of a particular kind of problem.6 AI algorithms use a defined model to transform inputs into outputs. British statistician George E. P. Box is credited with saying, “All models are wrong, but some are useful.”7 The point is that the world is complex, and it is impossible to build a model that considers all possible variables leading to desirable outcomes. However, a model can help decision makers by providing general guidance on what may happen in the future based on what has happened in the past.

AI algorithms either use predefined models or create their own models to make predictions. This is the basis for categorizing AI algorithms as either symbolic or statistical.8 Symbolic algorithms use a set of rules to transform data into a predicted outcome. The rules define the model, and the user can easily understand the system by reviewing the model. From a systems theory perspective, the inputs and processes are clearly defined. For example, symbolic algorithms are used to develop credit scores, where inputs are defined a priori and processes (calculations) are performed using associated weights and formulas to arrive at an output. This output is then used to decide whether to extend credit.
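The symbolic case can be made concrete with a minimal Python sketch of a rule-based credit score in which the inputs, weights and approval cutoff are all fixed before any data arrive. The input names, weights, base score and cutoff below are hypothetical illustrations, not the FICO formula or any real scorecard.

```python
from dataclasses import dataclass

# Hypothetical scorecard: every input, weight and threshold is chosen a priori.
# These numbers are illustrative assumptions, not a real credit-scoring formula.
WEIGHTS = {
    "on_time_payment_ratio": 400.0,  # share of bills paid on time (0 to 1)
    "credit_utilization": -200.0,    # share of available credit in use (0 to 1)
    "years_of_history": 10.0,        # length of credit history in years
}
BASE_SCORE = 300.0
APPROVAL_CUTOFF = 600.0


@dataclass
class Applicant:
    on_time_payment_ratio: float
    credit_utilization: float
    years_of_history: float


def contributions(applicant: Applicant) -> dict:
    """Inputs -> points per input, so a reviewer can read the whole model."""
    return {name: weight * getattr(applicant, name) for name, weight in WEIGHTS.items()}


def score(applicant: Applicant) -> float:
    """Process: a fixed weighted sum of the predefined inputs."""
    return BASE_SCORE + sum(contributions(applicant).values())


def extend_credit(applicant: Applicant) -> bool:
    """Decision: compare the output with a fixed cutoff."""
    return score(applicant) >= APPROVAL_CUTOFF


applicant = Applicant(on_time_payment_ratio=0.95, credit_utilization=0.30, years_of_history=8)
print(contributions(applicant))
print(score(applicant), extend_credit(applicant))
```

Because every point of the score can be traced to a named input and a published weight, the whole model can be reviewed line by line, which is the transparency the symbolic category offers and the statistical category often lacks.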

Statistical algorithms, in contrast, often allow the computer to select the most important inputs to develop a new model (process). These models are usually more sophisticated than those developed by symbolic algorithms but still predict outcomes based on inputs. One issue associated with the use of statistical algorithms is that the user may not know which inputs have been chosen or which processes have been used to convert inputs into outputs and, therefore, may be unable to understand the model they are utilizing to make decisions.

LOOKING FOR MORE?

• Read Audit Practitioner’s Guide to Machine Learning, Part 1: Technology. www.isaca.org/audit-practitioner-guide-to-ML-part-1

• Learn more about, discuss and collaborate on emerging technology in ISACA’s Online Forums. https://engage.isaca.org/onlineforums

Systems theory shows that the output of these models depends heavily on the inputs (data) provided and the types of algorithms (processes) chosen. Subsequent use of the AI model’s outputs to make decisions can easily lead to bias, resulting in systematic and unknowing discrimination against individuals or groups.9

Computer System Bias

The systems theory discussion of AI algorithms can be extended by considering how biases affect outcomes. Bias has been defined as “any basis for choosing one generalization over another, other than strict consistency with the observed training instances.”10 This is often seen where one outcome is more likely to be from one set of functions than from another. Bias is often seen either in the collection of data, where a non-representative sample is taken, which affects the outcome, or in the algorithms themselves, which lean toward one outcome. Decisions based on an AI algorithm’s outputs are often of great interest to the general public because they can have a major impact on people’s lives, such as whether they are selected for a job interview or whether they are approved for a home loan. In IT, three categories of bias can be distinguished: preexisting, technical and emergent.

Preexisting Bias

Sometimes, a bias established in society (preexisting) is transferred into software. This can happen either explicitly, such as when a discriminatory attitude is deliberately built into the algorithm, or implicitly, such as when a profiling algorithm is trained on biased historical data. Preexisting bias is often introduced at the input stage of the system. One example of a preexisting bias is the use of the classic Fair Isaac Corporation (FICO) algorithm to calculate a creditworthiness score. In this case, cultural biases associated with the definition of traditional credit can result in discrimination because some cultures place more emphasis on positive payment.11

Technical Bias

Technical bias is often the result of computer limitations associated with hardware, software or peripherals. Technical specifications can affect a system’s processes, leading to certain groups of people being treated differently from others. This may occur when standards do not allow certain characteristics to be recorded, or it can be the result of technical limitations related to the software or programming of algorithms. These defects in the algorithm are often seen in the processing stage of the system. An example of technical bias is the inability to reproduce an answer using an AI algorithm. This might occur when the data used to train (or develop) the model are altered slightly, resulting in a different model being produced. Being unable to reproduce a model with the same or similar data leads to doubt about the model’s overall validity. An example of this type of bias occurred at Amazon, when women’s résumés were excluded based on the system’s selection of words in applicants’ résumés. Amazon tried to remove the bias but eventually had to abandon the AI algorithm because it could not identify the underlying technical logic that resulted in discriminatory outputs.12
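The reproducibility problem described under Technical Bias can be probed directly: rerun the same training procedure on slightly altered copies of the data and check whether it keeps producing the same model. The Python sketch below uses a deliberately simple stand-in for a statistical algorithm (it selects the single input most correlated with the target). The function names, the 10 percent drop rate and the 50 runs are illustrative assumptions, not a standard test.

```python
import random


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def select_top_input(rows, inputs, target):
    """Toy statistical step: the computer picks the input most correlated with the target."""
    ys = [row[target] for row in rows]
    return max(inputs, key=lambda name: abs(pearson([row[name] for row in rows], ys)))


def selection_stability(rows, inputs, target, runs=50, drop_fraction=0.1, seed=0):
    """Retrain on copies of the data with a random share of rows removed and report
    how often each input ends up selected (1.0 means the choice never changed)."""
    rng = random.Random(seed)
    keep = len(rows) - max(1, int(len(rows) * drop_fraction))
    counts = {name: 0 for name in inputs}
    for _ in range(runs):
        counts[select_top_input(rng.sample(rows, keep), inputs, target)] += 1
    return {name: count / runs for name, count in counts.items()}


# Hypothetical data: two inputs that track the outcome almost equally well.
rng = random.Random(1)
rows = []
for _ in range(60):
    signal = rng.gauss(0, 1)
    rows.append({
        "input_a": signal + rng.gauss(0, 0.5),
        "input_b": signal + rng.gauss(0, 0.5),
        "outcome": signal,
    })
print(selection_stability(rows, ["input_a", "input_b"], "outcome"))
```

When two inputs are nearly interchangeable, as in this made-up data, the selected input is likely to flip between runs, so neither frequency reaches 1.0. That kind of instability is exactly what should raise doubts about a model’s validity before its outputs are used.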

system’s selection of words in applicants’ r sum s.

Amazon tried to remove the bias but eventually had

to abandon the AI algorithm because it could not

Conducting correlation studies of the variables

identify the underlying technical logic that resulted in before introducing them into the system

discriminatory outputs.12

may provide an early indication of potential

Emergent Bias discrimination problems.

Emergent bias happens due to the incorrect

interpretation of an output or when software is used for

unintended purposes. This type of bias can arise over

time, such as when values or processes change, but the hidden inside the AI black box (fig re ). The AI

technology does not adapt1 or when decision makers system can result in outcomes that are unethical or

apply decision criteria based on the incorrect output of discriminatory. Therefore, it is critical that decision

the algorithm. Emergent bias normally occurs when the makers understand what happens inside the box—at

output is being converted into a decision. each step, and with each type of bias—before using AI

outputs to make decisions.

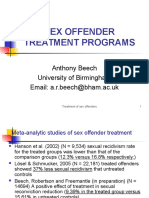

An example of emergent bias is the use of

Correctional Offender Management Profiling for

Analyzing the AI Black Box and

Alternative Sanctions (COMPAS), which is used by

some US court systems to determine the likelihood of

Removing Bias

a defendant’s recidivism. The algorithms are trained After understanding how bias can find its way into the

primarily with historical data on crime statistics, AI black box, the goal of managers is to identify and

which are based on statistical correlations rather remove the biases. As shown in fig re , there are

than causal relationships. As a result, bias related five steps to avoid bias and discrimination.

to ethnicity and financial means often results in

minorities receiving a bad prognosis from the Step 1: Inputs

model.14 A study of more than 7,000 people arrested The goal of AI algorithms is to develop outputs

in the US State of lorida evaluated the accuracy of (predictions) based on inputs. Therefore, it is possible

COMPAS in predicting recidivism. It found that 44.9 that AI algorithms will detect relationships that

percent of the African Americans defendants labeled could be discriminatory based on any given input

high risk did not reoffend. Making decisions using this variable. Including inputs such as race, ethnicity,

model’s outcomes would, therefore, negatively affect gender and age authorizes the system to make

nearly 4 percent of African American defendants recommendations based on these inputs, which is,

for no valid reason. Conversely, only 2 . percent of by definition, discrimination. And making decisions

white defendants labeled as high risk in the study did based on these factors is also discrimination. This

not reoffend.1 nowingly using biased outcomes to is not an admonishment to never use these inputs

make decisions introduces emergent bias that may rather, it is a warning that discrimination could be

lead to negative outcomes. claimed. or example, automobile insurance models

predict accident rates based on age and gender.

These forms of bias can occur in different Most people would probably agree that this is fair, so

combinations,1 and they point to the mystery including age and gender would be appropriate inputs

FIGURE 2

AI Black Box

Inputs Processes Outputs Decisions Outcomes

Preexisting Technical Emergent

Bias Bias Bias

VOLUME 1 | 2023 ISACA JOURNAL 41

FIGURE 3

How to Avoid AI Algorithm Bias and Discrimination

ꞏ Know chosen inputs.

ꞏ Avoid inputs that lead to discrimination.

Inputs

ꞏ Conduct correlation and moderation

studies of inputs and outputs.

ꞏ Be transparent about inputs.

ꞏ Identify and remove preexisting biases.

ꞏ Know chosen AI algorithms. Decisions Outcomes

Processes

ꞏ Adjust inputs into AI algorithms to

identify key inputs. ꞏ Conduct potential ꞏ Monitor outcomes.

ꞏ Choose explainable algorithms. discrimination and ꞏ Conduct discrimination

ꞏ Identify and remove technical biases. ethical studies. and ethics studies.

ꞏ Identify and remove ꞏ Monitor environmental

emergent biases. changes.

ꞏ Conduct correlation and moderation

Outputs

studies of inputs and outputs.

ꞏ Report results to decision makers.

ꞏ Determine risk levels to see if decisions

can be automated.
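A correlation study of this kind can start very simply: measure how strongly each candidate input tracks a protected attribute before the input is admitted to the model, which also surfaces proxies such as neighborhood-level economic variables. The Python sketch below assumes the protected attribute is coded 0/1 and uses Pearson correlation with a hypothetical flagging threshold of 0.3. The column names, data and threshold are illustrative only, and a simple correlation screen will not catch nonlinear relationships or combinations of inputs.

```python
def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def screen_inputs(rows, candidate_inputs, protected):
    """Correlation of each candidate input with the protected attribute."""
    protected_values = [row[protected] for row in rows]
    return {
        name: pearson([row[name] for row in rows], protected_values)
        for name in candidate_inputs
    }


def flag_possible_proxies(rows, candidate_inputs, protected, threshold=0.3):
    """Names of inputs whose absolute correlation meets the (hypothetical) threshold."""
    report = screen_inputs(rows, candidate_inputs, protected)
    return sorted(name for name, r in report.items() if abs(r) >= threshold)


# Hypothetical applicant records; "group" is the protected attribute coded 0/1.
rows = [
    {"group": 0, "neighborhood_income": 62, "years_experience": 3},
    {"group": 0, "neighborhood_income": 58, "years_experience": 7},
    {"group": 0, "neighborhood_income": 65, "years_experience": 5},
    {"group": 0, "neighborhood_income": 60, "years_experience": 1},
    {"group": 1, "neighborhood_income": 35, "years_experience": 3},
    {"group": 1, "neighborhood_income": 31, "years_experience": 7},
    {"group": 1, "neighborhood_income": 38, "years_experience": 5},
    {"group": 1, "neighborhood_income": 33, "years_experience": 1},
]
candidates = ["neighborhood_income", "years_experience"]
for name, r in screen_inputs(rows, candidates, "group").items():
    print(f"{name}: r = {r:+.2f}")
print("Review before use:", flag_possible_proxies(rows, candidates, "group"))
```

A flagged input is not automatically excluded; the screen simply tells decision makers which inputs need a documented justification, in the same way the article treats age and gender in accident risk models.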

Preexisting bias can also be introduced with inputs. This can be done by choosing to include potentially discriminating inputs or by choosing other inputs that may act as proxies for gender, age and ethnicity, such as personal economic variables, which can stand in for these characteristics because of socioeconomic relationships. Decision makers must know which inputs are being used by the AI algorithms.

Step 2: Processes

The algorithms used to convert inputs to outputs must be understood at the highest possible level of detail. This is easy for symbolic algorithms, but much more difficult for some statistical AI techniques. Decision makers should request that the inputs used in developing and training statistical AI models be identified to determine which variables are being used. Another consideration is to ascertain why a particular AI technique was chosen and to determine the incremental explanatory power that a model that cannot be explained offers over one that can be explained. Different AI algorithms should be tested to determine the accuracy of each one, and further investigation into inputs is warranted when unexplainable models significantly outperform explainable models.

Investigating multiple models can also help identify technical bias. For example, gone are the days when weather forecasters relied on just one model. It is much more common now for spaghetti models to be used, giving an overview of what many models are predicting instead of relying on just one.

After an algorithm has been developed, user acceptance testing (UAT) is an important step to ensure that the algorithm is truly doing what it was designed to do. UAT should examine all aspects of an algorithm and the code itself.17 For nondiscriminatory AI applications, it is important to consider justice as a target value and to provide people disadvantaged by an AI-based decision with the ability to enforce their rights.18

Step 3: Outputs

This is the last step before a decision is made. Correlation and moderation studies of the outputs and the most common inputs associated with discrimination (e.g., gender, race, ethnicity) should be conducted. The results should be reported to decision makers to ensure that they are aware of how inputs are related to outputs.

Step 4: Decisions

The AI system is reconnected to the outside environment during this step. Decision makers must understand that decisions made using AI have consequences for the people or systems associated with those decisions. It is very important at this point to analyze whether discrimination can result from the decisions being made. This is especially important when the decision is directly connected to the output without any human intervention. However, it is important to note that human interaction does not eliminate the possibility of emergent bias, as human beings are inherently biased.19
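The COMPAS example suggests a practical question for this step: who bears the cost of the model’s mistakes? One way to analyze whether decisions could be discriminatory is to compare, group by group, how often people received the adverse decision even though the predicted event did not happen (the false positive rate). The Python sketch below is a minimal illustration. The record layout, group labels and the 1.25 disparity tolerance are assumptions; which error rate to compare, and how much disparity to tolerate, are policy decisions rather than technical defaults.

```python
from collections import defaultdict


def false_positive_rates(records):
    """records: iterable of (group, adverse_decision, event_occurred) tuples.
    Returns, per group, the share of people without the event who still got the adverse decision."""
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for group, adverse_decision, event_occurred in records:
        if not event_occurred:
            negatives[group] += 1
            if adverse_decision:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


def disparity_ratio(rates):
    """Highest group rate divided by lowest (1.0 means identical rates)."""
    highest, lowest = max(rates.values()), min(rates.values())
    if highest == 0:
        return 1.0
    if lowest == 0:
        return float("inf")
    return highest / lowest


def needs_review(records, tolerance=1.25):
    """True when the gap in false positive rates exceeds the (hypothetical) tolerance."""
    return disparity_ratio(false_positive_rates(records)) > tolerance


# Hypothetical decisions: (group, flagged as high risk, later reoffended)
records = (
    [("group_1", i < 2, False) for i in range(10)]    # 2 of 10 non-reoffenders flagged
    + [("group_2", i < 4, False) for i in range(10)]  # 4 of 10 non-reoffenders flagged
    + [("group_1", True, True), ("group_2", True, True)]
)
print(false_positive_rates(records))
print(disparity_ratio(false_positive_rates(records)), needs_review(records))
```

Running the same comparison on a model’s outputs before they are turned into decisions is one concrete form of the Step 3 studies; running it on the decisions themselves matters most when no human reviews them.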

Step 5: Outcomes

At this point, the decisions have interacted with the environment and the effects are known, but only if the enterprise follows up and measures the actual outcomes. Too often, enterprises do not monitor what has happened and continue to use the same inputs and processes, unknowingly allowing biases to shape the outputs that influence the decision-making process.

The Final Check: Ethics

Understanding inputs, processes, outputs, decisions and outcomes may help ensure that bias has been removed from an AI algorithm and potential discrimination has been identified. However, enterprises can follow all these steps and still have ethical issues associated with the decision-making process. The life cycle model for developing ethical AI is a great approach for identifying and removing ethical AI issues.20 What decision makers may still be missing is a framework for deciding what is unethical.

There are many frameworks that address the ethical aspects of algorithms. Some of these frameworks are specialized, covering a particular area such as healthcare.21 Two frameworks, the PAPA model22 and the AI4People model,23 have gained widespread application. They present an interesting contrast since the former was developed at the end of the 1980s and the latter was developed in 2018, in a completely different world of technology.

PAPA Model

The PAPA model identifies four key issues to preserve human dignity in the information age:

1. Privacy: The amount of private information one wants to share with others and the amount of information that is intended to stay private
2. Accuracy: Who is responsible for the correctness of information
3. Property: Who owns different types of information and the associated infrastructure
4. Accessibility: How information is made available to different people and under what circumstances access is given24

AI4People Model

The AI4People model is much more recent. It was developed by analyzing six existing ethical frameworks and identifying 47 principles for making ethical decisions.25 Five core principles emerged:

1. Beneficence: The promotion of well-being and the preservation of the planet
2. Nonmaleficence: The avoidance of personal privacy violations and the limitations of AI capabilities, including not only humans’ intent to misuse AI but also the sometimes unpredictable behavior of machines
3. Autonomy: The balanced decision-making power of humans and AI
4. Justice: The preservation of solidarity and prevention of discrimination
5. Explicability: Enabling of the other principles by making them understandable, transparent and accountable26

Figure 4 compares these models and presents ethical AI algorithm considerations for decision makers.

These two ethical models show that enterprises should consider other outcome variables that can determine the ethical implications of their decisions. This should be done not only by the software developers, but also by the stakeholders involved in the algorithm.27 Decision makers should evaluate each of the areas listed in figure 4 and determine which additional outcome variables they should collect and track to ensure that their AI algorithms are not negatively impacting ethical interests. The limited views of one decision maker may be inadequate for this task. One option is to assemble a diverse board to evaluate these areas and make recommendations about which outcomes to track. This may help expose initially unidentified concerns that can be incorporated into the AI algorithms’ redesign to ensure that ethical issues are addressed.28
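Tracking outcome variables over time, as Step 5 and the ethics review both call for, can begin with a periodic report of favorable-outcome rates by group. The Python sketch below assumes each followed-up decision is recorded with a reporting period, a group label and whether the outcome was favorable for the person. The field names and the 0.1 gap tolerance are hypothetical, and choosing which outcome variables to track is the job of the decision makers (or the diverse board) described above.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    period: str      # reporting period label, e.g., "2023-Q1" (hypothetical)
    group: str       # group whose treatment is being monitored
    favorable: bool  # did the decision turn out well for the person?


def favorable_rates(outcomes):
    """Return {period: {group: share of favorable outcomes}}."""
    totals, favorable = {}, {}
    for o in outcomes:
        key = (o.period, o.group)
        totals[key] = totals.get(key, 0) + 1
        favorable[key] = favorable.get(key, 0) + int(o.favorable)
    rates = {}
    for (period, group), count in totals.items():
        rates.setdefault(period, {})[group] = favorable[(period, group)] / count
    return rates


def periods_needing_review(outcomes, max_gap=0.1):
    """List (period, gap) where the best- and worst-served groups differ by more than max_gap."""
    flagged = []
    for period, by_group in sorted(favorable_rates(outcomes).items()):
        gap = max(by_group.values()) - min(by_group.values())
        if gap > max_gap:
            flagged.append((period, round(gap, 3)))
    return flagged


# Hypothetical follow-up data: a gap between groups opens in the second quarter.
outcomes = (
    [Outcome("2023-Q1", "A", i < 8) for i in range(10)]
    + [Outcome("2023-Q1", "B", i < 8) for i in range(10)]
    + [Outcome("2023-Q2", "A", i < 8) for i in range(10)]
    + [Outcome("2023-Q2", "B", i < 5) for i in range(10)]
)
print(periods_needing_review(outcomes))
```

A flagged period is a prompt to revisit the inputs and processes, as Step 5 recommends, rather than proof of discrimination on its own; environmental changes can also move these rates.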

FIGURE 4

Comparison of Ethical Models and AI Algorithm Considerations

| PAPA | AI4People | Algorithm Considerations |
|---|---|---|
| Privacy | Nonmaleficence | Avoid violations of personal privacy, security and possible protective measures; do no harm. |
| Accuracy | Justice | Produce nondiscriminatory outputs and preserve solidarity by using accurate data. |
|  | Explicability | Provide understandable and transparent inputs and processes with associated accountability for outputs, decisions and outcomes. |
| Property | Not addressed | Identify ownership of information and related infrastructure. |
| Accessibility | Not addressed | Discuss how information is made available to different people and under what circumstances. |
|  | Beneficence | Promote human well-being and planet preservation. |
|  | Autonomy | Balance decision-making power between humans and AI. |

Conclusion

The use of AI algorithms can contribute to human self-realization and result in increased effectiveness and efficiency. Therefore, this technology is already being applied in numerous industries. However, there are risk factors and ethical concerns regarding the collection and processing of personal data and compliance with societal norms and values. The prerequisites for an ethical AI algorithm are unbiased data, explainable processes, unbiased interpretation of the algorithm’s output and monitoring of the outcomes for ethical, legal and societal effects.

AI algorithms can improve organizational performance by better predicting future outcomes. However, decision makers are not absolved from understanding the inputs, processes, outputs and outcomes of the decisions made by AI. Taking a systems theory approach may help enterprises ensure that the legal, ethical and social aspects of AI algorithms are examined. Outcomes based on AI algorithms must not be assumed to be rigid and finite because society and technology are constantly changing. Similarly, ethical principles may change too. When the environment for an algorithm changes, the algorithm must be adapted, even if it requires going beyond the original specifications.29

Endnotes

1 Zoldi, S.; “It’s 2021. Do You Know What Your AI Is Doing?” FICO Blog, 2 May 2021, https://www.fico.com/blogs/its-2021-do-you-know-what-your-ai-doing

2 Ibid.

3 O’Neil, C.; “The Era of Blind Faith in Big Data Must End,” TED Talks, 2017, https://www.ted.com/talks/cathy_o_neil_the_era_of_blind_faith_in_big_data_must_end

4 ielinski, L.; et al.; Atlas of Automation: Automated Decision-Making and Participation in Germany, AlgorithmWatch, 2019, https://atlas.algorithmwatch.org/

5 Johnson, R. A.; F. E. Kast; J. E. Rosenzweig; “Systems Theory and Management,” Management Science, vol. 10, iss. , January 1964, p. 19 – 9 , https://www.jstor.org/stable/2627306

6 Haylock, D.; F. Thangata; Key Concepts in Teaching Primary Mathematics, Sage, United Kingdom, 2007

7 orruitiner, C. D.; “All Models Are Wrong,” Medium, 1 January 2019, https://medium.com/the-philosophers-stone/all-models-are-wrong-4c407bc1705

8 Pearce, G.; “Focal Points for Auditable and Explainable AI,” ISACA® Journal, vol. 4, 2022, https://www.isaca.org/archives

9 Friedman, B.; H. Nissenbaum; “Bias in Computer Systems,” ACM Transactions on Information Systems, vol. 14, July 1996, p. 330–347, https://doi.org/10.1145/230538.230561

10 Mitchell, T. M.; The Need for Biases in Learning Generalizations, Rutgers University, New Brunswick, New Jersey, USA, 1980, https://www.cs.cmu.edu/~tom/pubs/NeedForBias_1980.pdf

11 Scarpino, .; “Evaluating Ethical Challenges in AI and ML,” ISACA Journal, vol. 4, 2022, https://www.isaca.org/archives

12 Ibid.

13 Op cit Friedman and Nissenbaum

14 Angwin, J.; J. Larson; S. Mattu; L. Kirchner; “Machine Bias,” ProPublica, 23 May 2016, https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

15 Ibid.

16 Beck, S.; et al.; Künstliche Intelligenz und Diskriminierung, 7 March 2019, https://www.plattform-lernende-systeme.de/publikationen-details/kuenstliche-intelligenz-und-diskriminierung-herausforderungen-und-loesungsansaetze.html

17 Baxter, C.; “Algorithms and Audit Basics,” ISACA Journal, vol. , 2021, https://www.isaca.org/archives

18 Op cit Beck

19 Op cit Scarpino

20 Ibid.

21 Xafis, V.; G. O. Schaefer; M. K. Labude; et al.; “An Ethics Framework for Big Data in Health and Research,” ABR, vol. 11, 1 October 2019, p. 227–254, https://doi.org/10.1007/S41649-019-00099-x

22 Mason, R.; “Four Ethical Issues of the Information Age,” MIS Quarterly, vol. 10, 1986, p. 5–12

23 Floridi, L.; J. Cowls; M. Beltrametti; et al.; “AI4People - An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations,” Minds and Machines, vol. 28, December 2018, p. 689–707, https://doi.org/10.1007/S11023-018-9482-5

24 Op cit Mason

25 Op cit Floridi et al.

26 Barton, M. C.; J. Pöppelbuß; “Prinzipien für die ethische Nutzung künstlicher Intelligenz,” HMD, vol. 59, 2022, p. 468–481, https://doi.org/10.1365/S40702-022-00850-3

27 uber, .; “Data Ethics Frameworks,” Information, Wissenschaft and Praxis, vol. 72, iss. - , 2021, p. 291–298, https://doi.org/10.1565/iwp-2021-2178

28 van Bruxvoort, X.; M. van Keulen; “Framework for Assessing Ethical Aspects of Algorithms and Their Encompassing Socio-Technical System,” Applied Science, vol. 11, 2021, p. 11187, https://doi.org/10.3390/app112311187

29 Pearce, G.; M. Kotopski; “Algorithms and the Enterprise Governance of AI,” ISACA Journal, vol. 4, 2021, https://www.isaca.org/archives


- English ProjectDocument17 pagesEnglish Projectaravee3010No ratings yet

- Final Presentation 5-22Document47 pagesFinal Presentation 5-22api-285030242No ratings yet

- Rehabilitation Programs On The Behavior of Juveniles in Manga Children's Remand Home, Nyamira County - KenyaDocument7 pagesRehabilitation Programs On The Behavior of Juveniles in Manga Children's Remand Home, Nyamira County - KenyaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- (Criminology)Document27 pages(Criminology)manjeetNo ratings yet

- Fem 2015 66 IrDocument54 pagesFem 2015 66 IrzulkaberNo ratings yet

- Us Prison System Argumentative Essay Final DraftDocument8 pagesUs Prison System Argumentative Essay Final Draftapi-395015790No ratings yet

- Komatsu Forklift Fg20 12 To Fg30sh 12 Fd20 12 To Fd30 12 Shop Manual Sm050Document24 pagesKomatsu Forklift Fg20 12 To Fg30sh 12 Fd20 12 To Fd30 12 Shop Manual Sm050pumymuma100% (43)

- Negative Impacts That Prison Has On Mentally Ill PeopleDocument15 pagesNegative Impacts That Prison Has On Mentally Ill PeopleIAN100% (1)

- Analysis of The Causes and Effects of Recidivism in The Nigerian Prison System Otu, M - SorochiDocument10 pagesAnalysis of The Causes and Effects of Recidivism in The Nigerian Prison System Otu, M - SorochiFahad MemonNo ratings yet

- Ashkar - Views From The Inside Young Offenders' Subjective Experiences of IncarcerationDocument14 pagesAshkar - Views From The Inside Young Offenders' Subjective Experiences of IncarcerationZoe PeregrinaNo ratings yet

- Promoting Workforce Strategies For Reintegrating Ex OffendersDocument19 pagesPromoting Workforce Strategies For Reintegrating Ex Offendersaflee123No ratings yet

- The Therapeutic PrisonDocument16 pagesThe Therapeutic PrisonjavvnNo ratings yet

- RecidivistDocument7 pagesRecidivistElijahBactolNo ratings yet

- Community's Perception Towards Women Deprived of LibertyDocument6 pagesCommunity's Perception Towards Women Deprived of LibertyInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- II Individual Activity Spider MapDocument3 pagesII Individual Activity Spider Mapapi-3412752510% (3)