Professional Documents

Culture Documents

Lecture Notes Ling2019 1

Uploaded by

Phúc NguyễnOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Lecture Notes Ling2019 1

Uploaded by

Phúc NguyễnCopyright:

Available Formats

lOMoARcPSD|27553903

49275 Lecture Notes Ling(2019 )-1

Neural Networks and Fuzzy Logic (University of Technology Sydney)

Studocu is not sponsored or endorsed by any college or university

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Steve Ling

School of Biomedical Engineering

Faculty of Engineering and Information Technology

University of Technology, Sydney

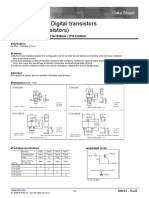

Fuzzy input1: Heart rate

1

Output membership value

0.8

0.6

VL L M H VH

0.4

0.2

0

0.5 1 1.5 2 2.5

Normailized heat rate

Fuzzy input2: Corrected QT interval

1

Output membership value

0.8

0.6

VL L M H VH

0.4

0.2

0

Fuzzy

0.7 0.8 0.9 1 1.1 1.2 1.3 1.4 1.5

Normailized corrected QT interval Logic

UTS

March 2019

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

49275 Neural Networks and Fuzzy Logic

Steve SH Ling

CONTENTS

1. INTRODUCTION TO NEURAL NETWORKS AND FUZZY LOGIC

1.1 Model-Free Systems 1

1.2 Important Milestones 2

1.2.1 Neural Networks 2

1.2.2 Fuzzy Systems 4

1.3 Various Structures 5

1.3.1 Neural Networks 5

1.3.2 Fuzzy Systems 7

1.4 Introduction to Neural Networks 8

1.4.1 Biological Neurons 8

1.4.2 Simple Neuron model 10

1.4.3 Architectures, Output Characteristics and Learning Algorithms 11

1.4.4 Applications of neural network 12

1.5 Introduction to Fuzzy Logic 13

1.5.1 Fuzzy Set Theory 13

1.5.2 Support Set 14

1.5.3 Membership Functions 14

1.5.4 Fuzzy Set Operations 16

1.5.5 Extension Principle 18

1.5.6 Linguistic Hedges 18

References 20

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

2. FUNDAMENTAL CONCEPTS OF NEURAL NETWORKS

2.1 Neuron Modelling for Artificial Neural Systems 23

2.1.1 McCulloch-Pitts Neuron Model 23

2.1.2 Perceptrons 26

2.2 Basic Network Architectures 30

2.2.1 Feedforward Network 30

2.2.2 Recurrent Network 31

2.3 Learning Rules 32

2.3.1 Supervised and Unsupervised Learning 32

2.3.2 The General Learning Rule 32

2.3.3 Hebbian Learning Rule 33

2.3.4 Discrete Perceptron Learning Rule 34

2.3.5 Delta Learning Rule 35

2.3.6 Widrow-Hoff Learning Rule 37

2.3.7 Summary of learning rule and their properties 38

References 38

3. FUNDAMENTAL CONCEPTS OF FUZZY LOGIC AND FUZZY

CONTROLLER

3.1 Fundamental concepts of fuzzy logic 39

3.1.1 Fuzzy Relations 39

3.1.2 Composition of Fuzzy Relations 40

3.2 Fuzzy Logic Control System 42

3.2.1 System Variables 43

3.2.2 Fuzzification 44

3.2.3 Fuzzy Control Rules and Rule Base 45

3.2.4 Reasoning Techniques 49

ii

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

3.2.5 Defuzzification 51

3.3 Closed Loop Fuzzy Logic Control 56

3.4 Self-Organising Fuzzy Logic Controller 66

3.4.1 Structure of a SOFLC 66

3.4.2 Performance Index Table 67

3.4.3 Rule-base Generation and Modification 68

3.4.4 Self-Organising Procedure 68

3.4.5 Remarks 69

References 70

4. SINGLE-LAYER FEEDFORWRD NEURAL NETWORKS AND

RECURRENT NEURAL NETWORK

4.1 Single-Layer Perceptron Classifiers 71

4.1.1 Classification Model 71

4.1.2 Discriminant Functions 73

4.1.3 Linear Classifier 74

4.1.4 Minimum-distance classifier 77

4.1.5 Non-parametric Training Concept 80

4.1.6 Training and Classification using the Discrete Perceptron 83

4.1.7 Single-Layer Continuous Perceptron Networks 85

4.1.8 Multi-Category Single-Layer Perceptron Networks 89

4.2 Single-Layer Feedback (Recurrent) Network 92

References 96

iii

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

5. MULTI-LAYER FEEDFORWRD NEURAL NETWORKS

5.1. Linearly Nonseparable Patterns 97

5.2 Delta Learning Rule for Multi-Perceptron Layer 100

5.3 Generalised Delta Learning Rule (Error Back Propagation Training) 101

5.4 Learning Factors 104

5.4.1 Evaluate the network performance 104

5.4.2 Initial Weights 106

5.4.3 Learning Constant 106

5.4.4 Adaptive Learning rate 107

5.4.5 Momentum Method 107

5.4.6 Network Architecture and Data Representation 109

5.5 Batch Mode Training 113

5.6 Early Stopping Method of Training 114

References 115

6. INTRODUCTION TO CONVOLUTIONAL NEURAL NETWORKS

6.1 Motivation 116

6.2 Architecture of Convolutional Neural Networks 117

6.2.1 Convolutional Layer 117

6.2.1.1 Local Connectivity 118

6.2.1.2 Spatial Arrangement 119

6.2.1.3 Parameter Sharing 121

6.2.2 Pooling Layer 124

6.2.3 Fully-connected Layer 128

6.2.4 Softmax 128

iv

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

6.3 Optimization Algorithms for Training Deep Models 129

6.3.1 Stochastic Gradient Descent 129

6.3.2 Momentum 130

6.3.3 Parameter initialization strategy 132

6.3.4 Algorithms with Adaptive Learning Rates 132

6.3.4.1 AdaGrad 133

6.3.4.2 RMSProp 134

6.3.4.3 Adam 135

6.3.4.4 Choosing the Right Optimization Algorithm 135

6.3.5 Batch Normalization 136

References 137

7. GENETIC ALGORITHMS

7.1 Introduction to Genetic Algorithm 138

7.2 Optimisation of a simple function 139

7.2.1 Representation 140

7.2.2 Initial population 141

7.2.3 Evaluation function 141

7.2.4 Genetic operators 142

7.2.5 Parameters 143

7.2.6 Experimental results 143

7.3 Genetic Algorithms: How do they work? 144

7.4 Real-coded Genetic Algorithms 155

7.4.1 Crossover operations 157

7.4.1.1 Single-point crossover 157

7.4.1.2 Arithmetic crossover 157

7.4.1.3 Blend- crossover 158

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

7.4.2 Mutation operations 158

7.4.2.1 Uniform mutation 158

7.4.2.2 Non-uniform mutation 159

7.5 Training the neural network using Genetic Algorithm 159

References 161

vi

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

CHAPTER ONE

INTRODUCTION TO NEURAL NETWORKS AND FUZZY

LOGIC

_______________________________________________________

Chapter 1.1 Model-Free Systems

In a world of evolving complexity and variety, although events are never the same, there

is some continuity, similarity and predictability which allow us to generalise future

events from past experience. Two techniques, neural networks and fuzzy systems, share

the common ability to work well in this natural environment which is riddled with

difficulty arising from uncertainty, imprecision, and noise.

Neural networks and fuzzy systems estimate functions from sample data. Deterministic and

statistical approaches also estimate functions, however they require mathematical models.

Neural networks and fuzzy systems are model-free estimators as they do not require the

development of system models such as transfer functions and state-space representations.

The operational framework of neural networks and fuzzy systems is symbolic.

Neural networks theory has its structure embedded in the mathematical fields of

dynamical systems, optimal and adaptive control, and statistics. Fuzzy theory

encompasses these fields and others such as probability, mathematical logic, and

nonlinear control. Applications of neural networks include high speed moderns, long

distance telephone calls, airport bomb detectors, medical imaging, biomedical signal

classification systems and handwritten character and speech recognition systems.

Applications of fuzzy systems include subway systems, elevator and traffic light scheduling

systems. Fuzzy systems are also used to auto-focus camcorders, smart home systems,

biomedical instrumentation, and to control smartly household appliances such as air

conditioners, washing machines, vacuum cleaners, and refrigerators.

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 1.2 Important Milestones

1.2.1 Neural Networks

McCulloch and Pitts outlined the first formal element of an elementary computing neuron in

1943. The connections between neurons in a network fundamentally determine the dynamics

of the network. For this reason, the field known today as Neural Networks was originally

called Connectionism. Networks of this type seemed appropriate for modelling not only

symbolic logic, but also perception and behaviour.

In 1958, Rosenblatt found models based on McCulloch & Pitts neurons to be

unbiological. They required implausibly precise connections and timing, and they did not take

into account model variations in real neural networks. Rosenblatt then developed a theory of

statistical separability in a class of network models called perceptrons. It became clear later

that the perceptron was incapable of learning to distinguish classes of patterns which were not

linearly separable.

The solution of this problem is back-propagation, a method of training a neural network to

approximate any function, including arbitrary complex functions. It is the only form of neural

network which has produced a significant number of commercial applications.

In 1988, Yann LeCun developed a fundamental convolutional neural network which named

LeNet5. The image features are distributed across the entire image, and convolutions with

learnable parameters are an effective way to extract similar features at multiple location with few

parameters. In 2014, Christian Szegedy from Google begun a quest aimed at reducing the

computational burden of deep neural networks, and devised the GoogLeNet the first Inception

architecture.

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

The important milestones in the development of artificial neural systems may be

summarised in Table 1 . 1.

Table 1 . 1 Milestones in the development of artificial neural systems

1943 McCulloch, Pitts McCulloch & Pitts neuron model

1949 Hebb Hebbian learning rule

1954 Minsky First neurocomputer

1958 Rosenblatt Rosenblatt perceptron

1960 Widrow, Hoff ADALINE (Adaptive Linear Element)

1962 Widrow, Hoff Widrow-Hoff learning rule

1974 Grossberg, Cohen ART (Adaptive Resonance Theory)

1974 Werbos Early exposition of back-propagation

1977 Kohonen Associative memory

1980 Fukushima, Miyaka Neocognitron

1982 Hopfield Recurrent neural networks

1982 Kohonen Self-organising maps

1986 Rumelhart, Hinton, Williams Back-propagation

1988 Broomhead, Lowe Radial basis function

1988 Yann LeCun Convolutional neural network (LeNet5)

1992 MacKay Bayesian neural networks

2014 Christian Szegedy GoogLeNet

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

1.2.2 Fuzzy Systems

During the past several years, fuzzy logic control has emerged as one of the most active and

fruitful areas for research in the application of fuzzy set theory. Motivated by Zadeh's seminal

papers on the linguistic approach and system analysis based on the theory of fuzzy sets,

Mamdani and his colleagues pioneered the use of fuzzy logic control. Recent applications

have shown effective control using fuzzy logic can be designed for complex ill-defined control

systems without the knowledge of their underlying dynamics.

The important milestones in the development of fuzzy logic control may be summarised in

Table 1 . 2.

Table 1 . 2 Milestones in the development of fuzzy logic control

1965 Zadeh Fuzzy sets

1972 Zadeh A rationale for fuzzy logic control

1973 Zadeh Linguistic approach

1974 Mamdani, Assilian Steam engine control

1977 Ostergaard Heat exchanger and cement kiln control

1979 Procyk Self-organising fuzzy logic control

1980 Tong et al. Wastewater treatment process

1980 Fukami, Muzimoto, Tanaka Fuzzy conditional inference

1984 Sugeno, Murakami Parking control of a model car

1985 Kiszka, Gupta Fuzzy system stability

1985 Takagi, Sugeno Takagi-Sugeno (T-S) fuzzy models

1987 Miyamoto, Yasunobu Sendai subway system

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 1.3 Various Structures

1.3.1 Neural Networks

Back-propagation provides a way of using a target function to find the coefficients which make a

certain mapping function approximate the target function as closely as possible. The mapping

function in back-propagation is complex. It can be visualised as the computation carried

out by a fully connected three-layer feedforward network. The network consists of three

layers: the input, hidden, and output layers as shown in Figure 1.1.

Input Layer Hidden Layer Output Layer

Figure 1.1 Neural Network

Neural networks used for identification purposes typically have multilayer feedforward

architectures and are trained using the error back-propagation technique. The basic configuration

for forward plant identification is shown in Figure 1.2. The identification of the plant inverse is

another viable alternative for designing control systems. A neural network configuration using

inverse plant identification is shown in Figure 1.3.

Figure 1.2 Forward Plant Identification

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Figure 1.3 Plant Inverse Identification

Figure 1.4 shows the feedforward controller implemented using a neural network. Neurocontroller

B is an exact copy of neural network A, which undergoes training. Network A is connected so that

it gradually learns to perform as the unknown plant inverse. A closely related control architecture

for control and simultaneous specialised learning of the output domain is shown in Figure 1.5.

Figure 1.4 Neural Network Control with Plant Inverse Learning

Figure 1.5 Neural Network Control

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

1.3.2 Fuzzy Systems

A fuzzy logic controller (FLC) can be typically incorporated in a closed loop control system as

shown in Figure 1.6. The main elements of the FLC are a fuzzification unit, an inference engine

with a knowledge base, and a defuzzification unit.

The Self-Organising Fuzzy Logic Controller (SOFLC) shown in Figure 1.7 which has a control

policy which can change with respect to the process it is controlling and the environment it is

operating in. The particular feature of this controller is that it strives to improve its performance

until it converges to a predetermined quality.

Figure 1.6 Fuzzy logic controller

Figure 1.7 Self-organising fuzzy logic controller

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 1.4 Introduction to Neural Networks

Neural Network is an interconnected assembly of simple processing elements, units, or nodes,

whose functionality is loosely based on the animal neuron. The processing ability of the

network is stored in the inter-unit connection, strengths, or weights, obtained by a process of

adaptation to, or learning from, a set of training patterns. One of the simple example of neural

network structure is shown in Figure 1.8. In this Figure, a denotes the input of network, b

denotes the output of network, U denotes the neuron (node), and w denotes the weight between

two neurons. There are three entities that characterize a neural network:

▪ The network topology, or interconnection of neural units (feedforward, recurrent, etc).

▪ The characteristics of individual units or artificial neurons (transfer functions).

▪ The strategy for pattern learning or training (Hebbian learning, delta learning rule, etc)

Figure 1.8 Example of neural network structure

1.4.1 Biological Neurons

The neuron is the fundamental building block of the biological network. Its schematic diagram

is shown in Figure 1.9. A typical cell has three major regions: the cell body (soma), the axon,

and the dendrites. Dendrites form a dendritic tree, which is a very fine bush of fibers around

the neuron's body. Dendrites receive information from neurons through long fibres called axons.

An axon is a long cylindrical connection that carries impulses from the neuron. The axon-

dendrite contact organ is called a synapse. The synapse is where the neuron introduces its signal

to the neighbouring neuron

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

The neuron is able to respond to the total of its inputs aggregated within a short time interval

called the period of latent summation. The neuron's response is generated if the total potential

of its membrane reaches a certain level. Incoming impulses can be excitatory if they cause the

firing of a neuron, or inhibitory if they hinder the firing of a response. A more precise condition

for firing is that the excitation should exceed the inhibition by the amount called the threshold

of the neuron, typically a value of about 40 mV. After carrying a pulse, an axon fibre is in a

state of completely nonexcitability for a certain time called the refractory period. The time

units for modelling biological neurons can be taken to be of the order of a millisecond. However,

the refractory period is not uniform over the cells.

The typical cycle time of neurons is about a million times slower than semiconductor gates.

Nevertheless, the brain can do very fast processing for tasks like vision, motor control, and

decisions even with access to incomplete and noisy data. This is obviously possible only

because billions of neurons operate simultaneously in parallel.

Figure 1.9 Schematic Diagram of a Neuron

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

1.4.2 Simple Neuron model

A basic neuron model is shown in Figure 1.10 (a) and its threshold T characteristic is shown

in Figure 1.10 (b). The firing rule for this model is defined as follows:

1 𝑛𝑒𝑡 ≥ 𝑇

𝑜 = 𝑓(𝑛𝑒𝑡) = { (1.1)

0 𝑛𝑒𝑡 < 𝑇

where 𝑛𝑒𝑡 = ∑𝑛𝑖=1 𝑤𝑖 𝑥𝑖 ,

o denotes the output of neuron,

𝑥𝑖 denotes the input of neuron, i = 1, 2, … n where n is the number of input.

𝑤𝑖 denotes the weight between the output o and input x.

Figure 1.10 Basic neuron model

To consider the conditions necessary for the firing of a neuron. Incoming impulses can be

excitatory if they cause the firing, or inhibitory if the hinder the firing of the response. Notes

that wi = +1 for excitatory synapses and wi = −1 for inhibitory synapses.

10

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

1.4.3 Architectures, Output Characteristics and Learning Algorithms

There are two main types of neural networks and namely Feed-forward networks and

Recurrent/feedback networks. A summary of the architectures of neural networks are shown in

Figure 1.11.

Various feed-forward neural networks have been developed such as single-layer perceptron,

multilayer perceptron and radial basis function nets, etc. Feed-forward type networks receive

external signals and simply propagate these signals through all the layers to obtain the result

(output) of the neural network. There are no feedback connection previous layer.

On the other hand, recurrent/feedback networks such as competitive networks, Kohonen’s

SOM, Hopfield network, etc, have such feedback connections to model the temporal

characteristics of the problem being learned.

Figure 1.11 A summary of the architectures of neural networks

One of the key characteristic of neural network is its learning ability. Learning consists of

adjusting weight and threshold values until a certain criterion (or several criteria) is (are)

satisfied.

There are two main types of learning:

Supervised learning, where the neuron (or neural network) is provided with a data set

consisting of input vectors and a target (desired output) associated with each input vector. This

data set is referred to as the training set. The aim of supervised training is then to adjust the

weight values such that the error between the real output of the neuron and the target output is

11

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

minimized. The learning algorithms of supervised learning are including LVQ, Perceptron,

Back-propagation, ARTMap, etc.

Unsupervised learning, where the aim is to discover patterns or features in the input data with

no assistance from an external source. Many unsupervised learning algorithms basically

perform a clustering of the training patterns. The learning algorithms of unsupervised learning

are including SOM, VQ, PCA, Hebb learning rule, etc.

1.4.4 Applications of neural network

There are six tasks that neural network can perform:

(1) Pattern classification

(2) Clustering

(3) Function approximation

(4) Prediction or forecasting

(5) Optimisation

(6) Retrieval by content

Figure 1.12 Tasks of neural network.

12

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 1.5 Introduction to Fuzzy Logic

In a traditional set theory, an item is either a member of a set or it is not. This two-valued logic

has proved to be very effective in solving well-defined problems, which are characterised by

precise descriptions of the process being dealt with in quantitative form. However, there is a

class of problems which are typically complex or ill-defined in nature where the concepts are

no longer clearly true or false, but are more or less false or most likely true. Fuzzy set theory

emerged as one effective approach to dealing with these problems. Developed in 1965 by Lotfi

Zadeh, the theory of fuzzy sets was introduced as an extension to traditional set theory, and

the corresponding fuzzy logic was developed to manipulate the fuzzy sets.

1.5.1 Fuzzy Set Theory (Ross 1995)

Fuzzy sets are defined in a universe of discourse. For a given universe of discourse U, a fuzzy

set is determined by a membership function which maps members of U on to a membership

range in the interval [0,1]. Associated with a classical binary or crisp set is a characteristic

function which returns 1 if the element is a member of that set and 0 otherwise.

A fuzzy set F in a universe of discourse U is usually represented as a set of ordered pairs of

elements u and grade of membership value μ F ( u ) :

F={ (u, F(u))|uU } (1.2)

A fuzzy set F can be written as

𝐹 = ∫𝑈 F(u)/𝑢 for continuous U (1.3)

𝐹 = ∑𝑛𝑖=1 F(𝑢𝑖 )/𝑢𝑖 for discrete U (1.4)

13

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 1.1

In the universe of discourse U = {2,3,4,5,6,7} , the fuzzy subset F labelled ‘integer close to

4’ may be defined as

F = 0. 33 / 2 + 0. 66 / 3 + 1. 0 / 4 + 0. 66 / 5 + 0. 33 / 6 + 0. 0 / 7

1.5.2 Support Set

The support set of a fuzzy set F is the crisp set of all points u in U such that μ F ( u ) > 0. A

fuzzy set whose support is a single point in U is referred to as a fuzzy singleton. The support

set is said to be compact if it is a strict subset of the universe of discourse.

1.5.3 Membership Functions

The membership for fuzzy sets can be defined numerically or as a function. A numerical

definition expresses the degree of membership function as a vector of numbers. A

functional definition defines the membership function in an analytic expression which

allows the membership grade for each element in the defined universe of discourse· to be

calculated. The membership functions which are often used include the triangular function,

the trapezoid function and the Gaussian function, as illustrated in Figure 1.13.

The triangular function is defined as follows:

0 𝑓𝑜𝑟 𝑢 < 𝑎

(𝑢 − 𝑎)/(𝑏 − 𝑎) 𝑓𝑜𝑟 𝑎 ≤ 𝑢 ≤ 𝑏

𝜇𝐹 (𝑢) = { (1.5)

(𝑐 − 𝑢)/(𝑐 − 𝑏) 𝑓𝑜𝑟 𝑏 ≤ 𝑢 ≤ 𝑐

0 𝑓𝑜𝑟 𝑢 > 𝑐

14

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

The trapezoid function is defined as follows:

0 𝑓𝑜𝑟 𝑢 < 𝑎

(𝑢 − 𝑎)/(𝑏 − 𝑎) 𝑓𝑜𝑟 𝑎 ≤ 𝑢 ≤ 𝑏

𝜇𝐹 (𝑢 ) = 1 𝑓𝑜𝑟 𝑏 ≤ 𝑢 ≤ 𝑐 (1.6)

(𝑑 − 𝑢)/(𝑑 − 𝑐) 𝑓𝑜𝑟 𝑐 ≤ 𝑢 ≤ 𝑑

{ 0 𝑓𝑜𝑟 𝑢 > 𝑑

The Gaussian function is defined as follows:

(𝑢−𝑐)2

−

𝜇𝐹 (𝑢) = 𝑒 2𝜎2 (1.7)

where the parameters are the centre c and the variance .

Figure 1.13 Some typical membership functions (Triangular, Trapezoid, Gaussian).

15

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 1.2

A fuzzy subset F of U labelled “middle age” may be defined as

F={ (u, F(u))|uU }

Using a triangular membership function, the fuzzy subset F might be defined as

0 𝑓𝑜𝑟 𝑢 < 30

(𝑢 − 30)/15 𝑓𝑜𝑟 30 ≤ 𝑢 ≤ 45

𝜇𝐹 (𝑢) = {

(60 − 𝑢)/15 𝑓𝑜𝑟 45 ≤ 𝑢 ≤ 60

0 𝑓𝑜𝑟 𝑢 > 60

Figure 1.14 Fuzzy subset of “middle age” persons.

1.5.4 Fuzzy Set Operations

Let A and B be two fuzzy sets in U with membership functions A and B respectively. Some

basic fuzzy set operations are summarised as follows:

Equality A(u) = B(u), uU (1.8)

Union A B (u) =max{A(u), B(u)}, uU (1.9)

Intersection A B (u) =min{A(u), B(u)}, uU (1.10)

Complement A’ (u) = 1−A(u), uU (1.11)

Normalisation NORM(A) (u) = A(u)/max(A(u)), uU (1.12)

Concentration CON(A) (u) = (A(u))2, uU (1.13)

Dilation DIL(A) (u) = √(A(u)), uU (1.14)

16

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Intensification 2(A(u))2 𝑓𝑜𝑟 0 ≤ A(u) ≤ 0.5

INT(A) (u) = { 2 𝑓𝑜𝑟 0.5 ≤ A(u) ≤ 1

1 − 2(1 − A(u)) (1.15)

Algebraic A • B (u) =A(u) • B(u), uU (1.16)

product

Bounded sum A B (u) = min{1,A(u) + B(u)}, uU (1.17)

Bounded A B (u) = max{0,A(u) + B(u)−1}, uU (1.18)

product

A(u) 𝑓𝑜𝑟 B(u) = 1

Drastic product A B (u)= {B(u) 𝑓𝑜𝑟 A(u) = 1 (1.19)

0 for A(u), B(u) < 1

Example 1.3

Let two fuzzy sets A and B be defined as follows:

A=0.1/1 + 0.3/2 + 0.7/3 + 1.0/4 + 0.6/5 + 0.2/6 + 0.1/7

B=0.2/1 + 0.8/2 + 1.0/3 + 0.6/4 + 0.4/5 + 0.3/6 + 0.1/7

Then

A’=0.9/1 + 0.7/2 + 0.3/3 + 0.0/4 + 0.4/5 + 0.8/6 + 0.9/7

A’ A =0.1/1 + 0.3/2 + 0.3/3 + 0.0/4 + 0.4/5 + 0.2/6 + 0.1/7

A’ A =0.9/1 + 0.7/2 + 0.7/3 + 1.0/4 + 0.6/5 + 0.8/6 + 0.9/7

A B =0.1/1 + 0.3/2 + 0.7/3 + 0.6/4 + 0.4/5 + 0.2/6 + 0.1/7

A B =0.2/1 + 0.8/2 + 1.0/3 + 1.0/4 + 0.6/5 + 0.3/6 + 0.1/7

17

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

1.5.5 Extension Principle

It is possible for elements u of one universe of discourse U to be mapped onto elements v of

another universe of discourse v through a function f. An extension principle developed by

Zadeh (1975) and later elaborated by Yager (1986) allows us to extend the domain of a function

on fuzzy sets.

Let f:u→v and define A to be a fuzzy set on the universe of discourse U and

A=1/u1+ 2/u2 +…+ n/un

then f(A)=1/f(u1)+ 2/ f(u2) +…+ n/ f(un) (1.20)

Example 1.4

If v=f(u)=2u−1 and A=0.6/1+1/2+0.8/3

then

f(A)=0.6/1+1/3+0.5/5 or V=0.6/1+1/3+0.5/5

1.5.6 Linguistic Hedges

One powerful aspect of fuzzy sets is the ability to deal with linguistic quantifiers or “hedges”.

Hedges such as very, more or less, not very, plus, etc. correspond to modifications in the

membership function as illustrated in Figure 1.15. Table 1.3 shows some fuzzy set operators

which can be used to represent some standard hedges. Note that the operator definition are not

unique and should be designed into appropriate forms before being used.

18

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Figure 1.15 Fuzzy set modified by hedges.

Table 1.3 Hedges and corresponding operators

19

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

References

1. Brown, M., Harris, C. 1994, Neuro fuzzy Adaptive Modelling and Control,

Hertfordshire Prentice Hall.

2. Chai, R., Ling, S. H., San, P. P., Naik, G., Nguyen, N. T., Tran, Y., Craig, A., and

Nguyen, N. T. 2017, “Improving EEG-based driver fatigue classification using

sparse-deep belief networks,” Frontiers in Neuroscience, vol.11, Article103.

3. Ghevondian, N., Nguyen, H. T. 1997, ‘Low Power Portable Monitoring System of

Parameters for Hypoglycaemic Patients’, 19th Annual International Conference,

IEEE Engineering in Medicine and Biology Society, 30 October – 2 November 1997,

Chicago, USA, pp. 1029-1031.

4. Ghevondian, N., Nguyen, H. T. 1997, ‘Using Fuzzy Logic Reasoning for Monitoring

Hypoglycaemia in Diabetic Patients’, 19th Annual International Conference, IEEE

Engineering in Medicine and Biology Society, 30 October – 2 November 1997,

Chicago, USA, pp. 1108-1111.

5. Jamshidi, M., Vadiee, N., Ross, T. J. 1993, Fuzzy Logic and Control - Software and

Hardware Applications, Prentice Hall, New Jersey.

6. Joseph, T., Nguyen, H. T. 1998, ‘Neural Network Control of Wheelchairs using

Telemetric Head Movement’, 20th Annual International Conference, IEEE

Engineering in Medicine and Biology Society, 29 October – 1 November 1998, Hong

Kong, pp. 2731-2733.

7. Kosko, B. 1992, Neural Networks and Fuzzy Systems, Prentice Hall, New Jersey.

8. Ling, S. H., Nguyen, H. T. 2012, ‘Natural occurrence of nocturnal hypoglycaemia

detection using hybrid particle swarm optimized fuzzy reasoning model’, Artificial

Intelligence in Medicine, issue 55, pp. 177-184.

20

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

9. Ling, S. H., Leung, F. H. F., Lam, H. K., Tam, P. K. S. 2003, “Short-term electric load

forecasting based on a neural fuzzy network,” IEEE Trans. Industrial Electronics, vol.

50, no. 6, pp.1305–1316.

10. Ling, S. H., Iu, H. H. C., Leung, F. H. F., Chan K. Y. 2008, “Improved hybrid PSO-

based wavelet neural network for modelling the development of fluid dispensing for

electronic packaging,” IEEE Trans. Industrial Electronic, vol. 55, no. 9, pp. 3447–

3460, Sep. 2008.

11. Ling, S. H., Nguyen, H. T. 2011, “Genetic algorithm based multiple regression with

fuzzy inference system for detection of nocturnal hypoglycemic episodes,” IEEE Trans.

on Information Technology in Biomedicine, vol. 15, no. 2, pp. 308–315.

12. Ling, S. H., San, P. P., Chan K. Y., Leung, F. H. F., Liu, Y. 2014, “An intelligent

swarm based-wavelet neural network for affective mobile phone design,”

Neurocomputing, vol. 142, pp. 30-38.

13. Nguyen, H. T., Sands, D. M. 1995, ‘Self-Organising Fuzzy Logic Controller’, Control

95, The Institution of Engineers, Australia, 23-25 October 1995, Melbourne, vol 2, pp.

353-257.

14. Nguyen, H. T., King, L. M., Knight, G. 2004, ‘Real-Time Head Movement System

and Embedded Linux Implementation for the Control of Power Wheelchairs’, 26th

Annual International Conference of the IEEE Engineering in Medicine and Biology

Society, 1-5 September 2004, San Francisco, USA, pp. 4892-4895.

15. Nguyen, H. T., Nguyen, S. T., Taylor, P. B., Middleton J. 2007, ‘Head Direction

Command Classification using an Adaptive Optimal Bayesian Neural Network’,

International Journal of Factory Automation, Robotics and Soft Computing, Issue 3,

July 2007, pp. 98-103.

16. Smith, M. 1993, Neural Networks for Statistical Modelling. Van Nostrand Reinhold,

New York.

21

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

17. Ross T.J. 1995. Fuzzy Logic with Engineering Applications. McGraw-Hill.

18. Yan, J., Ryan, M., Power, J. 1994, Using Fuzzy Logic, Prentice Hall, Hertfordshire.

Zurada, J. M. 1992, Introduction to Artificial Neural Systems. West Publishing

Company, St. Paul.

22

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

CHAPTER TWO

FUNDAMENTAL CONCEPTS OF NEURAL NETWORKS

_______________________________________________________

Chapter 2.1 Neuron Modelling for Artificial Neural Systems

2.1.1 McCulloch-Pitts Neuron Model

The first formal definition of a synthetic neuron model was formulated by McCulloch and

Pitts (1943). The McCulloch-Fitts neuron model is shown in Figure 2.1. The firing rule for

this model is defined as follows

1 𝑖𝑓 ∑𝑛𝑖=1 𝑤𝑖 𝑥𝑖 ≥ 𝑇 1 𝑖𝑓 𝐰 ′ 𝐱 ≥ 𝑇

𝑧={ 𝑂𝑅 𝑧={ (2.1)

0 𝑖𝑓 ∑𝑛𝑖=1 𝑤𝑖 𝑥𝑖 < 𝑇 0 𝑖𝑓 𝐰 ′ 𝐱 < 𝑇

where w is the weight vector and x is the input vector.

𝑤1 𝑥1

𝐰 = [𝑤 𝑥

…2 ] and 𝐱 = [ …2 ]

𝑤𝑛 𝑥𝑛

Note that wi = +1 for excitatory synapses, wi = −1 for inhibitory synapses for this model, and

T is the neuron's threshold value.

Although this neuron model is very simplistic, it has substantial computing potential. It can

perform the basic logic operations NOT, OR, and AND, provided its weights and thresholds

are properly selected.

Figure 2.1 McCulloch-Pitts Neuron Model

23

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 2.1

Example of three-input NOR gates using the McCulloch-Pitts neuron model is shown in

Figure 2.2. Verify the implemented functions by compiling a truth table for this logic gate.

Figure 2.2 NOR Gate

Solution 2.1

v1

y v2

x1 x2 x3 v1=w’x y v2 z

0 0 0 0 0 0 1

0 0 1 1 1 −1 0

0 1 0 1 1 −1 0

0 1 1 2 1 −1 0

1 0 0 1 1 −1 0

1 0 1 2 1 −1 0

1 1 0 2 1 −1 0

1 1 1 3 1 −1 0

24

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Exercise 2.1

Example of three-input NAND gates using the McCulloch-Pitts neuron model is shown in

Figure 2.3. Verify the implemented functions by compiling a truth table for this logic gate.

Figure 2.3 NAND Gate

25

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

2.1.2 Perceptrons

The McCulloch-Pitts model is based on several simplications. It allows only binary states

(0,1) and operates under a discrete-time assumption with synchronisation of all neurons in a

larger network. Weights and thresholds in a neuron are fixed and no interaction among

network neurons takes place except for signal flow.

A general perceptron consists of a processing element with synaptic input connections and a

single input. Its symbolic representation described in Figure 2.4 shows a set of weights and

the neuron's processing unit (node). The neuron output signal is given by:

𝑣 = ∑𝑛𝑖=1 𝑤𝑖 𝑥𝑖 = 𝐰 ′ 𝐱 (2.2)

𝑧 = 𝑓(𝑣) (2.3)

Figure 2.4 Perceptron

The function z = f(v) is often referred to as an activation function. Note that temporarily,

the threshold value is not explicitly used for convenience. We have assumed that

the modelled neuron has (n-1) actual synaptic connections associated with actual

variable inputs x1 ,x2 ,...,xn-1. We have also assumed that the last synapse is an

inhibitory one with wn = −1.

26

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Typical activations functions used are

Threshold logic unit (TLU)

+1, 𝑣 > 0

𝑧 = 𝑓0 (𝑣) = sgn(𝑣) = { (2.4)

−1, 𝑣 < 0

Figure 2.5 Threshold logic unit (TLU)

Logistic function

1

𝑓1 (𝑣 ) = (2.5)

1+𝑒 −𝑣

𝑧 = 𝑓1 (v)

( )

= 𝑧(1 − 𝑧)

Figure 2.6 Logistic function

27

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Bipolar logistic function

2 1−𝑒 −𝑣

𝑓2 (𝑣) = − 1 = 1+𝑒 −𝑣 = 2𝑓1 (𝑣) − 1 (2.6)

1+𝑒 −𝑣

𝑧= 𝟐( )

( 𝟐( ))

= 0.5(1 − 𝑧 2 )

Figure 2.7 Bipolar logistic function

Hyperbolic bipolar logistic function

1−𝑒 −2𝑣

𝑓3 (𝑣) = tanh(𝑣) = 1+𝑒 −2𝑣 = 2𝑓1 (2𝑣) − 1 (2.7)

𝑧= 𝟑( )

( 𝟑( ))

= 1 − tanh2 (z)

Figure 2.8 Hyperbolic bipolar logistic function

The soft-limiting activation functions f1(v), f2(v), f3(v) are often called sigmoidal

characteristics, as opposed to the hard-limiting activation function f0(v). A perceptron with

the activation function f0(v) describes the discrete perceptron shown in Figure 2.9. It was the

first learning machine introduced by Rosenbratt in 1958.

28

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Figure 2.9 Discrete Perceptron

Example 2.2

Prove that if the logistic function z(v) = f1(v) is used as an activation function, then the

derivative of z(v) is given by:

𝑑𝑧

= 𝑧(1 − 𝑧) (2.8a)

𝑑𝑣

Solution 2.2

1 𝑒𝑣

𝑧(𝑣) = 𝑓1 (𝑣) = =

1 + 𝑒 −𝑣 𝑒 𝑣 + 1

𝑑𝑧 𝑒 𝑣 (𝑒 𝑣 + 1) − 𝑒 𝑣 (𝑒 𝑣 ) 𝑒𝑣 (𝑒 𝑣 )2

= = −

𝑑𝑣 (𝑒 𝑣 + 1)2 𝑒𝑣 + 1 (𝑒 𝑣 + 1)2

2

𝑒𝑣 𝑒𝑣

= 𝑣 −( 𝑣 ) = 𝑧 − 𝑧 2 = 𝑧(1 − 𝑧)

𝑒 +1 𝑒 +1

Example 2.3

If the bipolar logistic function z(v) = f2(v) is used as an activation function, then the

derivative of z(v) is given by:

𝑑𝑧

= 0.5(1 − 𝑧2 ) (2.8b)

𝑑𝑣

Solution 2.3

2 2𝑒 𝑣 𝑒 𝑣 −1

𝑧(𝑣) = 𝑓2 (𝑣) = − 1 = 𝑒 𝑣+1 − 1 =

1+𝑒 −𝑣 𝑒 𝑣 +1

𝑑𝑧 𝑒 𝑣 (𝑒 𝑣 + 1) − (𝑒 𝑣 − 1)𝑒 𝑣 2𝑒 𝑣

= =

𝑑𝑣 (𝑒 𝑣 + 1)2 (𝑒 𝑣 + 1)2

2𝑒 𝑣 1 (1 − 𝑧)

= 𝑣

× 𝑣 = (𝑧 + 1) = 0.5(1 − 𝑧 2 )

𝑒 +1 𝑒 +1 2

29

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 2.2 Basic Network Architectures

The neural network can be defined as an interconnection of neurons such that neuron

outputs are connected, through weights, to all other neurons including themselves with

both lag-free and delay connections allowed.

2.2.1 Feedforward Network

An elementary feedforward architecture of m neurons receiving n inputs is shown in Figure

2.10.

Figure 2.10 Single-layer feedforward network: (a) interconnection scheme

and (b) block diagram.

The mapping of the input vector x to the output vector z can be represented by

v = Wx

(2.9)

z = (v)

where W is the weight matrix (connection matrix) and

𝑥1 𝑣1 𝑧1

𝐱 = [ 𝑥…2 ] , 𝐯 = [ 𝑣…2 ] , 𝒛 = [ 𝑧…2 ]

𝑥𝑛 𝑣𝑚 𝑧𝑚

𝑤11 ⋯ 𝑤1𝑛 𝑣1

𝐖=[ ⋮ ⋱ ⋮ ], (v) = [𝑣…2 ] where () is an activation function.

𝑤𝑚1 ⋯ 𝑤𝑚𝑛 𝑣 𝑛

30

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

2.2.2 Recurrent Network

A recurrent network can be obtained from the feedforward network by connecting the

outputs of the neurons to their inputs as shown in Figure 2.11.

Figure 2.11 Single-layer discrete-time recurrent network: (a) interconnection scheme

and (b) block diagram.

In the above recurrent network, the time elapsed between t and t + . is introduced by

the delay elements in the feedback loop. This time delay is analogous to the refractory

period of an elementary biological neuron model.

The mapping of the input vector x to the output vector z can be represented by

𝐯(𝑡 + Δ) = 𝐖𝐱(𝑡)

𝐳(𝑡 + Δ) = 𝐯(𝑡 + Δ) (2.10)

For a discrete-time artificial neural system

𝐯(𝑘 + 1) = 𝐖𝐱(𝑘)

𝐳(𝑡 + 1) = 𝐯(𝑡 + 1) (2.11)

Recurrent networks typically operate with a discrete representation of data. They often

use neurons with a hard-limiting activation function. A system with discrete-time inputs

and a discrete data representation is called an automaton.

31

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 2.3 Learning Rules

2.3.1 Supervised and Unsupervised Learning

There are two different types of learning: supervised learning and unsupervised learning. In

supervised learning, the desired response d is provided by the trainer. The distance

[d,z]between the actual and the desired response serves as an error measure and is used to

correct the network parameters. The error can be used to modify weights so that the error

decreases. For this learning mode, a set of input and output patterns (training set) is required.

In unsupervised learning, the desired response d is not known. Since no information is

available regarding to the correctness of responses, learning must be based on

observations of responses to inputs that we have some knowledge about. In this mode of

learning, the network must discover by itself any possible patterns, regularities, and

separating properties. While discovering these, the network updates its parameters using a

self-organising process.

2.3.2 The General Learning Rule

The general learning rule for neural network studies is: The weight Wi increases in

proportion to the product of input x and learning signal ri . The learning signal ri is in

general a function of Wi , x , and sometimes of the training signal d.

ri = ri(wi, x, d) (2.12)

The increment of the weight vector according to the general learning rule is

wi (k+1)=wi (k)+wi (k) (2.13)

where

wi (k) = c ri(k) x(k) (2.14)

c>0 is called learning constant (learning rate)

ri is the learning signal and

ri(k) = ri( wi (k), x(k), d(k)) (2.15)

The illustration for general weight learning rules is given in Figure 2.12.

32

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Figure 2.12 Illustration for weight learning rule.

2.3.3 Hebbian Learning Rule

The Hebbian learning rule (1949) represents a purely feedforward, unsupervised learning.

The learning signal r is equal simply to the output of the neuron.

ri(k) = zi(k) = f (wi’ (k) x(k)) (2.16)

wi (k+1) = wi (k)+wi (k)

where wi (k) = cri(k)xi (k)

This learning rule requires the weight initialisation at small random values around wi = 0

prior to learning.

33

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Exercise 2.2

Assume that the network shown in Figure 2.9 with the initial weight vector w(1) needs to be

trained using the set of three input vectors x(1), x(2), x(3) as below

1 1 0 1

−2

𝐱(1) = [ ], 𝐱(2) = [ −0.5 1 −1

] , 𝐱(3) = [ ] , and 𝐰(1) = [ ]

1.5 −2 −1 0

0 −1.5 1.5 0.5

If the activation function of this perceptron is the logistic function f1(v), the learning

constant is c = 1, and the Hebbian learning rule is used, show that the weight vectors after

subsequent training steps are:

1.9526 2.4021 2.4021

w(2)= [ −2.9052 ], w(3)= [ −3.1299 ], w(4)= [ −3.1105 ]

1.4289 0.5298 0.5104

0.5 −0.1743 −0.1452

2.3.4 Discrete Perceptron Learning Rule

The discrete perceptron learning rule (1958) is of supervised type as shown in Figure 2.13. The

learning signal r is the error between the desired and actual response of the neuron.

ri(k) = ei(k) = di(k) − zi(k) = di(k) − f( wi’ (k) x(k)) (2.17)

wi (k+1)=wi (k)+cri(k)xi (k)

The weight adjustment is inherent zero when the desired and actual responses agree. The

weights are initialised at any values.

Figure 2.13 Discrete perceptron learning rule.

34

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Exercise 2.3

Assume that the network shown in Figure 2.9 with the initial weight vector w(1) needs to be

trained using the set of three input vectors x(1), x(2), x(3) as below

1 0 −1 1

𝐱(1) = [−2], 𝐱(2) = [ 1.5 ] , 𝐱(3) = [ 1 ] , and 𝐰(1) = [−1]

0 −0.5 0.5 0

−1 −1 −1 0.5

The trainer’s desired responses for x(1), x(2), x(3) are d(1)=−1, d(2)= −1, d(3)=1 respectively.

If the learning constant is c=0.1, and the discrete perceptron learning rule is used, show that

the weight vectors after subsequent training steps are:

0.8 0.8 0.6

w (2) = [ −0.6], w (3) = [ −0.6 −0.4

], w (4) = [ 0.1 ]

0 0

0.7 0.7 0.5

2.3.5 Delta Learning Rule

The delta learning rule is only valid for continuous activation function and in the supervised

learning mode. This learning rule can be readily derived from the condition of least squared

error between the output and the desired response.

vi = wi’ x

1 1 1 2

𝐸 = 𝑒𝑖 2 = (𝑑𝑖 − 𝑧𝑖 )2 = (𝑑𝑖 − 𝑓(𝑣𝑖 )) (2.18)

2 2 2

The error gradient vector is

𝜕𝐸 𝜕𝐸 𝜕𝑧𝑖 𝜕𝑓 𝜕𝑓 𝑣𝑖

𝛻𝐸 = = = −(𝑑𝑖 − 𝑧𝑖 ) = −(𝑑𝑖 − 𝑧𝑖 ) (2.19)

𝜕𝑤𝑖 𝜕𝑧𝑖 𝜕𝑤𝑖 𝜕𝑤𝑖 𝜕𝑣𝑖 𝜕𝑤𝑖

𝜕𝑓

𝛻𝐸 = −(𝑑𝑖 − 𝑧𝑖 ) 𝐱

𝜕𝑣𝑖

35

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Since the minimisation of the error requires the weight changes to be in the negative gradient

direction, we take

∆𝐰𝑖 = − 𝛻𝐸 (2.20)

where is a positive constant (learning rate).

Using the general learning rule (2.14), it can be seen that the learning constant c and the

learning rate are equivalent. The weights are initialised at any values and the learning

signal r can be found from

𝜕𝑓(𝑣(𝑘))

𝑟𝑖 (𝑘) = 𝑒𝑖 (𝑘) = 𝑒𝑖 (𝑘)𝑓 ′ (𝑣(𝑘)) = (𝑑𝑖 (𝑘) − 𝑧𝑖 (𝑘))𝑓 ′ (𝑣(𝑘)) (2.21)

𝜕𝑣(𝑘)

wi (k+1)=wi (k)+cri(k)xi (k)

Exercise 2.4

Again, assume that the network shown in Figure 2.9 with the initial weight vector w(1)

needs to be trained using the set of three input vectors x(1), x(2), x(3) as below

1 0 −1 1

−2

𝐱(1) = [ ], 𝐱(2) = [ 1.5 1 −1

] , 𝐱(3) = [ ] , 𝑎𝑛𝑑 𝐰(1) = [ ]

0 −0.5 0.5 0

−1 −1 −1 0.5

The trainer’s desired responses for x(1), x(2), x(3) are d(1) = −1, d(2)= −1, d(3)=1

respectively. If the activation function of this perception is the bipolar logistic function f2 (v),

the learning constant is c = 0.1, and the delta learning rule is used, show that the weight

vectors after subsequent training steps are:

0.974 0.974 0.947

𝐰(2) = [ −0.948 ], 𝐰(3) = [ −0.956 ], 𝐰(4) = [ −0.929 ]

0 0.002 0.016

0.526 0.531 0.504

36

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

2.3.6 Widrow-Hoff Learning Rule

The Widrow-Hoff learning rule (1962) is applicable for the supervised training of neural

networks. It is independent of the activation function of neurons. The learning signal r is the

error between the desired output value d and the activation value of the neuron v.

𝑟𝑖 (𝑘) = 𝑑𝑖 (𝑘) − 𝑣𝑖 (𝑘) = 𝑑𝑖 (𝑘) − 𝐰 ′ (𝑘)𝐱(𝑘) (2.22)

wi (k+1)=wi (k)+cri(k)xi (k)

This rule can be considered as a special case of the delta rule. Assuming that

𝑣𝑖 = 𝐰 ′ (𝑘)𝐱(𝑘), 𝑓(𝑣𝑖 ) = 𝑣𝑖 , 𝑓 ′ (𝑣𝑖 ) = 1. This rule is sometimes called the LMS (least mean

square) learning rule. The weights are initialised at any value.

Exercise 2.5

Again, assume that the network shown in Figure 2.9 with the initial weight vector w(1)

needs to be trained using the set of three input vectors x(1), x(2), x(3) as below

1 0 −1 1

𝐱(1) = [−2], 𝐱(2) = [ 1.5 ] , 𝐱(3) = [ 1 ] , 𝑎𝑛𝑑 𝐰(1) = [−1]

0 −0.5 0.5 0

−1 −1 −1 0.5

The trainer’s desired responses for x(1), x(2), x(3) are d(1) = −1, d(2)= −1, d(3)=1

respectively. If the activation function of this perception is the bipolar logistic function f2 (v),

the learning constant is c = 0.1, and the Widrow-Hoff learning rule is used, show that the

weight vectors after subsequent training steps are:

0.65 0.65 0.3767

𝐰(2) = [−0.30], 𝐰(3) = [−0.255] , 𝐰(4) = [0.0183]

0 −0.015 0.1216

0.85 0.82 0.5468

37

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

2.3.7 Summary of learning rule and their properties

References

Brown, M., Harris C. 1994. Neurofuzzy Adaptive Modelling and Control.

Hertfordshire: Prentice Hall.

Kosko B. 1992. Neural Networks and Fuzzy Systems. New Jersey: Prentice Hall.

Hertz J., Krogh A., Palmer R. G. 1991. Introduction to the Theoary of Neural

Computing.Redwood City, California: Addison-Wesley.

Smith M. 1993. Neural Networks for Statistical Modelling. New York: Van Nostrand

Reinhold

Zurada, J.M. 1992. Introduction to Artificial Neural Systems. St. Paul: West

Publishing Company.

38

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

CHAPTER THREE

FUNDAMENTAL CONCEPTS OF FUZZY LOGIC AND

FUZZY CONTROLLER

_______________________________________________________

Chapter 3.1 Fundamental concepts of fuzzy logic

3.1.1 Fuzzy Relations

A fuzzy relation maps elements of one universe to one of another universe through the

Cartesian product of the two universes. The strength of the relation between ordered pairs of

the two universes is measured with the membership function expressing various degrees of

strength of the relation on the unit interval [0,1].

Fuzzy Cartesian Product

If A1, A2, …An are fuzzy sets in U1, U2, … Un respectively, the Cartesian product of A1,

A2,…, An is a fuzzy set F in the product space U1U2 … Un with the membership function:

F(u1, u2, …, un)=min[A1(u1), A2(u2), …An(un)] (3.1)

where F= A1 A2 … An

Fuzzy Relation

An n-ary fuzzy relation is a fuzzy set in U1U2 … Un and expressed as:

RU ={((u1, u2, …, un), R(u1, u2, …, un))|(u1, u2, …, un)U} (3.2)

where U1U2 … Un

39

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 3.1

Let A be a fuzzy set defined on a universe of three discrete temperatures, T={t1, t2, t3}, and B

be a fuzzy set defined on a universe of two discrete pressure P={p1, p2}. Fuzzy set A

represents the “ambient” temperature and fuzzy set B represents the “near optimum” pressure

for a certain heat exchanger, and the Cartesian product might represent the conditions

(temperature-pressure pairs) of the exchanger that are associated with “efficient” operations.

Let

T=0.1/t1 + 0.6/t2 + 1/t3

P=0.4/p1 + 0.8/p2

If R is the fuzzy relation in T P

0.1 0.1

R=TP = [0.4 0.6]

0.4 0.8

3.1.2 Composition of Fuzzy Relations

If P and Q are fuzzy relations in UV and V W respectively.

By using max-min composition, P and Q is a fuzzy relation denoted by:

P ∘ Q={|(u,w), max[min[P(u, v), Q(v, w)]] | (uU, vV, wW} (3.3)

By using max-product composition, P and Q is a fuzzy relation denoted by:

P ∘ Q={|(u,w), max[P(u, v) • Q(v, w)] | (uU, vV, wW} (3.4)

40

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 3.2

In the armature control of a DC motor, suppose that the membership functions for both

armature resistance Ra (ohms), armature Ia (A), and motor speed N (rpm) are given in their

per unit values:

0.3 0.7 1.0 0.2

𝑅𝑎 = { + + + }

30 60 100 120

0.2 0.4 0.6 0.8 1.0 0.1

𝐼𝑎 = { + + + + + }

20 40 60 80 100 120

0.33 0.67 1.0 0.15

𝑁={ + + + }

500 1000 1500 1800

The fuzzy relation between armature resistance and armature current P= Ra Ia, and the fuzzy

relation between armature current and motor speed Q= Ia N can be calculated

0.2 0.2 0.2 0.15

0.2 0.3 0.3 0.3 0.3 0.1 0.33 0.4 0.4 0.15

0.33 0.6 0.6 0.15

𝐏 = [0.2 0.4 0.6 0.7 0.7 0.1 ] 𝐐=

0.2 0.4 0.6 0.8 1.0 0.1 0.33 0.67 0.8 0.15

0.2 0.2 0.2 0.2 0.2 0.1 0.33 0.67 1.0 0.15

[ 0.1 0.1 0.1 0.1 ]

Using the max-min compositional operations, a relation P ∘ Q can be calculated to related

armature resistance to motor speed

0.3 0.3 0.3 0.15

𝐓 = 𝐏 ∘ 𝐐 = [0.33 0.67 0.7 0.15 ]

0.33 0.67 1.0 0.15

0.2 0.2 0.2 0.15

41

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 3.2 Fuzzy Logic Control System

In control system design, a PID controller is effective for a fixed control environment. In

order to cope with varying control environment or system non-linearity, an adaptive controller,

a self-tuning PID controller, a H ∞ controller or a sliding mode controller may be used. The

design of these controllers needs a mathematical model of the process in order to formulate

the input-output relation. Such models can be very difficult or very time consuming to be

identified.

In the fuzzy-logic-based approach, the inputs, outputs and control response are specified in

terms similar to those that might be used by an expert. Complex mathematical models of the

system under control are not required. Essentially, complicated knowledge based on the

experience of an expert can be incorporated in the fuzzy system in a relatively simple

way. Usually, this knowledge is expressed in the forms of rules.

Fuzzy logic and its applications in control engineering can be considered as the most important

area in fuzzy set theory and its applications. Since the invention of the first fuzzy controller by

Mamdani in 1974, fuzzy logic controllers (FLCs) have been successfully applied in numerous

industrial applications such as cement-kiln process control, automatic train operation,

camcorder autofocussing, crane control, etc

A fuzzy logic controller (FLC) can be typically incorporated in a closed loop control system as

shown in Figure 3.1. The main elements of the FLC are a fuzzification unit, an inference engine

with a knowledge base, and a defuzzification unit.

Figure 3.1 A fuzzy logic control system

42

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

3.2.1 System Variables

When a FLC is designed to replace a conventional PD controller, the input variables of the

FLC are error (e) and change of error (ce). The output variable of the FLC is a control signal

u.

The fuzzy set for each system variable are defined in typical linguistic terms such as

PVB Positive Very Big

PB Positive Big

PM Positive Medium

PS Positive Small ZE

Zero

NS Negative Small

NM Negative Medium

NB Negative Big

NVB Negative Very Big

There are two ways to define the membership for a fuzzy set: numerical or functional. A

numerical definition expresses the degree of membership function of a fuzzy set as a vector

of numbers whose dimension depends on the level of discretisation in the universe of discourse.

A functional definition denotes the membership function of a fuzzy set in a functional form

such as the triangular function or a Gaussian function.

Figure 3.2 show typical fuzzy sets and membership functions of system variables error

e ,change of error ce, and controller output (plant input) u in numerical form. A functional

form membership for a fuzz set is shown in Figure 3.3.

Figure 3.2 Membership functions in numerical form

43

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Triangular membership functions Gaussian membership functions

Figure 3.3 Membership functions in functional form

3.2.2 Fuzzification

Fuzzification is the process of mapping from observed inputs to fuzzy sets in the various input

universes of discourse. In process control, the observed data is usually crisp, and fuzzification

is required to map the observed range of crisp inputs to corresponding fuzzy values for the

system input variables. The mapped data are further converted into suitable linguistic terms

as labels of the fuzzy sets defined for system input variables. This process can be expressed

by:

X = fuzzifier (x) (3.5)

44

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 3.3

Assume that the range of “error” is [-5V, 5V] and the fuzzy set “error” has 9 members [NVB,

NB, NM, NS, ZE, PS, PM. PB, PVB] with triangular membership functions shown in Figure

3.4.

Figure 3.4 Fuzzification

Show that if

e = 2.25V

then

E = {0.75/ PM +0.25/ PB}

3.2.3 Fuzzy Control Rules and Rule Base

In a FLC, knowledge of the application domain and the control objectives is formulated

subjectively in most applications, based on an expert's experience. However, an

“objective” knowledge base may be constructed in a learning/self-organising environment by

using fuzzy modelling techniques.

The knowledge base consists of a data base and a rule base. The data base provides the

necessary definitions of the fuzzy parameters as fuzzy sets with membership functions defined

on the universe of discourse for each variable. The rule base consists of fuzzy control rules

intended to achieve the control objectives.

45

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Fuzzy Inference Rules

A fuzzy rule, often expressed in the form

IF (antecedent) THEN (consequent) (3.6)

e.g. If (x is A) AND (y is B) THEN (z is C)

is essentially a fuzzy relation or a fuzzy implication.

There are two main types of fuzzy inference rules in fuzzy logic reasoning: generalised modus

ponens (GMP) and generalised modus tollens (GMT). GMP is widely used in fuzzy logic

control applications and GMT is commonly used in expert systems, especially medical

diagnosis applications.

Generalised Modus Ponens (GMP) - Direct Reasoning

Premise 1 (Knowledge) : IF x is A then y is B

Premise 2 (Fact) : x is A’

Consequence (Conclusion) : y is B’

Generalised Modus Tollens (GMTP) - Indirect Reasoning

Premise 1 (Knowledge) : IF x is A then y is B

Premise 2 (Fact) : y is B’

Consequence (Conclusion) : x is A’

Fuzzy Knowledge Base

A fuzzy knowledge base consists of a number of fuzzy rules. In most engineering

control applications, the fuzzy rules are expressed as

IF (x is A) AND (y is B) THEN (z is C) (3.7)

46

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

There are several sentence connectives such as AND, OR, and ALSO. The connectives AND

and OR are often used in the antecedent part, while connective ALSO is usually used in the

consequent part of fuzzy rules.

A fuzzy control algorithm should always be able to infer a proper control action for any input

in the universe of discourse. This property is referred to as 'completeness'. If the number of

fuzzy sets, or 'predicates', for each input variable is denoted by m and the number of system

input variables by n , then mn different rules are required for a completeness in the

conventional expert system approach. For example, if the number of fuzzy sets per system

input variable m is 7 and the number of input variables n is 3, then 73 = 343 rules are required.

In contrast with a conventional expert system, a FLC rule base typically only uses a small

number of rules to attain completeness in its behaviour. It has been found that the number of

control rules in a FLC can be remarkably reduced primarily due to the overlap of the fuzzy

sets and the soft matching approach used in fuzzy inference.

47

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 3.4

Fuzzy logic is used to control a two-axis mirror gimbal for aligning a laser beam using a

quadrant detector. Electronics sense the error in the position of the beam relative to the centre

of the detector and produces two signals representing the x and y direction errors. The

controller processes the error information using fuzzy logic and provides appropriate control

voltages to run the motors which reposition the beam.

To represent the error input to the controller, a set of linguistic variables is chosen to represent

5 degrees of error, 3 degrees of change of error, and 5 degrees of armature voltage.

Membership functions are constructed to represent the input and output values' grades of

membership as shown in Figure 3.5.

Figure 3.5 Membership functions of a laser beam alignment system

Two sets of rules are chosen. These "Fuzzy Associative Memories" or FAMs, are a shorthand

matrix notation for presenting the rule set. A linguistic armature voltage rule is fired for each

pair of linguistic error variables and linguistic change in error variables.

A set of "pruned" rules is also used to investigate the effect of reducing the processed

information on the behaviour of the controller. When pruned, the FAM is slightly modified

to incorporate all the rules. The effect on the system response by modifying the FAM bank is

more dramatic than modifying the membership functions. Changing the FAM "coarsely" tunes

the response while adjusting the membership functions "finely" tunes the response. The FAMs

48

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

are shown in Table 3.1. Table 3.l (a) shows the full set of 15 fuzzy rules and Table 3.l (b)

shows the rule base fuzzy set after pruning.

Table 3.1 Fuzzy Associative Memories

(a) (b)

3.2.4 Reasoning Techniques

There are various ways in which the observed input values can be used to identify which

rules should be used and to infer an appropriate fuzzy control action. Among the various

fuzzy inference methods, the following are the most commonly used in industrial FLCs.

1. The point-valued MAX-MIN fuzzy inference method

2. The point-valued MAX-PRODUCT (or MAX-DOT) fuzzy inference method

Due to the nature of industrial process control, it is often the case that the input data are crisp.

Fuzzification typically involves treating these as fuzzy singletons, which are then used with a

fuzzy inference method. Assume that the fuzzy control rule base has only two rules:

Rule 1: IF x is A1 and y is B1 THEN z is C1

Rule2: IF x is A2 and y is B2 THEN z is C2

Let the fire strength of the ith rule be denoted by i;. For inputs x0 and y0 , the fire strength

1 and 2 can be calculated from

1=A1(x0) B1(y0)

2=A2(x0) B2(y0) (3.7)

49

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

MAX-MIN Fuzzy Reasoning

In MAX-MIN fuzzy reasoning, Mamdani's minimum operation rule Rc is used for fuzzy

implication. The membership of the inferred consequence C is point-wise given by

𝜇𝑐 (𝑧) = ⋁𝑛𝑖=1 (𝛼𝑖 ∧ 𝜇𝑐1 (𝑦)) (3.8)

Figure 3.6 shows the MAX-MIN inference process for the crisp input values x0 and y0 which

have been regarded as fuzzy singletons.

Figure 3.6 MAX-MIN inference

MAX-PRODUCT Fuzzy Reasoning

In MAX-PRODUCT fuzzy reasoning, Larsen's product operation rule RP is used for

fuzzy implication. The membership of the inferred consequence C is point-wise given by

𝜇𝑐 (𝑧) = ⋁𝑛𝑖=1 (𝛼𝑖 ⋅ 𝜇𝑐1 (𝑦)) (3.9)

Figure 3.7 shows the MAX- PRODUCT inference process for the crisp input values x0 and

y0.

Figure 3.7 MAX-PRODUCT fuzzy inference

50

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

3.2.5 Defuzzification

Defuzzification is the process of mapping from a space of inferred fuzzy control actions to a

space of non-fuzzy (crisp) control actions. A defuzzification strategy is aimed at producing

a non-fuzzy control action that best represents the possibility distribution of the inferred

fuzzy control action. This can be expressed by:

u = defuzzifter(U) (3.10)

In real-time implementation of fuzzy logic control, the commonly used defuzzification

strategies are the mean of maximum (MOM) and the centre of area (COA).

Mean of Maximum (MOM) Method

The MOM strategy (height defuzzification) generates a control action which represents the

mean value of all local control actions whose membership functions reach the maximum.

Let the number of rules be denoted by n, the maximum height of the membership function of

the fuzzy set defined for the output control (consequent) of the i-th rule by the crisp value Hi,

the corresponding crisp control value along the output universe of discourse by Ui and the fire

strength from the i-th rule by i. Then the crisp control value u* defuzzified using the MOM

method is given by:

∑𝑛

𝑖=1 𝛼𝑖 𝐻𝑖 𝑈𝑖

𝑢∗ = ∑𝑛

(3.11)

𝑖=1 𝛼𝑖 𝐻𝑖

The crisp value Ui is a support value at which the membership function reaches

maximum Hi (most often Hi = 1). In addition, although the fire strength from the i-th rule i

is normally calculated as described in Equation 3.7, a more effective method for calculating

the fire strength in a MOM method is

i = Ai(xi)• Bi(yi). (3.12)

Assuming Hi = 1,

∑𝑛

𝑖=1 𝛼𝑖 𝑈𝑖

𝑢∗ = ∑𝑛

(3.13)

𝑖=1 𝛼𝑖

51

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Centre of Area (COA) Method

The COA strategy generates the centre of gravity of the possibility distribution of a control

action.

Let the number of rules be denoted by n, the amount of control output of the i-th rule ui, and

its corresponding membership value in the output fuzzy set C 𝜇𝐶 (𝑢𝑖 ). Then the crisp control

value u* defuzzified using the COA method is given by:

∑𝑛

𝑖=1 𝜇𝐶 (𝑢𝑖 )𝑢𝑖

𝑢∗ = ∑𝑛

(3.14)

𝑖=1 𝜇𝐶 (𝑢𝑖 )

If the universe of discourse is continuous, then the COA strategy generates an output control

action of

∫ 𝜇𝐶 (𝑢)𝑢𝑑𝑢

𝑢∗ = (3.15)

∫ 𝜇𝐶 (𝑢)𝑑𝑢

52

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Example 3.5

In a control system, the membership functions of system variables error e , change of error

ce, and controller output (plant input) u are shown Figure 3.8.

Assume that the fuzzy rules are represented by a FAM Table (Table 3.2), and the current

values of error e and change of error ce are {e = 1.5, ce = −0.05}. Assume also that the ranges

of error, change of error, and controller output are [−3,3], [−1,1], and [−6,6] respectively.

error e

change of error ce

Controller output u

Figure 3.8 Membership functions of a control system

Table 3.2 FAM Table

Change of Error (CE)

N ZE P

N N N ZE

Error (E)

ZE N ZE P

P ZE P P

53

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Solution 3.5

Step 1: Fuzzification

The current values of error e and change of error ce are {e = 1.5, ce = −0.05}

In fuzzy notation:

E1.5=0.25/ZE+0.75/P

CE-0.05=0.1/N+0.9/ZE

Step 2: Reasoning

E CE U (MOM) U(COA)

Rule 1 0.25/ZE 0.1/N 0.025/N 0.1/N

Rule 2 0.25/ZE 0.9/ZE 0.225/ZE 0.25/ZE

Rule 3 0.75/P 0.1/N 0.075/ZE 0.1/ZE

Rule 4 0.75/P 0.9/ZE 0.675/P 0.75/P

Step 3: Defuzzification

According to the MOM method, the output 𝑢∗ can be found using

∑𝑛𝑖=1 𝛼𝑖 𝑈𝑖 0.025(−4) + 0.225(0) + 0.075(0) + 0.675(4)

𝑢∗ = = = 2.6

∑𝑛𝑖=1 𝛼𝑖 0.025 + 0.225 + 0.075 + 0.675

According to the COA method, the output u* can be found using the max-min inference

process as shown in below

∫ 𝜇𝐶 (𝑢)𝑢𝑑𝑢

𝑢∗ =

∫ 𝜇𝐶 (𝑢)𝑑𝑢

−3.6 −3 1 3 6

∫−6 0.1𝑢𝑑𝑢 + ∫−3.6(0.25𝑢 + 1)𝑢𝑑𝑢 + ∫−3 0.25𝑢𝑑𝑢 + ∫1 (0.25𝑢)𝑢𝑑𝑢 + ∫3 0.75𝑢𝑑𝑢

= 6 −3.6 1 3 6

∫−3.6 0.1𝑑𝑢 ∫−3 (0.25𝑢 + 1)𝑑𝑢 + ∫−3 0.25𝑑𝑢 + ∫1 (0.25𝑢) 𝑑𝑢 + ∫3 0.75𝑑𝑢

54

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

−3.6 −3 1 3 6

𝑢2 𝑢3 𝑢2 𝑢2 𝑢3 𝑢2

(0.1 2 ) + (0.25 3 + 2 ) + (0.25 2 ) + (0.25 3 ) + (0.75 2 )

−6 −3.6 −3 1 3

= −3 3

𝑢2 𝑢2

(0.1𝑢)−3.6

−6 + (0.25 + 𝑢) + (0.25𝑢)1−3 + (0.25 ) + (0.75𝑢)63

2 −3.6

2 1

−1.152 − 0.342 − 1 + 2.1667 + 10.125 9.7977

= = = 2.1323

0.24 + 0.105 + 1 + 1 + 2.25 4.595

Figure 3.9 Max-Min Inference Process

55

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Chapter 3.3 Closed Loop Fuzzy Logic Control

A control system for a physical system is an arrangement of hardware components designed

to alter, to regulate, or to command, through a control action, another physical system so that

it exhibits certain desired characteristics or behavior. Physical control systems are typically of

two types: open-loop control systems, in which the control action is independent of the physical

system output, and closed-loop control systems (also known as feedback control systems), in

which the control action depends on the physical system output. Examples of open-loop control

systems are a toaster, in which the amount of heat is set by a human, and an automatic washing

machine, in which the controls for water temperature, spin-cycle time, and so on are preset by

a human. In both these cases, the control actions are not a function of the output of the toaster

or the washing machine. Examples of feedback control are a room temperature thermostat,

which senses room temperature and activates a heating or cooling unit when a certain threshold

temperature is reached, and an autopilot mechanism, which makes automatic course

corrections to an aircraft when heading or altitude deviations from certain preset values are

sensed by the instruments in the plane’s cockpit.

To control any physical variable, we must first measure it. The system for measurement of the

controlled signal is called a sensor. The physical system under control is called a plant. In a

closed-loop control system, certain forcing signals of the system (the inputs) are determined

by the responses of the system (the outputs). To obtain satisfactory responses and

characteristics for the closed-loop control system, it is necessary to connect an additional

system, known as a compensator, or a controller, to the loop. The general form of a closed-

loop control system is illustrated in Figure 3.10. The control problem is stated as follows. The

output, or response, of the physical system under control (i.e., the plant) is adjusted as required

by the error signal. The error signal is the difference between the actual response of the plant,

as measured by the sensor system, and the desired response, as specified by a reference input.

56

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

Figure 3.10 A closed-loop control system

First-generation (nonadaptive) simple fuzzy controllers can generally be depicted by a block

diagram such as that shown in Figure 3.11.

Figure 3.11 A simple fuzzy logic control system block diagram

The knowledge-base module in Figure 3.11 contains knowledge about all the input and output

fuzzy partitions. It will include the term set and the corresponding membership functions

defining the input variables to the fuzzy rule-base system and the output variables, or control

actions, to the plant under control.

The steps in designing a simple fuzzy control system are as follows:

1. Identify the variables (inputs, states, and outputs) of the plant.

2. Partition the universe of discourse or the interval spanned by each variable into a

number of fuzzy subsets, assigning each a linguistic label (subsets include all the

elements in the universe).

3. Assign or determine a membership function for each fuzzy subset.

4. Assign the fuzzy relationships between the inputs’ or states’ fuzzy subsets on the one

hand and the outputs’ fuzzy subsets on the other hand, thus forming the rule-base.

57

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

5. Choose appropriate scaling factors for the input and output variables to normalize the

variables to the [0, 1] or the [−1, 1] interval.

6. Fuzzify the inputs to the controller.

7. Use fuzzy approximate reasoning to infer the output contributed from each rule.

8. Aggregate the fuzzy outputs recommended by each rule.

9. Apply defuzzification to form a crisp output.

Aircraft Landing Control Problem

The following example shows the flexibility and reasonable accuracy of a typical application

in fuzzy control.

Example 3.6

We will conduct a simulation of the final descent and landing approach of an aircraft. The

desired profile is shown in Figure 3.12. The desired downward velocity is proportional to the

square of the height. Thus, at higher altitudes, a large downward velocity is desired. As the

height (altitude) diminishes, the desired downward velocity gets smaller and smaller. In the

limit, as the height becomes vanishingly small, the downward velocity also goes to zero. In

this way, the aircraft will descend from altitude promptly but will touch down very

gently to avoid damage.

Figure 3.12 The desired profile of downward velocity versus altitude

The two state variables for this simulation will be the height above ground, h, and the vertical

velocity of the aircraft, v (Figure 3.13). The control output will be a force that, when applied

to the aircraft, will alter its height, h, and velocity, v. The differential control equations are

loosely derived as follows. See Figure 3.14. Mass, m, moving with velocity, v, has momentum,

58

Downloaded by Phúc Nguy?n (hphuc4499@gmail.com)

lOMoARcPSD|27553903

49275 Neural Networks and Fuzzy Logic

p = mv. If no external forces are applied, the mass will continue in the same direction at the

same velocity. If a force, f, is applied over a time interval Δt, a change in velocity of Δv = fΔt/m

will result. If we let Δt = 1.0 (s) and m = 1.0 (Ib s2 ft−1), we obtain Δv = f (lb), or the change in