Professional Documents

Culture Documents

Chapter 2

Uploaded by

berhanu seyoumOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Chapter 2

Uploaded by

berhanu seyoumCopyright:

Available Formats

CHAPTER 2

QUALITATIVE EXPLANATORY AND REGRESSAND VARIABLE

OF REGRESSION MODELS

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 1

2.1 Qualitative Explanatory Variables in Regression Models

In regression analysis , in addition to quantitative variables, the

dependent variable may be essentially influenced by qualitative nature

of the variables.

These are marital status, job category, season, gender, race, colour,

religion, nationality, geographical region, party affiliation, and political

upheavals and etc.

These qualitative variables are essentially nominal scale variables,

having no particular numerical values, which are known as dummy

variables, indicator variables, categorical variables, and qualitative

variables.

But we can "quantify" them by taking values of 0 and 1, where 0

indicating the absence of an attribute and 1 indicating its presence.

Thus the gender variable can be quantified as male = 1 and female =0,

or vice versa and We use them in the regression equation together

with the other independent variables.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 2

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 3

But random assignment can’t always control for all possible other

factors—though sometimes we may be able to identify some of these

factors and add them to our equation

Let’s say that the treatment is job training:

◦ Suppose that random assignment, by chance, results in one group

having more males and being slightly older than the other group

◦ If gender and age matter in determining earnings, then we can

control for the different composition of the two groups by including

gender and age in our regression equation:

OUTCOMEi = β0 + β1TREATMENTi + β2D1i + β3X2i + εi

where: D1i = dummy variable for the individual’s gender

X2 = the individual’s age

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 4

Unfortunately, random assignment experiments are not common in

economics because they are subject to problems that typically do not

plague medical experiments—e.g.:

1. Non-Random Samples:

Most subjects in economic experiments are volunteers, and samples

of volunteers often aren’t random and therefore may not be

representative of the overall population

As a result, our conclusions may not apply to everyone

2. Unobservable Heterogeneity:

In Equation the above, we added observable factors to the equation to

avoid omitted variable bias, but not all omitted factors in economics

are observable

This “unobservable omitted variable” problem is called unobserved

heterogeneity

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 5

3. The Hawthorne Effect:

Human subjects typically know that they’re being studied, and they

usually know whether they’re in the treatment group or the control

group

The fact that human subjects know that they’re being observed

sometimes can change their behavior, and this change in behavior

could clearly change the results of the experiment

4. Impossible Experiments:

It’s often impossible (or unethical) to run a random assignment

experiment in economics

Think about how difficult it would be to use a random assignment

experiment to study the impact of marriage on earnings!

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 6

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 7

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 8

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 9

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 10

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 11

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 12

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 13

2nd, If a qualitative variable has m categories, you may include m

dummies, provided you do not include the (common) intercept in the

model. This way we do not fall into the dummy variable trap.

3rd, The category that the value of 0 is called the reference, benchmark

or comparison category. All comparisons are made in relation to the

reference category, as we will show with our example.

4th , if there are several dummy variables, you must keep track of the

reference category; otherwise, it will be difficult to interpret the

results.

5th, at times we will have to consider interactive dummies, which we

will illustrate shortly.

6th, since dummy variables take values of 1 and 0, we cannot take their

logarithms. That is, we cannot introduce the dummy variables in log

form.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 14

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 15

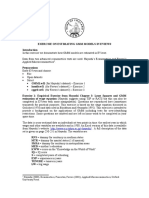

Table 2.1: Regression Results for a model of wage determination:

Dependent Variable: WAGE; Method: Least Squares, Sample: 1 1289

Included observations: 1289

Coefficient Std. Error t-Statistic Prob. Values

CONSTANT -7.183338 1.015788 -7.071691 0.0000

FEMALE -3.074875 0.364616 -8.433184 0.0000

NONWHITE -1.565313 0.509188 -3.074139 0.0022

UNION 1.095976 0.506078 2.165626 0.0305

EDUCATION 1.370301 0.065904 20.79231 0.0000

EXPER 0.166607 0.016048 lO.38205 0.0000

R-squared 0.323339 Mean dependent var 12.36585

Adjusted R-squared 0.320702 S.D. dependent var 7.896350

S.E. of regression 6.508137 Akaike info criterion 6.588627

Sum squared resid 54342.54 Schwarz criterion 6.612653

Log likelihood -4240.370 Durbin-Watson stat 1.897513

F-statistic 122.6149 Prob(F -statistic) 0.000000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 16

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 17

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 18

Interactive dummies

To find out the interactive dummies, we re-estimate the wage function above by adding

to it the product of the female and non-white dummies. Such a product is called an

interactive dummy, for it interacts the two qualitative variables. Adding the interactive

dummy, we obtain the results in reported in the following table.

Table 2.2: Wage function with interactive dummies.

Dependent Variable: WAGE, Method: Least Squares, Sample: 1 1289

Included observations: 1289

Coefficient Std. Error t-Statistic Prob. Values

Constant -7.0887 1.0195 -6.9533 0.0000

-3.2401 0.3953 -8.1961 0.0000

-2.1585 0.7484 -2.8840 0.0040

1.1150 0.5063 2.2021 0.0278

Education 1.3701 0.0659 20.791 0.0000

Experiences 0.1658 0.0161 10.326 0.0000

1.0954 1.0128 1.0814 0.2797

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 19

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 20

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 21

Table 2.3: Wage function with differential intercept and slope dummies.

Dependent Variable: WAGE, Method: Least Squares, Sample: 1 1289

Included observations: 1289

Variable Coefficient Std. Error t-Statistic Prob. Values

Constant -11.09129 1.421846 -7.8033 0.0000

3.174158 1.966465 1.614144 0.1067

2.909129 2.780066 1.046424 0.2956

4.454212 2.973494 1.497972 0.1344

Edu 1.587125 0,093819 16.91682 0.0000

Exp 0.220912 0,025107 8.798919 0.0000

-0.336888 0,131993 -2,552314 0.Q108

-0.096125 0.031813 -3,021530 0.0026

-0.321855 0,195348 -1.647595 0.0997

-0.022041 0.044376 -0.496700 0.6195

-0.198323 0.191373 -1.036318 0.3003

-0.033454 0.046054 -0,726410 0,4677

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 22

2

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 23

Table 2.4 Reduced wage function.

Dependent Variable: WAGE, Method: Least Squares, Sample: 1

1289

Included observations: 1289

Variable Coefficient Std. Error t-Statistic Prob. Values

Cons -10.645 1.3718 -7.7600 0.0000

FE 3.2574 1.9592 1.6626 0.0966

NW 2.6269 2.4178 1.0864 0.2775

UN 1.0785 0.5053 2.1339 0.0330

Edu 1.5658 0.0918 17.054 0.0000

Exp 0.2126 0.0227 9.3381 0.0000

FE*Edu -0.3469 0.1314 -2.6386 0.0084

FE*Exp -0.0949 0.0315 -3.0074 0.0027

NW*Edu -0.3293 0.1866 -1.7648 0.0778

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 24

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 25

Of course, there are other possibilities to express the wage function.

For example, you may want to interact female with union and education

(female*union*education), which will show whether the females who are

educated and belong to unions have differential wages with respect to

education or union status.

But beware of introducing too many dummy variables, for they can rapidly

consume degrees of freedom..

Table 2.5 Semi-log model of wages.

Dependent Variable: lnWAGE, Method: Least Squares,

Included observations: 1289

Variable Coefficient Std. Error t-Statistic Prob. Values

Constant 0.9055 0.0741 12.2076 0.0000

-0.2491 0.0266 -9.3578 0.0000

-0.1335 0.0371 -3.5913 0.0003

0.1802 0.0369 4.8763 0.0330

Edu 0.0998 0.0048 20.752 0.0000

Exp 0.0127 0.0012 10.889 0.0000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 26

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 27

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 28

1

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 29

Table 2.6 Regression of GPI on GPS with interactive dummy.

Dependent Variable: GPI, Method: Least Squares,

Sample: 1959-2007

Included observations: 49

Variable Coefficient Std. Error t-Statistic Prob.

Values

Constant -32.490 23.249 -1.3974 0.1691

1.0692 0.0259 41.256 0.0000

-327.85 61.753 -5.3089 0.0000

0.2441 0.0445 5.4747 0.0000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 30

.

.

.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 31

2.2 Limited Dependent Variable Models

The standard linear regression models are applied when the behaviour of

economic agents approximated by continuous variables such as income,

saving, expenditure, output, etc.

But there are many situations in which the dependent variable in a

regression equation simply represents a discrete choice assuming only a

limited number of values.

Models involving dependent variables of this kind are called limited or

discrete dependent variable models also called qualitative response

models.

Examples of discrete choices (a) whether to work or not, (b) whether to

attend formal education or not, (c) Choice of occupation and (d) Brand of

consumer durable goods to purchase.

There are three approaches to developing a probability model for a binary

response variable: Linear Probability, Logit and Probit models.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 32

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 33

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 34

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 35

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 36

Probability

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 37

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 38

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 39

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 40

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 41

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 42

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 43

1

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 44

2.2.1 of the Logit model

𝑛

𝑦𝑖 1−𝑦 𝑖

𝐿= 𝐺(𝑋𝑖 𝛽) 1 − 𝐺(𝑋𝑖 𝛽)

𝑖=1

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 45

Applying natural logarithms, we can get the log-likelihood function as:

𝑛

𝑙𝑛𝐿 = 𝑦𝑖 𝑙𝑛 𝐺(𝑋𝑖 𝛽) + (1 − 𝑦𝑖 )𝑙𝑛 1 − 𝐺(𝑋𝑖 𝛽)

𝑖=1

This equation is highly non-linear, and we need to apply numerical optimization

methods to obtain the solutions.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 46

𝑛

𝑙𝑛𝐿 = 𝑦𝑖 𝑙𝑛 𝐺(𝑋𝑖 𝛽) + (1 − 𝑦𝑖 )𝑙𝑛 1 − 𝐺(𝑋𝑖 𝛽)

𝑖=1

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 47

𝑛

𝑙𝑛𝐿 = 𝑦𝑖 𝑙𝑛 𝑒 𝛼 +𝑋𝑖 𝛽 − 𝑦𝑖 ln

(1 + 𝑒 𝛼 +𝑋𝑖 𝛽 − (1 − 𝑦𝑖 ) ln

(1 + 𝑒 𝛼 +𝑋𝑖 𝛽

𝑖=1

The first order conditions are:

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 48

𝒏 𝒏 𝒏 𝒏

𝒀𝒊 − 𝜶 + 𝜷𝑿𝒊 𝑿𝒊 = 𝟎 ⇒ 𝒀𝒊 𝑿𝒊 = 𝜶 𝑿𝒊 + 𝜷 𝑿𝒊 𝟐

𝒊=𝟏 𝒊=𝟏 𝒊=𝟏 𝒊=𝟏

𝒏 𝒏 𝒏

𝒀𝒊 − 𝜶 + 𝜷𝑿𝒊 =𝟎⇒ 𝒀𝒊 = 𝒏𝜶 + 𝜷 𝑿𝒊

𝒊=𝟏 𝒊=𝟏 𝒊=𝟏

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 49

Odds Ratio : CDF

We realize that the sigmoid, or S-

shaped, curve in the figure is

very much resembles the

cumulative distribution function

(CDF) of a random variable.

Therefore, one can easily use the

CDF to model regressions where

the response variable is

dichotomous, taking 0–1 values.

Fig. A cumulative distribution function (CDF) can be both for logit

and probit models.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 50

odds( x 1) p ( x)

e odds( x)

odds( x) 1 p ( x)

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 51

• Step 2: Raise e = 2.718 to the lower and upper bounds of the Confidence

Interval :

•If entire interval is above 1, conclude positive association

• If entire interval is below 1, conclude negative association

• If interval contains 1, cannot conclude there is an association

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 52

Multiple or Multinomial Logistic Regression

• Extension to more than one predictor variable either numeric or

dummy variables with k predictors, the model is written:

e 0 1x1 k xk i

G ( x, ) 0 1 x1 k xk

e ( Adgusted)

1 e

• Adjusted Odds ratio for raising xi by 1 unit, holding all other predictors

constant.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 53

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 54

• The logit model uses the logistic probability distribution to estimate

the parameters of the model.

• Although seemingly nonlinear, the log of the odds ratio makes the logit

model linear in the parameters.

• If we have grouped data, we can estimate the logit model by OLS

whereas in the cases of micro-level data, we have to use the method of

maximum likelihood.

• In the former case we will have to correct for heteroscedasticity in the

error term.

• Unlike the LPM, the marginal effect of a regressor in the logit model

depends not only on the coefficient of that regressor but also on the

values of all regressors in the model.

• Until the availability of readily accessible computer packages to estimate the logit

and probit models, the LPM was used quite extensively because of its simplicity.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 55

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 56

• An alternative to logit is the probit model. The underlying probability

distribution of probit is the normal distribution.

• The parameters of the probit model are usually estimated by the

method of maximum likelihood.

• Like the logit model, the marginal effect of a regressor in the probit

model involves all the regressors in the modeL

• The logit and probit coefficients cannot be compared directly. But if you

multiply the probit coefficients by 1.81, they are then comparable with

the logit coefficients.

• This conversion is necessary because the underlying variances of the

logistic and normal distribution are different.

• In practice, the logit and probit models give similar results. The choice

between them depends on the availability of software and the ease of

interpretation.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 57

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 58

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 59

Table 2.7 Linear probability model of to smoke or not to smoke.

Dependent Variable: WAGE, Method: Least Squares, Sample: 1 1289

Included observations: 1289

Coefficient Std. Error t-Statistic Prob. Values

Constant 1.1230 0.1883 5.9625 0.0000

Age -0.0047 0.0008 -5.7009 0.0000

Edu -0.0206 0.0046 -4.4652 0.0040

Income 1.03E-06 1.63E-06 0.6285 0.5298

Pcig 0.0051 0.0028 -0.7990 0.0723

R-squared 0.0387

Adjusted R-squared 0.0355

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 60

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 61

• We can refine this model by introducing interaction terms, such as age

multiplied by education, or education multiplied by income, or

introduce a squared term in education or in age to find out if there is

nonlinear impact of these variables on smoking.

• But there is no point in doing that, because the LPM has several

inherent limitations.

• Firstly, the LPM assumes that the probability of smoking moves linearly

with the value of the explanatory variable, no matter how small or

large that value is.

• Secondly, by logic, the probability value must lie between 0 and 1. But

there is no guarantee that the estimated probability values from the

LPM will lie within these limits. This is because OLS does not take into

account the restriction that the estimated probabilities must lie within

the bounds of 0 and 1.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 62

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 63

Estimation of the logit model

Estimation of the logit model depends on the type of data available for

analysis, either data at the individual, or micro, level, as in the case of the

smoker example, and data at the group level.

Table 2.8.:Logit model of to smoke or not to smoke.

Dependent Variable: SMOKER, Method: Maximum Likelihood(ML) - Binary Logit

(Quadratic hill climbing), Sample: 1 1196, Included observations: 1196

Convergence achieved after 3 iterations QML (Huber/White) standard errors &

covariance

Coefficient Std. Error t-Statistic Prob. Values

Constant 2.7450 0.8217 3.3405 0.0008

Age -0.0208 0.0036 -5.7723 0.0000

Edu -0.0909 0.0205 -4.4274 0.0000

Income 4.72E-06 7.27E-06 0.6490 0.5163

Pcig -0.0223 0.0123 -1.8016 0.0716

McFadden R-squared = 0.0297, LR statistic= 47.26 with Prob(LR statistic)=0.000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 64

• Let us examine these results. The variables age and education are highly

statistically significant and have the expected signs. As age of the people

increases, the value of the logit decreases, or they are less likely to smoke

perhaps due to health concerns that is as people age.

• Likewise, more educated people are less likely to smoke, perhaps due to

the ill effects of smoking.

• The price of cigarettes has the expected negative sign and is significant at

about the 7% level. Ceteris paribus, the higher the price of cigarettes, the

lower is the probability of smoking.

• Income has no statistically visible impact on smoking, perhaps because

expenditure on cigarettes may be a small proportion of family income.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 65

That is, the probability that a person with the given characteristics is a smoker is

about 38% and this person is a smoker.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 66

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 67

• We can test the null hypothesis that all the coefficients are

simultaneously zero with the likelihood ratio (LR) statistic, which is

the equivalent of the F-test in the linear regression model.

• Under the null hypothesis that none of the regressors are significant,

the LR statistic follows the chi-square distribution with df equal to the

number of explanatory variables, which four.

• As you can see at the bottom of the above Table2.8 shows, the value of

the LR statistic is about 47.26 and the p value is practically zero, thus

refuting the null hypothesis.

• Therefore, the four variables included in the logit model are important

determinants of smoking habits .

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 68

Table 2.9: The logit model of smoking with interaction.

Dependent Variable: SMOKER Method: ML Binary Logit (Quadratic hill climbing),

Sample: 1 1196, Included observations: 1196, Convergence achieved after 10

iterations and Covariance matrix computed using second derivatives

Coefficient Std. Error t-Statistic Prob. Values

Constant 1.0931 0.9556 1.1438 0.2527

Age -0.0182 0.0037 -4.8112 0.0000

Edu 0.0394 0.0425 0.9281 0.3533

Income 9.50E-05 2.69E-06 3.5351 0.0004

Pcig -0.0217 0.0125 -1.7324 0.0832

-7.45E-06 2.13E-06 -3.4897 0.0005

McFadden R-squared =0.0377, LR statistic= 59.96 with Prob(LR statistic)=0.000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 69

• In Table 2.8, individually education had a significant negative impact on

the logit (probability of smoking) and income had no statistically

significant impact.

• However, introducing the multiplicative or interactive effect of the two

variables as an additional explanatory variable, the results are given in

Table 2.9.

• Now education by itself has no statistically significant impact on the logit,

but income has highly significant positive impact.

• But if you consider the interactive term, education multiplied by income,

it has significant negative impact on the logit.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 70

• That is, persons with higher education who also have higher incomes

are less likely to be smokers than those who are more educated only or

have higher incomes only.

• What this suggests is that the impact of one variable on the probability

of smoking may be reinforced by the presence of other variable(s).

• In the LPM, the error term has non-normal distribution; in the logit

model, the error term has the logistic distribution and in the probit

model, it has the normal distribution.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 71

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 72

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 73

Table 2.10: ML estimation of the censored regression model.

Dependent Variable: HOURS Method: ML - Censored Normal (TOBIT) (Quadratic

hill climbing) Sample: 1 753,Included observations: 753, Left censoring (value) at

zero, Convergence achieved after 6 iterations and Covariance matrix computed

using second derivatives

Coefficient Std. Error Z-Statistic Prob. Values

Constant 1126.3 379.58 2.9672 0.0030

AGE -54.109 6.6213 -8.1720 0.0000

EDU 38.646 20.684 1.8683 0.0617

EXP 129.82 16.229 7.9993 0.0000

EXPQ -1.8447 0.5096 -3.6194 0.0003

FAMINC 0.0407 0.5096 7.7540 0.0000

KIDSLT6 -782.37 103.75 -7.5408 0.0000

HUSWAGE -105.509 15.629 -6.7507 0.0000

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 74

Interpretation of the Tobit estimates

For example, if the husband's wages go up, on average, a woman will

work less in the labour market, ceteris paribus.

The slope coefficients of the various variables in Table 2.10 gives the

marginal impact of that variable on the mean value of the latent

variable

But in practice we are interested in the marginal impact of a regressor

on the mean value of the actual values observed in the sample.

In the Tobit type censored regression models, a unit change in the

value of a regressor has two effects:

(1) the effect on the mean value of the observed regressand, and

(2) the effect on the probability that the latent variable is actually

observed.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 75

2.10

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 76

2.10

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 77

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 78

As in the Tobit model, an individual regression coefficient in truncated

samples measures the marginal effect of that variable on the mean

value of the regressand for all observations - that is, including the non-

included observations.

Hence, the within-sample marginal effect of a regressor is smaller in

absolute value than the value of the coefficient of that variable, as in the

case of the Tobit model.

Assuming that the error term follows the normal distribution with zero

mean and constant variance, we can estimate censored regression

models by the method of maximum likelihood (ML). The estimators

thus obtained are consistent.

Since the Tobit model uses more information or observations than the

truncated regression model, the estimates obtained from Tobit are

expected to be more efficient.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 79

One major caveat is that the ML estimators are consistent only if the

assumptions about the error term are valid.

In cases of heteroscedasticity and non-normal error term, the ML

estimators are inconsistent.

Alternative methods need to be devised in such situations as compute

robust standard errors,

In practice, censored regression models may be preferable to the

truncated regression models because in the former we include all the

observations in the sample, whereas in the latter we only include

observations in the truncated sample.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 80

Modeling count data: the Poisson and negative binomial

regression models

The regressand is of the count type in many a phenomena, such as the

number of:

visits to a zoo in a given year,

patents received by a firm in a year,

visits to a dentist in a year,

speeding tickets received in a year,

cars passing through a toll booth in a span of, say, 5 minutes, and

so on. The

underlying variable in each case is discrete, taking only a finite non-

negative number of values.

Tests are conducted as in Logistic regression.

When the mean and variance are not equal, the Poisson Distribution

will be replaced with Negative Binomial Distribution

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 81

A unique feature of all these examples is that they take a finite number

of non-negative integer, or count, values.

Regression models based on poisson probability distribution are

known as poisson regression models (PRM).

An alternative to PRM is negative binomial regression model (NBRM),

which is based on the negative binomial probability distribution and is

used to remedy some of the deficiencies of the PRM.

This model is nonlinear in parameters, necessitating nonlinear

regression estimation.

This can be accomplished by the method of maximum likelihood (ML).

The Poisson regression model which is often used to model count data,

based on the Poisson probability distribution(PPD.

A unique feature of the PPD is that the mean of a poisson variable is the

same as its variance.This is also a restrictive feature of PPD.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 82

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 83

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 84

We cannot use the normal probability distribution to model count data.

The Poisson probability distribution (PPD) is often used to model count

data, especially to model rare or infrequent count data.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 85

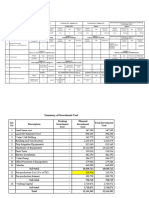

2.11 Tabulation of patent raw data.

Tabulation ofP90

Sample: 1 181

Included observations: 181

Number of categories: 5

Cumulative Cumulative

# Patents Count Percent Count Percent

[0,200) 160 88.40 160 88.40

[200,400) 10 5.52 170 93.92

[400,600) 6 3.31 176 97.24

[600,800) 3 1.66 179 98.90

[800,1000) 2 1.10 181 100.00

Total 181 100.00 181 100.00

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 86

Table 2.12 Poisson model of patent data (ML estimation).

Dependent Variable: P90: Method: ML/QML - Poisson Count (Quadratic hill

climbing)Sample: 1 181, Included observations: 181 and Convergence achieved

after 6 iterations Covariance matrix computed using second derivatives

variable Coefficient Std. Error Z-Statistic Prob. Values

Constant -0.7458 0.0621 -12.003 0.0000

LR90 0.8651 0.0080 107.23 0.0000

AEROSP -0.7965 0.0679 -11.721 0.0000

CHEMIST 0.7747 0.0231 33.500 0.0000

COMPUTER 0.4688 0.0239 19.586 0.0000

MACHINES 0.6463 0.0380 16.994 0.0000

VEHICLES -1.1556 0.0391 -38.432 0.0000

JAPAN -0.0038 0.0268 -0.1449 0.8848

US -0.4189 0.0230 -18.140 0.0000

Log likelihood is -5081, Restr. log likelihood is -15822 and LR statistic is 21482

with its p.v. of 0.0000,

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 87

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 88

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 89

.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 90

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 91

Limitation of the Poisson regression model

The Poisson regression results for the patent and R&D given in Table 12.4

should not be accepted at face value.

The standard errors of the estimated coefficients given in that table are

valid only if the assumption of the Poisson distribution underlying the

estimated model is correct.

If there is over-dispersion, the PRM estimates, although consistent are

inefficient with standard errors that are downward biased.

If this is the case, the estimated Z values are inflated, thus over-estimating

the statistical significance of the estimated coefficients.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 92

The main point to note is that if one uses the poisson regression

model(PRM), it is subject to over-dispersion test.

If the test shows over-dispersion, we should at least correct the standard

errors by method of quasi-maximum likelihood estimation (QMLE) and

generalized linear model(GLM).

If the assumption of equi-dispersion underlying the PRM cannot be

sustained, and

Even if we correct the standard errors obtained by ML, it might be better

to search for alternative to PRM, which is the negative binomial regression

model, based on the negative binomial probability distribution.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 93

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 94

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 95

But these results may not be reliable because of the restrictive

assumption of the PPD that its mean and variance are the same.

In most practical applications of PRM, the variance tends to be greater

than the mean. This is the case of over-dispersion.

To correct for over-dispersion, we can use the methods of quasi

maximum likelihood estimation (QMLE) and generalized linear model

(GLM).

Both these methods correct the standard errors of the PRM, which

was estimated by the method of maximum likelihood (ML).

As a result of these corrections, it was found that several standard

errors in the PRM were severely underestimated, resulting in the

inflated statistical significance of the various regressors.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 96

In some cases, the regressors are found to be statistically insignificant,

in strong contrast with the original PRM estimates.

Since our results showed over-dispersion, we used an alternative

model, the negative binomial regression model (NBRM).

An advantage of NBRM model is that it allows for over-dispersion and

also provides a direct estimation of the extent of overestimation of the

variance.

The NBRM results also show that the original PRM standard errors

were underestimated in several cases.

8/1/2021 For MSc students by Urgaia R.(Ph.D.),OSU 97

You might also like

- Handbook of Regression MethodsDocument654 pagesHandbook of Regression Methodskhundalini100% (3)

- Key ConceptsDocument153 pagesKey ConceptsEdwin Lolowang100% (2)

- Factor AnalysisDocument16 pagesFactor AnalysissairamNo ratings yet

- Research Methodology MCQ..Document20 pagesResearch Methodology MCQ..Anseeta SajeevanNo ratings yet

- Non Linear RegressionDocument12 pagesNon Linear RegressionSheryl OsorioNo ratings yet

- Mostly Harmless Econometrics: An Empiricist's CompanionFrom EverandMostly Harmless Econometrics: An Empiricist's CompanionRating: 4 out of 5 stars4/5 (21)

- James W. Hardin, Joseph M. Hilbe - Generalized Linear Models and Extensions-Stata Press (2018)Document789 pagesJames W. Hardin, Joseph M. Hilbe - Generalized Linear Models and Extensions-Stata Press (2018)owusuessel100% (1)

- Empirical Project Assignment 1Document6 pagesEmpirical Project Assignment 1ehitNo ratings yet

- Experiment 3 FORMOSODocument5 pagesExperiment 3 FORMOSOZoe FormosoNo ratings yet

- P08 - 178380 - Eviews GuideDocument9 pagesP08 - 178380 - Eviews Guidebobhamilton3489No ratings yet

- Assosa University School of Graduate Studies Mba ProgramDocument10 pagesAssosa University School of Graduate Studies Mba Programyeneneh meseleNo ratings yet

- ET 310 Lab 4Document19 pagesET 310 Lab 4DylanNo ratings yet

- Analytics Report: Gray Hunter and John Mcclintock Yamelle Gonzalez Dummy Variables APRIL 14, 2020Document4 pagesAnalytics Report: Gray Hunter and John Mcclintock Yamelle Gonzalez Dummy Variables APRIL 14, 2020api-529885888No ratings yet

- Breakout Session - TaskDocument5 pagesBreakout Session - Taskandrew.tugumeNo ratings yet

- Applied EconomicsDocument18 pagesApplied EconomicsRolly Dominguez BaloNo ratings yet

- Chapter 4-5.editedDocument22 pagesChapter 4-5.editedannieezeh9No ratings yet

- Wibest 2nd 2021Document7 pagesWibest 2nd 2021Nisa ErsaNo ratings yet

- Spatial Data AnalysisDocument9 pagesSpatial Data AnalysisBezan MeleseNo ratings yet

- Research MethodologyDocument6 pagesResearch MethodologyMANSHI RAINo ratings yet

- ECOM30001 Basic Econometrics Semester 1, 2017: Week 1 Introduction: What Is Econometrics?Document12 pagesECOM30001 Basic Econometrics Semester 1, 2017: Week 1 Introduction: What Is Econometrics?A CHingNo ratings yet

- AdafdfgdgDocument15 pagesAdafdfgdgChelsea RoseNo ratings yet

- Chapter Fou1Document11 pagesChapter Fou1sue patrickNo ratings yet

- Chapter 14: Introduction To Panel DataDocument14 pagesChapter 14: Introduction To Panel DataAnonymous sfwNEGxFy2No ratings yet

- Assignment 2.1Document19 pagesAssignment 2.1SagarNo ratings yet

- Lecture Outline 9.2 PDFDocument4 pagesLecture Outline 9.2 PDFyip33No ratings yet

- Exercise 3 Two Way ANOVADocument8 pagesExercise 3 Two Way ANOVAjia quan gohNo ratings yet

- Ardl Analysis Chapter Four FinalDocument9 pagesArdl Analysis Chapter Four Finalsadiqmedical160No ratings yet

- Application of Chi - Square Test of Independence: A Seminar Presented ONDocument9 pagesApplication of Chi - Square Test of Independence: A Seminar Presented ONjimoh olamidayo MichealNo ratings yet

- Maths - Ijamss - Control Limits For Variable Fraction - Rebecca Jebaseeli EdnaDocument4 pagesMaths - Ijamss - Control Limits For Variable Fraction - Rebecca Jebaseeli Ednaiaset123No ratings yet

- Econometrics 2Document110 pagesEconometrics 2Nguyễn Quỳnh HươngNo ratings yet

- Chapter 4Document9 pagesChapter 4Rizwan RaziNo ratings yet

- Lecture 4 - Design of Experiments1Document15 pagesLecture 4 - Design of Experiments1waireriannNo ratings yet

- Bab 3 RevisiDocument7 pagesBab 3 RevisiZeroNine. TvNo ratings yet

- Kubsa Guyo Advance BiostatisticDocument30 pagesKubsa Guyo Advance BiostatistickubsaNo ratings yet

- Decision Science June 2023 Sem IIDocument8 pagesDecision Science June 2023 Sem IIChoudharyPremNo ratings yet

- Session 05-2021 (2021 - 10 - 19 04 - 03 - 36 UTC)Document18 pagesSession 05-2021 (2021 - 10 - 19 04 - 03 - 36 UTC)Della DillinghamNo ratings yet

- Models Evaluation I 2020Document15 pagesModels Evaluation I 2020Vivek Singh NainwalNo ratings yet

- Experiment # 3 Conservation of EnergyDocument5 pagesExperiment # 3 Conservation of EnergyellatsNo ratings yet

- Name: Kamran Ahmed Roll No: M19MBA064 Semester: 3 Subject: Econometrics Teacher Name: Sir UsmanDocument6 pagesName: Kamran Ahmed Roll No: M19MBA064 Semester: 3 Subject: Econometrics Teacher Name: Sir UsmanShahzad QasimNo ratings yet

- BRM AssgnmntDocument14 pagesBRM Assgnmntraeq109No ratings yet

- FR2202mock ExamDocument6 pagesFR2202mock ExamSunny LeNo ratings yet

- 2 SLS (Direct and Indirect) For Meroz Data SetDocument4 pages2 SLS (Direct and Indirect) For Meroz Data SetAadil ZamanNo ratings yet

- EconomicsDocument3 pagesEconomicsAlfred AwuorNo ratings yet

- Calib ReportDocument11 pagesCalib Reportthandeka ncubeNo ratings yet

- Terms and DefinitionsDocument31 pagesTerms and DefinitionskauluzavanessaNo ratings yet

- N Paper 0-92-11may-Ccbs Docs 9916 1Document12 pagesN Paper 0-92-11may-Ccbs Docs 9916 1kamransNo ratings yet

- UAS 44 Scoring ExplanationDocument6 pagesUAS 44 Scoring ExplanationROBERTO ENRIQUE GARCÍA AGUILARNo ratings yet

- Esaet2023 131 137Document7 pagesEsaet2023 131 137baotramlehuynh2k4No ratings yet

- Self Efficacy ManuscriptDocument15 pagesSelf Efficacy ManuscriptTroy Delos SantosNo ratings yet

- Lect 1 Descriptive StatisticsDocument38 pagesLect 1 Descriptive StatisticsRéy SæmNo ratings yet

- Practical Chapter 15 Part 1 WorksheetDocument2 pagesPractical Chapter 15 Part 1 WorksheetKabeloNo ratings yet

- Descriptive Statistics For The Variables in The Data: Panel A. Whether They Are in Treatment or Control GroupDocument8 pagesDescriptive Statistics For The Variables in The Data: Panel A. Whether They Are in Treatment or Control GroupVenna ShafiraNo ratings yet

- COVID 19 PresentationDocument16 pagesCOVID 19 PresentationAdane HailuNo ratings yet

- Written Report - 890Document6 pagesWritten Report - 890prab bainsNo ratings yet

- Operations Management Assignment For WriterDocument13 pagesOperations Management Assignment For WriterAijaz ShaikhNo ratings yet

- Regression Analysis and Its Interpretation With SpssDocument4 pagesRegression Analysis and Its Interpretation With SpssChakraKhadkaNo ratings yet

- KOM 6115 Assignment 4 (GS65807)Document7 pagesKOM 6115 Assignment 4 (GS65807)jia quan gohNo ratings yet

- MPH 520 FinalDocument12 pagesMPH 520 Finalapi-454844144No ratings yet

- Data Analitics For Business: Descriptive StatisticsDocument66 pagesData Analitics For Business: Descriptive StatisticsFarah Diba DesrialNo ratings yet

- Report Oleksandra CheipeshDocument9 pagesReport Oleksandra CheipeshSashka CheypeshNo ratings yet

- 4.2 Empirical Analysis: 4.2.1 Descriptive StatisticsDocument12 pages4.2 Empirical Analysis: 4.2.1 Descriptive StatisticsAtiya KhanNo ratings yet

- MethodologyDocument7 pagesMethodologyQuratulain BilalNo ratings yet

- Slovak University of Agriculture in Nitra Faculty of Economics and ManagementDocument8 pagesSlovak University of Agriculture in Nitra Faculty of Economics and ManagementdimaNo ratings yet

- Introduction To Meta Analysis SDGDocument72 pagesIntroduction To Meta Analysis SDGBes GaoNo ratings yet

- KOM 6115 Assignment 5 (GS65807)Document13 pagesKOM 6115 Assignment 5 (GS65807)jia quan gohNo ratings yet

- Azebe TsegayeDocument62 pagesAzebe Tsegayeberhanu seyoumNo ratings yet

- Abay Fana Dairy Farm & Milk Processing - FinalDocument42 pagesAbay Fana Dairy Farm & Milk Processing - Finalberhanu seyoumNo ratings yet

- Aries Agro Processing PLC FinalDocument21 pagesAries Agro Processing PLC Finalberhanu seyoumNo ratings yet

- Keko M.SC 2nd Draft Thesis-CommentDocument80 pagesKeko M.SC 2nd Draft Thesis-Commentberhanu seyoumNo ratings yet

- Keko M.SC 2nd Draft Thesis-CommentDocument80 pagesKeko M.SC 2nd Draft Thesis-Commentberhanu seyoumNo ratings yet

- Berhanu - Thesis For PresentationDocument69 pagesBerhanu - Thesis For Presentationberhanu seyoumNo ratings yet

- Berhanu Seyoum Final ThesisDocument88 pagesBerhanu Seyoum Final Thesisberhanu seyoumNo ratings yet

- Chapter 4Document102 pagesChapter 4berhanu seyoumNo ratings yet

- Berhanu Seyoum Final ThesisDocument88 pagesBerhanu Seyoum Final Thesisberhanu seyoumNo ratings yet

- Gebru Meshesha PDFDocument77 pagesGebru Meshesha PDFYemane100% (1)

- The Effects of Emotional Intelligence in Employees PerformanceDocument16 pagesThe Effects of Emotional Intelligence in Employees PerformanceMohan SubramanianNo ratings yet

- Adversity Quotient and Language Motivation As Predictors of Language Achievement Among Senior Teacher Education StudentsDocument10 pagesAdversity Quotient and Language Motivation As Predictors of Language Achievement Among Senior Teacher Education StudentsJuan MiguelNo ratings yet

- Econometrics Lecture 1: IntroductionDocument18 pagesEconometrics Lecture 1: IntroductionKnowledge HubNo ratings yet

- Economics MCQs BankDocument49 pagesEconomics MCQs BankMuhammad Nadeem SarwarNo ratings yet

- MDA Output InterpretationDocument32 pagesMDA Output InterpretationChitra BelwalNo ratings yet

- Group 14 - SPSS Applications (Revised)Document103 pagesGroup 14 - SPSS Applications (Revised)AnshulNo ratings yet

- Experimental Design: Some Basic Design ConceptsDocument23 pagesExperimental Design: Some Basic Design ConceptsRene PerezNo ratings yet

- Cc-Me cs2 PSMDocument40 pagesCc-Me cs2 PSMJuan ToapantaNo ratings yet

- NEJM 2023. Where Medical Statistics Meets Artificial IntelligenceDocument9 pagesNEJM 2023. Where Medical Statistics Meets Artificial IntelligenceunidadmedicamazamitlaNo ratings yet

- TheImpactofTimeManagementontheStudents1-PB 2 PDFDocument8 pagesTheImpactofTimeManagementontheStudents1-PB 2 PDFKrisha LalosaNo ratings yet

- Logistic Regression Model - A ReviewDocument5 pagesLogistic Regression Model - A ReviewInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- EE4 Ch04 Solutions ManualDocument12 pagesEE4 Ch04 Solutions ManualKavya SanjayNo ratings yet

- A Simulation Study of The Number of Events Per Variable inDocument7 pagesA Simulation Study of The Number of Events Per Variable inMario Guzmán GutiérrezNo ratings yet

- Pol Part in Europe PDFDocument22 pagesPol Part in Europe PDFSheena PagoNo ratings yet

- Data Mining and Machine Learning: Fundamental Concepts and AlgorithmsDocument57 pagesData Mining and Machine Learning: Fundamental Concepts and Algorithmss8nd11d UNINo ratings yet

- Determine The Magnitude of Statistical Variates at Some Future Point of TimeDocument1 pageDetermine The Magnitude of Statistical Variates at Some Future Point of TimeJulie Ann MalayNo ratings yet

- Dr. Gabao Research With Table of SpecDocument16 pagesDr. Gabao Research With Table of SpecChris PatlingraoNo ratings yet

- WINSEM2022-23 CSI3005 ETH VL2022230503218 ReferenceMaterialI WedMar0100 00 00IST2023 MultivariateDataVisualization PDFDocument56 pagesWINSEM2022-23 CSI3005 ETH VL2022230503218 ReferenceMaterialI WedMar0100 00 00IST2023 MultivariateDataVisualization PDFVenneti Sri sai vedanthNo ratings yet

- Sight-Reading: Andreas C. Lehmann and Reinhard KopiezDocument8 pagesSight-Reading: Andreas C. Lehmann and Reinhard KopiezSTELLA FOURLANo ratings yet

- Hussain 2012Document14 pagesHussain 2012MilicaNo ratings yet

- The Effect of Good Corporate Governance and Corporate Social Responsibility Disclosure On Financial Performance With The Company's Reputation As ModeratingDocument8 pagesThe Effect of Good Corporate Governance and Corporate Social Responsibility Disclosure On Financial Performance With The Company's Reputation As ModeratingInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Gender Analysis of Healthcare Expenditures in Rural NigeriaDocument13 pagesGender Analysis of Healthcare Expenditures in Rural NigeriaPremier PublishersNo ratings yet

- Chapter 1 SlidesDocument12 pagesChapter 1 SlidesOreratile KeorapetseNo ratings yet

- Econometrics IIDocument101 pagesEconometrics IIDENEKEW LESEMI100% (1)