
Department of Information Technology, Vardhaman College of Engineering, Hyderabad.

Web:(www.vardhaman.org)

Unit-4

Course contents:

• Forecasting

• Time-Series Decomposition

• ARIMA models

• Measures of Forecast Accuracy

• Case studies: predictive applications to various business and health care domains

Faculty Name: Dr. Saroja Kumar Rout

Department: Information Technology

Course Name: Predictive Analytics

Course Code: A6608

SAROJA/LECTURE NOTE/VMEG/PREDICTIVE ANALYTICS/UNIT-4


What Is Time Series Forecasting? Overview

Time series forecasting allows businesses to predict future outcomes by analyzing past data, giving them a view of the direction in which the data are trending. But time series forecasting is not without its challenges. To use it, one must have accurate data from the past and some assurance that this data will be representative of future events. Curious to know whether time series forecasting is applicable to your business? Below, we outline the key ideas so that you can decide whether or not time series forecasting is right for you and your business.

What is a time series?

A time series is usually modelled as a stochastic process Y(t), i.e. a sequence of random variables indexed by time. In a forecasting setting we find ourselves at time t and are interested in estimating Y(t+h), the value h steps ahead, using only the information available at time t.
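As a minimal sketch of this setting, assuming the observations up to time t are held in a plain Python list and that the seasonal period m is known, the two simplest baseline forecasts of Y(t+h) use only values already observed: the naive forecast repeats the last observation, and the seasonal naive forecast repeats the value from the same season in the last full period. The function names are hypothetical.

```python
def naive_forecast(history, h):
    """Forecast Y(t+1), ..., Y(t+h) as the last observed value Y(t)."""
    if not history:
        raise ValueError("history must contain at least one observation")
    return [history[-1]] * h


def seasonal_naive_forecast(history, h, m):
    """Forecast Y(t+k) as the value seen in the same season of the last full period."""
    if len(history) < m:
        raise ValueError("need at least one full seasonal period of history")
    last_period = history[-m:]
    # Step k (1-based) maps to position (k - 1) % m within the last observed period
    return [last_period[(k - 1) % m] for k in range(1, h + 1)]


# Usage with a toy quarterly series (m = 4)
sales = [10, 20, 30, 40, 11, 21, 31, 41]
print(naive_forecast(sales, 3))              # → [41, 41, 41]
print(seasonal_naive_forecast(sales, 5, 4))  # → [11, 21, 31, 41, 11]
```

Baselines like these are useful yardsticks: a more complex model is only worth deploying if it beats them on held-out data.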

[Figure: Time Series Forecasting: An Overview (Source: AiSmartz)]

What Is Time Series Forecasting?

Time series forecasting is a method of predicting future events by analyzing historical data.

Some examples of this include:

• Annual crop yields

• Monthly sales performances


• Cryptocurrency transactions

When Should You Use Time Series Forecasting?

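Time series forecasting can be used when you have quantitative data that has been measured over a period of time. For time series forecasting to work, several criteria must be met: the historical data must be accurate, recorded in time order, and reasonably representative of the future behavior you want to predict.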

Forecasting with time series models involves using historical data to predict future values in a time-ordered sequence. Time series forecasting is widely used in various fields, including finance, economics, sales, weather, and more. Here's a general overview of the process for building a time series forecasting model:

1. Data Collection: Gather historical time-ordered data. The data should include a time index and the corresponding observations.

2. Data Preprocessing: Clean and preprocess the data. This includes handling missing values and outliers, and transforming the data as needed. Common preprocessing steps include differencing to remove trends or seasonal patterns and scaling if necessary.

3. Exploratory Data Analysis (EDA): Perform EDA to understand the data's characteristics, including seasonality, trends, and autocorrelation. Visualizations and statistical tests can be helpful in this stage.

4. Train-Test Split: Split the data chronologically into a training set (the earlier observations) and a testing set (the most recent observations, used for evaluation). Do not shuffle the data before splitting: the model must be evaluated on observations that come after those it was trained on. The testing set should be held out and not used for model training.

5. Model Selection: Choose an appropriate time series forecasting model. Some common models include:


• ARIMA (AutoRegressive Integrated Moving Average): Suitable for univariate data with autocorrelation that is stationary or can be made stationary by differencing.

• Exponential Smoothing (ETS): Useful for data with seasonality and trends.

• Prophet: Designed for daily seasonal data with holidays and events.

• LSTM (Long Short-Term Memory): A type of recurrent neural network (RNN) for more complex sequential data.

• VAR (Vector Autoregression): Used when dealing with multiple time series variables.

6. Model Training: Train the selected model using the training data. This involves estimating model parameters and fitting the model to the historical data.

7. Model Evaluation: Use the testing set to evaluate the model's performance. Common evaluation metrics include Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and others.

8. Forecasting: Once the model is trained and evaluated, use it to make future forecasts. The forecast horizon depends on your specific application.

9. Model Refinement: Depending on the model's performance, you might need to fine-tune parameters, consider alternative models, or retrain the model with more recent data to improve accuracy.

10. Deployment: If the model meets your forecasting requirements, deploy it to generate real-time or future predictions.

11. Monitoring: Continuously monitor the model's performance and retrain it as needed, especially if the data distribution or patterns change.
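Steps 4 and 7 can be sketched in plain Python, assuming the series is a list in time order. The split keeps the last `test_size` observations as the test set without shuffling, and the metrics follow their standard definitions, with error e = actual minus forecast: MAE is the mean of |e|, MSE the mean of e², and RMSE the square root of MSE. The helper names are hypothetical, and the data are toy values; libraries such as scikit-learn provide equivalent metric functions.

```python
import math


def train_test_split_time(series, test_size):
    """Split a time-ordered series: earlier values for training, the last test_size for testing."""
    if not 0 < test_size < len(series):
        raise ValueError("test_size must be between 1 and len(series) - 1")
    return series[:-test_size], series[-test_size:]


def forecast_errors(actual, predicted):
    """Return MAE, MSE and RMSE for two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equal in length")
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e * e for e in errors) / len(errors)
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse)}


# Usage: hold out the last 3 points and score a naive "repeat the last value" forecast
series = [10, 12, 14, 13, 15, 17, 19, 18]
train, test = train_test_split_time(series, test_size=3)
naive = [train[-1]] * len(test)
print(forecast_errors(test, naive))
```

RMSE penalizes large errors more heavily than MAE, so comparing the two hints at whether a model makes occasional big misses.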

Remember that choosing the right model and preprocessing steps depends on the specific characteristics of your time series data. Seasonality, trends, and autocorrelation are critical factors to consider when selecting the appropriate model. Additionally, time series forecasting often involves an iterative process of model selection, training, and evaluation to achieve the best results.

2. Time-Series Decomposition

Time-series decomposition is a statistical technique used to break down time series data into its constituent components. These components typically include trend, seasonality, and residual (or error). The goal of time-series decomposition is to better understand the underlying patterns and structures within the data, aiding in analysis and forecasting.

1. Trend:


• The trend component represents the long-term movement or pattern in the data. It captures the overall direction: whether the series is increasing, decreasing, or staying relatively constant over time.

2. Seasonality:

• Seasonality refers to repeating patterns or variations in the data that occur at regular, fixed intervals. These intervals could be daily, weekly, monthly, or any other consistent time frame. Seasonality accounts for the periodic fluctuations observed in the time series.

3. Residual (Error):

• The residual component, also known as the error term, represents the random fluctuations or noise in the data that cannot be attributed to the trend or seasonality. It includes any irregularities or unexpected variations that are not explained by the identified trend and seasonality.

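Time-series decomposition methods can be additive or multiplicative, where T(t), S(t), and R(t) denote the trend, seasonal, and residual components:

• Additive: Y(t) = T(t) + S(t) + R(t), appropriate when the size of the seasonal fluctuations does not change with the level of the series.

• Multiplicative: Y(t) = T(t) × S(t) × R(t), appropriate when the seasonal fluctuations grow or shrink in proportion to the level of the series.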

Time-series decomposition is valuable for various applications, including identifying underlying patterns, removing seasonality for trend analysis, and improving forecasting accuracy. Common methods for time-series decomposition include the Classical Decomposition method (based on moving averages), exponential smoothing, and more advanced techniques such as Seasonal-Trend decomposition using LOESS (STL).

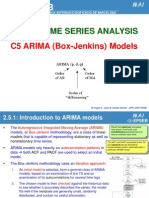
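To make the classical method concrete, here is a minimal NumPy sketch of classical additive decomposition, assuming a series with a known seasonal period m and at least two full periods of data. The trend is a centred moving average (a 2 × m moving average when m is even), the seasonal component is the average detrended value at each position in the cycle (adjusted to sum to zero), and the residual is what remains. The function names are hypothetical; statsmodels' `seasonal_decompose` and `STL` provide tested implementations.

```python
import numpy as np


def centered_moving_average(y, m):
    """Centred moving average of order m (a 2 x m MA when m is even); NaN at the ends."""
    y = np.asarray(y, dtype=float)
    if m % 2 == 1:
        weights = np.ones(m) / m
    else:
        # 2 x m-MA: weight 1/(2m) on both end points, 1/m on the m - 1 inner points
        weights = np.r_[0.5, np.ones(m - 1), 0.5] / m
    half = len(weights) // 2
    trend = np.full(len(y), np.nan)
    trend[half:len(y) - half] = np.convolve(y, weights, mode="valid")
    return trend


def classical_additive_decomposition(y, m):
    """Split y into trend, seasonal and residual parts with Y(t) = T(t) + S(t) + R(t)."""
    y = np.asarray(y, dtype=float)
    trend = centered_moving_average(y, m)
    detrended = y - trend
    # Average the detrended values for each position in the seasonal cycle
    season_means = np.array([np.nanmean(detrended[i::m]) for i in range(m)])
    season_means -= season_means.mean()  # make the seasonal effects sum to zero
    seasonal = np.resize(season_means, len(y))  # repeat the pattern over the whole series
    residual = y - trend - seasonal
    return trend, seasonal, residual


# Usage: a linear trend plus a repeating quarterly pattern is separated back into its parts
t = np.arange(16)
y = 10 + 0.5 * t + np.resize([3.0, -1.0, -2.0, 0.0], 16)
trend, seasonal, residual = classical_additive_decomposition(y, 4)
print(np.round(seasonal[:4], 3))
```

The trend and residual are undefined (NaN) for the first and last m/2 points, a known limitation of the classical method that STL avoids.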

3. ARIMA models

What is ARIMA?

ARIMA is an acronym that stands for AutoRegressive Integrated Moving Average. It is a class of statistical models for analyzing and forecasting time series data that captures a suite of standard temporal structures in the data.

In this tutorial, we will talk about how to develop an ARIMA model for time series forecasting in Python.


An ARIMA model is simple to use, yet powerful. It combines autoregression, differencing, and moving averages, and it is used to analyze and forecast univariate time series data. Differencing lets the model capture trends; seasonal patterns are handled by its seasonal extension, Seasonal ARIMA (SARIMA).

Here are the key components and concepts of ARIMA models:

1. AutoRegressive (AR) Component:

• The autoregressive component models the relationship between an observation and several lagged observations (previous time steps). The "p" in ARIMA(p, d, q) denotes the order of the autoregressive component, indicating the number of lagged observations used in the model.

2. Integrated (I) Component:

• The integrated component refers to differencing the time series data to make it stationary. Stationarity is crucial for ARIMA models because the AR and MA parts assume a series whose statistical properties (mean, variance, and autocorrelation) do not change over time. The "d" in ARIMA(p, d, q) represents the order of differencing, i.e. the number of times the series is differenced to achieve stationarity.

3. Moving Average (MA) Component:

• The moving average component models the current observation as a linear combination of past forecast errors (residuals), rather than of past values of the series. The "q" in ARIMA(p, d, q) signifies the order of the moving average component, representing the number of lagged forecast errors included in the model.

The general form of an ARIMA model is denoted as ARIMA(p, d, q), where:

• "p" is the order of the autoregressive component.

• "d" is the order of differencing.

• "q" is the order of the moving average component.
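Written out, one standard way to express a non-seasonal ARIMA(p, d, q) model is shown below, where Y'_t is the series after it has been differenced d times, c is a constant, φ_1, ..., φ_p are the autoregressive coefficients, θ_1, ..., θ_q are the moving average coefficients, and ε_t is the white-noise error at time t:

```latex
\Delta Y_t = Y_t - Y_{t-1}, \qquad Y'_t = \Delta^{d} Y_t

Y'_t = c + \phi_1 Y'_{t-1} + \cdots + \phi_p Y'_{t-p}
         + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q} + \varepsilon_t
```

The φ terms form the AR part (p lags of the differenced series), the θ terms form the MA part (q lagged errors), and d sets how many times the difference operator Δ is applied.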

ARIMA models are suitable for time series data that exhibit trends; for data that also show seasonality, the seasonal extension (SARIMA) is used. They are widely used in various fields for tasks such as financial forecasting, economic modeling, and demand forecasting.

The model parameters (p, d, q) are typically determined through analysis of the time series data: d by assessing stationarity and differencing until the series is stationary, and p and q from the autocorrelation and partial autocorrelation functions. While ARIMA models are effective for many time series, they may need adjustments or extensions for more complex patterns, such as seasonality, changing variance, or multiple interacting series.


Steps to Use ARIMA Model

We construct a linear regression model by incorporating the specified number and type of terms. Additionally, we prepare the data through differencing to achieve stationarity, effectively eliminating trend and seasonal structures that can adversely impact the regression model.
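The "linear regression" view can be illustrated with a minimal NumPy sketch, assuming q = 0 so that the model has no MA terms: difference the series d times, regress each differenced value on its p previous values with ordinary least squares, forecast recursively, then undo the differencing. This is a simplified stand-in for illustration, not how full ARIMA software estimates parameters (MA terms require iterative methods such as maximum likelihood); the function name `fit_ari_forecast` is hypothetical.

```python
import numpy as np


def fit_ari_forecast(y, p, d, steps):
    """Fit ARIMA(p, d, 0) by least squares on the d-times differenced series and forecast."""
    y = np.asarray(y, dtype=float)
    # Remember the last value at each differencing level to integrate forecasts back
    last_values = []
    z = y
    for _ in range(d):
        last_values.append(z[-1])
        z = np.diff(z)
    if len(z) <= p + 1:
        raise ValueError("series too short for the requested p and d")
    # Design matrix: intercept plus lags 1..p of the differenced series
    X = np.column_stack([np.ones(len(z) - p)] + [z[p - k:len(z) - k] for k in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, z[p:], rcond=None)
    # Recursive forecasts on the differenced scale
    history = list(z)
    diffs = []
    for _ in range(steps):
        lags = history[::-1][:p]  # most recent first: lag 1, lag 2, ..., lag p
        nxt = coef[0] + np.dot(coef[1:], lags)
        diffs.append(nxt)
        history.append(nxt)
    forecasts = np.array(diffs)
    # Undo the differencing, from the highest level back to the original scale
    for last in reversed(last_values):
        forecasts = last + np.cumsum(forecasts)
    return coef, forecasts
```

For example, `fit_ari_forecast(sales, p=2, d=1, steps=12)` would fit two AR lags to the first differences of a hypothetical `sales` array and return 12 forecasts on the original scale.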

1. Visualize the Time Series Data

Visualizing the time series data involves plotting the historical data points over time to observe patterns, trends, and seasonality.

2. Identify if the data is stationary

Identifying whether the data is stationary involves checking whether the time series exhibits a stable pattern over time or has trends or other irregularities. Stationary data is necessary for accurate predictions using ARIMA, and statistical tests such as the Augmented Dickey-Fuller (ADF) test can be used to check for stationarity.

3. Plot the Autocorrelation and Partial Autocorrelation Charts

To choose the AR and MA orders, you analyze the autocorrelation and partial autocorrelation of the time series. The autocorrelation function (ACF) chart shows the correlation of the series with its own lagged values, while the partial autocorrelation function (PACF) chart shows the correlation between the current observation and each lag after removing the effect of the shorter lags. These charts provide insights into patterns and dependencies within the data: a sharp cut-off in the PACF after lag p suggests an AR(p) term, and a sharp cut-off in the ACF after lag q suggests an MA(q) term.

4. Construct the ARIMA Model or Seasonal ARIMA based on the data

To construct an ARIMA (AutoRegressive Integrated Moving Average) model or a Seasonal ARIMA model, one analyzes the data to determine the appropriate model parameters, such as the order of the autoregressive (AR) and moving average (MA) components. This step involves selecting the optimal values for the model based on the characteristics and patterns observed in the data.
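The values behind the ACF and PACF charts can be computed with a minimal NumPy sketch, assuming a 1-D series. The sample ACF at lag k is the lag-k autocovariance divided by the variance; the PACF at lag k is estimated here as the last coefficient of an AR(k) regression fitted by least squares, which is one of several standard estimators. The function names are hypothetical; statsmodels' `plot_acf` and `plot_pacf` draw these charts directly. As a rough guide, values outside ±1.96/√n are treated as significant.

```python
import numpy as np


def acf(y, nlags):
    """Sample autocorrelation for lags 0..nlags (lag 0 is always 1)."""
    y = np.asarray(y, dtype=float)
    dev = y - y.mean()
    denom = np.dot(dev, dev)
    return np.array([np.dot(dev[k:], dev[:len(y) - k]) / denom for k in range(nlags + 1)])


def pacf_ols(y, nlags):
    """Partial autocorrelation for lags 1..nlags: the lag-k coefficient of an OLS AR(k) fit."""
    y = np.asarray(y, dtype=float)
    values = []
    for k in range(1, nlags + 1):
        # Columns: intercept, lag 1, ..., lag k
        X = np.column_stack([np.ones(len(y) - k)] + [y[k - j:len(y) - j] for j in range(1, k + 1)])
        coef, *_ = np.linalg.lstsq(X, y[k:], rcond=None)
        values.append(coef[-1])  # coefficient on lag k
    return np.array(values)


# Usage: a simulated AR(1) series should show a PACF spike at lag 1 only
rng = np.random.default_rng(0)
e = rng.normal(size=2000)
series = np.zeros(2000)
for i in range(1, 2000):
    series[i] = 0.7 * series[i - 1] + e[i]
bound = 1.96 / np.sqrt(len(series))
print("ACF :", np.round(acf(series, 3), 2))
print("PACF:", np.round(pacf_ols(series, 3), 2), "significance bound:", round(bound, 3))
```

For this AR(1) example the ACF decays gradually while the PACF cuts off after lag 1, which is the pattern that points to p = 1.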

Let’s Start

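```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
%matplotlib inline
# (%matplotlib inline is a Jupyter/IPython magic; omit it in a plain .py script)
```

Defining the Dataset

In this tutorial, I am using the dataset below (monthly sales figures stored in a CSV file).

```python
df = pd.read_csv('time_series_data.csv')
df.head()
```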


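```python
# Updating the header
df.columns = ["Month", "Sales"]
df.head()

df.describe()

# Parse Month as dates so the index is a proper time axis
df["Month"] = pd.to_datetime(df["Month"])
df.set_index("Month", inplace=True)

# Enlarge the default figure size, then plot the series
plt.rcParams["figure.figsize"] = (15, 7)
df.plot()
```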

The graph above shows periods when sales are high followed by periods when they are low, repeating over time. That means the data follow a seasonal pattern. For ARIMA, the first thing we do is identify whether the data is stationary or non-stationary. If the data is non-stationary, we first transform it to make it stationary (for example, by differencing) and then proceed further.
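A minimal pandas sketch of that transformation: a first difference (`diff(1)`) removes the trend, and a seasonal difference (`diff(12)`) removes a yearly pattern in monthly data. Because the CSV is not reproduced here, a synthetic monthly series stands in for `df["Sales"]` (an assumption). The Augmented Dickey-Fuller test from statsmodels is run only if that package is installed; a p-value below 0.05 is commonly taken as evidence that the series is stationary.

```python
import numpy as np
import pandas as pd

# Synthetic monthly "sales": upward trend + yearly seasonality + noise (stand-in for df["Sales"])
rng = np.random.default_rng(42)
months = pd.date_range("2015-01-01", periods=96, freq="MS")
t = np.arange(len(months))
sales = pd.Series(
    200 + 2.0 * t + 30 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 5, len(t)),
    index=months,
    name="Sales",
)

# First difference removes the linear trend; the lag-12 difference removes the yearly cycle
stationary = sales.diff(1).diff(12).dropna()
print(stationary.describe())

try:
    from statsmodels.tsa.stattools import adfuller

    for label, series in [("original", sales), ("differenced", stationary)]:
        stat, pvalue = adfuller(series)[:2]
        print(f"ADF {label}: statistic={stat:.2f}, p-value={pvalue:.4f}")
except ImportError:
    print("statsmodels not installed; skipping the ADF test")
```

Each differencing step costs observations at the start of the series (13 here), so differencing only as much as needed keeps more data for fitting.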


Case studies: predictive applications to various business and health care domains

Case studies - Business Domain:

Case Study: Retail Sales Forecasting

Objective: The primary goal of this case study was to implement predictive analytics to forecast retail sales accurately, allowing the company to optimize inventory management and improve overall revenue.

Approach:

1. Data Collection:

• Gathered historical sales data for various products across different stores.

• Collected information on external factors such as economic indicators, seasonality, and promotional events.

2. Feature Selection:

• Identified key features affecting sales, including historical sales performance, seasonal trends, and external factors that might influence consumer behavior.

3. Model Selection:

• Chose a predictive modeling technique, such as time series analysis or machine learning algorithms (e.g., decision trees, random forests), based on the complexity of the data and the desired accuracy.

4. Data Preprocessing:

• Cleaned and preprocessed the data, handling missing values and outliers.

• Created a structured dataset suitable for training the predictive model.

5. Training the Model:

• Split the dataset into training and testing sets.

• Trained the predictive model using historical data, allowing the algorithm to learn patterns and relationships between features and sales outcomes.

6. Validation:

• Validated the model using the testing set to ensure its accuracy and generalizability.
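The feature-selection and training steps can be sketched with NumPy, under clearly labeled assumptions: monthly sales in an array, a hypothetical 0/1 promotion flag standing in for "promotional events", lag-1 and lag-12 sales as the historical and seasonal features, and ordinary least squares as a stand-in for whichever technique (time series model, decision trees, random forests) the case study actually chose. The data are synthetic and `make_features` is a hypothetical helper.

```python
import numpy as np


def make_features(sales, promo, lags=(1, 12)):
    """Build a design matrix of intercept, lagged sales and a promotion flag."""
    sales = np.asarray(sales, dtype=float)
    promo = np.asarray(promo, dtype=float)
    start = max(lags)  # first row where every lag is available
    cols = [np.ones(len(sales) - start)]
    cols += [sales[start - lag:len(sales) - lag] for lag in lags]
    cols.append(promo[start:])
    return np.column_stack(cols), sales[start:]


# Synthetic data: 4 years of monthly sales with yearly seasonality and occasional promotions
rng = np.random.default_rng(7)
n = 48
promo = (rng.random(n) < 0.2).astype(float)
season = 20 * np.sin(2 * np.pi * np.arange(n) / 12)
sales = 100 + season + 15 * promo + rng.normal(0, 3, n)

X, y = make_features(sales, promo)
test_size = 6  # hold out the last 6 months, no shuffling
coef, *_ = np.linalg.lstsq(X[:-test_size], y[:-test_size], rcond=None)
pred = X[-test_size:] @ coef
mae = np.mean(np.abs(y[-test_size:] - pred))
print("coefficients (intercept, lag1, lag12, promo):", np.round(coef, 2))
print("hold-out MAE:", round(mae, 2))
```

Because the lag-12 feature needs a full year of history, the first 12 months are used only as inputs and never as training targets.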


Results:

1. Accurate Sales Forecasting:

• The predictive model successfully forecasted sales trends for various products in different stores.

• Accuracy was achieved through a combination of historical sales patterns and external factors.

2. Optimized Inventory Management:

• Armed with accurate sales forecasts, the company optimized inventory levels for each product and store.

• Reduced instances of overstocking and stockouts, leading to more efficient supply chain management.

3. Improved Revenue:

• By minimizing excess inventory costs and capitalizing on sales opportunities, the company experienced improved revenue.

• Strategic decision-making based on predictive analytics positively impacted the bottom line.

4. Responsive Marketing Strategies:

• The insights from the predictive model allowed for the development of targeted marketing strategies.

• Promotional efforts and discounts were strategically timed to align with predicted high-demand periods.

This case study demonstrates how predictive analytics in retail sales forecasting can lead to more informed decision-making, improved operational efficiency, and ultimately, enhanced business performance.

Case studies - Health care domains:

Case Study: Patient Readmission Prediction

Objective: The primary objective of this case study was to implement predictive analytics to identify patients at high risk of hospital readmission. The goal was to improve patient care, reduce healthcare costs, and enhance overall hospital operational efficiency.

Approach:

1. Data Collection:

• Gathered comprehensive patient data, including demographics, medical history, admission records, and post-discharge information.


• Identified relevant features such as chronic conditions, previous hospitalizations, and socio-economic factors.

2. Feature Engineering:

• Created relevant features for predicting readmission risk, considering factors such as the number of previous hospitalizations, length of stay, and the presence of specific chronic diseases.

3. Model Selection:

• Chose a suitable predictive modeling technique, such as logistic regression or machine learning algorithms like decision trees or ensemble methods, based on the complexity of the data.

4. Data Preprocessing:

• Handled missing data and outliers appropriately.

• Ensured that the dataset was well-structured for training the predictive model.

5. Training the Model:

• Split the dataset into training and testing sets.

• Trained the predictive model using historical patient data to learn patterns associated with readmission risk.

6. Validation:

• Validated the model's performance using a separate testing set.

• Assessed the model's accuracy, sensitivity, specificity, and other relevant metrics.
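The validation metrics named above come from the confusion matrix. A minimal pure-Python sketch, assuming binary labels (1 = readmitted) and hypothetical predicted probabilities from a model such as logistic regression, converted to labels with an assumed threshold of 0.5: sensitivity is the share of actual readmissions the model flags, and specificity is the share of non-readmissions it correctly leaves unflagged. The function names and data are illustrative only.

```python
def to_labels(probabilities, threshold=0.5):
    """Convert predicted readmission probabilities into 0/1 labels."""
    return [1 if p >= threshold else 0 for p in probabilities]


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity (recall) and specificity for binary labels (1 = readmitted)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Usage with toy labels and probabilities
actual = [1, 0, 1, 1, 0, 0, 0, 1]
probs = [0.9, 0.2, 0.4, 0.7, 0.45, 0.1, 0.3, 0.8]
print(classification_metrics(actual, to_labels(probs)))
# → {'accuracy': 0.875, 'sensitivity': 0.75, 'specificity': 1.0}
```

Lowering the threshold flags more patients, which typically raises sensitivity at the cost of specificity; for readmission screening, missing a high-risk patient is often the costlier error.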

Results:

1. Identification of High-Risk Patients:

• The predictive model successfully identified patients at a high risk of hospital readmission based on learned patterns from historical data.

2. Personalized Care Plans:

• Healthcare providers could intervene with personalized care plans for identified high-risk patients, addressing specific needs and potential risk factors.

3. Reduced Readmission Rates:

• The implementation of personalized interventions led to a reduction in hospital readmission rates for the identified high-risk group.

4. Resource Optimization:


• Hospitals could allocate resources more efficiently by focusing on patients identified as high risk, ensuring that the necessary support and interventions were provided.

5. Cost Savings:

• By reducing unnecessary readmissions, the hospital achieved cost savings associated with both direct medical expenses and indirect costs related to patient care.

This case study illustrates the powerful impact of predictive analytics in healthcare by enabling proactive and personalized care strategies, ultimately leading to improved patient outcomes and more efficient use of healthcare resources.
