
Unsupervised Learning Algorithm 1

Uploaded by vamsi krishna


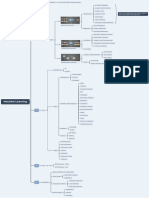

Unsupervised Learning Algorithms

1. Clustering

Clustering is the task of grouping a set of points/observations (without any target variable) so that points in the same group are more similar to each other than to those in other groups. Clustering methods can be distance-based, density-based, or distribution-based.

K-Means (distance-based clustering)
- Main idea: Start with K random centroids, assign each point to its nearest centroid to form K clusters, then recompute each centroid as the mean of its cluster and repeat until the centroids stop moving.
- Hyperparameters: K, the number of clusters.
- Online: Yes.
- Advantages: The simplest clustering algorithm.
- Disadvantages: Random initialization problem; fails for clusters of varying size or density and for non-globular shapes; need to define K.

Hierarchical (distance-based clustering)
- Main idea: Starting with each point as its own cluster, repeatedly merge the most similar clusters until only one cluster remains. The ideal K is obtained using a dendrogram.
- Hyperparameters: Linkage function.
- Online: No.
- Advantages: No need to decide K before clustering.
- Disadvantages: Very sensitive to the choice of linkage function; it is an offline model.

DBSCAN: Density-Based Spatial Clustering of Applications with Noise (density-based clustering)
- Main idea: Classify every point as a core point, a border point, or a noise point based on how densely it is surrounded by other points.
- Hyperparameters: The distance metric used to calculate distances between points, together with the neighborhood radius (eps) and the minimum number of points needed to form a dense region.
- Online: No.
- Advantages: Good at finding arbitrarily shaped clusters and at handling noise; no need to decide the number of clusters K.
- Disadvantages: Can't handle high-dimensional data well; struggles with clusters of varying density.

Gaussian Mixture Models, GMM (distribution-based clustering)
- Main idea: Assuming the data follows a mixture of Gaussian distributions, identify the mean and variance that best represent the shape of each cluster.
- Hyperparameters: The number of clusters (Gaussian components); the μ (mean) and σ² (variance) of each component are then estimated from the data.
- Online: Yes.
- Advantages: Provides more intuitive, probabilistic (soft) cluster memberships for making decisions; handles elliptical clusters much better.
- Disadvantages: Assumes a normal distribution of the features; clusters are assumed to be elliptical; need to specify the number of clusters.
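The K-Means loop described above (random initial centroids, nearest-centroid assignment, centroid update) can be sketched in NumPy as Lloyd's algorithm. This is a minimal illustration assuming Euclidean distance and a fixed random seed; the function name and the toy data are hypothetical. Libraries such as scikit-learn add smarter initialization (k-means++) and several restarts to soften the random-initialization problem listed among the disadvantages.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid updates."""
    rng = np.random.default_rng(seed)
    # Random initialization: k distinct data points become the starting centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign every point to its nearest centroid (squared Euclidean distance).
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points; keep it in place if its cluster is empty.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # centroids stopped moving
        centroids = new_centroids
    return labels, centroids

# Hypothetical data: two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
              [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]])
labels, centroids = kmeans(X, k=2)
print(labels)   # the first three points share one label, the last three the other
```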

2. Anomaly Detection

An anomaly is synonymous with an outlier: something that is not part of normal behavior. A novelty is something unique, or something you haven't seen before (novel).

Elliptic Envelope
- Main idea: Fit an ellipse around the data and mark as outliers the points that are very far away from the centroid of the ellipse.
- Drawbacks: Does not work on non-unimodal data. It is specifically for multivariate Gaussians: the data is assumed to follow a unimodal, multivariate Gaussian distribution.

Isolation Forest
- Main idea: Randomly make splits in the data and build trees from them until each leaf node contains only a single point. On average, outliers have lower depth and inliers have greater depth in these random trees.
- Drawbacks: Biased towards axis-parallel splits.

Local Outlier Factor (LOF)
- Main idea: Compare the density of a point with the density of its neighbors. If the density of a point is lower than the density of its neighbors, flag that point as an outlier.
- Drawbacks: Need to find the optimal number of neighbors k; need to tune the threshold; bad performance on high-dimensional data; high time complexity.
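The LOF idea (compare a point's density with its neighbors' density) can be sketched in NumPy. This is a minimal, unoptimized version assuming Euclidean distance and no duplicate points; the function name, the neighborhood size `k`, and the toy data are hypothetical. Scores close to 1 mean a point is about as dense as its neighbors, and scores well above 1 mark likely outliers; choosing the cut-off is the threshold-tuning step listed as a drawback.

```python
import numpy as np

def local_outlier_factor(X, k=3):
    """Minimal LOF sketch: scores near 1 look like inliers, scores well above 1 like outliers."""
    n = len(X)
    # Pairwise Euclidean distances; the diagonal is set to inf so a point is not its own neighbor.
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    np.fill_diagonal(D, np.inf)
    # k nearest neighbors of every point, and each point's k-distance (distance to its k-th neighbor).
    neighbors = np.argsort(D, axis=1)[:, :k]
    k_dist = D[np.arange(n), neighbors[:, -1]]
    # Reachability distance of p from neighbor o: max(k-distance(o), d(p, o)).
    reach = np.maximum(k_dist[neighbors], D[np.arange(n)[:, None], neighbors])
    # Local reachability density: inverse of the mean reachability distance to the neighbors.
    lrd = 1.0 / reach.mean(axis=1)
    # LOF: mean density of the neighbors divided by the point's own density.
    return lrd[neighbors].mean(axis=1) / lrd

# Hypothetical data: a tight square of five points plus one far-away point.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.5, 0.5], [8.0, 8.0]])
scores = local_outlier_factor(X, k=3)
print(np.round(scores, 2))   # the last point gets by far the largest score
```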

3. High Dimension Visualization

Dimensionality reduction techniques help convert high-dimensional data to fewer dimensions while preserving the information in our feature columns.

a. Principal Component Analysis (PCA)

The main idea of PCA is to find the best unit vector u: the direction of maximum variance (or maximum information), along which we should rotate our existing coordinates. The eigenvector of the covariance matrix associated with the largest eigenvalue indicates the direction in which the data has the most variance.
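Finding u as the top eigenvector of the covariance matrix can be sketched with NumPy's symmetric eigensolver. This is an illustrative version with a hypothetical function name and toy data; library PCA implementations commonly use an SVD of the centered data instead, which yields the same directions.

```python
import numpy as np

def pca(X, n_components=1):
    """PCA via eigendecomposition of the covariance matrix."""
    Xc = X - X.mean(axis=0)                   # center every feature column
    cov = np.cov(Xc, rowvar=False)            # d x d covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigh returns ascending eigenvalues for symmetric matrices
    order = np.argsort(eigvals)[::-1]         # largest variance first
    U = eigvecs[:, order[:n_components]]      # columns are the principal directions u
    return Xc @ U, eigvals[order], U

# Hypothetical 2-D data lying close to the line y = x.
X = np.array([[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]])
Z, variances, U = pca(X, n_components=1)
print(U[:, 0])   # close to (0.707, 0.707), possibly with both signs flipped
```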

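b. t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE tries to create an embedding that preserves neighborhoods: it uses probabilistic similarities so that points that are neighbors in the higher-dimensional space stay neighbors when they are projected into a lower-dimensional space. We compute $p_{ij}$ for the d-dimensional data and $q_{ij}$ for the d′-dimensional embedding, where $d > d'$. Since every $x_i$ and $x_j$ has a corresponding $y_i$ and $y_j$ in the d′-dimensional space, $q_{ij}$ is defined analogously to $p_{ij}$: with the same Gaussian formulation in the original SNE, and with a Student t-distribution in t-SNE.

For a useful d′ transformation we need $p_{ij} \approx q_{ij}$, so we use the KL divergence, which measures the dissimilarity between two distributions, as the loss function:

$$KL(P \,\|\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}$$

t-distributions work better than Gaussian distributions with SNE because a t-distribution with one degree of freedom has a longer tail than a Gaussian: the Gaussian density falls off exponentially with distance, while the t-distribution falls off only inversely (polynomially). This gives moderately distant points more room in the low-dimensional map.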
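The low-dimensional affinities and the KL loss can be written in a few lines of NumPy. This sketch assumes the high-dimensional matrix P has already been computed (in t-SNE it comes from Gaussian kernels with per-point bandwidths chosen by a perplexity search, not shown); the function names are hypothetical. The last lines compare how fast the two kernels decay at the same distance.

```python
import numpy as np

def tsne_q(Y):
    """Low-dimensional affinities q_ij with a Student-t kernel (one degree of freedom)."""
    sq_dist = ((Y[:, None, :] - Y[None, :, :]) ** 2).sum(axis=2)
    num = 1.0 / (1.0 + sq_dist)      # heavy-tailed kernel (1 + ||y_i - y_j||^2)^-1
    np.fill_diagonal(num, 0.0)       # q_ii is defined to be 0
    return num / num.sum()           # normalize over all pairs i != j

def kl_divergence(P, Q, eps=1e-12):
    """KL(P || Q) over the off-diagonal pairs: the loss that t-SNE minimizes."""
    mask = ~np.eye(len(P), dtype=bool)
    p, q = P[mask], Q[mask]
    return float(np.sum(p * np.log((p + eps) / (q + eps))))

# Tail comparison at distance 5: Gaussian kernel exp(-d^2) versus t kernel 1 / (1 + d^2).
d = 5.0
gauss_tail, t_tail = np.exp(-d ** 2), 1.0 / (1.0 + d ** 2)
print(gauss_tail, t_tail)   # about 1.4e-11 versus about 0.038
```

In a full t-SNE run, the embedding Y would be updated by gradient descent on `kl_divergence(P, tsne_q(Y))`.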

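4. Recommender System

Recommender systems are designed to recommend things to the user based on many factors. These systems predict the products that users are most likely to purchase or be interested in.

4.1 Market-Basket Analysis

Market basket analysis is used to analyze the combinations of products that have been bought together.

Association Rules

The IF component of an association rule A ⇒ B is known as the antecedent (A). The THEN component is known as the consequent (B). With supp(X) denoting the fraction of transactions that contain itemset X:

Support: $\text{supp}(A \Rightarrow B) = \dfrac{\text{number of transactions containing both } A \text{ and } B}{\text{total number of transactions}}$

Confidence: $\text{conf}(A \Rightarrow B) = \dfrac{\text{supp}(A \Rightarrow B)}{\text{supp}(A)}$

Lift: $\text{lift}(A \Rightarrow B) = \dfrac{\text{conf}(A \Rightarrow B)}{\text{supp}(B)}$

Pros:
- It is the simplest approach and is easy to understand and implement.
- It can be used to find large (frequent) itemsets.

Cons:
- It is computationally expensive: complexity grows exponentially with the number of items.
- Cold start problem.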
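Support, confidence, and lift can be computed directly by counting transactions. A minimal sketch in plain Python, assuming each transaction is a set of item names; the function name and the basket data are hypothetical.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence and lift of the rule antecedent => consequent."""
    n = len(transactions)
    a, b = set(antecedent), set(consequent)
    count_a = sum(1 for t in transactions if a <= set(t))         # contain A
    count_b = sum(1 for t in transactions if b <= set(t))         # contain B
    count_ab = sum(1 for t in transactions if (a | b) <= set(t))  # contain A and B
    support = count_ab / n
    confidence = count_ab / count_a
    lift = confidence / (count_b / n)
    return support, confidence, lift

baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
print(rule_metrics(baskets, {"bread"}, {"milk"}))   # support 0.5, confidence ~0.667, lift ~0.889
```

A lift below 1, as here, means that buying bread makes milk slightly less likely than its baseline rate in these baskets; a lift above 1 would indicate a positive association.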

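4.2 Content-Based Recommender System

Recommends items whose content (e.g. metadata, description, topics) is similar to the items the user has liked in the past.

Pros:
- No cold start problem for new items, since recommendations depend on item content rather than on other users' interactions.
- No need for usage data from other users.
- No popularity bias; it can recommend items with rare features.
- Can capture the user's content preferences (features) to provide recommendations.

Cons:
- It keeps recommending items related to the same categories and rarely recommends anything from other categories.
- Requires a lot of domain knowledge.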
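A minimal content-based recommender can build a user profile from the features of the liked items and rank the remaining items by cosine similarity to that profile. The averaging profile, the cosine measure, the function name, and the genre-style feature columns are illustrative assumptions, not a prescribed method.

```python
import numpy as np

def recommend_by_content(item_features, liked, top_n=2):
    """Rank items the user has not liked yet by cosine similarity to the user's profile."""
    # User profile: the average feature vector of the liked items.
    profile = item_features[liked].mean(axis=0)
    # Cosine similarity between every item and the profile.
    norms = np.linalg.norm(item_features, axis=1) * np.linalg.norm(profile)
    scores = item_features @ profile / norms
    scores[liked] = -np.inf   # never re-recommend something already liked
    return [int(i) for i in np.argsort(scores)[::-1][:top_n]]

# Hypothetical item features: columns are (action, comedy, romance) weights.
items = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.8, 0.0, 0.2],
    [0.0, 0.2, 0.8],
])
print(recommend_by_content(items, liked=[0]))   # [1, 3]: the other action-heavy items
```

The result also illustrates the first drawback: a user who liked one action item is only shown more action items.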

4.3 Collaborative Filtering System

This system looks for patterns in user activity to produce user-specific recommendations.

- User-based: a form of collaborative filtering in which the similarity between users is calculated.
- Item-based: a form of collaborative filtering in which the similarity between items is calculated.
- Model-based: a model of the relationship between users and items is learned from the data. The data contains a set of users, a set of items, and ratings/reactions in the form of a user-item interaction matrix.

Matrix Factorization: Matrix factorization generates latent features for two different kinds of entities (users and items) such that multiplying the two resulting matrices approximates the rating matrix. Collaborative filtering is the application of matrix factorization to identify the relationship between item and user entities.

Figure: rating matrix (users U1-U4 × items i1-i4, partially observed) ≈ user matrix × item matrix, with two latent features per user and per item:
- User matrix: U1 = (1.2, 0.8), U2 = (1.4, 0.9), U3 = (1.5, 1.0), U4 = (1.2, 0.8)
- Item matrix: i1 = (1.5, 1.7), i2 = (1.2, 0.6), i3 = (1.0, 1.1), i4 = (0.8, 0.4)

Figure: predicted ratings (latent features: k = 4). A rating matrix r with missing entries (-) is factorized as p × q, and the product gives the completed rating matrix r′.

Pros:
- Minimal domain knowledge required.
- The system doesn't need contextual features.
- Serendipity: it can recommend items the user would not have found from their own history alone.

Cons:
- Cold start problem.
- Computationally expensive.
- It is difficult to recommend items to users with unique tastes.
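The cheat sheet does not say how the factor matrices are learned; stochastic gradient descent on the observed ratings only is one common choice and is assumed in this sketch. The learning rate `lr`, regularization `reg`, `epochs`, the function name, and the toy rating matrix are all hypothetical, with `np.nan` marking a missing rating.

```python
import numpy as np

def factorize(R, k=2, lr=0.01, reg=0.02, epochs=2000, seed=0):
    """Learn P (users x k) and Q (k x items) so that P @ Q matches the observed entries of R.
    Missing ratings are marked with np.nan and ignored during training."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    P = rng.normal(scale=0.1, size=(n_users, k))
    Q = rng.normal(scale=0.1, size=(k, n_items))
    observed = [(u, i) for u in range(n_users) for i in range(n_items) if not np.isnan(R[u, i])]
    for _ in range(epochs):
        for u, i in observed:
            err = R[u, i] - P[u] @ Q[:, i]
            p_old = P[u].copy()
            # SGD step on the squared error with L2 regularization on both factor vectors.
            P[u] += lr * (err * Q[:, i] - reg * P[u])
            Q[:, i] += lr * (err * p_old - reg * Q[:, i])
    return P, Q

nan = np.nan
R = np.array([[5, 3, nan, 1],
              [4, nan, nan, 1],
              [1, 1, nan, 5],
              [1, nan, nan, 4],
              [nan, 1, 5, 4]])
P, Q = factorize(R)
print(np.round(P @ Q, 1))   # every cell, including the previously missing ones, now has a prediction
```

Here k is the number of latent features; each cell of `P @ Q` that was missing in `R` is a predicted rating.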

5. Time Series Analysis

Time series forecasting is a method of making predictions based on historical, time-stamped data.

- Trend: a long-term increasing or decreasing behavior of the series (not necessarily linear) that does not repeat.
- Seasonality: a pattern in time series data that occurs at a regular interval.
- Moving Average: taking the average of the last k data points in the series and using it to forecast the next point is called the Moving Average method.

5.1 Time Series Decomposition

A series can be decomposed into trend ($T_t$), seasonal ($S_t$), and remainder ($R_t$) components:
- Additive: $y_t = T_t + S_t + R_t$
- Multiplicative: $y_t = T_t \times S_t \times R_t$

In multiplicative seasonality, the amplitude of the seasonal component increases as the trend increases; in additive seasonality it stays roughly constant.

5.2 Simple Methods for Forecasting

- Naive: the forecasts are equal to the last observed value.
- Mean/Median: the forecasts are equal to the mean/median of the observed data.
- Seasonal Naive: the forecasts are equal to the value observed in the same season during the last occurrence of that season.
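The simple forecasting methods above fit in a few lines of plain Python. This is an illustrative sketch: the function names, the season length `m`, the window `k`, and the toy series are hypothetical, and each function returns forecasts for horizon `h` (the moving average returns one step ahead).

```python
from statistics import mean, median

def naive_forecast(y, h):
    """Naive method: every forecast equals the last observed value."""
    return [y[-1]] * h

def mean_forecast(y, h, use_median=False):
    """Mean/median method: every forecast equals the mean (or median) of the history."""
    level = median(y) if use_median else mean(y)
    return [level] * h

def seasonal_naive_forecast(y, h, m):
    """Seasonal naive: reuse the value from the same season of the last full cycle of length m."""
    return [y[len(y) - m + (i % m)] for i in range(h)]

def moving_average_forecast(y, k):
    """Moving average: one-step-ahead forecast equal to the mean of the last k points."""
    return sum(y[-k:]) / k

# Hypothetical series with a season length of m = 3.
y = [10, 20, 30, 12, 22, 32, 14, 24, 34]
print(naive_forecast(y, 2))                  # [34, 34]
print(seasonal_naive_forecast(y, 4, m=3))    # [14, 24, 34, 14]
print(moving_average_forecast(y, k=3))       # 24.0
```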

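5.3 Effective Forecasting Methods (Exponential Smoothing)

Simple Exponential Smoothing (SES): The key idea is not only to keep some memory of the entire time series but also to give more weight to recent data and less weight to past values.

Double Exponential Smoothing: Incorporates the trend of the time series into the SES formulation to forecast future values.

Triple Exponential Smoothing: An extension of Double Exponential Smoothing that explicitly adds support for seasonality to univariate time series.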
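The first two methods can be sketched with the usual recursions: SES updates a level $\ell_t = \alpha y_t + (1-\alpha)\ell_{t-1}$, and the double (Holt) version also updates a trend $b_t = \beta(\ell_t - \ell_{t-1}) + (1-\beta)b_{t-1}$ and forecasts $\ell_t + h\,b_t$. The initialization (first value as the level, first difference as the trend), the smoothing constants, and the function names are assumptions for illustration; triple exponential smoothing adds a seasonal equation in the same style and is not shown.

```python
def simple_exp_smoothing(y, alpha):
    """SES: level_t = alpha * y_t + (1 - alpha) * level_{t-1}; the forecast is the last level."""
    level = y[0]
    for value in y[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

def double_exp_smoothing(y, alpha, beta, h):
    """Holt's linear method: smooth a level and a trend, then forecast level + step * trend."""
    level, trend = y[0], y[1] - y[0]
    for value in y[1:]:
        prev_level = level
        level = alpha * value + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + step * trend for step in range(1, h + 1)]

print(simple_exp_smoothing([3.0, 5.0, 4.0], alpha=0.5))                    # 4.0
print(double_exp_smoothing([1.0, 2.0, 3.0, 4.0, 5.0], 0.5, 0.5, h=3))      # [6.0, 7.0, 8.0]
```

With a larger `alpha` the forecasts react faster to recent observations; with a smaller one they stay closer to the long-run level.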

5.4 Stationarity

A stationary time series is one whose properties do not depend on the time at which the series is observed. Therefore, time series with trend or seasonality are non-stationary.

Differencing can be used to remove non-stationarity:
- In first differences, the values become the differences between consecutive original values: $y'_t = y_t - y_{t-1}$.
- Similarly, in second differences we take the differences between consecutive values of the first differences: $y''_t = y'_t - y'_{t-1}$.
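NumPy's `np.diff` computes exactly these differences, and a cumulative sum undoes first differencing (the "integration" step in ARIMA). The toy series is hypothetical.

```python
import numpy as np

y = np.array([3.0, 5.0, 9.0, 15.0, 23.0])   # hypothetical series with a curved (quadratic) trend
first = np.diff(y)          # first differences  y'_t  = y_t - y_{t-1}
second = np.diff(y, n=2)    # second differences y''_t = y'_t - y'_{t-1}
print(first)    # [2. 4. 6. 8.]
print(second)   # [2. 2. 2.]

# Undo first differencing: start from the first value and add the differences back up.
restored = np.concatenate(([y[0]], y[0] + np.cumsum(first)))
print(restored)  # recovers the original series
```

Here one round of differencing still leaves a trend, while second differencing turns the quadratic trend into a constant series.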

5.5 ARIMA Forecasting Methods

AR (Autoregressive Model)

In AR models, the variable of interest is forecast using a linear combination of past values of the variable:

$$y_t = c + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \dots + \phi_p y_{t-p} + \varepsilon_t$$

The above equation is an AR model of order p, i.e. AR(p).

MA (Moving Average)

In MA models, we use past forecast errors for forecasting:

$$y_t = c + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \dots + \theta_q \varepsilon_{t-q}$$

ARMA (AutoRegressive Moving Average)

ARMA is used to describe a stationary time series in terms of AR and MA components. In ARMA(p, q), p is the order of the AR part and q is the order of the MA part. It includes lagged values as well as lagged errors:

$$y_t = c + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t$$
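Because an AR(p) model is linear in its coefficients, one simple way to estimate c and φ1..φp is ordinary least squares on lagged values. This sketch assumes that approach (statistical packages typically use maximum likelihood, which also handles MA terms that least squares alone cannot); the function names and the noise-free toy series are hypothetical.

```python
import numpy as np

def fit_ar(y, p):
    """Least-squares estimate of [c, phi_1, ..., phi_p] for an AR(p) model."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Design matrix: one row per target y_t, holding [1, y_{t-1}, ..., y_{t-p}].
    X = np.column_stack([np.ones(n - p)] + [y[p - i:n - i] for i in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef

def forecast_ar(y, coef, h):
    """Recursive h-step forecast: each prediction becomes a lag for the next one."""
    history = [float(v) for v in y]
    p = len(coef) - 1
    for _ in range(h):
        lags = history[-1:-p - 1:-1]          # y_{t-1}, ..., y_{t-p}
        history.append(float(coef[0] + np.dot(coef[1:], lags)))
    return history[len(y):]

# Hypothetical noise-free AR(1) series: y_t = 1 + 0.5 * y_{t-1}
y = [0.0]
for _ in range(19):
    y.append(1.0 + 0.5 * y[-1])
coef = fit_ar(y, p=1)
print(coef)                      # close to [1.0, 0.5]
print(forecast_ar(y, coef, h=3))
```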

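ARIMA (AutoRegressive Integrated Moving Average)

ARIMA is a combination of AR and MA along with integration, which is the opposite of differencing. It differs from ARMA in that ARMA requires the time series to be stationary, whereas ARIMA makes it stationary by differencing. Here the predictors include both the lagged values of y and the lagged errors:

$$y'_t = c + \phi_1 y'_{t-1} + \dots + \phi_p y'_{t-p} + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t$$

Here $y'_t$ is the differenced series. ARIMA(p, d, q) means that:
- p is the order of the AR part,
- d is the degree of first differencing involved,
- q is the order of the MA part.

SARIMA (Seasonal AutoRegressive Integrated Moving Average)

A SARIMA model can model seasonal data. It is formed by adding seasonal terms to the ARIMA model and can be represented as

$$\text{ARIMA}(p, d, q)(P, D, Q)_m$$

where m is the seasonal period, uppercase notation is for the seasonal terms, and lowercase notation is for the non-seasonal terms. The seasonal part involves terms similar to the non-seasonal ones, but with backshifts of the seasonal period.

ARIMAX (ARIMA + Exogenous Variable)

Exogenous variables are variables whose cause is external to the model and whose role is to explain other variables or outcomes in the model. In ARIMAX, an exogenous variable x is used along with the lagged errors and lagged values:

$$y'_t = c + \beta x_t + \phi_1 y'_{t-1} + \dots + \phi_p y'_{t-p} + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t$$

There is also SARIMAX (SARIMA + Exogenous Variable).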
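Differencing, AR estimation, and integration can be combined into a minimal ARIMA(p, 1, 0) forecaster, i.e. with no MA terms. This is a simplified sketch assuming d = 1 and least-squares AR estimation on the differenced series; the function name and data are hypothetical, and it is not how full ARIMA implementations fit models with q > 0.

```python
import numpy as np

def arima_p10_forecast(y, p, h):
    """ARIMA(p, 1, 0) sketch: difference once, fit AR(p) by least squares, forecast, integrate."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)                                        # y'_t = y_t - y_{t-1}
    n = len(dy)
    # Rows hold [1, y'_{t-1}, ..., y'_{t-p}] for each target y'_t.
    X = np.column_stack([np.ones(n - p)] + [dy[p - i:n - i] for i in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, dy[p:], rcond=None)      # [c, phi_1, ..., phi_p]
    history = list(dy)
    for _ in range(h):
        lags = history[-1:-p - 1:-1]                       # y'_{t-1}, ..., y'_{t-p}
        history.append(coef[0] + np.dot(coef[1:], lags))
    # Integration (the inverse of differencing): add the forecast differences to the last level.
    return y[-1] + np.cumsum(history[n:])

y = 3.0 + 2.0 * np.arange(10)     # hypothetical series with a linear trend
print(arima_p10_forecast(y, p=1, h=3))   # continues the trend: roughly 23, 25, 27
```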

You might also like

- Deployment: Cheat Sheet: Machine Learning With KNIME Analytics Platform (2 pages, Izan Izwan)
- Week 2 Minerals (23 pages, Shuaib Ismail)
- Deployment: Cheat Sheet: Machine Learning With KNIME Analytics Platform (1 page, Gaby González)
- Automatic Direction Finder: The Adf System (5 pages, ajaydce05)
- Discount Factor Template (5 pages, Rashan Jida Reshan)
- DN NDM Factor For Bearings Aricle 3 (3 pages, Anand Sinha)
- Linear Programming SIMPLEX METHOD (18 pages, Nicole Vinarao)
- Empuje Axial en Bombas Verticales (6 pages, Kaler Soto Peralta)
- Machine Learning Section3 Ebook v05 (15 pages, camgova)
- Bench Marking (12 pages, amgad monir)
- Pam Clustering Technique (10 pages, samaksh)
- M6 (23 pages, foodiegurl2508)

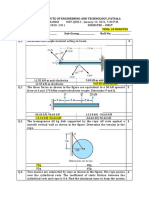

- Amity School of Engineering and Technology Amity University, Uttar Pradesh (5 pages, buddy)
- ML Algorithms (4 pages, amit)
- Implicit Quantile Networks For Distributional Reinforcement Learning, Will Dabney Et Al., 2018, v1 (14 pages, Jean Savournon)
- Clustering HTML PDF (2 pages, Carlos Abraham)
- I. Automatic Screening System - A Review (6 pages, Mukesh Lavan)
- Ambo University: Inistitute of Technology (15 pages, abay)
- Iterative Figure-Ground Discrimination (4 pages, mehdi13622473)
- 2.3. Clustering - Scikit-Learn 1 (24 pages, sarang)
- Parsons PDF (16 pages, rather Aarif)
- 4 Clustering (21 pages, paulitxenko08)
- Recursive Hierarchical Clustering Algorithm (7 pages, reader29)
- Clustering High Dimensional Data (15 pages, Inzemam Ul Haq)
- Course - Data Science Foundations - Data Mining (3 pages, Imtiaz N)
- SML Hand Note Bau by DT (1 page, farida1971yasmin)
- 2020-Auto-Tuning Spectral Clustering For Speaker Diarization Using Normalized Maximum Eigengap (5 pages, Mohammed Nabil)
- A Study On Weather Forecast Using Data Streams (11 pages, Daring Chowdaries)
- Sathyabama Institute of Science and Technology SIT1301-Data Mining and Warehousing (22 pages, viktahjm)
- Cluster Analysis: Basic Concepts and Algorithms (141 pages, Mayank Wadhwani)
- Machine Learning: B.Tech (CSBS) V Semester (17 pages, LIKHIT JHA)
- Personality Prediction System (6 pages, Mohammad saheem)
- UCS551 Chapter 7 - Clustering (9 pages, Farah Yahaya)
- The General Considerations and Implementation In: K-Means Clustering Technique: Mathematica (10 pages, Mario Zamora)
- BAAI (4 pages, Shreenidhi M R)
- MACHINE LEARNING Tips (10 pages, Somnath Kadam)
- 4 - Unsupervised Classification (21 pages, HERiTAGE1981)
- Application of Cluster Analysis 1 (4 pages, Naga Venkata Sai Suraj Maheswaram)
- SR - No Paper Name Authors Publication Year Dataset-Algorithm Advantages Disadvantages Result Future-Works (4 pages, aniltatti25)
- Signal Processing: Jeremias Sulam, Yaniv Romano, Ronen Talmon (9 pages, Dante de Beauvoir)
- An Improved K-Means Clustering Algorithm (3 pages, Kiran Somayaji)
- Machine Learning 1707965934 (15 pages, robson110770)
- Clustering Techniques (23 pages, Cheeraayu Chowhan)
- Research Method Rm-Chapter 4 (56 pages, GAMEX TUBE)
- Random Sum-Product Forests With Residual Links (9 pages, Gaston GB)
- Ist 407 Presentation (12 pages, api-529383903)
- WS1UNR (13 pages, Abiy Mulugeta)
- Popular Decision Tree Algorithms Are Provably Nois (17 pages, Praveen Kumar)
- Deep Embedding Network For Clustering (6 pages, donaldo garcia)
- A Toolkit For Remote Sensing Enviroinformatics Clustering (1 page, kalokos)
- Clustering Exercise 4-15-15 (2 pages, nomore891)
- Ijsrcsamsv 7 I 3 P 174 (5 pages, Dr Ganapati Das)
- Electric Power Scam Prediction Using Machine Learning Techniques (8 pages, Shanmuganathan V (RC2113003011029))
- Probabilistic Time Series Forecasting With Implicit Quantile Networks (7 pages, Agossou Alex Agbahide)
- Minka Ep Uai (8 pages, JW J)
- Multivariate Statistical Methods A Primer Fourth E... - (Chapter 9 Cluster Analysis) (18 pages, scribed123scribed)
- Pax-Dbscan: A Proposed Algorithm For Improved Clustering: Grace L. Samson Joan Lu (36 pages, Indra Sar)
- ASCAI - Adaptive Sampling For Acquiring Compact AI (8 pages, notsure.g6rp1)
- 2020 - Supervised Community Detection With Line Graph Neural Networks - Chen Et Al (24 pages, Blue Poison)
- 4 Clustering (9 pages, Bibek Neupane)
- Tài Liệu Việt Nam -Anh Bảo (4 pages, Đặng Văn Nhật)
- DM Lecture 06 (32 pages, Sameer Ahmad)
- Machine Learning (1 page, Fareed)
- AI and Expert System (23 pages, Charles Tomy)
- Comparison of Different Clustering Algorithms Using WEKA Tool (3 pages, IJARTES)
- MST Quiz 2 Answers (4 pages, Willey)
- Specifications For Design of Hot Metal Ladles AISE Standard No. (17 pages, Sebastián Díaz Constanzo)
- Datasheet PDF (9 pages, Valentina López)
- Rekshma Seminar Report (37 pages, rahulraj r)
- Crash Recovery: Transaction (11 pages, hhtvnpt)
- Diabetes Prediction System (25 pages, Yeswanth C)
- Use of Permapol P3.1polymers and Epoxy Resins in The Formulation of Aerospace Sealants (6 pages, 이형주)
- Capacitor (35 pages, jolieprincesseishimwe)
- Buckling of Stiffened Steel Plates - A Parametric Study (26 pages, bribru)
- Chapter 1 Two-Phase Flow and Boiling Heat Transfer (44 pages, jackleesj)
- Versiv CCTT Version 1.0 N (199 pages, andcamiloq3199)
- WDM (7 pages, Nenad Vicentijevic)
- Chapter 8 Walls and Buried Structures: WSDOT Bridge Design Manual M 23-50.20 Page 8-I September 2020 (56 pages, Vietanh Phung)
- Java Full Stack Developer Alekya Email: Contact:: Professional Summary (6 pages, kiran2710)
- Chapter3 PDF (9 pages, Adithyan Gowtham)
- FCC Series 11 High Pressure Globe Control Valves (15 pages, BASKAR)
- 770 - Rail Clamp Manual (GA-9-180KN-TR68 Ex II 2D T3) (30 pages, j3ferson)
- Manual - Manitou 1740 P (5 pages, Michel Gonçalves)
- Digiphone+: Surge Wave Receiver For Acoustic and Electromagnetic Fault Pinpointing (2 pages, patricio aguirre)
- 200WNA1 (5 pages, James Arlantt)
- Empowerment Tech Lessons (34 pages, Alyanna De Leon)
- EBARA Reference Data (6 pages, Marcelo Peretti)
- Assessment of Learning-1 (24 pages, Jessie Desabille)
- Chapter No-5 Steam Condensers and Cooling Towers Marks-16 (22 pages, Vera Widya)