Research Proposal

Title: Application of Convolutional Vision Transformers for the Classification of Infectious Diseases in Chest Radiological Images

Submitted by

Name: Md Hasibur Rahman

Course Code: CSE 326

Course Title: Research and Innovation

Id: 211-15-14616

Sec: 58_A

Submission date: 28/11/2023

Submitted to

Dr. Arif Mahmud, Associate Professor, Department of CSE, DIU.

Daffodil International University| 1

Table of Contents

Introduction: .................................................................................................... 3

Problem Statement ........................................................................................... 4

Literature Review: ........................................................................................... 5

Aims and Objectives: ....................................................................................... 7

Research Questions to be addressed in this project. ........................................ 8

Significance of the Research: .......................................................................... 9

Proposed Methodology .................................................................................. 10

About the Dataset: ......................................................................................... 12

References: .................................................................................................... 14

Table of Figures

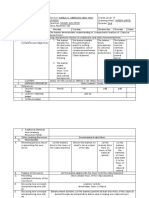

FIGURE 1 DIAGRAM OF THE PROPOSED METHODOLOGY. .......................................... 12

Index of Tables

TABLE 1 DISTRIBUTION OF THE CLASSES OF THE DATASET AND THEIR LABELS. ......... 13

Daffodil International University| 2

Introduction:

Respiratory diseases, such as COVID-19, tuberculosis, and pneumonia, pose significant global health threats and are responsible for substantial morbidity and mortality worldwide (WHO, 2020). Rapid and accurate diagnosis of these diseases is critical for effective patient management and improved health outcomes. In this regard, chest radiological images serve as a primary non-invasive tool for early detection and monitoring of these conditions (Kelly et al., 2020). However, conventional manual analysis of chest radiological images is often labor-intensive and subject to variability, highlighting the need for more accurate and efficient methods (Rajpurkar et al., 2017).

In recent years, deep learning models, particularly Convolutional Neural Networks (CNNs), have demonstrated promising results in enhancing the accuracy of disease detection and classification from chest X-rays (Rajpurkar et al., 2017; Wang et al., 2017). The advent of transformer models, originally developed for natural language processing tasks, offers potential applications in medical imaging: in image recognition, transformers have demonstrated performance competitive with CNNs on certain tasks (Dosovitskiy et al., 2020).

This project proposes to leverage transformer models, specifically a custom Convolutional Vision Transformer, for the classification of chest radiological images into five classes: COVID-19, lung opacity, normal, viral pneumonia, and tuberculosis. The goal is to fine-tune and compare various deep learning architectures on a multi-class chest X-ray dataset and to provide an in-depth analysis of the models' interpretability and prediction capabilities.

Problem Statement

The problem this research intends to address is the development and evaluation of a Transformer-based model for the classification of chest radiological images into five classes: COVID-19, Lung Opacity, Normal, Viral Pneumonia, and Tuberculosis. The challenge is to fine-tune and compare the performance of various deep learning architectures, including VGG19, ResNet50, Xception, a custom CNN model, and a custom Convolutional Vision Transformer, in order to identify the model that offers the highest prediction accuracy. Furthermore, visualization techniques will be used to understand each model's decision-making process and to provide insights into how these models process and interpret chest X-ray images. This understanding could significantly impact the adoption of such models in clinical practice, augmenting the efficiency and accuracy of disease detection.

Literature Review:

The significant role of radiography, particularly chest X-rays, in the early detection and diagnosis of respiratory diseases is well-established (Kelly et al., 2020). Yet traditional manual analysis of these images can be labor-intensive, subject to variability, and too slow for efficient disease control (Rajpurkar et al., 2017), a limitation that is especially acute in pandemic scenarios such as COVID-19.

Over the past few years, machine learning, and particularly deep learning models, have shown remarkable potential to overcome these challenges, enhancing the speed, accuracy, and efficiency of disease detection from chest X-rays (Rajpurkar et al., 2017; Wang et al., 2017). Convolutional Neural Networks (CNNs), with their strength in automatically learning hierarchical representations from raw data, have emerged as a leading tool in this domain, providing superior performance in diverse medical imaging tasks (LeCun et al., 2015). Various architectures like VGG19, ResNet50, and Xception have been extensively employed, showcasing strong results in chest X-ray analysis (Simonyan & Zisserman, 2014; He et al., 2016; Chollet, 2017).

Nevertheless, despite these successes, the interpretability of CNNs remains a considerable challenge. They are often regarded as "black-box" models whose decision-making processes are hard to decipher (Selvaraju et al., 2020). This lack of transparency has raised concerns about the practical applicability of these models in clinical practice, underscoring the need for models that offer not only high prediction accuracy but also explainable outcomes.

Recently, transformer models, originally designed for natural language processing tasks, have offered promising solutions in this regard. The Vision Transformer (ViT), an adaptation of the transformer model for computer vision tasks, has shown competitive performance with CNNs (Dosovitskiy et al., 2020). Convolutional Vision Transformers, which add convolutional components to this design, aim to bring together the benefits of both approaches: the data efficiency and performance of CNNs, and the flexible global reasoning capability of transformers. Furthermore, the self-attention mechanism inherent in transformers could potentially offer greater interpretability, providing insights into the model's decision-making process (Vaswani et al., 2017).

However, the application of Convolutional Vision Transformers in medical imaging, particularly in chest X-ray analysis, is still a relatively unexplored domain, warranting further investigation.

In summary, this research builds on a body of work exploring deep learning for chest X-ray analysis, and seeks to extend it by investigating the potential and efficacy of Convolutional Vision Transformers in this context, addressing the dual need for high performance and interpretability.

Aims and Objectives:

Aim:

The overarching aim of this project is to investigate the efficacy of Convolutional Vision Transformers for the classification of infectious diseases in chest radiological images, with a specific focus on enhancing interpretability while maintaining high performance.

Objectives:

1. Dataset Exploitation and Preparation: To effectively utilize a comprehensive, open-source chest X-ray dataset, ensuring appropriate preprocessing and labelling for an accurate and efficient machine learning model development process.

2. Model Development and Enhancement: To develop, fine-tune and enhance various deep learning models including VGG19, ResNet50, Xception, a custom CNN model, and a custom Convolutional Vision Transformer. The objective includes exploring ways to increase their performance and efficiency in classifying chest radiological images into five distinct classes: COVID-19, Lung Opacity, Normal, Viral Pneumonia, and Tuberculosis.

3. Comparative Performance Analysis: To conduct a comparative analysis of the performance of the developed models. This would involve evaluating each model's predictive accuracy and other relevant metrics, such as precision, recall, F-score, and ROC-AUC, to determine the most effective model for the classification task.

4. Enhancing Model Interpretability: To introspect the selected model using visualization techniques such as convolution visualization or attention map visualization, to enhance the model's interpretability. This involves understanding and explaining the decision-making process of the model, including what parts of the input image it focuses on and how it assigns weights to make a prediction.

5. Contribution to Academic and Clinical Practice: To provide a significant contribution to the existing body of knowledge in the field of medical imaging and deep learning, by offering insights into the application of Convolutional Vision Transformers in chest X-ray classification. Furthermore, this research aims to enhance the potential adoption of such models in clinical practice by presenting a model that not only performs with high accuracy, but also offers explainability and transparency in its decision-making process.

In achieving these objectives, this research will provide a comprehensive investigation into the potential application of Convolutional Vision Transformers in medical imaging, with a focus on interpretability and high performance.

Research Questions to be addressed in this project.

1. How does the performance of Convolutional Vision Transformers compare with that of Convolutional Neural Networks, such as VGG19, ResNet50, Xception, and a custom CNN, in the classification of chest radiological images of infectious respiratory diseases?

2. What insights can be derived from convolution visualization or attention map visualization about the decision-making process of the Convolutional Vision Transformer model in predicting respiratory diseases from chest radiological images?

Significance of the Research:

This research stands at the intersection of deep learning and medical imaging, and seeks to explore the potential of Convolutional Vision Transformers in the classification of chest radiological images for infectious respiratory diseases. The significance of this research can be appreciated from multiple perspectives.

Firstly, bringing transformer-based vision models, whose underlying architecture was originally developed for natural language processing tasks, into the realm of medical imaging is itself an innovative step. It will contribute to the expanding body of knowledge on the application of transformer models in the field of computer vision (Dosovitskiy et al., 2020).

Secondly, it directly addresses a persistent challenge in the field of medical imaging: the lack of interpretability in deep learning models. By utilizing the self-attention mechanism inherent in transformers, this research could pave the way for more interpretable models, thereby enhancing their acceptance and applicability in clinical practice (Vaswani et al., 2017).

Thirdly, the fine-tuning and comparative analysis of various deep learning architectures will provide valuable insights into the performance and suitability of these models in classifying chest radiological images for various respiratory diseases. This has significant implications for healthcare, potentially improving the speed, accuracy, and efficiency of disease detection and management, which is particularly important in light of the COVID-19 pandemic (Kelly et al., 2020).

Finally, the visualization and analysis of the model's decision-making process will contribute to a deeper understanding of how these models process and interpret medical images. This knowledge can assist in the refinement of these models, as well as the development of best practices for their application in the medical field (Selvaraju et al., 2020).

In summary, this research has the potential to contribute significantly to the fields of medical imaging, deep learning, and healthcare, advancing our understanding and capabilities in disease detection and management, and bringing us closer to the goal of personalized and effective patient care.

Proposed Methodology

The methodology for this project entails a systematic process comprising data preparation, model development, and comparative evaluation, followed by introspection and analysis of the model's decision-making process.

1. Data Preparation:

The research will utilize an open-source dataset of chest radiological images available online. This dataset comprises images categorized into five classes: COVID-19, Lung Opacity, Normal, Viral Pneumonia, and Tuberculosis.

The initial step involves a thorough quality check and cleaning of the dataset. Only frontal view images will be used, as these provide the most consistent views and are most commonly used in medical diagnosis. All images will be checked for labelling accuracy. The images will then be preprocessed to ensure they are of a consistent size and format for model training.

Data augmentation techniques such as rotation, flipping, and scaling are candidates for increasing the robustness of the model. The exact pre-processing and data augmentation techniques have not yet been finalized; they will be selected after further research and discussion with the supervisor.
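As a minimal sketch of two of the steps named above (bringing images to a consistent size and flipping them), the snippet below works on an image stored as a nested list of pixel values. It is illustrative only: the proposal has not fixed its pre-processing pipeline, the function names are hypothetical, and a real pipeline would more likely rely on an image-processing library.

```python
def resize_nearest(image, new_height, new_width):
    """Resize a 2D image (list of rows) with nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[r * height // new_height][c * width // new_width]
         for c in range(new_width)]
        for r in range(new_height)
    ]


def horizontal_flip(image):
    """Mirror every row of a 2D image, returning a new image."""
    return [row[::-1] for row in image]


# Toy 2x2 "image" standing in for a chest X-ray (assumed data).
toy_image = [[1, 2],
             [3, 4]]

resized = resize_nearest(toy_image, 4, 4)   # every pixel becomes a 2x2 block
flipped = horizontal_flip(toy_image)        # left and right columns swapped
```

Whether flipping is appropriate for chest X-rays, where left and right carry anatomical meaning, is one of the questions the planned discussion with the supervisor would need to settle.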

2. Model Development and Training:

A variety of deep learning models will be developed for comparison. These include well-established architectures like VGG19, ResNet50, and Xception. Each model will be fine-tuned using the prepared dataset, with adjustments made to hyperparameters to optimize performance.

A custom Convolutional Neural Network (CNN) architecture will be developed, leveraging depthwise separable convolution layers, dilated convolution layers, residual blocks, and batch normalization.
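To illustrate why depthwise separable convolutions are attractive for the custom CNN, the following sketch compares the number of weights in a standard convolution with its depthwise separable counterpart. The layer sizes (3x3 kernel, 64 input channels, 128 output channels) are assumed purely for illustration, and bias terms are ignored.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard convolution: one k x k x c_in filter per output channel."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (depthwise + 1x1 pointwise)."""
    depthwise = kernel * kernel * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out             # 1x1 convolution that mixes channels
    return depthwise + pointwise


# Assumed example layer: 3x3 kernel, 64 -> 128 channels.
standard = standard_conv_params(3, 64, 128)     # 73728 weights
separable = separable_conv_params(3, 64, 128)   # 8768 weights
reduction = standard / separable                # roughly 8.4x fewer weights
```

The reduction factor depends on the kernel size and channel counts, so the figure above should not be read as a property of the final architecture.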

Finally, a custom Convolutional Vision Transformer model will be developed and trained. This model will leverage the capabilities of transformers, including their self-attention mechanism, to classify chest radiological images.
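The self-attention mechanism referred to here can be sketched as scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, following Vaswani et al. (2017). The example below is a simplified single-head version in plain Python: the learned query, key, and value projections are omitted (the same toy token vectors are used for all three), which is an assumption made to keep the sketch short and is not the proposed model.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(row, values))
                        for i in range(len(values[0]))])
    return outputs, weights


# Three toy "patch tokens" of dimension 2 (assumed data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one and records how strongly a token attends to every other token; these are the quantities that attention map visualization later inspects.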

The models will be trained on a designated training set, with a validation set used to tune hyperparameters and detect overfitting. Gradients of the loss function will be computed with the backpropagation algorithm, and optimization algorithms such as Adam or stochastic gradient descent will use them to update the model weights and minimize the loss.
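One way to obtain the training, validation, and test sets is a stratified split, which keeps the class proportions similar across the three subsets. The sketch below is a minimal version under stated assumptions: the 10% validation and 10% test fractions, the fixed seed, and the function name are illustrative choices, not values taken from the proposal.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_frac=0.1, test_frac=0.1, seed=42):
    """Split sample indices into train/val/test, class by class."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    split = {"train": [], "val": [], "test": []}
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = int(len(indices) * val_frac)
        n_test = int(len(indices) * test_frac)
        split["val"].extend(indices[:n_val])
        split["test"].extend(indices[n_val:n_val + n_test])
        split["train"].extend(indices[n_val + n_test:])
    return split


# Toy label list with three imbalanced classes (assumed data).
labels = [0] * 50 + [1] * 30 + [2] * 20
split = stratified_split(labels)
```

Because the split is done per class, every class is represented in the validation and test sets even when the dataset is imbalanced.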

3. Model Evaluation and Comparison:

Once the models are trained, they will be evaluated using a separate test set. The primary performance metric will be accuracy, but precision, recall, F-score, and ROC-AUC will also be used for a comprehensive evaluation.
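The metrics listed above can be computed directly from true and predicted labels. The following sketch shows accuracy together with per-class precision, recall, and F1, and their macro average; ROC-AUC is left out because it needs predicted probabilities rather than hard labels. The label lists are toy data and the function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true, y_pred, n_classes):
    """Precision, recall, and F1 for each class, treated one-vs-rest."""
    metrics = {}
    for c in range(n_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[c] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def macro_average(metrics, key):
    """Unweighted mean of one metric over all classes."""
    return sum(m[key] for m in metrics.values()) / len(metrics)


# Toy labels for three classes (assumed data).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

overall_accuracy = accuracy(y_true, y_pred)
metrics = per_class_metrics(y_true, y_pred, n_classes=3)
macro_f1 = macro_average(metrics, "f1")
```

Macro averaging weights every class equally, which matters for an imbalanced dataset where accuracy alone can hide poor performance on the smaller classes.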

A comparative analysis will be conducted to determine the most effective model. This analysis will consider not only the performance metrics but also factors such as computational efficiency and training time.

4. Model Introspection and Analysis:

The most effective model will then undergo further introspection using visualization techniques such as convolution visualization or attention map visualization. This process aims to understand the decision-making process of the model, providing insights into what the model focuses on in the input image, and how it assigns weights when making a prediction. This investigation will provide valuable insights into the interpretability of the model, a key aspect of the research objectives.
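As a rough sketch of attention map visualization, the snippet below turns a vector of attention weights over image patches into a normalised 2D grid that could be overlaid on the input image as a heat map. It assumes that the model exposes one attention weight per patch (for example from a classification token) and that the patches form a square grid; both are assumptions about a model that has not yet been designed, and the function name is hypothetical.

```python
def attention_to_grid(patch_attention, grid_size):
    """Reshape per-patch attention weights into a grid scaled to [0, 1]."""
    if len(patch_attention) != grid_size * grid_size:
        raise ValueError("expected grid_size * grid_size attention weights")
    low, high = min(patch_attention), max(patch_attention)
    span = high - low
    # Min-max scaling so the strongest patch maps to 1 and the weakest to 0.
    scaled = [(a - low) / span if span else 0.0 for a in patch_attention]
    return [scaled[r * grid_size:(r + 1) * grid_size]
            for r in range(grid_size)]


# Toy attention weights for a 2x2 patch grid (assumed data).
heatmap = attention_to_grid([0.1, 0.4, 0.2, 0.3], grid_size=2)
```

In practice the grid would be upsampled to the image resolution before being overlaid, and weights from several heads or layers would need to be combined first.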

In conclusion, this research employs a comprehensive and rigorous methodology, combining data preparation, model development, and evaluation with an introspective analysis, to investigate the efficacy and interpretability of Convolutional Vision Transformers in chest X-ray classification.

Figure 1 Diagram of the Proposed Methodology.

About the Dataset:

The dataset for this research project is a comprehensive collection of chest radiological images, specifically X-rays, gathered from various online resources (Basu et al., 2021). It is the result of a collaborative effort by researchers from Qatar University, Doha, and Dhaka University, along with their associates from Pakistan and Malaysia (Basu et al., 2021). The team worked in close collaboration with medical professionals to ensure the accuracy and relevance of the data (Basu et al., 2021).

The primary dataset, which consists of four classes (COVID-19, Lung Opacity, Normal, and Viral Pneumonia), was initially sourced from the COVID-19 Radiography Database on Kaggle (Basu et al., 2021). To enhance the diversity and robustness of the dataset, additional images of Pneumonia and COVID-19 were incorporated from various other online resources (Basu et al., 2021).

In a significant enhancement to the original dataset, the research team of Basu et al. (2021) introduced a fifth class, Tuberculosis. This addition was made to broaden the scope of the research and increase the dataset's relevance in the context of infectious respiratory diseases (Basu et al., 2021).

To maintain consistency and ensure the highest quality of data, only frontal view X-ray images were included in the dataset (Basu et al., 2021). Any lateral view images that were part of the original collections were excluded (Basu et al., 2021).

The final dataset, therefore, comprises five classes of chest X-ray images, each representing a different condition. The distribution of these classes is as follows:

Table 1 Distribution of the classes of the dataset and their labels.

| Disease Name | Number of Samples | Label |
| --- | --- | --- |
| COVID-19 | 4,189 | 0 |
| Lung Opacity | 6,012 | 1 |
| Normal | 10,192 | 2 |
| Viral Pneumonia | 7,397 | 3 |
| Tuberculosis | 4,897 | 4 |
| Total | 32,687 | |

In total, the dataset includes 32,687 samples, making it a substantial resource for training and evaluating the deep learning models that will be developed as part of this research project.
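The class counts in Table 1 are uneven, with the Normal class more than twice the size of the COVID-19 class. The short calculation below derives the share of each class and, as one possible way to account for the imbalance during training, inverse-frequency class weights. The weighting scheme is an assumption made for illustration; the proposal does not state how, or whether, class imbalance will be handled.

```python
# Sample counts per class, copied from Table 1.
class_counts = {
    "COVID-19": 4189,
    "Lung Opacity": 6012,
    "Normal": 10192,
    "Viral Pneumonia": 7397,
    "Tuberculosis": 4897,
}

total = sum(class_counts.values())  # 32687 samples in total

# Share of the dataset taken up by each class.
proportions = {name: count / total for name, count in class_counts.items()}

# Inverse-frequency weights (assumed scheme): total / (n_classes * count),
# so classes with fewer samples receive a weight above 1.
class_weights = {
    name: total / (len(class_counts) * count)
    for name, count in class_counts.items()
}

for name in class_counts:
    print(f"{name}: {proportions[name]:.1%} of samples, "
          f"weight {class_weights[name]:.2f}")
```

Under this scheme the Normal class receives a weight below 1 and the COVID-19 class a weight above 1, so errors on the smaller classes would count for more in a weighted loss.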

This dataset, with its diverse classes and large number of samples, provides a solid foundation for our research into the efficacy and interpretability of Convolutional Vision Transformers in chest X-ray classification.

Project Plan:

| Timeline | Activity |
| --- | --- |
| November 20 - November 30, 2023 | Preliminary Research and Literature Review |
| December 1 - December 15, 2023 | Dataset Collection and Exploration |
| December 16 - December 30, 2023 | Dataset Preparation |
| January 1 - February 18, 2024 | Model Development: Implementing VGG19, ResNet50, Xception, and custom CNN |
| March 1 - March 15, 2024 | Model Development: Implementing and training custom Convolutional Vision Transformer |
| March 16 - March 25, 2024 | Model Enhancement: Fine-tuning and hyperparameter optimization |
| April 26 - May 10, 2024 | Comparative Performance Analysis of models |
| May 11 - May 25, 2024 | Enhancing Model Interpretability |
| May 26 - June 15, 2024 | Drafting and Proofreading of the report |
| July 6 - August 15, 2024 | Preparation for Defense |
| August 16 - August 25, 2024 | Final Review, Project Submission, and Defense |

References:

• WHO (2020). The top 10 causes of death. https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death

• Kelly, B. J., Farness, P., Soto, M. T., Morgan, M., & Ghassemi, M. (2020). Machine Learning in Medical Imaging. Journal of Nuclear Medicine Technology, 48(3), 209-219.

• Rajpurkar, P., Irvin, J., Ball, R. L., Zhu, K., Yang, B., Mehta, H., ... & Langlotz, C. P. (2018). Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Medicine, 15(11), e1002686.

• Wang, X., Peng, Y., Lu, L., Lu, Z., Bagheri, M., & Summers, R. M. (2017). ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2097-2106.

• Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., ... & Houlsby, N. (2020). An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929.

• Chollet, F. (2017). Xception: Deep Learning with Depthwise Separable Convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1251-1258.

• He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770-778.

• Kelly, B., Squizzato, S., Parascandolo, P., Kalkreuter, N., Kashani, R., & Rajpurkar, P. (2020). An overview of deep learning in medical imaging focusing on MRI. Zeitschrift für Medizinische Physik, 30(2), 102-116.

• LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

• Rajpurkar, P., Irvin, J., Zhu, K., Yang, B., Mehta, H., Duan, T., ... & Lungren, M. P. (2017). CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv preprint arXiv:1711.05225.

• Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., & Batra, D. (2020). Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. International Journal of Computer Vision, 128(2), 336-359.

• Simonyan, K., & Zisserman, A. (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv preprint arXiv:1409.1556.

• Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention is All You Need. Advances in Neural Information Processing Systems, 5998-6008.

• Basu, A., Das, S., Ghosh, S., Mullick, S., Gupta, A., & Das, S. (2021). Chest X-Ray Dataset for Respiratory Disease Classification (Version 5). Harvard Dataverse. https://doi.org/10.7910/DVN/WNQ3GI


- Machine LearningDocument1 pageMachine LearningSuman GhoshNo ratings yet
