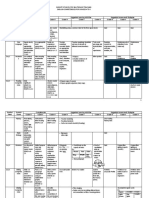

ICASSP 2022, #8917

Autoregressive variational autoencoder with a hidden semi-Markov model-based structured attention for speech synthesis

Takato Fujimoto, Kei Hashimoto, Yoshihiko Nankaku, and Keiichi Tokuda (Nagoya Institute of Technology, Japan)

1. Overview

Autoregressive (AR) acoustic model with attention mechanism
• Predict acoustic features recursively from linguistic features
• Estimate alignment between acoustic and linguistic features
• Achieve remarkable performance
• e.g., Tacotron 2 [Shen et al.; ’18] and Transformer TTS [Li et al.; ’19]

Problems due to lack of robustness
• Training instability caused by excessive degrees of freedom in alignment
• Quality degradation caused by exposure bias
• Discrepancy between training and inference

Hidden semi-Markov model (HSMM)-based structured attention [Nankaku et al.; ’21]
• Latent alignment based on VAE framework
• Acoustic modeling with explicit duration models
• Appropriate handling of duration in statistical manner
• Constraints to yield monotonic alignment

AR VAE
• AR generation of latent variables instead of observations
• Consistent AR structure between training and inference
• Reduce exposure bias

Integration of HSMM-based structured attention and AR VAE
• Speech synthesis integrating robust HMMs and flexible DNNs
• VAE-based acoustic model containing autoregressive HSMM (ARHSMM)
• Improve speech quality and robustness of model training

[Figures: model block diagrams. Recoverable labels: Text Encoder, Speech Encoder, Speech Decoder, Duration Predictor, FUNet, ARHSMM, linguistic features $\boldsymbol{Y}$, acoustic features $\boldsymbol{X}$, $\boldsymbol{V}$, $\boldsymbol{S}$, $\boldsymbol{Z}$, $P(\boldsymbol{V} \mid \boldsymbol{Y})$]

3. Proposed model (ARHSMM-VAE)

Inference
1. Encode linguistic features
2. Upsample latent linguistic features based on state durations
3. Predict latent acoustic features in AR manner
4. Decode latent acoustic features into acoustic features

Upsampling example from the inference figure:
• State sequence: $\boldsymbol{S} = (1, 1, 2, 2, 2, 3, \ldots)$
• State durations: $d_1 = 2, d_2 = 3, \ldots$
• $\boldsymbol{v}_1, \boldsymbol{v}_2, \boldsymbol{v}_3, \ldots$ is upsampled to $\boldsymbol{v}_1, \boldsymbol{v}_1, \boldsymbol{v}_2, \boldsymbol{v}_2, \boldsymbol{v}_2, \boldsymbol{v}_3, \ldots$

[Figure: inference. Recoverable labels: Text Encoder, Duration Predictor, FUNet, Speech Decoder, $\boldsymbol{Y}$, $\boldsymbol{V}$, $\boldsymbol{S}$, $\boldsymbol{z}_{t-1}$, $\boldsymbol{Z}$, $\boldsymbol{X}$, HSMM trellis (state vs. frame)]

Training
• Maximize ELBO over approximate posterior distributions

Approximate posterior distributions $Q(\boldsymbol{S})$ and $Q(\boldsymbol{Z})$
• Estimate state given entire sequence using forward-backward algorithm
• Approximate posterior distribution relative to frame $t$
• Simultaneously infer $Q(\boldsymbol{S})$ and $Q(\boldsymbol{Z})$ for strong dependence

Generalized forward-backward algorithm
• Forward algorithm with FUNet and backward algorithm with BUNet
• Calculating output probabilities using FUNet

FUNet
• Non-linear prediction from previous time step
• $P(\boldsymbol{z}_t \mid \boldsymbol{z}_{t-1}, \boldsymbol{v}_k) = \mathcal{N}(\boldsymbol{z}_t; \mathrm{FUNet}(\boldsymbol{z}_{t-1}, \boldsymbol{v}_k))$
• Inverse of $P(\boldsymbol{z}_t \mid \boldsymbol{z}_{t-1}, \boldsymbol{v}_k)$ is intractable
• Approximated by inverse modules BUNet and DNet

[Figure: training. Recoverable labels: Speech Encoder, Text Encoder, Duration Predictor, FUNet, inverse of FUNet, DNet, past samples $\boldsymbol{z}_{<t}$, $Q(\boldsymbol{S})$, $Q(\boldsymbol{Z})$, $Q(\boldsymbol{V})$, $P(\boldsymbol{Z} \mid \boldsymbol{S}, \boldsymbol{V})$, $P(\boldsymbol{S} \mid \boldsymbol{V})$, $P(\boldsymbol{V} \mid \boldsymbol{Y})$, $\alpha$, $\beta$, $\boldsymbol{\gamma}_t / \boldsymbol{\gamma}_{t+1}$, $Q(\boldsymbol{S})$ relative to state $k$, loss (KL divergence), HSMM trellis (state vs. frame)]
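The upsampling step of inference (step 2) can be illustrated with a small NumPy sketch that reproduces the poster's example, where durations $d_1 = 2, d_2 = 3$ turn $\boldsymbol{v}_1, \boldsymbol{v}_2, \boldsymbol{v}_3$ into $\boldsymbol{v}_1, \boldsymbol{v}_1, \boldsymbol{v}_2, \boldsymbol{v}_2, \boldsymbol{v}_2, \boldsymbol{v}_3$. This is only a sketch under assumptions: the function name `upsample_by_durations`, the feature values, and the third duration ($d_3 = 1$, which the poster elides) are hypothetical and not taken from the authors' implementation.

```python
import numpy as np


def upsample_by_durations(v, durations):
    """Repeat each state's latent linguistic feature for its duration in frames.

    v:         array of shape (num_states, dim), one vector per state
    durations: integer frame count per state
    Returns the 1-based state sequence S and the frame-level features.
    """
    v = np.asarray(v, dtype=float)
    durations = np.asarray(durations, dtype=int)
    if v.shape[0] != durations.shape[0]:
        raise ValueError("need exactly one duration per state")
    if np.any(durations < 0):
        raise ValueError("durations must be non-negative")
    # State index k is repeated d_k times, e.g. (1, 1, 2, 2, 2, 3).
    states = np.repeat(np.arange(1, len(durations) + 1), durations)
    # The same repetition applied to the feature vectors themselves.
    frames = np.repeat(v, durations, axis=0)
    return states, frames


# Hypothetical 2-dimensional features for three states.
v = np.array([[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]])
# d_1 = 2 and d_2 = 3 follow the poster; d_3 = 1 is assumed for illustration.
states, frames = upsample_by_durations(v, [2, 3, 1])
print(states.tolist())  # [1, 1, 2, 2, 2, 3]
print(frames.shape)     # (6, 2)
```

The frame-level sequence returned here is what an AR predictor such as FUNet would consume one frame at a time in step 3.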

2. Autoregressive variational autoencoder

Conventional AR model
• Achieve remarkable performance
• Training: Teacher forcing (target input, no autoregressive behavior)
• Inference: Free running
⇒ Discrepancy between training and inference

[Figure: teacher forcing vs. free running, both generating $\boldsymbol{X}$]

AR VAE
• AR modeling on latent variable space $\boldsymbol{Z}$
• Training: Approx. posterior
• Inference: Prior
• Consistent autoregressive behavior

Posterior (decoder term times AR prior term):

$$P(\boldsymbol{Z} \mid \boldsymbol{X}) \propto \prod_t P(\boldsymbol{x}_t \mid \boldsymbol{Z})\, P(\boldsymbol{z}_t \mid \boldsymbol{z}_{t-1})$$

Approx. posterior (encoder term times AR prior term; product of Gaussian PDFs):

$$Q(\boldsymbol{Z}) \propto \prod_t l(\boldsymbol{z}_t; \boldsymbol{X})\, P(\boldsymbol{z}_t \mid \boldsymbol{z}_{t-1})$$

[Figure: AR VAE in training (encoder and decoder) and in inference (decoder), with $\boldsymbol{X}$ and $\boldsymbol{Z}$]

4. Experiments

Experimental conditions
• Ximera dataset: Japanese, single female speaker, sampled at 48 kHz
• Waveforms were synthesized using a pre-trained WaveGrad [Chen et al.; ’21] vocoder

Baselines
• Analysis by synthesis (AS)
• FastSpeech 2 [Ren et al.; ’20]: Non-AR model trained using external duration, pitch, and energy
• Tacotron 2 [Shen et al.; ’18]: AR model with location-sensitive attention
• HSMM-ATTN [Nankaku et al.; ’21]: AR model with HSMM-based attention

Proposed models
• AR-VAE: AR VAE applied to Tacotron 2
• ARHSMM-VAE

Evaluation
• Subjective evaluation metric: Mean opinion score (MOS) on 5-point scale
• Objective evaluation metrics using DTW: Mel-cepstral distortion (MCD) and F0 root mean square error (F0RMSE)
• Conventional AR vs. AR VAE: AR VAE > Non-AR > Conv. AR
• Attention mechanism: HSMM-based attn. > Location-sensitive attn.
• Effective on both large and small datasets

Ablations
• Objective evaluation metrics: Duration mean absolute error (DMAE), Mel-cepstral distortion (MCD), and F0 root mean square error (F0RMSE)
• Removing backward path makes F0RMSE worse
• Sharing FUNet parameters is critical
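The objective metrics named in the evaluation (MCD and F0RMSE) can be sketched in NumPy as follows. The poster does not give its exact settings, so several choices here are assumptions: the sequences are taken to be already DTW-aligned frame by frame, the 0th (energy) cepstral coefficient is excluded from MCD, F0 is compared in Hz over frames voiced in both signals, and the function names are hypothetical.

```python
import numpy as np


def mel_cepstral_distortion(ref, syn):
    """Mean MCD in dB between two aligned mel-cepstrum arrays (frames x dims).

    Assumes the frames are already time-aligned (e.g., by DTW) and drops
    the 0th coefficient, a common convention that is assumed here.
    """
    ref = np.asarray(ref, dtype=float)
    syn = np.asarray(syn, dtype=float)
    if ref.shape != syn.shape:
        raise ValueError("aligned sequences must have the same shape")
    diff = ref[:, 1:] - syn[:, 1:]
    # Standard per-frame MCD: (10 / ln 10) * sqrt(2 * sum_d (c_d - c'_d)^2)
    per_frame = (10.0 / np.log(10.0)) * np.sqrt(2.0 * np.sum(diff ** 2, axis=1))
    return float(per_frame.mean())


def f0_rmse(ref_f0, syn_f0):
    """RMSE of F0 over frames that are voiced (F0 > 0) in both sequences."""
    ref_f0 = np.asarray(ref_f0, dtype=float)
    syn_f0 = np.asarray(syn_f0, dtype=float)
    if ref_f0.shape != syn_f0.shape:
        raise ValueError("aligned sequences must have the same shape")
    voiced = (ref_f0 > 0) & (syn_f0 > 0)
    if not voiced.any():
        raise ValueError("no frames are voiced in both sequences")
    return float(np.sqrt(np.mean((ref_f0[voiced] - syn_f0[voiced]) ** 2)))


# Toy data (hypothetical): two frames, three cepstral coefficients each.
ref_mcep = np.zeros((2, 3))
syn_mcep = ref_mcep.copy()
syn_mcep[:, 1] = 1.0  # differ by 1 in the first non-energy coefficient
print(mel_cepstral_distortion(ref_mcep, syn_mcep))
print(f0_rmse([100.0, 0.0, 200.0], [110.0, 120.0, 190.0]))
```

In practice the DTW alignment would be computed first and both metrics evaluated along the resulting warping path; that step is omitted to keep the sketch short.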

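The "product of Gaussian PDFs" noted for the approximate posterior in Section 2 can be made concrete with a short sketch. It assumes that, for a fixed previous sample, both the encoder term $l(\boldsymbol{z}_t; \boldsymbol{X})$ and the AR prior term $P(\boldsymbol{z}_t \mid \boldsymbol{z}_{t-1})$ are diagonal Gaussians in $\boldsymbol{z}_t$, so that their normalized product is again Gaussian with summed precisions. The function name and the numbers are hypothetical and not taken from the authors' implementation.

```python
import numpy as np


def gaussian_product(mu_a, var_a, mu_b, var_b):
    """Mean and variance of the normalized product of two diagonal Gaussians.

    N(z; mu_a, var_a) * N(z; mu_b, var_b) is proportional to N(z; mu, var)
    with 1/var = 1/var_a + 1/var_b and mu = var * (mu_a/var_a + mu_b/var_b).
    """
    mu_a, var_a = np.asarray(mu_a, dtype=float), np.asarray(var_a, dtype=float)
    mu_b, var_b = np.asarray(mu_b, dtype=float), np.asarray(var_b, dtype=float)
    if np.any(var_a <= 0) or np.any(var_b <= 0):
        raise ValueError("variances must be positive")
    var = 1.0 / (1.0 / var_a + 1.0 / var_b)
    mu = var * (mu_a / var_a + mu_b / var_b)
    return mu, var


# Hypothetical encoder term centered at 0 and prior term centered at 2,
# both with unit variance: the product is centered halfway between them
# and has half the variance.
mu, var = gaussian_product([0.0], [1.0], [2.0], [1.0])
print(mu, var)  # [1.] [0.5]
```

Because the combined precision is the sum of the two precisions, whichever term is more confident (smaller variance) pulls the mean toward itself.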