TEST AUTOMATION FOR MACHINE LEARNING: AN EXPERIENCE REPORT

ANGIE JONES

NORTH CAROLINA, USA

MACHINE LEARNING IS A METHOD OF equipping computers with artificial intelligence so that they are able to utilize information that they have been exposed to in order to further develop themselves without the need for additional programming. Not long ago, I had the unique opportunity to work on an exciting project that utilized machine learning as one of its most attractive features. The product was an advertising application that used analytics to determine the best ad that a business could provide to a specific customer in real-time. Much like the advertisements we see when surfing the web are tailored to us based on our browsing habits, this software allowed businesses to show specific products to visitors of their websites, users of their mobile apps, or customers calling into their call centers based on characteristics of that person and their current context. This type of algorithm isn’t entirely new, however, what gave us the advantage over competitors was the coupling of real-time decision making along with a learning algorithm that promised to make a business’s advertising better over time.

I was tasked with verifying that the learning algorithm was working as intended, and chose test automation as a tool to assist me in this effort. While exciting, this was also quite the challenge. Here’s a look into my journey.

" TESTING TRAPEZE | APRIL 2017 22

Learning how it learns

As a tester, and more specifically an automation engineer, I am

accustomed to working on scenarios where the expected result is

exact. For example, when working with a typical e-commerce

application, if I add a product to my empty cart that costs $29.99 and I

modify the quantity in the cart to add two more of this product, my

expected subtotal is $89.97. Exact. Testable. Automatable.
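To make the contrast concrete, here is a minimal sketch of what an exact, automatable check looks like for the cart example. The `cart_subtotal` helper is hypothetical and stands in for whatever the application under test would return.

```python
from decimal import Decimal


def cart_subtotal(unit_price: Decimal, quantity: int) -> Decimal:
    """Hypothetical helper: subtotal for a single product line in the cart."""
    return unit_price * quantity


# One item in the cart, then two more added: quantity 3 at $29.99 each.
subtotal = cart_subtotal(Decimal("29.99"), 1 + 2)

# The expected result is a single exact value, so a plain equality check works.
assert subtotal == Decimal("89.97")
```

`Decimal` is used rather than `float` so that the currency comparison really is exact.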

I quickly discovered that with machine learning, there is no exactness. There’s a range of valid possibilities that could occur based on what the machine has learned and how it will utilize that information in its future decisions. So the first thing I needed to do was learn how the algorithm learns so that I could determine the range of correctness. This required quite a bit of deep diving under the surface of the application into the code and talking extensively with developers to understand what triggers this machine to learn, how it learns, and how it uses what it has learned to make decisions.

The code was quite complex and I’m not ashamed to admit that the

algorithms were over my head. However, I pressed on until I felt I had a

fundamental understanding of how it worked and therefore was able to

come up with scenarios to test it.

Training it

Before testing any learning, I had to give the system something to

learn. Without any learned patterns, the system was designed to

randomly recommend an ad to be provided to a customer. I created a

set of five ads that the system could choose from.

To trigger learning, I needed to simulate a substantial number of

customer transactions to occur within a customized window of time.

After this period of time elapsed, the system would then build a model

based on ads that led to conversions during that time. This essentially

would represent what the machine has learned.

I started with a very simplistic (and stereotypical) scenario where recommended ads were requested for 2,000 unique customers who identified as either male or female. I randomly generated the gender of each customer, so one customer type always made up between 48% and 50% of the pool and the other made up the rest.

My goal was for the vast majority of women to buy the dress after being provided with the dress ad, and the vast majority of men to buy the trousers after being provided with the trousers ad. Since there were five ads, the chance of a woman being shown the dress ad or a man being shown the trousers ad was 20%. One thing I learned about the system during my initial investigation of the learning algorithm was that too much of something was not considered a good thing. Meaning, if 100% of women who were shown the dress ad ended up buying the dress, then by design, the system would treat it as an abnormality. So, to make it more realistic, I skewed the conversions a bit so that it was more like a 65% conversion rate. Also, to ensure models were created for each ad, I had any customer buy the product for whatever ad they were shown 2% of the time.

This essentially ended up with a pool where roughly 1,000 of the

customers were women, of which approximately 200 of them were

provided with an ad for a dress. Of that, about 130 of the women

actually bought the dress. Another 20 or so of the 1,000 women made

random purchases based on the ad they were shown which could

include a few dresses. A similar pool was generated for men and

trousers.
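The training setup described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual automation code: the function and constant names are made up, and `rng.choice(ADS)` stands in for the untrained system's random recommendation, which in the real project came from the application under test.

```python
import random
from collections import Counter

ADS = ["Dress", "Trousers", "Scarf", "Gloves", "Belt"]
PREFERRED_AD = {"female": "Dress", "male": "Trousers"}
TARGET_RATE = 0.65    # conversion rate when the ad matches the scenario
BASELINE_RATE = 0.02  # any customer buys whatever ad they were shown 2% of the time


def simulate_training(num_customers=2000, seed=None):
    """Simulate one training window; return (shown, purchased) counters
    keyed by (gender, ad)."""
    rng = random.Random(seed)
    shown, purchased = Counter(), Counter()
    for _ in range(num_customers):
        gender = rng.choice(["female", "male"])
        ad = rng.choice(ADS)  # stand-in for the untrained system's random pick
        shown[(gender, ad)] += 1
        matched = PREFERRED_AD[gender] == ad
        if (matched and rng.random() < TARGET_RATE) or rng.random() < BASELINE_RATE:
            purchased[(gender, ad)] += 1
    return shown, purchased


shown, purchased = simulate_training(seed=2017)
for gender, ad in sorted(shown):
    print(f"{gender:<6} {ad:<9} saw={shown[(gender, ad)]:>4} "
          f"purchased={purchased[(gender, ad)]:>4}")
```

With 2,000 customers and five ads, each gender and ad combination should be shown to roughly 200 customers, which matches the pool sizes described above.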

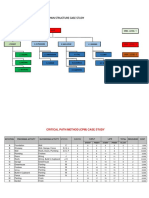

Within my automation code, I kept the results from the training exercise

in a data structure that looked something like this after a given run:

| Ad | Women who saw | Women who purchased | Men who saw | Men who purchased |
|---|---|---|---|---|
| Dress | 202 | 133 | 204 | 3 |
| Trousers | 205 | 3 | 196 | 128 |
| Scarf | 199 | 2 | 203 | 3 |
| Gloves | 194 | 1 | 198 | 1 |
| Belt | 197 | 1 | 202 | 2 |
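As an illustration only, a structure like the one below could hold those training results and make the conversion rates easy to inspect. The article does not show the original data structure, so the `AdResult` class and `conversion_rate` helper are assumptions; the numbers are copied from the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdResult:
    """Hypothetical record of one ad's results in a training run."""
    women_saw: int
    women_purchased: int
    men_saw: int
    men_purchased: int


# Counts copied from the training run shown above.
training_results = {
    "Dress": AdResult(202, 133, 204, 3),
    "Trousers": AdResult(205, 3, 196, 128),
    "Scarf": AdResult(199, 2, 203, 3),
    "Gloves": AdResult(194, 1, 198, 1),
    "Belt": AdResult(197, 1, 202, 2),
}


def conversion_rate(purchased: int, saw: int) -> float:
    """Fraction of customers shown an ad who went on to purchase."""
    return purchased / saw if saw else 0.0


for ad, r in training_results.items():
    women = conversion_rate(r.women_purchased, r.women_saw)
    men = conversion_rate(r.men_purchased, r.men_saw)
    print(f"{ad:<9} women {women:6.1%}   men {men:6.1%}")
```

For this run, the dress converts at about 66% for women and the trousers at about 65% for men, while every other combination stays at or below roughly 1.5%, in line with the intended 65% and 2% rates.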

Testing it

Given the training I’d simulated, it was clear to me that the system should have learned that women are likely to buy dresses and men are likely to buy trousers. Having learned this, I also expected the system to now offer women dresses more often and men trousers more often.

However, what does “more” mean exactly? No one could really give me an exact answer or even a range for this. I’m no statistician or data analyst so I resorted to using POCS - plain ole common sense. If 20% of women were shown the ad for the dress when there was no learning in place, then after the system has learned, I expect more than 20% of the women to be shown the dress ad and I expect the four other ads to be shown less than the dress ad and less than they were shown before the learning. Likewise, I expected a similar result for men but with the trouser ad.

The same scenario ran again but this time with 2,000 new unique

customers. I captured the ads shown and purchases made into a new

data structure and compared it with the before-learning data.

| Ad | Women who saw before | Women who saw after | Men who saw before | Men who saw after |
|---|---|---|---|---|
| Dress | 202 | 890 | 204 | 874 |
| Trousers | 205 | 66 | 196 | 59 |
| Scarf | 199 | 18 | 203 | 24 |
| Gloves | 194 | 14 | 198 | 19 |
| Belt | 197 | 15 | 202 | 21 |

Do you see what’s wrong here? The system indeed learned that the

dress was popular but it began showing the dress ad to most

customers, regardless of gender.
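The common-sense expectations can be written down as range-style checks rather than exact values. The sketch below is one possible encoding, not the original test code: the names are hypothetical, the counts are copied from the before and after comparison, and comparing raw counts assumes the two runs had similar numbers of each customer type (which they did here).

```python
# (before, after) counts of customers shown each ad, per customer group.
SHOWN = {
    "women": {"Dress": (202, 890), "Trousers": (205, 66), "Scarf": (199, 18),
              "Gloves": (194, 14), "Belt": (197, 15)},
    "men": {"Dress": (204, 874), "Trousers": (196, 59), "Scarf": (203, 24),
            "Gloves": (198, 19), "Belt": (202, 21)},
}
EXPECTED_AD = {"women": "Dress", "men": "Trousers"}
RANDOM_SHARE = 1 / 5  # five ads chosen at random before any learning


def learning_violations(group):
    """Return a list of broken expectations for one customer group."""
    counts = SHOWN[group]
    expected = EXPECTED_AD[group]
    expected_after = counts[expected][1]
    total_after = sum(after for _, after in counts.values())

    violations = []
    # The trained-for ad should be shown more than the random 20% of the time.
    if expected_after / total_after <= RANDOM_SHARE:
        violations.append(f"{expected} shown no more than 20% of the time")
    for ad, (before, after) in counts.items():
        if ad == expected:
            continue
        # Every other ad should be shown less than the trained-for ad...
        if after >= expected_after:
            violations.append(f"{ad} shown at least as often as {expected}")
        # ...and less than it was shown before learning.
        if after >= before:
            violations.append(f"{ad} not shown less than before learning")
    return violations


for group in SHOWN:
    print(group, learning_violations(group) or "OK")
```

Run against this data, the checks pass for women and fail for men, which is the symptom described above: the dress ad dominates for both groups.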

Of course, I began wondering what I did wrong...being a novice to

machine learning and all. Did I not train the system properly? I cleared

all models that the system had stored and re-ran the test. This time I

noticed that after the system learned, it began offering the trousers ad

to most customers, regardless of gender! Huh?

After investigating the data collected for each run, I noticed that whichever ad led to the most conversions during that training period would be the product that the system learned was most favorable. The second most popular one would be shown more than the random 20% of the time, but less than the most popular ad. It became clear to me that the customer demographic was not being considered in these decisions and that this was clearly a bug.

Doubting my findings

Writing up the bug report was similar to writing this article. Because

there’s no exact expected result, I needed to include detailed

information about how I trained the system, why my expectations were

what they were, and how I verified them.

There were only two developers assigned to work on the learning module. One was a brilliant developer who understood this machine learning stuff way more than anyone else in the company (let’s call him Arty), and the other was a junior developer looking to learn from him.

Arty received my bug report but was not convinced that there was a

problem with the system. You see, Arty had set up his own scenario,

which was different from mine, and you guessed it...it worked on his

machine!

Arty closed my bug report.

Being that I was new to this and Arty was the in-house expert, I second-guessed myself yet again and went back to my automation code to study my setup. I ran the scenario multiple times to generate more models and further reinforce the learning that women buy dresses and men buy trousers. That didn’t seem to change the outcome. After a day of racking my brain, I was convinced that my initial test was correct and that, regardless of what Arty said, the way the system was learning was not as intended.

I reopened the bug report.

Proving my findings

Arty was convinced that the issue I reported was not a bug and I was convinced that it was. Consequently, this high-priority bug sat there for several weeks. Eventually, it became time to release the software and of course we had to come to some resolution before doing so. The product owner called a meeting with Arty and me to hear both arguments.

As a tester, I believe my primary role is to provide information about the product, and while I don’t fight to get bugs fixed, I do believe a part of my role is also to advocate for the customer. So, while I had the data from my findings, I presented my observations to the product owner with customer impact instead. I explained that 65% of men bought trousers, yet our system is much more likely to present a man with an ad for a dress, even when there’s only a 1% chance that this will lead to a conversion. I highlighted that this issue will cause businesses to miss out on potential sales, it goes against what we’ve marketed our product to be, and I don’t think our customers will find this acceptable.

Arty presented his beliefs and went into a lot of theory about machine learning. After the lesson, he then changed the scenario on his machine to resemble mine and proceeded to run it during the meeting to prove that it, in fact, works.

It didn’t.

Arty finally conceded that we indeed had a bug on our hands. He also kicked himself for ever doubting me in the first place, which I’ll admit, felt pretty good.

What I learned

As technology advances, so must our test approaches. In this case, hard rules such as “the exact results must be known in order to test or automate” had to be revisited and challenged.

The need for automation became much more apparent as thousands of customer transactions needed to be simulated and analyzed. Yet, coding the scenario was only one small part of actually testing the feature. There was still a need for thoughtful and critical testing, and this was definitely a case where automation was not just about validating that certain features were correct, but was utilized as a tool to support that testing.

I also learned that no matter how smart machines become, having blind faith in them is not wise; plain ole common sense can still trump an algorithm. While we should embrace new technology, it’s imperative that we, as testers, not allow others to convince us that any software has advanced beyond the point of needing testing. We must continue to question, interrogate, and advocate for the human customers that we serve.

Angie Jones is a Consulting Automation Engineer who advises several scrum teams on automation strategies and has developed automation frameworks for countless software products. As a Master Inventor, she is known for her innovative and out-of-the-box thinking style which has resulted in more than 20 patented inventions in the US and China. Angie shares her wealth of knowledge internationally by speaking and teaching at software conferences, serving as an Adjunct College Professor of Computer Programming, and leading tech workshops for young girls through TechGirlz and Black Girls Code.
