Commentary

iMedPub Journals
www.imedpub.com

Health Science Journal
ISSN 1791-809X
2020
Vol. 14 No. 5: 742

DOI: 10.36648/1791-809X.14.5.742

Mind and Machine: Two sides of the same Coin?

Sukanya Chakraborty and Siddhartha Sen*

1 Department of Biological Sciences, Indian Institute of Science Education and Research, Berhampur, Odisha, India

2 Department of Information Science with focus on Artificial Intelligence and allied fields, R.V. College of Engineering, Bengaluru, India

*Corresponding author: Siddhartha Sen

siddharthasen.is17@rvce.edu.in

Tel: +918697701844

Department of Information Science with focus on Artificial Intelligence and allied fields, R.V. College of Engineering, Bengaluru, India

Citation: Chakraborty S, Sen S (2020) Mind and Machine: Two sides of the same Coin? Health Sci J. Vol. 14 No. 5: 742.

© Copyright iMedPub | This article is available in: http://www.hsj.gr/

Abstract

The human brain has been the source of inspiration behind the birth and growth of Artificial Intelligence. The achievements in the diverse domains of AI have stemmed from replication of brain circuits. Almost as if coming full circle, AI, which was inspired by the brain, has also found several applications in neuroscience and in understanding the brain. In this article, we explore these two domains individually and attempt to elucidate how closely correlated they actually are.

Keywords: Artificial intelligence; Neural networks; Machine learning; Brain; Signal processing; Neuroscience

Received with Revision July 28, 2020, Accepted: August 12, 2020, Published: August 17, 2020

Introduction

Ancient Greek philosophers spent much of their time pondering what truly makes one intelligent, but the question was taken up in science and research only about half a century ago. Neuroscience has striven to understand how the brain processes information, makes decisions, and interacts with the environment. In the mid-20th century, a new school of thought arose: how can we simulate intelligence in an artificial system? And so Artificial Intelligence (AI) was born (Figure 1).

Figure 1 Brain on a Chip.
Image Credits: Mike MacKenzie, via www.vpnsrus.com

Artificial Intelligence has been defined as “the science and engineering of making intelligent machines” by John McCarthy, who coined the term in 1956. It aims at enabling machines to perform tasks such as computation and prediction without manual intervention. AI draws its inspiration from the brain, and its ultimate goal is to make our work easier. It does this by mimicking the way the brain works and trying to replicate it in computers.

A Brief Historical Perspective

Brain science and AI have progressed in a closely knit fashion over the past several decades [1]. Soon after the birth of modern computers, research on AI gained momentum, with the goal of building machines that can “think”. With advances in microscopy and tissue staining by the early 1900s, researchers began imaging neuronal connections in brain tissues. The web of connections between neurons inspired computer scientists to mould the Artificial Neural Network (ANN). This was one of the earliest and most effective models in the history of AI (Figure 2).

Figure 2 Golgi Stained Neurons from Mouse (BL/6) Hippocampus.
Image Credits: NIMHANS, Neurophysiology

In 1949, a set of learning rules was formulated: the Hebbian learning rules. This is one of the oldest learning algorithms inspired by the dynamics of biological neural systems. The principle is that when the neurons on either side of a synapse (a junction between cells in the nervous system) are repeatedly active together, the connection is reinforced. In a biological context, this is one of the ways in which we learn and remember things, and the strengthening is called potentiation. For example, consider simple tasks like finding your way back home or remembering the multiplication table of 9. They seem quite easy because the synapses in these pathways have been active very frequently and have thus become strengthened. Hence, the task seems effortless. The same principle is now used in AI to make machines learn [2].

Following this development, ANNs witnessed an enormous surge in research. An important achievement was the perceptron, an AI model intended to replicate how the brain stores and organizes information. Developed by Frank Rosenblatt in 1957, this simple ANN processes multiple inputs to produce a single output. It is, in a way, a model of a single neuron: input values are entered, a mathematical function acts on them, and an output is generated. The perceptron laid the foundation for the subsequent, more complex networks (Figure 3).

Figure 3 Schematic Representation of Perceptron.
Created using Microsoft Corporation (2019) Microsoft PowerPoint.

The 1981 Nobel Prize in Physiology or Medicine was awarded to Hubel and Wiesel for elucidating visual processing [3]. Their work gave insights into how the visual system perceives information. Using electronic signal detectors, they captured the responses of neurons when the visual system was presented with different images. It was

found that there are several layers of neurons leading from the eyes up to the visual centre in the brain. Each layer has its own computations to perform. In this way, a biological system can transform simple inputs into complex features. These observations laid the foundation for modern neural networks, which work at many layers or levels.

Artificial Neural Networks

A human brain is composed of about 86 billion neurons, intricately connected with other neural cells. Each neuron is a single cell with branches called dendrites, which bring in information, and a tail-like axon, which transmits the processed information on to other cells. The main processing occurs in the soma, or cell body, of the neuron. Activation of a neuron creates a spike in potential which is transmitted as an electrical signal [4]. By analogy, the neuronal cell body is equivalent to a node, the basic unit of an artificial network. The dendrites are like the inputs to this node, and the axon is like its output. The action potential of an individual neuron is generated at the junction between the axon and the cell body, based on the inputs the neuron receives. In ANNs, mathematical tools such as linear combinations and sigmoid functions are used to compute the corresponding activation of a node.

The basic architecture of an ANN closely resembles these biological connections. A typical ANN consists of at least three layers: input, hidden and output. The complexity of the network increases with the number of hidden layers. The input layer receives a vector, or series, of predictor variable values. These are the features on the basis of which predictions are to be made. For example, in a face recognition program, these would be the image pixel values. The inputs are then distributed to every node in the hidden layer. Alongside the inputs, a constant input called the bias (whose value is set to 1) is supplied. In the hidden layer, the bias and the predictor variables are each multiplied by their corresponding weights, and the products are added together. An activation function (such as the sigmoid) is applied to the resulting sum. This becomes the output of a node in the hidden layer, which is then forwarded to the output layer. Multiple hidden layers are used to solve more complex problems. Finally, the output layer makes predictions based on another round of calculations [5] (Figure 4).

Interdisciplinary Nature of AI and Neuroscience

In the modern world, AI and Neuroscience are closely interlinked in their applications. The complexity of the brain and its cognitive power demand multidisciplinary expertise. AI aims to investigate theories and build computer systems equipped to perform tasks that require human intelligence, decision-making and control. Thus, it has an important role in understanding the working of the brain. By visualising biological processes on an artificial platform, we can gain new insights. AI can also benefit from this, because a detailed analysis of how the brain works could provide insight into the nature of cognition itself and help in simulating it artificially [6]. Essentially, the two fields are driving each other forward.

AI has become an indispensable tool in neuroscience. Training an algorithm to mimic the qualities of vision and hearing enables us

to understand how the biological network functions. It can also help crack the activation patterns of individual groups of neurons that underlie a thought or behaviour. Incorporating AI into the construction of brain implants is making their internal processes, such as identifying electrical spikes of activity, far more effective. Machine Learning has greatly simplified the interpretation and reconstruction of data obtained from techniques such as Functional Magnetic Resonance Imaging (fMRI), which is integral to brain research.

Figure 4 Representation of ANN Architecture.
Created using Microsoft Corporation (2019) Microsoft PowerPoint.

Future Prospects

AI and Neuroscience collectively aim to answer several questions which have remained unaddressed for a long time. One such question is the exact mechanism of learning. The very essence of an ANN lies in how it “learns” by repeated trials, drawing feedback from the errors in previous trials. It adjusts itself to produce results that resemble the expected output as closely as possible, and the process is repeated until the output is close enough to the expected one. Like a human brain, it trains itself. ANNs mostly perform supervised learning, i.e., learning in which the classes into which an object is to be classified are known beforehand. The task of image recognition, for example, is performed by training neural networks on the ImageNet dataset. The networks develop a statistical understanding of what images with the same label (‘dog’, for instance) have in common. When shown a new image, the networks examine it for similar patterns and respond accordingly [4].

The use of AI to understand higher order brain processes like cognition might be explored in the future.

To blend AI and neuroscience within an integrated framework, there are technical hurdles that need to be addressed. More importantly, if the goals of the two disciplines can be better aligned, we might see several developments. Principles from neuroscience investigations such as visual processing or spatial navigation have already found applications in neural-inspired AI [8,9]. However, biological neural research mostly focuses on understanding the mechanisms and form of the brain and other parts. AI is more application-driven, and strives to improve the performance of computational systems. To bridge the gap, a common “language” is the need of the day: a way to convey results between the two fields in an effective and constructive manner [10].

Conclusion

Incorporating neuroscientific concepts in Artificial Intelligence has proven to be computationally quite expensive, and the architecture is difficult to implement in hardware. Attempts are being made to develop AI systems which can perform tasks with little or no data to work on (e.g., One Shot Learning in Computer Vision). This is an approach which aims to learn information about object categories from one or very few training samples [11]. We face such scenarios in our own lives when we have to perform new categorization tasks with limited or no prior knowledge (e.g., someone who has seen a ship only once or twice in their life will still be able to identify it). While incorporating the ideas we get from Neuroscience in hardware is difficult, the growing use of high-performance computing platforms such as GPUs (Graphics Processing Units) is improving the situation [12]. The ability to incorporate neural algorithms in computer systems should not be limited by factors like computer architecture, hardware requirements and processing speed.

Neural networks are a rough representation of the brain, as they model neurons as mathematical functions. In contrast, our brains function as highly sophisticated devices using electro-chemical signals. That makes us unique as individuals and

different from machines. It is an undeniable fact that AI has left a mark on the world in several facets. Nevertheless, for machines to achieve the computational power and mystique of the human brain may well remain an impossible feat for years to come.

Acknowledgement

We would like to express our heartfelt gratitude to Dr. Pinakpani Pal, Associate Professor, Electronics and Communication Sciences Unit, Indian Statistical Institute, Kolkata, for sharing his views on recent developments in Artificial Intelligence and associated fields.
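As an illustration of the layer-by-layer computation described under Artificial Neural Networks (predictor inputs, a bias input fixed at 1, multiplication by weights, summation, and a sigmoid activation), the following is a minimal Python sketch of a forward pass through one hidden layer and one output layer. It is not taken from the article: the function names and the weight values are hypothetical, chosen only to make the arithmetic easy to follow, and a real network would learn its weights from data.

```python
import math


def sigmoid(z):
    """Logistic activation: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs, weights, bias_weight):
    """Output of one node: sigmoid of the weighted sum of its inputs and bias."""
    bias_input = 1.0  # the bias is a constant input whose value is set to 1
    total = bias_weight * bias_input
    for x, w in zip(inputs, weights):
        total += w * x  # each input is multiplied by its corresponding weight
    return sigmoid(total)


def layer_output(inputs, layer):
    """Feed the same input vector to every node of a layer."""
    return [node_output(inputs, weights, bias_weight)
            for weights, bias_weight in layer]


def forward(inputs, hidden_layer, output_layer):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_output(inputs, hidden_layer)
    return layer_output(hidden, output_layer)


# Hypothetical weights (illustration only): each node is (input weights, bias weight).
hidden_layer = [([0.5, -0.5], 0.0), ([1.0, 1.0], -1.0)]
output_layer = [([1.0, -1.0], 0.0)]

prediction = forward([1.0, 1.0], hidden_layer, output_layer)
print(prediction)
```

Adding further hidden layers would amount to calling `layer_output` once more per layer before the output layer, which mirrors the remark that multiple hidden layers are used for more complex problems.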

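The Hebbian principle from the historical overview, in which a connection is reinforced when the cells on both sides of it are repeatedly active together, can be sketched numerically. The snippet below assumes the simplest textbook form of the rule, in which each weight grows by the learning rate times the presynaptic activity times the postsynaptic activity. The function name, the learning rate and the activity pattern are illustrative assumptions, not something specified in the article.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """One Hebbian step: each weight grows by learning_rate * pre_i * post."""
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


weights = [0.0, 0.0, 0.0]
pattern = [1.0, 0.0, 1.0]  # presynaptic inputs 0 and 2 are active, input 1 is silent

for _ in range(5):
    post = 1.0  # assumption: the postsynaptic cell fires each time the pattern is shown
    weights = hebbian_update(weights, pattern, post)

print(weights)
```

Only the connections from the active inputs are strengthened, and the silent input keeps a weight of zero. In this plain form the weights grow without bound under repeated stimulation, so practical variants add some kind of normalisation or decay.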
References

1 Hassabis D, Kumaran D, Summerfield C, Botvinick M (2017) Neuroscience-inspired artificial intelligence. Neuron 95: 245-258.

2 Choe Y (2014) Hebbian Learning. In: Jaeger D, Jung R (eds) Encyclopedia of Computational Neuroscience. Springer, New York, NY.

3 Hubel DH (1982) Exploration of the primary visual cortex, 1955–78. Nature 299: 515-524.

4 Sidiropoulou K, Pissadaki EK, Poirazi P (2006) Inside the brain of a neuron. EMBO reports 7: 886-892.

5 Sharma V, Rai S, Dev A (2012) A comprehensive study of artificial neural networks. International Journal of Advanced research in computer science and software engineering 2.

6 Potter SM (2007) What can AI get from neuroscience? In 50 years of artificial intelligence. Springer, Berlin, Heidelberg.

7 Van der Velde F (2010) Where artificial intelligence and neuroscience meet: The search for grounded architectures of cognition. Advances in Artificial Intelligence.

8 Ponce CR, Xiao W, Schade PF, Hartmann TS, Kreiman G, et al. (2019) Evolving images for visual neurons using a deep generative network reveals coding principles and neuronal preferences. Cell 177: 999-1009.

9 Hawkins J, Lewis M, Klukas M, Purdy S, Ahmad S (2019) A framework for intelligence and cortical function based on grid cells in the neocortex. Front Neural Circuits 12: 121.

10 Chance FS, Aimone JB, Musuvathy SS, Smith MR, Vineyard CM, et al. (2020) Crossing the Cleft: Communication Challenges Between Neuroscience and Artificial Intelligence. Front Comput Neurosci.

11 Snell J, Swersky K, Zemel R (2017) Prototypical networks for few-shot learning. In: Advances in neural information processing systems 30.

12 Blouw P, Choo X, Hunsberger E, Eliasmith C (2019) Benchmarking keyword spotting efficiency on neuromorphic hardware. In: Proceedings of the 7th Annual Neuro-inspired Computational Elements Workshop.

You might also like

- The Impact of Artificial Intelligence and Deep Learning in Eye Diseases: A ReviewDocument11 pagesThe Impact of Artificial Intelligence and Deep Learning in Eye Diseases: A ReviewJay CurranNo ratings yet

- An Ancient Wish To Forge The GodsDocument21 pagesAn Ancient Wish To Forge The GodsParam Chadha100% (1)

- Brain Machine InterfaceDocument4 pagesBrain Machine InterfaceIJRASETPublicationsNo ratings yet

- 1 s2.0 S0893608021003683 MainDocument11 pages1 s2.0 S0893608021003683 MainLYCOONNo ratings yet

- 6 ApplicationDocument6 pages6 ApplicationsharathNo ratings yet

- Progress in Brain Computer Interface: Challenges and OpportunitiesDocument20 pagesProgress in Brain Computer Interface: Challenges and OpportunitiesSarammNo ratings yet

- Artificial Intelligence and Psychology Developments andDocument15 pagesArtificial Intelligence and Psychology Developments andJosé Luis PsicoterapeutaNo ratings yet

- Reading Material 2 Human AndmachinesDocument5 pagesReading Material 2 Human AndmachinesKONINIKA BHATTACHARJEE 1950350No ratings yet

- Artificial Intelligence in Medicine Book - 2022 - 2Document15 pagesArtificial Intelligence in Medicine Book - 2022 - 2pedroNo ratings yet

- An Assessment On Humanoid Robot: M. Lakshmi Prasad S. Giridhar - ShahamilDocument6 pagesAn Assessment On Humanoid Robot: M. Lakshmi Prasad S. Giridhar - ShahamilEditor InsideJournalsNo ratings yet

- Iscience: Plasticity and Adaptation in Neuromorphic Biohybrid SystemsDocument26 pagesIscience: Plasticity and Adaptation in Neuromorphic Biohybrid SystemsAndré AlexandreNo ratings yet

- Brain Computer Interface Literature ReviewDocument5 pagesBrain Computer Interface Literature Reviewbujuj1tunag2100% (1)

- Artificial IntelligenceDocument8 pagesArtificial IntelligenceFatimazahra MachkourNo ratings yet

- Blue Brain TechnologyDocument5 pagesBlue Brain TechnologyIJRASETPublicationsNo ratings yet

- 20 - Neurocomputing3Document7 pages20 - Neurocomputing3Abdul AgayevNo ratings yet

- Индивидуальный проект «Artificial Intelligence: pros and cons» («Искусственный интеллект: за и против»)Document8 pagesИндивидуальный проект «Artificial Intelligence: pros and cons» («Искусственный интеллект: за и против»)ВикторияNo ratings yet

- Applications of Artificial Intelligence & Associated TechnologiesDocument6 pagesApplications of Artificial Intelligence & Associated TechnologiesdbpublicationsNo ratings yet

- Neurorobotics Workshop For High School Students Promotes Competence and Confidence in Computational NeuroscienceDocument10 pagesNeurorobotics Workshop For High School Students Promotes Competence and Confidence in Computational NeuroscienceJoshua HernandezNo ratings yet

- Master Thesis Brain Computer InterfaceDocument8 pagesMaster Thesis Brain Computer Interfaceangelagibbsdurham100% (2)

- Human Brain/Cloud Interface: Hypothesis and Theory Published: 29 March 2019 Doi: 10.3389/fnins.2019.00112Document24 pagesHuman Brain/Cloud Interface: Hypothesis and Theory Published: 29 March 2019 Doi: 10.3389/fnins.2019.00112Mark LaceyNo ratings yet

- Triton Int'L Ss/College Subidhanagar-32, KTMDocument25 pagesTriton Int'L Ss/College Subidhanagar-32, KTMShrey AgastiNo ratings yet

- Intelligent Systems for Information Processing: From Representation to ApplicationsFrom EverandIntelligent Systems for Information Processing: From Representation to ApplicationsNo ratings yet

- Lecture 01Document11 pagesLecture 01aimamalik338No ratings yet

- Application of Artificial IntelligenceDocument12 pagesApplication of Artificial IntelligencePranayNo ratings yet

- Facial Emotion Detection Using Deep LearningDocument9 pagesFacial Emotion Detection Using Deep LearningIJRASETPublicationsNo ratings yet

- Artificial Intelligence in HealthcareDocument13 pagesArtificial Intelligence in HealthcareJenner FeijoóNo ratings yet

- A Review Paper On General Concepts of "Artificial Intelligence and Machine Learning"Document4 pagesA Review Paper On General Concepts of "Artificial Intelligence and Machine Learning"GOWTHAMI M KNo ratings yet

- Perspectives: On Scientific Understanding With Artificial IntelligenceDocument9 pagesPerspectives: On Scientific Understanding With Artificial IntelligenceElif Ebrar KeskinNo ratings yet

- Ai50Proceedings WebDocument266 pagesAi50Proceedings WebConstanzaPérezNo ratings yet

- Bionic Final PDFDocument4 pagesBionic Final PDFJasmine RaoNo ratings yet

- 1 s2.0 S2414644720300208 MainDocument4 pages1 s2.0 S2414644720300208 MainWindu KumaraNo ratings yet

- Fnhum 16 1055546Document9 pagesFnhum 16 1055546nour el imen mouaffekNo ratings yet

- Explainable AI: Interpreting, Explaining and Visualizing Deep LearningFrom EverandExplainable AI: Interpreting, Explaining and Visualizing Deep LearningWojciech SamekNo ratings yet

- Cognitive Computing: Methodologies For Neural Computing and Semantic Computing in Brain-Inspired SystemsDocument14 pagesCognitive Computing: Methodologies For Neural Computing and Semantic Computing in Brain-Inspired SystemsRaul DiazNo ratings yet

- 35 BlueEyesTechDocument22 pages35 BlueEyesTechsai pavanNo ratings yet

- Intelligent System: Haruki UenoDocument36 pagesIntelligent System: Haruki Uenokanchit pinitmontreeNo ratings yet

- Review Paper On Blue Brain TechnologyDocument8 pagesReview Paper On Blue Brain TechnologyIJRASETPublicationsNo ratings yet

- The Relationship Between Artificial Intelligence and Psychological TheoriesDocument5 pagesThe Relationship Between Artificial Intelligence and Psychological TheoriesSyed Mohammad Ali Zaidi KarbalaiNo ratings yet

- A Review On Methods, Issues and Challenges in Neuromorphic EngineeringDocument6 pagesA Review On Methods, Issues and Challenges in Neuromorphic EngineeringJeeshma Krishnan KNo ratings yet

- Engineering: Guoguang Rong, Arnaldo Mendez, Elie Bou Assi, Bo Zhao, Mohamad SawanDocument11 pagesEngineering: Guoguang Rong, Arnaldo Mendez, Elie Bou Assi, Bo Zhao, Mohamad SawanNitesh LaguriNo ratings yet

- 10.1007@978 3 030 37078 7Document280 pages10.1007@978 3 030 37078 7Pablo Ernesto Vigneaux WiltonNo ratings yet

- Oncología IADocument9 pagesOncología IALuis Eduardo Santaella PalmaNo ratings yet

- Self Study: Oriental Institute of Science & Technology, Bhopal Department of Information TechnologyDocument13 pagesSelf Study: Oriental Institute of Science & Technology, Bhopal Department of Information TechnologyAditi BhateNo ratings yet

- Paper On Blue EyesDocument8 pagesPaper On Blue EyesUnknown MNo ratings yet

- Workshops of The Sixth International Brain Computer Interface Meeting Brain Computer Interfaces Past Present and FutureDocument35 pagesWorkshops of The Sixth International Brain Computer Interface Meeting Brain Computer Interfaces Past Present and FutureANTONIO REYES MEDINANo ratings yet

- FRONTIERS Krakow GDCDocument65 pagesFRONTIERS Krakow GDCGordana Dodig-CrnkovicNo ratings yet

- A Thousand Brains Toward Biologically ConstrainedDocument15 pagesA Thousand Brains Toward Biologically ConstrainedGoal IASNo ratings yet

- A Synopsis Report On: Submitted ToDocument8 pagesA Synopsis Report On: Submitted ToAkshayNo ratings yet

- Brain Chip InterferenceDocument5 pagesBrain Chip InterferenceSuyash MauryaNo ratings yet

- Intelligence To Artificial CreativityDocument13 pagesIntelligence To Artificial CreativityFernandaNo ratings yet

- Artificial Brain: Bharat Ratna Indira Gandhi College of Engineering Kegaon, SolapurDocument3 pagesArtificial Brain: Bharat Ratna Indira Gandhi College of Engineering Kegaon, SolapurApoorv SinghalNo ratings yet

- Integrating Machine Learning With Human KnowledgeDocument27 pagesIntegrating Machine Learning With Human KnowledgeLe Minh Tri (K15 HL)No ratings yet

- A Study of Deep Learning ApplicationsDocument3 pagesA Study of Deep Learning ApplicationsEditor IJTSRDNo ratings yet

- Artificial Intelligence (AI)Document6 pagesArtificial Intelligence (AI)Pankaj Kumar JhaNo ratings yet

- Bionic Eye - An Artificial Vision & Comparative Study Based On Different Implant TechniquesDocument9 pagesBionic Eye - An Artificial Vision & Comparative Study Based On Different Implant TechniquesGabi BermudezNo ratings yet

- Summary Buku Introduction To Management - John SchermerhornDocument5 pagesSummary Buku Introduction To Management - John SchermerhornElyana BiringNo ratings yet

- Control 4Document17 pagesControl 4muhamed mahmoodNo ratings yet

- Literature & MedicineDocument14 pagesLiterature & MedicineJoyce LeungNo ratings yet

- Data Center Cooling SolutionsDocument24 pagesData Center Cooling SolutionsGemelita Ortiz100% (1)

- Cree J Series™ 2835 Leds: Product Description FeaturesDocument28 pagesCree J Series™ 2835 Leds: Product Description FeaturesLoengrin MontillaNo ratings yet

- Highway Weigh-In-Motion (WIM) Systems With User Requirements and Test MethodsDocument18 pagesHighway Weigh-In-Motion (WIM) Systems With User Requirements and Test MethodsFayyaz Ahmad KhanNo ratings yet

- Installation Manual - Chain Link FenceDocument2 pagesInstallation Manual - Chain Link FenceChase GietterNo ratings yet

- Chapter 5 - Adlerian TherapyDocument28 pagesChapter 5 - Adlerian TherapyRhalf100% (1)

- Last Boat Not Least - An Unofficial Adventure For Fallout 2d20Document14 pagesLast Boat Not Least - An Unofficial Adventure For Fallout 2d20Veritas Veritati100% (3)

- Sports Tournament Management SystemDocument6 pagesSports Tournament Management SystemPusparajan PapusNo ratings yet

- Biology 20 Unit A Exam OutlineDocument1 pageBiology 20 Unit A Exam OutlineTayson PreteNo ratings yet

- Physics Notes AJk 9th Class Chap6Document3 pagesPhysics Notes AJk 9th Class Chap6Khizer Tariq QureshiNo ratings yet

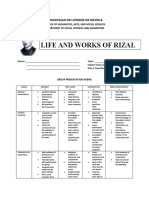

- Rubrics - Reporting - RizalDocument2 pagesRubrics - Reporting - RizaljakeNo ratings yet

- Lambda Calculus CLCDocument38 pagesLambda Calculus CLCDan Mark Pidor BagsicanNo ratings yet

- Lyra Kate Mae P. Salvador BSA-1 MANSCI-446 Course Final RequirementDocument5 pagesLyra Kate Mae P. Salvador BSA-1 MANSCI-446 Course Final RequirementLyra Kate Mae SalvadorNo ratings yet

- Further MathematicsDocument44 pagesFurther MathematicsMohd Tirmizi100% (1)

- Chart-5050 by Songram BMA 54thDocument4 pagesChart-5050 by Songram BMA 54thMd. Noor HasanNo ratings yet

- Fallas de ManeaderoDocument17 pagesFallas de ManeaderoGerman Martinez RomoNo ratings yet

- Format of Actual BatchDocument16 pagesFormat of Actual Batchaljhon dela cruzNo ratings yet

- Prelude by Daryll DelgadoDocument3 pagesPrelude by Daryll DelgadoZion Tesalona30% (10)

- SAEP-35 Valves Handling, Hauling, Receipt, Storage, and Testing-15 April 2020Document10 pagesSAEP-35 Valves Handling, Hauling, Receipt, Storage, and Testing-15 April 2020Ahdal NoushadNo ratings yet

- ANSYS SimplorerDocument2 pagesANSYS Simplorerahcene2010No ratings yet

- Estadistica MultivariableDocument360 pagesEstadistica MultivariableHarley Pino MuñozNo ratings yet

- Glazing Risk AssessmentDocument6 pagesGlazing Risk AssessmentKaren OlivierNo ratings yet

- COMSOL WebinarDocument38 pagesCOMSOL WebinarkOrpuzNo ratings yet

- lmp91000 PDFDocument31 pageslmp91000 PDFJoão CostaNo ratings yet

- Bash SHELL Script in LinuxDocument6 pagesBash SHELL Script in LinuxHesti WidyaNo ratings yet

- Miele G 666Document72 pagesMiele G 666tszabi26No ratings yet

- Mathematical Derivation of Optimum Fracture Conductivity For Pseudo-Steady State and Steady State Flow Conditions: FCD Is Derived As 1.6363Document12 pagesMathematical Derivation of Optimum Fracture Conductivity For Pseudo-Steady State and Steady State Flow Conditions: FCD Is Derived As 1.6363Christian BimoNo ratings yet

- Electronic Transformer For 12 V Halogen LampDocument15 pagesElectronic Transformer For 12 V Halogen LampWin KyiNo ratings yet