Chapter 2

Automated experiment tracking

FULLY AUTOMATED MLOPS

Arturo Opsetmoen Amador

Senior Consultant - Machine Learning

Machine learning has an experimental nature

Many "levers" to experiment with:

Data transformations

Features used

Training algorithms

Hyperparameter tuning

Evaluation metrics

Precision, recall, etc.

A large space of possibilities!
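To get a feel for how quickly the levers multiply, the sketch below counts every combination of a few hypothetical choices per lever. The option names and values are illustrative assumptions, not taken from the course.

```python
from itertools import product

# Hypothetical options for each lever; the names are illustrative only.
levers = {
    "data_transformation": ["none", "standardize", "log"],
    "feature_set": ["basic", "basic+interactions"],
    "algorithm": ["logistic_regression", "random_forest", "gradient_boosting"],
    "learning_rate": [0.01, 0.1, 1.0],
    "metric": ["precision", "recall"],
}

# Every experiment is one choice per lever, so the space is the Cartesian product.
experiments = [dict(zip(levers, combo)) for combo in product(*levers.values())]

print(len(experiments))  # 3 * 2 * 3 * 3 * 2 = 108
```

Even with two or three options per lever, this small grid already holds more than a hundred distinct experiments.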

Problems of Manual Machine Learning Workflows

Lack of automation = problems in manual ML workflows

Difficult to track experiments and results

Wasted time and resources

Hard to reproduce/share results

Automated logging in ML

Important things to log include:

Code

Environment

Data

Parameters

Metrics
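As a minimal sketch of what one logged run could contain, the function below collects the five items listed above into a single record using only the standard library. The function names, the record layout, and the sample values (including the commit hash) are assumptions made for illustration.

```python
import hashlib
import json
import platform
import sys
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Return a short SHA-256 digest identifying the exact bytes of a dataset."""
    return hashlib.sha256(data).hexdigest()[:12]


def build_run_record(code_version, data, params, metrics):
    """Collect code, environment, data, parameters, and metrics into one dict."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "code": code_version,  # e.g. a git commit hash, passed in by the caller
        "environment": {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        },
        "data": fingerprint(data),
        "parameters": params,
        "metrics": metrics,
    }


record = build_run_record(
    code_version="abc1234",  # hypothetical commit hash
    data=b"age,income\n34,52000\n",  # stand-in for the training file's bytes
    params={"max_depth": 5},
    metrics={"precision": 0.91, "recall": 0.84},
)
print(json.dumps(record, indent=2))
```

Hashing the data rather than copying it keeps the record small while still revealing when two runs used different inputs.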

The importance of logging

Logging is essential for:

The reproducibility of experiments in ML systems.

Tracking system performance and making informed decisions.

Identifying potential issues for improvement.

Reproducibility provides transparency and is crucial to make our systems trustworthy.

Automated experiment tracking system

Organize logs per run or experiment to:

See model training metadata

Compare model training runs

Reproduce model training runs
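The idea of organizing logs per run can be sketched with a toy in-memory tracker. `Run` and `ExperimentTracker` are hypothetical names invented for this example; real tracking tools persist runs to disk or a server and offer far more.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    run_id: int
    params: dict
    metrics: dict


@dataclass
class ExperimentTracker:
    """Hypothetical in-memory tracker that keeps one record per training run."""
    runs: list = field(default_factory=list)

    def log_run(self, params, metrics):
        run = Run(run_id=len(self.runs) + 1, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric):
        """Compare runs: return the one with the highest value for `metric`."""
        return max(self.runs, key=lambda r: r.metrics[metric])


tracker = ExperimentTracker()
tracker.log_run({"max_depth": 3}, {"recall": 0.78})
tracker.log_run({"max_depth": 5}, {"recall": 0.84})
tracker.log_run({"max_depth": 8}, {"recall": 0.81})

best = tracker.best_run("recall")
print(best.run_id, best.params)  # 2 {'max_depth': 5}
```

Because each run stores its parameters next to its metrics, the best run can be reproduced by training again with `best.params`.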

Automated experiment tracking - Today's market

Several tools that automate experiment tracking:

TensorBoard

MLflow

Weights & Biases

Neptune

The model registry

F U L LY A U T O M AT E D M L O P S

Arturo Opsetmoen Amador

Senior Consultant - Machine Learning

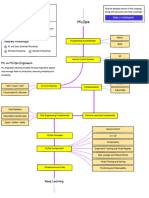

The ML model lifecycle

Throwing a model over the fence

A first step towards automated MLOps

What is the model registry?

What is the model registry? - Experimentation

What is the model registry? - Registering a model

What is the model registry? - Updated deployment

What is the model registry? - Model decommission
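The slide titles above trace a model from experimentation through registration, updated deployment, and decommission. A rough sketch of that lifecycle follows; the `ModelRegistry` class, the stage names, and the rule that only one version serves at a time are all assumptions made for illustration.

```python
class ModelRegistry:
    """Hypothetical in-memory registry tracking model versions and their stage."""

    def __init__(self):
        self.versions = {}  # version number -> {"artifact": ..., "stage": ...}

    def register(self, artifact):
        version = len(self.versions) + 1
        self.versions[version] = {"artifact": artifact, "stage": "registered"}
        return version

    def deploy(self, version):
        # Assumption: one version serves at a time; the previous one is decommissioned.
        for info in self.versions.values():
            if info["stage"] == "production":
                info["stage"] = "decommissioned"
        self.versions[version]["stage"] = "production"

    def production_version(self):
        for version, info in self.versions.items():
            if info["stage"] == "production":
                return version
        return None


registry = ModelRegistry()
v1 = registry.register("model-from-experiment-17")  # hypothetical artifact names
registry.deploy(v1)
v2 = registry.register("model-from-experiment-42")
registry.deploy(v2)  # updated deployment

print(registry.production_version())   # 2
print(registry.versions[v1]["stage"])  # decommissioned
```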

The feature store in an automated MLOps architecture

Features in machine learning

Select, manipulate, and transform raw data sources to create features used as input for our ML algorithms

Feature Engineering Examples:

Numerical transformations

Encoding of categories

Grouping of values

Constructing new features
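The four example techniques can be illustrated on a tiny made-up dataset. The column names, the age bands, and the choice of a log transform are assumptions for this sketch, not part of the course material.

```python
import math

# Made-up raw records, for illustration only.
raw = [
    {"income": 52000, "city": "Oslo", "age": 34, "debt": 13000},
    {"income": 31000, "city": "Bergen", "age": 61, "debt": 0},
]

cities = sorted({row["city"] for row in raw})  # ['Bergen', 'Oslo']


def age_group(age):
    """Grouping of values: bucket a continuous age into coarse bands."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle"
    return "senior"


def engineer(row):
    features = {
        # Numerical transformation: compress the long tail of income.
        "log_income": math.log1p(row["income"]),
        # Grouping of values.
        "age_group": age_group(row["age"]),
        # Constructing a new feature from two raw columns.
        "debt_to_income": row["debt"] / row["income"],
    }
    # Encoding of categories: one-hot encode the city.
    for city in cities:
        features[f"city_{city}"] = 1 if row["city"] == city else 0
    return features


for row in raw:
    print(engineer(row))
```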

Feature engineering in the enterprise

The feature store

Centralized feature repository

Avoid duplication of work with automation

Transformation standardization

Centralized storage

Feature serving for batch and real-time
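A toy version of these ideas: each feature is defined once in a central place, and the same definition serves both batch and real-time requests. `FeatureStore` and its methods are hypothetical names; this sketch computes features on read, whereas production feature stores usually precompute and store them.

```python
class FeatureStore:
    """Hypothetical in-memory store: one definition per feature, two ways to serve."""

    def __init__(self):
        self.definitions = {}  # feature name -> transformation function
        self.entities = {}     # entity id -> raw record

    def register_feature(self, name, transform):
        # Rejecting duplicates enforces a single standardized definition.
        if name in self.definitions:
            raise ValueError(f"feature {name!r} is already defined")
        self.definitions[name] = transform

    def ingest(self, entity_id, raw_record):
        self.entities[entity_id] = raw_record

    def get_online(self, entity_id, names):
        """Real-time serving: features for one entity."""
        record = self.entities[entity_id]
        return {n: self.definitions[n](record) for n in names}

    def get_batch(self, names):
        """Batch serving: features for every entity, e.g. to build a training set."""
        return {eid: self.get_online(eid, names) for eid in self.entities}


store = FeatureStore()
store.register_feature("debt_to_income", lambda r: r["debt"] / r["income"])
store.ingest("customer-1", {"income": 52000, "debt": 13000})
store.ingest("customer-2", {"income": 40000, "debt": 10000})

print(store.get_batch(["debt_to_income"]))
print(store.get_online("customer-1", ["debt_to_income"]))
```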

The feature store - Accelerated experimentation

Accelerated experimentation

Data extracts for experiments

Feature discovery

Avoids multiple definitions for identical features

The feature store - Continuous training

Continuous Training (CT)

Data extracts for automated pipelines in production

The feature store - Online predictions

Online predictions

Use pre-defined features for prediction services

The feature store - Environment symmetry

Avoids training-serving skew
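Training-serving skew arises when the serving path computes a feature differently from the training path. The sketch below contrasts a shared definition with a hand-written copy that has drifted; the scaling constants and function names are made up for illustration.

```python
def scale_income(income, mean=40000.0, std=10000.0):
    """Single shared definition; mean and std are made-up training statistics."""
    return (income - mean) / std


def training_features(rows):
    return [scale_income(r["income"]) for r in rows]


def serving_features_shared(request):
    # Environment symmetry: serving calls the same function as training.
    return scale_income(request["income"])


def serving_features_reimplemented(request):
    # A hand-written copy that drifted: it forgets to subtract the mean.
    return request["income"] / 10000.0


request = {"income": 52000}
print(training_features([request])[0])          # 1.2
print(serving_features_shared(request))         # 1.2
print(serving_features_reimplemented(request))  # 5.2
```

The model was trained on values like 1.2, so feeding it 5.2 at prediction time would silently degrade its output even though no code raised an error.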

The metadata store

What is metadata in MLOps?

Metadata is the information about the artifacts created during the execution of different components of an ML pipeline

Metadata examples:

Data versioning: Different versions of the same data are kept

Metadata about training artifacts such as hyperparameters

Pipeline execution logs

1 https://datacentricai.org/

Important aspects of metadata in ML

Data lineage

Reproducibility

Monitoring

Regulatory

The importance of metadata - Data lineage

Data lineage metadata tracks the information about data:

from its creation point

to the points of consumption
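One simple way to picture lineage metadata is as a graph in which each artifact records what it was derived from. The artifact names and the `lineage` helper below are hypothetical, chosen only to show a walk from a point of consumption back to the creation points.

```python
# Each artifact maps to the artifacts it was derived from (hypothetical names).
derived_from = {
    "raw_events.csv": [],
    "customers.csv": [],
    "cleaned_events": ["raw_events.csv"],
    "training_set": ["cleaned_events", "customers.csv"],
    "model_v3": ["training_set"],
}


def lineage(artifact):
    """Return every upstream artifact, walking back to the creation points."""
    seen = []
    stack = list(derived_from[artifact])
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.append(parent)
            stack.extend(derived_from[parent])
    return seen


print(sorted(lineage("model_v3")))
# ['cleaned_events', 'customers.csv', 'raw_events.csv', 'training_set']
```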

The importance of metadata - Reproducibility

The metadata about our machine learning experiments:

Allows others to reproduce our results

Increases trust in our ML systems

Introduces scientific rigor to our ML process

The importance of metadata - Monitoring

Metadata allows machine learning engineers to:

Follow the execution of the different parts of the MLOps pipeline

Check the status of the ML system at any time

Example monitoring tool

1 https://cloud.google.com/stackdriver/docs/solutions/gke/observing

The metadata store

Centralized place to manage all the MLOps metadata about:

experiments (logs)

artifacts

models

pipelines

It has a user interface that allows us to:

read and write all model-related metadata

1 https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation-pipelines-in-machine-learning
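The read-and-write role of a metadata store can be sketched with a single table in an in-memory SQLite database. The table layout, the helper names, and the sample entries are assumptions for illustration; a real metadata store would add a user interface, access control, and durable storage.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
conn.execute(
    "CREATE TABLE metadata (kind TEXT, name TEXT, payload TEXT, PRIMARY KEY (kind, name))"
)


def write_metadata(kind, name, payload):
    """Store a JSON payload under (kind, name), overwriting any earlier entry."""
    conn.execute(
        "INSERT OR REPLACE INTO metadata VALUES (?, ?, ?)",
        (kind, name, json.dumps(payload)),
    )


def read_metadata(kind, name):
    """Return the stored payload, or None if nothing was written for the key."""
    row = conn.execute(
        "SELECT payload FROM metadata WHERE kind = ? AND name = ?", (kind, name)
    ).fetchone()
    return json.loads(row[0]) if row else None


# Hypothetical entries covering experiments, models, and pipelines.
write_metadata("experiment", "run-7", {"max_depth": 5, "recall": 0.84})
write_metadata("model", "churn-v3", {"trained_by": "run-7", "stage": "production"})
write_metadata("pipeline", "nightly-train", {"status": "succeeded"})

print(read_metadata("model", "churn-v3"))
```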

The metadata store in an MLOps architecture

Metadata store in fully automated MLOps

It enables automatic monitoring of the fully automated MLOps pipeline

It facilitates automatic incident response, for example:

Automatic model re-training

Automatic rollbacks
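How monitored metadata might drive an automatic response can be sketched as a small decision rule. The metric, the threshold, and the rule itself are illustrative assumptions; the course does not prescribe a specific policy.

```python
def respond(current_recall, previous_recall, threshold=0.80):
    """Pick an automatic response from monitored metadata.

    The threshold and the rule are illustrative assumptions.
    """
    if current_recall >= threshold:
        return "no_action"
    if previous_recall >= threshold:
        # The previous model version was healthy, so fall back to it.
        return "rollback"
    # No healthy version to fall back to: train a new model.
    return "retrain"


print(respond(0.85, 0.83))  # no_action
print(respond(0.72, 0.83))  # rollback
print(respond(0.72, 0.75))  # retrain
```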
