Sentimental Analysis Using NLP

Uploaded by Vicky Nagar

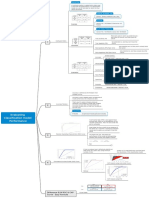

1. Finding the Problem: Identifying the sentiments from the Tweet text

2. Collecting Dataset: Twitter Sentiment dataset

3. Load & Summarize dataset

4. Segregating Dataset into X & Y

5. Removing the special characters and symbols with Regular Expressions

6. Removing Stop words and Extracting Features

7. Splitting Dataset into Train and Test

8. Load Machine Learning Algorithm

9. Predict with Test Dataset
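Steps 5 to 7 can be sketched in plain Python. This is a minimal illustration, not the author's implementation: the stop-word list, the sample tweets, their labels, and the helper names `clean_tweet`, `extract_features`, and `train_test_split` are all assumptions made for the example, and the features are simple word counts (bag of words).

```python
import random
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "the", "is", "am", "are", "i", "this", "to", "and", "of", "it", "so"}

def clean_tweet(text):
    """Step 5: remove links, @mentions, and special characters with regular expressions."""
    text = text.lower()
    text = re.sub(r"https?://\S+|@\w+", " ", text)  # links and mentions
    text = re.sub(r"[^a-z\s]", " ", text)           # digits, punctuation, symbols
    return re.sub(r"\s+", " ", text).strip()        # collapse repeated whitespace

def extract_features(text):
    """Step 6: drop stop words, then count the remaining words (bag of words)."""
    tokens = [w for w in clean_tweet(text).split() if w not in STOP_WORDS]
    return Counter(tokens)

def train_test_split(X, y, test_ratio=0.25, seed=0):
    """Step 7: shuffle the row indices and hold out a fraction for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, int(len(X) * test_ratio))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test

# Hypothetical tweets with labels (1 = positive, 0 = negative).
tweets = [
    "I love this phone!!! #happy",
    "@airline worst flight ever :( http://t.co/xyz",
    "The movie is so good",
    "This is an awful, awful day...",
]
labels = [1, 0, 1, 0]

X = [extract_features(t) for t in tweets]
X_train, X_test, y_train, y_test = train_test_split(X, labels)

print(clean_tweet(tweets[1]))  # worst flight ever
print(dict(X[3]))              # {'awful': 2, 'day': 1}
```

The training split would then be passed to whichever machine learning algorithm is loaded in step 8, and the held-out split is used for the predictions in step 9.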

10. Evaluating with Confusion Matrix

Confusion Matrix

A confusion matrix is a table that is often used to describe the performance of a classification model on a set of test data for which the true values are known.

Example

COVID-19: Binary prediction (Yes / No)

Total no. of patients: 165

Real data:

Covid Yes: 105 patients

No Covid: 60 patients

Our ML predicted data:

Covid Yes: 110 patients

No Covid: 55 patients

Elements

True positives (TP): These are cases in which we predicted yes (they have COVID), and they do have COVID.

True negatives (TN): We predicted no, and they don't have COVID.

False positives (FP): We predicted yes, but they don't actually have COVID. (Also known as a "Type I error.")

False negatives (FN): We predicted no, but they actually have COVID. (Also known as a "Type II error.")
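The four elements can be counted directly from paired lists of actual and predicted labels. The sketch below is illustrative only: the function name `confusion_counts` is hypothetical, and the label lists are rebuilt by hand so that they match the example's totals (105 actual yes, 60 actual no) and the cell counts used in the document's calculations (TP = 100, TN = 50, FP = 10, FN = 5).

```python
def confusion_counts(y_true, y_pred, positive="yes"):
    """Count TP, TN, FP, FN for a binary problem from paired label lists."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted yes, actually yes
            else:
                fp += 1  # predicted yes, actually no (Type I error)
        else:
            if actual == positive:
                fn += 1  # predicted no, actually yes (Type II error)
            else:
                tn += 1  # predicted no, actually no
    return tp, tn, fp, fn

# Labels constructed to reproduce the example: 105 patients with COVID, 60 without.
y_true = ["yes"] * 105 + ["no"] * 60
y_pred = ["yes"] * 100 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 50

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)    # 100 50 10 5
print(tp + fp, tn + fn)  # 110 55 (predicted yes, predicted no)
```

The second printed line matches the predicted totals in the example: 110 patients predicted as Covid Yes and 55 as No Covid.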

Example: Accuracy & Loss Calculation from Confusion Matrix

Calculation

Accuracy: Overall correct.

(TP+TN)/total = (100+50)/165 = 0.91

Misclassification Rate (Error Rate): Overall wrong.

(FP+FN)/total = (10+5)/165 = 0.09

Other Calculation

True Positive Rate (Sensitivity or Recall):

TP/actual yes = 100/105 = 0.95

False Positive Rate:

FP/actual no = 10/60 = 0.17

True Negative Rate (Specificity):

TN/actual no = 50/60 = 0.83

Precision:

TP/predicted yes = 100/110 = 0.91

Prevalence:

actual yes/total = 105/165 = 0.64
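The same calculations can be reproduced in a few lines of Python. This is a small sketch under the example's numbers (TP = 100, TN = 50, FP = 10, FN = 5); the function name `confusion_metrics` and the dictionary keys are assumptions chosen for illustration.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Derive the rates listed above from the four confusion-matrix counts."""
    total = tp + tn + fp + fn
    actual_yes = tp + fn
    actual_no = tn + fp
    predicted_yes = tp + fp
    return {
        "accuracy": (tp + tn) / total,
        "misclassification_rate": (fp + fn) / total,
        "true_positive_rate": tp / actual_yes,   # sensitivity / recall
        "false_positive_rate": fp / actual_no,
        "true_negative_rate": tn / actual_no,    # specificity
        "precision": tp / predicted_yes,
        "prevalence": actual_yes / total,
    }

metrics = confusion_metrics(tp=100, tn=50, fp=10, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Two relationships make a quick sanity check: accuracy and the misclassification rate sum to 1, as do the false positive rate and the true negative rate.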
