Breast Cancer Tumor Prediction Using XGBOOST

Uploaded by Vicky Nagar

1 Problem: Breast Cancer Classification

Classes: benign, malignant, etc.

Breast cancer is classified based on cell characteristics

2 Dataset structure

dataset.shape

(683, 11), i.e. 683 rows and 11 columns

3 Summarize Dataset

dataset.head(5)

4 Segregating Dataset into X & Y

5 Splitting Dataset into Train & Test
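The two steps above can be sketched in plain Python. This is a minimal illustration, not the author's code: the toy rows, the helper names, and the assumption that the class label is the last column are all hypothetical. In practice a library routine such as scikit-learn's train_test_split is commonly used instead.

```python
import random


def split_features_and_label(rows):
    """Separate each row into features (X) and class label (Y).

    Assumption: the class label is the last column of every row.
    """
    X = [row[:-1] for row in rows]
    y = [row[-1] for row in rows]
    return X, y


def train_test_split_rows(X, y, test_fraction=0.25, seed=0):
    """Shuffle the row indices and hold out a fraction of them for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = int(round(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Hypothetical toy rows: two feature columns followed by a class label.
rows = [[5, 1, 0], [3, 1, 0], [8, 10, 1], [1, 1, 0],
        [10, 7, 1], [2, 1, 0], [9, 8, 1], [4, 2, 0]]
X, y = split_features_and_label(rows)
X_train, X_test, y_train, y_test = train_test_split_rows(X, y, test_fraction=0.25)
print(len(X_train), len(X_test))
```

Fixing the seed keeps the split reproducible, which makes later accuracy comparisons easier to interpret.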

6 Algorithm

Bias & Variance

Bias: the difference between the average prediction of our model and the actual value. A model with high bias leads to high error on both training and test data.

Variance: the variability of model prediction for a given data point (data spread). A model with high variance pays a lot of attention to the training data and does not generalize to new data.
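As a small worked example of these two definitions, the sketch below computes both quantities for a single data point. The prediction values are hypothetical, and it assumes the predictions come from several models trained on different samples of the training data.

```python
from statistics import mean, pvariance


def bias_and_variance(predictions, actual):
    """Return (bias, variance) of a set of predictions for one data point.

    bias     = average prediction minus the actual value
    variance = spread of the predictions around their own average
    """
    avg_prediction = mean(predictions)
    bias = avg_prediction - actual
    variance = pvariance(predictions)
    return bias, variance


# Hypothetical predictions for the same data point from five models.
predictions = [2.5, 3.5, 3.0, 2.0, 4.0]
actual = 4.0
bias, variance = bias_and_variance(predictions, actual)
print(bias, variance)
```

Here the average prediction (3.0) sits below the actual value, so the bias is negative, while the variance only reflects how much the five predictions disagree with each other.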

Boosting

Bagging only controls for high variance in a model.

Overview: Boosting algorithms play a crucial role in dealing with both bias and variance. Boosting is a sequential technique that works on the principle of ensemble. It combines a set of weak learners and delivers improved prediction accuracy.

Process: At any instant t, the model outcomes are weighted based on the outcomes of the previous instant t-1. The outcomes predicted correctly are given a lower weight and the ones misclassified are weighted higher.
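The reweighting idea can be illustrated with an AdaBoost-style update. This specific formula is an assumption chosen for illustration (the outline does not name one), and it presumes the weak learner does better than chance. The labels and predictions are hypothetical.

```python
import math


def reweight(weights, y_true, y_pred):
    """Lower the weight of correctly predicted samples and raise the weight
    of misclassified ones, then renormalize so the weights sum to 1."""
    # Weighted error rate of the model from the previous instant (t-1).
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / sum(weights)
    error = min(max(error, 1e-10), 1 - 1e-10)  # keep the logarithm finite
    alpha = 0.5 * math.log((1 - error) / error)
    updated = [w * math.exp(alpha if t != p else -alpha)
               for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return [w / total for w in updated]


weights = [0.25, 0.25, 0.25, 0.25]   # equal weights at the start
y_true = [1, 0, 1, 0]
y_pred = [1, 0, 0, 0]                # the third sample is misclassified
new_weights = reweight(weights, y_true, y_pred)
print(new_weights)
```

The next weak learner would be trained with new_weights, so it concentrates on the sample the previous one got wrong.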

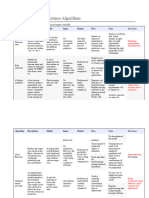

Gradient Boosting Machine (GBM)

Types of Parameters

Tree-Specific Parameters: affect each individual tree.

Boosting Parameters: affect the boosting operation.

Miscellaneous Parameters: affect the overall functioning.

Steps of GBM

(1) Initialize the outcome.

(2) Iterate from 1 to the total number of trees:

Update the weights for targets based on the previous run.

Fit the model on a selected subsample of data.

Make predictions on the full set of observations.

Update the output with the current results, taking into account the learning rate.

(3) Return the final output.
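The steps above can be sketched with one-split regression stumps on a single feature. This is a simplified illustration under stated assumptions: squared-error loss (so "updating the targets" means taking residuals), a hypothetical toy dataset, and helper names invented for this example. It is not the implementation of any particular library.

```python
import random
from statistics import mean


def fit_stump(xs, targets):
    """Fit a one-split regression stump on a single feature (squared error)."""
    best = None
    for threshold in sorted(set(xs)):
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        if not right:
            continue  # a split must leave points on both sides
        left_value, right_value = mean(left), mean(right)
        error = (sum((t - left_value) ** 2 for t in left)
                 + sum((t - right_value) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    if best is None:  # every x is identical, so predict one constant
        constant = mean(targets)
        return xs[0], constant, constant
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_trees=20, learning_rate=0.3, subsample=0.8, seed=0):
    rng = random.Random(seed)
    # (1) Initialize the outcome with a constant (here the mean target).
    base = mean(ys)
    output = [base] * len(ys)
    stumps = []
    # (2) Iterate from 1 to the total number of trees.
    for _ in range(n_trees):
        # Update the targets based on the previous run (residuals).
        residuals = [y - out for y, out in zip(ys, output)]
        # Fit the model on a selected subsample of the data.
        k = max(1, min(len(xs), int(subsample * len(xs))))
        idx = rng.sample(range(len(xs)), k)
        stump = fit_stump([xs[i] for i in idx], [residuals[i] for i in idx])
        # Make predictions on the full set of observations.
        update = [predict_stump(stump, x) for x in xs]
        # Update the output, scaled by the learning rate.
        output = [out + learning_rate * u for out, u in zip(output, update)]
        stumps.append(stump)
    # (3) Return the final output, plus what is needed to predict later.
    return base, stumps, output


def predict(base, stumps, learning_rate, x):
    """Score a new observation with the fitted ensemble."""
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Hypothetical toy data: one feature, binary target encoded as 0/1.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
base, stumps, fitted = gradient_boost(xs, ys, n_trees=20, learning_rate=0.3, subsample=0.8)
print([round(f, 2) for f in fitted])
```

A smaller learning rate makes each tree contribute less, which usually calls for more trees but tends to give a smoother fit.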

XGBOOST - eXtreme Gradient Boosting

Overview

(1) Ensemble learning: it also combines the results of many models.

(2) Like Random Forests, it uses decision trees as base learners.

(3) Individual decision trees are low-bias, high-variance models.

(4) The trees used by XGBoost are different: instead of containing a single decision in each “leaf” node, they contain real-valued scores of whether an instance belongs to a group. After the tree reaches max depth, the decision can be made by converting the scores into categories using a certain threshold.

(5) Regularization: it has regularization, whereas the GBM implementation has none. This reduces overfitting.

(6) It implements parallel processing.

(7) Tree pruning: GBM would stop splitting a node when it encounters a negative loss in the split, so it is more of a greedy algorithm. XGBoost, on the other hand, makes splits up to the max_depth specified, then prunes the tree backwards and removes splits beyond which there is no positive gain.

(8) Built-in cross-validation.
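To make the point about leaf scores concrete, the sketch below turns real-valued scores into a category. It assumes a binary problem in which the scores from all trees are summed and passed through the logistic function before thresholding; the score values and the function name are hypothetical.

```python
import math


def predict_class(leaf_scores, threshold=0.5):
    """Sum the real-valued leaf scores (one per tree), map the total to a
    probability with the logistic function, and apply a threshold."""
    raw_score = sum(leaf_scores)
    probability = 1.0 / (1.0 + math.exp(-raw_score))
    label = 1 if probability >= threshold else 0
    return label, probability


# Hypothetical leaf scores that one instance receives from three trees.
label, probability = predict_class([0.4, 0.3, -0.1])
print(label, round(probability, 3))
```

Raising the threshold trades fewer positive predictions for higher confidence in each one, which can matter when the two kinds of error have different costs.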

Parameters

General Parameters: affect the overall functioning (for example, which booster is used).

Booster Parameters: affect the boosting operation.

Learning Task Parameters: guide the optimization performed (the objective and the evaluation metric).
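A parameter dictionary grouped by these three types might look like the following. The parameter names are standard XGBoost names, but every value here is an illustrative assumption rather than a setting taken from this document.

```python
# General parameters: overall functioning.
general_params = {
    "booster": "gbtree",   # tree-based booster
    "nthread": 4,          # number of parallel threads (assumed value)
}

# Booster parameters: control the boosting operation and each tree.
booster_params = {
    "eta": 0.1,            # learning rate
    "max_depth": 4,        # maximum depth of each tree
    "subsample": 0.8,      # fraction of rows sampled per tree
    "gamma": 0.0,          # minimum loss reduction required to keep a split
    "lambda": 1.0,         # L2 regularization on leaf weights
}

# Learning task parameters: what is optimized and how it is measured.
learning_task_params = {
    "objective": "binary:logistic",
    "eval_metric": "logloss",
}

# A single flat dictionary of this shape is what xgboost.train takes as params.
xgb_params = {**general_params, **booster_params, **learning_task_params}
print(sorted(xgb_params))
```

Keeping the three groups separate until the final merge makes it easier to see which knob belongs to which category when tuning.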

7 Training with XGBOOST

8 Confusion Matrix
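A confusion matrix for the two-class case can be computed by hand as sketched below. The layout (rows are actual classes, columns are predicted classes) and the toy labels are assumptions for illustration, with 1 standing for the positive class.

```python
def confusion_matrix_binary(y_true, y_pred, positive=1):
    """Return [[TN, FP], [FN, TP]]: rows are actual, columns are predicted."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return [[tn, fp], [fn, tp]]


# Hypothetical test labels and model predictions.
y_true = [0, 0, 1, 1, 0, 1, 0, 0]
y_pred = [0, 1, 1, 0, 0, 1, 0, 0]
matrix = confusion_matrix_binary(y_true, y_pred)
accuracy = (matrix[0][0] + matrix[1][1]) / len(y_true)
print(matrix, accuracy)
```

For a tumor classifier the false-negative cell is usually the one to watch, since it counts malignant cases predicted as benign.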

9 K-Fold Cross Validation

It is a procedure used to estimate the skill of the model on new data.

The parameter k refers to the number of groups that a given data sample is split into.
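The splitting itself can be sketched as an index generator. This minimal version assumes the rows have already been shuffled and uses contiguous folds; the function name is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds.

    Assumption: rows are already shuffled, so contiguous folds are fine.
    """
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


splits = list(k_fold_indices(10, 3))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))
```

Each sample lands in the test group exactly once, so averaging the k test scores uses every row for evaluation while never scoring a row the model was trained on in that round.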
