IP Unit 5 Notes

Uploaded by kartik gupta

Unit 5.

IMAGE COMPRESSION AND

RECOGNITION

IMAGE COMPRESSION

• Image compression deals with reducing the amount of data required to represent a digital image by removing redundant data.

• Images can be represented in digital format in many ways.

• Encoding the contents of a 2-D image in a raw bitmap (raster)

format is usually not economical and may result in very large

files.

• Since raw image representations usually require a large

amount of storage space (and proportionally long

transmission times in the case of file uploads/ downloads),

most image file formats employ some type of compression.

• The need to save storage space and shorten transmission

time, as well as the human visual system tolerance to a

modest amount of loss, have been the driving factors behind

image compression techniques.

Goal of image compression

• The goal of image compression is to reduce the amount of

data required to represent a digital image.

Data ≠ Information:

• Data and information are not synonymous terms!

• Data is the means by which information is conveyed.

• Data compression aims to reduce the amount of data

required to represent a given quality of information

while preserving as much information as possible.

• The same amount of information can be represented by various amounts of data.

• Ex1: You have an extra class after 3.50 p.m.

• Ex2: An extra class has been scheduled for you after the 7th hour.

• Ex3: After 3.50 p.m. you should attend an extra class.

Definition of compression ratio
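
A minimal sketch, assuming the usual textbook definition: if b and b' are the numbers of bits in two representations of the same information, the compression ratio is C = b / b' and the relative data redundancy of the larger representation is R = 1 - 1/C. The function names and the image size below are hypothetical and chosen only for illustration.

```python
def compression_ratio(bits_original: int, bits_compressed: int) -> float:
    """Compression ratio C = b / b'."""
    if bits_original <= 0 or bits_compressed <= 0:
        raise ValueError("bit counts must be positive")
    return bits_original / bits_compressed


def relative_redundancy(ratio: float) -> float:
    """Relative data redundancy R = 1 - 1/C."""
    return 1.0 - 1.0 / ratio


# Hypothetical example: a 256 x 256 image at 8 bits/pixel, stored in 65,536 bits.
b = 256 * 256 * 8
b_prime = 65_536
c = compression_ratio(b, b_prime)
r = relative_redundancy(c)
print(f"C = {c:.1f}, R = {r:.3f}")
```

With these assumed numbers, C = 8 means the original uses 8 bits for every bit of the compressed form, and R = 0.875 means 87.5% of the original data is redundant.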

Definitions of Data Redundancy

• Two-dimensional intensity arrays suffer from three

principal types of data redundancies that can be

identified and exploited:

1. Coding redundancy

2. Spatial and temporal redundancy.

3. Irrelevant information.

Coding redundancy

• Code: a list of symbols (letters, numbers, bits, etc.).

• Code word: a sequence of symbols used to represent a piece of information or an event (e.g., gray levels).

• Code word length: the number of symbols in each code word.

Spatial and Temporal Redundancy

• In the corresponding 2-D intensity array:

1. All 256 intensities are equally probable.

As Fig. 8.2 shows, the histogram of the image is

uniform.

2. Because the intensity of each line was selected

randomly, its pixels are independent of one another

in the vertical direction.

3. Because the pixels along each line are identical, they

are maximally correlated (completely dependent on

one another) in the horizontal direction.

Irrelevant Information

• Most 2-D intensity arrays contain information that is

ignored by the human visual system and/or

extraneous to the intended use of the image.

• It is redundant in the sense that it is not used.

• The main components of a general image encoder are:

• Mapper: transforms the input data into a (usually nonvisual)

format designed to reduce interpixel redundancies in the

input image. This operation is generally reversible and may or

may not directly reduce the amount of data required to

represent the image.

• Quantizer: reduces the accuracy of the mapper’s output in accordance with some pre-established fidelity criterion. It reduces the psychovisual redundancies of the input image. This operation is not reversible and must be omitted if lossless compression is desired.

• Symbol (entropy) encoder: creates a fixed- or variable-length

code to represent the quantizer’s output and maps the output

in accordance with the code. In most cases, a variable-length

code is used. This operation is reversible.

Some Basic Compression Methods

• Error-free compression

• Error-free compression techniques usually rely on entropy-based

encoding algorithms.

• The concept of entropy is mathematically described by the equation:

$$H(z) = -\sum_{j=1}^{J} P(a_j) \log_2 P(a_j)$$

where:

• $a_j$ is a symbol produced by the information source

• $P(a_j)$ is the probability of that symbol

• $J$ is the total number of different symbols

• $H(z)$ is the entropy of the source, in bits per symbol when the logarithm is taken to base 2.

• The concept of entropy provides an upper bound on how much

compression can be achieved, given the probability distribution of

the source.

• In other words, it establishes a theoretical limit on the amount of

lossless compression that can be achieved using entropy encoding

techniques alone.
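
The entropy bound above can be checked numerically. The following is a minimal sketch using base-2 logarithms (bits per symbol); the function names and the example probabilities are assumptions made for illustration.

```python
import math
from collections import Counter


def entropy(probabilities):
    """H = -sum p * log2(p), skipping zero-probability symbols."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def entropy_of_samples(samples):
    """Estimate entropy from the relative frequencies (histogram) of the samples."""
    counts = Counter(samples)
    total = len(samples)
    return entropy(count / total for count in counts.values())


# A skewed four-symbol source needs fewer than 2 bits/symbol on average.
h_skewed = entropy([0.5, 0.25, 0.125, 0.125])

# 256 equally probable intensities need the full 8 bits/symbol.
h_uniform = entropy([1 / 256] * 256)

print(h_skewed, h_uniform)
```

A uniform histogram leaves nothing for an entropy coder to exploit, while any skew in the probabilities lowers the bound.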

Huffman Coding

• One of the most popular techniques for removing coding

redundancy is due to Huffman (Huffman [1952]).

• When coding the symbols of an information source

individually, Huffman coding yields the smallest possible

number of code symbols per source symbol.

• The first step in Huffman’s approach is to create a series

of source reductions by ordering the probabilities of the

symbols under consideration and combining the lowest

probability symbols into a single symbol that replaces

them in the next source reduction.

• Figure 8.7 illustrates this process for binary coding (K-ary

Huffman codes can also be constructed).

• The second step in Huffman’s procedure is to code each

reduced source, starting with the smallest source and

working back to the original source.

• The minimal-length binary code for a two-symbol source, of course, consists of the symbols 0 and 1.

• As Fig. 8.8 shows, these symbols are assigned to the two

symbols on the right (the assignment is arbitrary; reversing

the order of the 0 and 1 would work just as well).

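• The average length of this code is $L_{avg} = \sum_{k} l(a_k) P(a_k)$, where $l(a_k)$ is the length of the code word assigned to symbol $a_k$, and the entropy of the source is 2.14 bits/symbol.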

• Huffman’s procedure creates the optimal code for a

set of symbols and probabilities subject to the

constraint that the symbols be coded one at a time.
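
The two steps of Huffman's procedure (source reduction, then code assignment) can be sketched with a priority queue. This is an illustrative implementation, not the exact layout of the figures. The six-symbol source below is an assumption, chosen because its entropy matches the 2.14 bits/symbol quoted above. The symbol names are hypothetical.

```python
import heapq
import math
from itertools import count


def huffman_code(probabilities):
    """Return {symbol: bit string} for a dict {symbol: probability}."""
    tiebreak = count()  # unique counter so heap entries never compare dicts
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probabilities.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        return {sym: "0" for sym in probabilities}
    while len(heap) > 1:
        # Source reduction: merge the two least probable (compound) symbols.
        p0, _, codes0 = heapq.heappop(heap)
        p1, _, codes1 = heapq.heappop(heap)
        # Code assignment: prefix one group with 0 and the other with 1.
        merged = {s: "0" + c for s, c in codes0.items()}
        merged.update({s: "1" + c for s, c in codes1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


# Assumed source for illustration.
source = {"a1": 0.1, "a2": 0.4, "a3": 0.06, "a4": 0.1, "a5": 0.04, "a6": 0.3}
code = huffman_code(source)
average_length = sum(source[s] * len(code[s]) for s in source)
source_entropy = -sum(p * math.log2(p) for p in source.values())

for symbol in sorted(code):
    print(symbol, code[symbol])
print(f"average length = {average_length:.2f} bits/symbol")
print(f"entropy        = {source_entropy:.2f} bits/symbol")
```

The individual code words depend on how ties are broken, but the average length does not: every valid Huffman code for this source averages 2.2 bits/symbol, slightly above the entropy.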

Variable Length Coding (VLC)

• Most entropy-based encoding techniques rely on assigning variable-length codewords to each symbol, with the most likely symbols assigned the shortest codewords.

• In the case of image coding, the symbols may be raw

pixel values or the numerical values obtained at the

output of the mapper stage (e.g., differences between

consecutive pixels, run-lengths, etc.).

• The most popular entropy-based encoding technique is

the Huffman code.

• It yields the smallest number of information units (bits) per source symbol when the symbols are coded one at a time.

Arithmetic Coding

• Unlike the variable-length codes of the previous

two sections, arithmetic coding generates

nonblock codes.

• In arithmetic coding, which can be traced to the

work of Elias (see Abramson [1963]), a one-to-

one correspondence between source symbols

and code words does not exist.

• Instead, an entire sequence of source symbols (or

message) is assigned a single arithmetic code

word.

• Figure 8.12 illustrates the basic arithmetic coding

process.
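
The interval-narrowing idea behind arithmetic coding can be sketched as follows. The four-symbol model and the five-symbol message are assumptions made for illustration, and exact fractions are used to avoid rounding issues. This computes only the final interval; a practical coder would also emit bits incrementally and signal the end of the message.

```python
from fractions import Fraction


def arithmetic_interval(message, model):
    """Return the [low, high) interval that represents the whole message.

    `model` maps each symbol to its probability; dict order fixes the
    order of the subintervals inside [0, 1).
    """
    cumulative = {}
    start = Fraction(0)
    for symbol, p in model.items():
        cumulative[symbol] = (start, start + p)
        start += p

    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        width = high - low
        sym_low, sym_high = cumulative[symbol]
        # Narrow the current interval to the symbol's share of it.
        low, high = low + width * sym_low, low + width * sym_high
    return low, high


# Assumed source model and message.
model = {
    "a1": Fraction(1, 5),
    "a2": Fraction(1, 5),
    "a3": Fraction(2, 5),
    "a4": Fraction(1, 5),
}
low, high = arithmetic_interval(["a1", "a2", "a3", "a3", "a4"], model)
print(float(low), float(high))
```

Any number inside the final interval identifies the entire message, which is why no one-to-one mapping between source symbols and code words is needed.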

LZW Coding

• In this section, we consider an error-free

compression approach that also addresses

spatial redundancies in an image.

• The technique, called Lempel-Ziv-Welch (LZW)

coding, assigns fixed-length code words to

variable length sequences of source symbols.
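
A minimal sketch of LZW for 8-bit symbols, assuming an initial dictionary of the 256 single-pixel values and an unbounded dictionary (real formats cap the code width). The function names and the sample pixel sequence are hypothetical.

```python
def lzw_encode(symbols, alphabet_size=256):
    """Encode a sequence of integers as a list of dictionary codes."""
    dictionary = {(i,): i for i in range(alphabet_size)}
    next_code = alphabet_size
    current = ()
    codes = []
    for symbol in symbols:
        candidate = current + (symbol,)
        if candidate in dictionary:
            current = candidate  # keep growing the recognized sequence
        else:
            codes.append(dictionary[current])
            dictionary[candidate] = next_code  # learn the new sequence
            next_code += 1
            current = (symbol,)
    if current:
        codes.append(dictionary[current])
    return codes


def lzw_decode(codes, alphabet_size=256):
    """Rebuild the symbol sequence; the dictionary is reconstructed on the fly."""
    if not codes:
        return []
    dictionary = {i: (i,) for i in range(alphabet_size)}
    next_code = alphabet_size
    previous = dictionary[codes[0]]
    output = list(previous)
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:  # code refers to the entry being built
            entry = previous + (previous[0],)
        else:
            raise ValueError(f"invalid LZW code: {code}")
        output.extend(entry)
        dictionary[next_code] = previous + (entry[0],)
        next_code += 1
        previous = entry
    return output


# Hypothetical 4 x 4 image, scanned row by row: each row is 39 39 126 126.
pixels = [39, 39, 126, 126] * 4
codes = lzw_encode(pixels)
print(codes)
```

The 16 pixels are represented by 10 codes, and the decoder needs no transmitted dictionary because it rebuilds the same table from the codes themselves.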

Run-length encoding (RLE)

• RLE is one of the simplest data compression

techniques.

• It consists of replacing a sequence (run) of

identical symbols by a pair containing the symbol

and the run length.

• It is used as the primary compression technique

in the 1-D CCITT Group 3 fax standard and in

conjunction with other techniques in the JPEG

image compression standard.
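
A minimal sketch of the (symbol, run length) idea. The function names and the sample row are hypothetical, and this is not the bit-level format used by the fax or JPEG standards.

```python
from itertools import groupby


def rle_encode(symbols):
    """Replace each run of identical symbols by a (symbol, run length) pair."""
    return [(value, len(list(run))) for value, run in groupby(symbols)]


def rle_decode(pairs):
    """Expand (symbol, run length) pairs back into the original sequence."""
    output = []
    for value, length in pairs:
        output.extend([value] * length)
    return output


row = [255, 255, 255, 255, 0, 0, 255, 255, 255]
pairs = rle_encode(row)
print(pairs)
```

RLE pays off only when runs are long, as in bilevel documents. On noisy data, where most runs have length 1, the pairs take more space than the input.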

Differential coding

• Differential coding techniques exploit the interpixel redundancy in digital images.

• The basic idea consists of applying a simple difference operator to neighboring pixels to calculate a difference image, whose values are likely to fall within a much narrower range than the original gray-level range.

• As a consequence of this narrower distribution –

and consequently reduced entropy – Huffman

coding or other VLC schemes will produce shorter

codewords for the difference image.
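
The effect on the value range and the entropy can be illustrated on a single image row. This is a minimal sketch assuming a horizontal left-neighbor difference and a non-empty row. The sample values and function names are hypothetical.

```python
import math
from collections import Counter


def difference_row(row):
    """Keep the first sample; replace every other sample by its change from the left neighbor."""
    return [row[0]] + [row[i] - row[i - 1] for i in range(1, len(row))]


def restore_row(diffs):
    """Invert difference_row with a running sum."""
    row = [diffs[0]]
    for d in diffs[1:]:
        row.append(row[-1] + d)
    return row


def entropy(samples):
    """First-order entropy estimate in bits per sample."""
    counts = Counter(samples)
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


row = [100, 101, 102, 102, 103, 104, 104, 105, 106, 107, 107, 108]
diffs = difference_row(row)

print("differences:", diffs[1:])
print("entropy of pixels:      ", round(entropy(row), 3))
print("entropy of differences: ", round(entropy(diffs[1:]), 3))
```

In this smooth row the differences take only the values 0 and 1, so a variable-length code can represent them with far fewer bits than the original gray levels.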

Predictive coding

• Predictive coding techniques are another way of exploiting interpixel redundancy, in which the basic idea is to encode only the new information in each pixel.

• This new information is usually defined as the

difference between the actual and the

predicted value of that pixel.

Lossless predictive coding

• Figure 8.33 shows the basic components of a lossless

predictive coding system.

• The system consists of an encoder and a decoder,

each containing an identical predictor.

• As successive samples of the discrete-time input signal, f(n), are introduced to the encoder, the predictor generates the anticipated value of each sample based on a specified number of past samples.

• The output of the predictor is then rounded to the nearest integer, denoted $\hat{f}(n)$, and used to form the difference or prediction error

$$e(n) = f(n) - \hat{f}(n)$$
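
The encoder/decoder pair with an identical predictor can be sketched as below. This assumes a linear predictor with hypothetical coefficients, a prediction of 0 until enough past samples exist, and round-half-up rounding. The symbol encoder that would normally compress the prediction errors is omitted.

```python
import math


def predict(past, coefficients):
    """Rounded linear prediction from the most recent samples (0 if too few exist)."""
    order = len(coefficients)
    if len(past) < order:
        return 0
    recent = past[-order:][::-1]  # f(n-1), f(n-2), ...
    value = sum(a * f for a, f in zip(coefficients, recent))
    return math.floor(value + 0.5)


def encode(samples, coefficients):
    """Prediction errors e(n) = f(n) - prediction from the true past samples."""
    return [f - predict(samples[:n], coefficients) for n, f in enumerate(samples)]


def decode(errors, coefficients):
    """Rebuild f(n) = e(n) + prediction from the already decoded samples."""
    decoded = []
    for e in errors:
        decoded.append(e + predict(decoded, coefficients))
    return decoded


samples = [100, 102, 104, 107, 109, 110, 112, 115]
previous_pixel = [1.0]       # predict each sample from its predecessor
two_tap_average = [0.5, 0.5]  # predict from the mean of the last two samples

errors = encode(samples, previous_pixel)
print(errors)
```

Because the decoder runs the same predictor on the samples it has already reconstructed, its predictions match the encoder's exactly and the original signal is recovered without loss.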

Dictionary-based coding

• Dictionary-based coding techniques are based on the

idea of incrementally building a dictionary (table) while

receiving the data.

• Unlike VLC techniques, dictionary-based techniques

use fixed-length codewords to represent variable-

length strings of symbols that commonly occur

together.

• Consequently, there is no need to calculate, store, or

transmit the probability distribution of the source,

which makes these algorithms extremely convenient

and popular.

• The best-known variant of dictionary-based coding

algorithms is the LZW (Lempel-Ziv-Welch) encoding

scheme, used in popular multimedia file formats such

as GIF, TIFF, and PDF.

Lossy compression

• Lossy compression techniques deliberately introduce a certain amount of distortion to the encoded image, exploiting the psychovisual redundancies of the original image.

• These techniques must find an appropriate

balance between the amount of error (loss)

and the resulting bit savings.

Quantization

• The quantization stage is at the core of any lossy image encoding

algorithm.

• Quantization, at the encoder side, means partitioning the input data range into a smaller set of values.

• There are two main types of quantizers: scalar quantizers and

vector quantizers.

• A scalar quantizer partitions the domain of input values into a

smaller number of intervals.

• If the output intervals are equally spaced, which is the simplest way

to do it, the process is called uniform scalar quantization;

otherwise, for reasons usually related to minimization of total

distortion, it is called nonuniform scalar quantization.

• One of the most popular nonuniform quantizers is the Lloyd-Max

quantizer.

• Vector quantization (VQ) techniques extend the basic principles of

scalar quantization to multiple dimensions. Because of its fast

lookup capabilities at the decoder side, VQ-based coding schemes

are particularly attractive to multimedia applications.
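
A minimal sketch of uniform scalar quantization for 8-bit values, assuming equal-width intervals and reconstruction at each interval's midpoint. The step size and sample values are hypothetical.

```python
def quantize(value, step):
    """Map a value to the index of the interval that contains it."""
    return value // step


def dequantize(index, step):
    """Reconstruct a value at the midpoint of the interval."""
    return index * step + step // 2


step = 64  # 256 input levels are reduced to 256 / 64 = 4 output levels
pixels = [0, 17, 63, 64, 100, 128, 200, 255]
indices = [quantize(p, step) for p in pixels]
restored = [dequantize(i, step) for i in indices]
errors = [abs(p - r) for p, r in zip(pixels, restored)]

print(indices)
print(restored)
print("largest error:", max(errors))
```

Each pixel now needs 2 bits instead of 8, at the cost of an error of up to half the step size. This is the irreversible part of a lossy encoder.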

Transform coding

• The techniques discussed so far work directly on the pixel values

and are usually called spatial domain techniques.

• Transform coding techniques use a reversible, linear mathematical transform to map the pixel values onto a set of coefficients, which are then quantized and encoded.

• The key factor behind the success of transform-based coding schemes is that many of the resulting coefficients for most natural images have small magnitudes and can be quantized (or discarded altogether) without causing significant distortion in the decoded image.

• Different mathematical transforms, such as Fourier (DFT), Walsh-

Hadamard (WHT), and Karhunen-Loeve (KLT), have been considered

for the task.

• For compression purposes, the higher the capability of compressing

information in fewer coefficients, the better the transform; for that

reason, the Discrete Cosine Transform (DCT) has become the most

widely used transform coding technique.
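
The energy compaction property can be illustrated with a 1-D orthonormal DCT written directly from its definition. This is a sketch for intuition only: the eight sample values are hypothetical, and practical coders use fast 2-D block transforms rather than this direct summation.

```python
import math


def dct(signal):
    """Orthonormal DCT-II of a list of samples."""
    n = len(signal)
    coefficients = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(
            x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for i, x in enumerate(signal)
        )
        coefficients.append(scale * total)
    return coefficients


def idct(coefficients):
    """Inverse of the orthonormal DCT-II above."""
    n = len(coefficients)
    signal = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coefficients):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
        signal.append(total)
    return signal


samples = [52, 55, 61, 66, 70, 61, 64, 73]
coefficients = dct(samples)

# Keep only the first three coefficients and discard the rest.
kept = coefficients[:3] + [0.0] * (len(coefficients) - 3)
approximation = idct(kept)

energy = sum(c * c for c in coefficients)
print("share of energy in first coefficient:", coefficients[0] ** 2 / energy)
print("largest error after discarding 5 of 8 coefficients:",
      max(abs(s - a) for s, a in zip(samples, approximation)))
```

For this smooth signal almost all of the energy sits in the first coefficient, which is why the remaining ones can be coarsely quantized or dropped.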

Wavelet coding

• Wavelet coding techniques are also based on the idea that

the coefficients of a transform that decorrelates the pixels

of an image can be coded more efficiently than the original

pixels themselves.

• The main difference between wavelet coding and DCT-based coding is the omission of the first stage, the subdivision of the input image into smaller subimages.

• Because wavelet transforms are capable of representing an

input signal with multiple levels of resolution, and yet

maintain the useful compaction properties of the DCT, the

subdivision of the input image into smaller subimages is no

longer necessary.

• Wavelet coding has been at the core of the latest image

compression standards, most notably JPEG 2000, which is

discussed in a separate short article.
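
The multiresolution idea can be sketched with the Haar wavelet, the simplest case. The Haar filter is an assumption made for illustration, and JPEG 2000 uses different wavelet filters. The signal length is assumed to be divisible by 2 raised to the number of levels. The function names and sample values are hypothetical.

```python
import math


def haar_step(signal):
    """One level: scaled pairwise sums (approximation) and differences (detail)."""
    s = math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_decompose(signal, levels):
    """Repeatedly split the approximation; details are listed finest level first."""
    approx = list(signal)
    details = []
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.append(detail)
    return approx, details


def haar_reconstruct(approx, details):
    """Invert haar_decompose, starting from the coarsest level."""
    s = math.sqrt(2)
    current = list(approx)
    for detail in reversed(details):
        finer = []
        for a, d in zip(current, detail):
            finer.extend([(a + d) / s, (a - d) / s])
        current = finer
    return current


samples = [52, 55, 61, 66, 70, 61, 64, 73]
approx, details = haar_decompose(samples, levels=3)
print("coarsest approximation:", approx)
for level, detail in enumerate(details, start=1):
    print(f"detail level {level}:", [round(d, 2) for d in detail])
```

The whole signal is transformed at once, with each level halving the resolution. No block subdivision is involved, and the small detail coefficients are the natural candidates for coarse quantization.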

Image compression standards

• JPEG

• One of the most popular and comprehensive continuous

tone, still frame compression standards is the JPEG

standard.

• It defines three different coding systems:

• (1) a lossy baseline coding system, which is based on the

DCT and is adequate for most compression applications;

• (2) an extended coding system for greater compression, higher precision, or progressive reconstruction applications; and

• (3) a lossless independent coding system for reversible

compression.

• To be JPEG compatible, a product or system must include

support for the baseline system.

• No particular file format, spatial resolution, or color

space model is specified.

MPEG

Unit 5.1

IMAGE COMPRESSION AND

RECOGNITION

Representation and Description

– Representing regions in 2 ways:

• Based on their external characteristics (its

boundary):

– Shape characteristics

• Based on their internal characteristics (its

region):

– Regional properties: color, texture, and …

• Both

– Describes the region based on a selected

representation:

• Representation -> boundary or textural features

• Description -> length, orientation, the number

of concavities in the boundary, statistical

measures of region.

• Invariant Description:

– Size (Scaling)

– Translation

– Rotation

Boundary (Border) Following:

• We need the boundary as an ordered sequence of points.

Polygonal Approximations Using Minimum-Perimeter

Polygons

MPP algorithm

1. The MPP bounded by a simply connected cellular complex is not self-intersecting.

2. Every convex vertex of the MPP is a W vertex, but not

every W vertex of a boundary is a vertex of the MPP.

3. Every mirrored concave vertex of the MPP is a B vertex,

but not every B vertex of a boundary is a vertex of the

MPP.

4. All B vertices are on or outside the MPP, and all W

vertices are on or inside the MPP.

5. The uppermost, leftmost vertex in a sequence of

vertices contained in a cellular complex is always a W

vertex of the MPP.

Other Polygonal Approximation Approaches

Regional Descriptors

FIGURE 11.22 Infrared images of the Americas at night.

FIGURE 11.23 A region with two holes.

FIGURE 11.24 A region with three connected components.

FIGURE 11.25 Regions with Euler numbers equal to 0 and -1, respectively.

FIGURE 11.26 A region containing a polygonal network.

Example

Patterns and Pattern Classes

• A pattern is an arrangement of descriptors.

• The name feature is used often in the pattern

recognition literature to denote a descriptor.

• A pattern class is a family of patterns that

share some common properties.

• Pattern classes are denoted $\omega_1, \omega_2, \ldots, \omega_W$, where W is the number of classes.

• Three common pattern arrangements used in

practice are vectors (for quantitative

descriptions) and strings and trees (for

structural descriptions).

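• Pattern vectors are represented by bold lowercase letters, such as x, y, and z, and take the form

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$$

where each component $x_i$ represents the i-th descriptor and n is the total number of descriptors associated with the pattern.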

Recognition Based on Matching

• Recognition techniques based on matching

represent each class by a prototype pattern

vector.

• An unknown pattern is assigned to the class to

which it is closest in terms of a predefined

metric.

Minimum distance classifier

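• Suppose that we define the prototype of each pattern class to be the mean vector of the patterns of that class:

$$\mathbf{m}_j = \frac{1}{N_j} \sum_{\mathbf{x} \in \omega_j} \mathbf{x}, \qquad j = 1, 2, \ldots, W$$

where $N_j$ is the number of pattern vectors from class $\omega_j$.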
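
A minimal sketch of a minimum distance classifier, assuming the Euclidean distance as the predefined metric. The class labels and the two-dimensional training patterns are hypothetical.

```python
def mean_vector(patterns):
    """Prototype of a class: the component-wise mean of its pattern vectors."""
    n = len(patterns)
    return [sum(column) / n for column in zip(*patterns)]


def classify(x, prototypes):
    """Assign x to the class whose prototype is nearest in Euclidean distance."""
    def squared_distance(m):
        return sum((xi - mi) ** 2 for xi, mi in zip(x, m))

    return min(prototypes, key=lambda label: squared_distance(prototypes[label]))


# Hypothetical training patterns with two descriptors each.
training = {
    "omega_1": [[1.0, 1.2], [1.4, 0.9], [0.9, 1.1]],
    "omega_2": [[4.0, 4.2], [4.3, 3.8], [3.9, 4.1]],
}
prototypes = {label: mean_vector(p) for label, p in training.items()}

print(classify([1.5, 1.0], prototypes))
print(classify([3.5, 4.0], prototypes))
```

Comparing squared distances avoids the square root without changing which prototype is nearest. The approach works well when the classes are compact and well separated relative to the spread within each class.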

You might also like

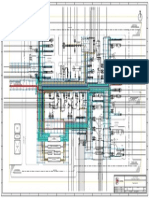

- Fabrication of ManifoldDocument2 pagesFabrication of Manifoldsarangpune100% (1)

- Administrative Theory Taylor, Fayol, Gulick and UrwickDocument15 pagesAdministrative Theory Taylor, Fayol, Gulick and Urwickspace_007785% (33)

- LabVIEW Core 2 - Participant GuideDocument104 pagesLabVIEW Core 2 - Participant GuideViktor DilberNo ratings yet

- Book ChapterDocument410 pagesBook ChapterTU NGO THIENNo ratings yet

- Lecture 14 AutoencodersDocument39 pagesLecture 14 AutoencodersDevyansh Gupta100% (1)

- Robot Process Automation RPA and Its FutureDocument25 pagesRobot Process Automation RPA and Its FutureFATHIMATH SAHALANo ratings yet

- Image Compression: CS474/674 - Prof. BebisDocument110 pagesImage Compression: CS474/674 - Prof. BebisYaksha YeshNo ratings yet

- Image CompressionDocument111 pagesImage CompressionKuldeep MankadNo ratings yet

- Digital Image Processing (Image Compression)Document38 pagesDigital Image Processing (Image Compression)MATHANKUMAR.S100% (3)

- Sedimentation: Engr. Nadeem Karim BhattiDocument23 pagesSedimentation: Engr. Nadeem Karim BhattiEngr Sarang Khan100% (1)

- Unit IVDocument97 pagesUnit IVsarayu2804No ratings yet

- CHAPTER FOURmultimediaDocument23 pagesCHAPTER FOURmultimediameshNo ratings yet

- Chapter Six 6A ICDocument30 pagesChapter Six 6A ICRobera GetachewNo ratings yet

- Aadel VeriDocument37 pagesAadel VerimustafaNo ratings yet

- Chapter-5 Data CompressionDocument53 pagesChapter-5 Data CompressionSapana GurungNo ratings yet

- CompressionDocument38 pagesCompressionShivani Goel100% (1)

- ImageCompression-UNIT-V-students MaterialDocument88 pagesImageCompression-UNIT-V-students MaterialHarika JangamNo ratings yet

- Module 5-ImgDocument14 pagesModule 5-ImgDEVID ROYNo ratings yet

- Dip 4 Unit NotesDocument10 pagesDip 4 Unit NotesMichael MariamNo ratings yet

- Digital Image Processing (Chapter 8)Document25 pagesDigital Image Processing (Chapter 8)Md.Nazmul Abdal ShourovNo ratings yet

- Chapter 5 Data CompressionDocument18 pagesChapter 5 Data CompressionrpNo ratings yet

- Chapter 6 Lossy Compression AlgorithmsDocument46 pagesChapter 6 Lossy Compression AlgorithmsDesu WajanaNo ratings yet

- What Is The Need For Image Compression?Document38 pagesWhat Is The Need For Image Compression?rajeevrajkumarNo ratings yet

- Main Techniques and Performance of Each CompressionDocument23 pagesMain Techniques and Performance of Each CompressionRizki AzkaNo ratings yet

- Data Represintation and StorageDocument19 pagesData Represintation and StorageShamal HalabjayNo ratings yet

- DIP S8ECE MODULE5 Part1Document49 pagesDIP S8ECE MODULE5 Part1Neeraja JohnNo ratings yet

- Compression Using Huffman CodingDocument9 pagesCompression Using Huffman CodingSameer SharmaNo ratings yet

- Test LahDocument47 pagesTest LahBonny AuliaNo ratings yet

- Image DatabaseDocument16 pagesImage Databasemulayam singh yadavNo ratings yet

- Image Compression (Chapter 8) : CS474/674 - Prof. BebisDocument128 pagesImage Compression (Chapter 8) : CS474/674 - Prof. Bebissrc0108No ratings yet

- Unit 4Document10 pagesUnit 4Pranav jadhavNo ratings yet

- Huffman Coding PaperDocument3 pagesHuffman Coding Paperrohith4991No ratings yet

- Compression and Decompression TechniquesDocument68 pagesCompression and Decompression TechniquesVarun JainNo ratings yet

- Compression and DecompressionDocument33 pagesCompression and DecompressionNaveena SivamaniNo ratings yet

- Text and Image CompressionDocument54 pagesText and Image CompressionSapana Kamble0% (1)

- IP Lectures 11 CompressionDocument58 pagesIP Lectures 11 CompressiondebashishNo ratings yet

- Chapter ThreeDocument30 pagesChapter ThreemekuriaNo ratings yet

- DIGITALtvDocument40 pagesDIGITALtvSaranyaNo ratings yet

- MULTIMEDIA PROG ASSIGNMENT ONE Compression AlgorithmDocument6 pagesMULTIMEDIA PROG ASSIGNMENT ONE Compression AlgorithmBosa BossNo ratings yet

- Define Psychovisual RedundancyDocument3 pagesDefine Psychovisual RedundancyKhalandar BashaNo ratings yet

- 09 CM0340 Basic Compression AlgorithmsDocument73 pages09 CM0340 Basic Compression AlgorithmsShobhit JainNo ratings yet

- Huffman Coding Technique For Image Compression: ISSN:2320-0790Document3 pagesHuffman Coding Technique For Image Compression: ISSN:2320-0790applefounderNo ratings yet

- Image CompressionDocument5 pagesImage Compressionamt12202No ratings yet

- Autoencoder - Unit 4Document39 pagesAutoencoder - Unit 4Ankit MehtaNo ratings yet

- Image Compression 2 UpdateDocument10 pagesImage Compression 2 UpdateMohamd barcaNo ratings yet

- Gip Unit 5 - 2018-19 EvenDocument36 pagesGip Unit 5 - 2018-19 EvenKalpanaMohanNo ratings yet

- Lecture 14 AutoencodersDocument39 pagesLecture 14 AutoencodersDevyansh GuptaNo ratings yet

- Multimedia Systems: Chapter 7: Data CompressionDocument41 pagesMultimedia Systems: Chapter 7: Data CompressionJoshua chirchirNo ratings yet

- Fractal Image Compression Using Canonical Huffman CodingDocument38 pagesFractal Image Compression Using Canonical Huffman CodingMadhu MitaNo ratings yet

- Chapter 10 CompressionDocument66 pagesChapter 10 CompressionSandhya PandeyNo ratings yet

- The Lecture Contains:: Lecture 40: Need For Video Coding, Elements of Information Theory, Lossless CodingDocument8 pagesThe Lecture Contains:: Lecture 40: Need For Video Coding, Elements of Information Theory, Lossless CodingAdeoti OladapoNo ratings yet

- 13imagecompression 120321055027 Phpapp02Document54 pages13imagecompression 120321055027 Phpapp02Tripathi VinaNo ratings yet

- Digitized Pictures: by K. Karpoora Sundari ECE Department, K. Ramakrishnan College of Technology, SamayapuramDocument31 pagesDigitized Pictures: by K. Karpoora Sundari ECE Department, K. Ramakrishnan College of Technology, SamayapuramPerumal NamasivayamNo ratings yet

- Fundamentals of Compression: Prepared By: Haval AkrawiDocument21 pagesFundamentals of Compression: Prepared By: Haval AkrawiMuhammad Yusif AbdulrahmanNo ratings yet

- Neural Network Unsupervised Machine Learning: What Are Autoencoders?Document22 pagesNeural Network Unsupervised Machine Learning: What Are Autoencoders?shubh agrawalNo ratings yet

- Neural Network Unsupervised Machine Learning: What Are Autoencoders?Document22 pagesNeural Network Unsupervised Machine Learning: What Are Autoencoders?shubh agrawalNo ratings yet

- Chap 5 CompressionDocument43 pagesChap 5 CompressionMausam PokhrelNo ratings yet

- Compression Techniques and Cyclic Redundency CheckDocument5 pagesCompression Techniques and Cyclic Redundency CheckgirishprbNo ratings yet

- FALLSEM2022-23 CSE4019 ETH VL2022230104728 2022-10-19 Reference-Material-IDocument33 pagesFALLSEM2022-23 CSE4019 ETH VL2022230104728 2022-10-19 Reference-Material-ISRISHTI ACHARYYA 20BCE2561No ratings yet

- Unit-Ii Itc 2302Document21 pagesUnit-Ii Itc 2302Abhishek Bose100% (1)

- Presentation Layer PPT 1 PDFDocument16 pagesPresentation Layer PPT 1 PDFSai Krishna SKNo ratings yet

- Image CompressionDocument113 pagesImage Compressionmrunal.gaikwadNo ratings yet

- Compression: DMET501 - Introduction To Media EngineeringDocument26 pagesCompression: DMET501 - Introduction To Media EngineeringMohamed ZakariaNo ratings yet

- Image Compression: Efficient Techniques for Visual Data OptimizationFrom EverandImage Compression: Efficient Techniques for Visual Data OptimizationNo ratings yet

- Human Visual System Model: Understanding Perception and ProcessingFrom EverandHuman Visual System Model: Understanding Perception and ProcessingNo ratings yet

- Color Profile: Exploring Visual Perception and Analysis in Computer VisionFrom EverandColor Profile: Exploring Visual Perception and Analysis in Computer VisionNo ratings yet

- Jabra Revo Wireless Manual EN RevB PDFDocument19 pagesJabra Revo Wireless Manual EN RevB PDFJoan FangNo ratings yet

- Community DiagnosisDocument2 pagesCommunity Diagnosisfiles PerpsNo ratings yet

- Lenyx Chemicals Limay, Bataan, PhilippinesDocument1 pageLenyx Chemicals Limay, Bataan, PhilippinesMikee FelipeNo ratings yet

- Operating Range Diagram 21000: Manitowoc Cranes, IncDocument2 pagesOperating Range Diagram 21000: Manitowoc Cranes, Incdriller27No ratings yet

- Agastya Batttery ReportDocument1 pageAgastya Batttery ReportAditya NehraNo ratings yet

- Apps Deploy StatusDocument3 pagesApps Deploy StatusZNo ratings yet

- Scholarship Essay SampleDocument1 pageScholarship Essay SampleSipho Troy MoyoNo ratings yet

- A Comparative Study of Programming Languages in Rosetta Code PDFDocument293 pagesA Comparative Study of Programming Languages in Rosetta Code PDFjwpaprk1No ratings yet

- How To Verify Tax at The Table Level For Purchasing Documents (Doc ID 2251671.1)Document4 pagesHow To Verify Tax at The Table Level For Purchasing Documents (Doc ID 2251671.1)Lo JenniferNo ratings yet

- FABELLA PGS Compliance Updated June 2023Document28 pagesFABELLA PGS Compliance Updated June 2023Jordan Michael De VeraNo ratings yet

- PLF-77 Servive ManualDocument26 pagesPLF-77 Servive Manualapi-3711045100% (1)

- Kristin Ackerson, Virginia Tech EE Spring 2002Document29 pagesKristin Ackerson, Virginia Tech EE Spring 2002jit_72No ratings yet

- Refers To The Structure or The Arrangement of The Components or Elements of A CurriculumDocument3 pagesRefers To The Structure or The Arrangement of The Components or Elements of A Curriculumlilibeth odalNo ratings yet

- Estolas, Jude Marco Synthesis Paper2Document9 pagesEstolas, Jude Marco Synthesis Paper2Denise Nicole EstolasNo ratings yet

- 165 Health Technology Assessment in IndiaDocument12 pages165 Health Technology Assessment in IndiaVinay GNo ratings yet

- Transit Capacity and Quality of Service Manual, Third Edition (2013)Document51 pagesTransit Capacity and Quality of Service Manual, Third Edition (2013)Roberto ValdovinosNo ratings yet

- The Ailing PlanetDocument4 pagesThe Ailing PlanetadiosNo ratings yet

- SR No Company Name Other Cities Nu Fax Number Address CityDocument2 pagesSR No Company Name Other Cities Nu Fax Number Address CityAarti IyerNo ratings yet

- Argumentation Analysis EssayDocument2 pagesArgumentation Analysis EssayAlley FitzgeraldNo ratings yet

- Agc Plant Management Installation Instructions 4189340926 UkDocument61 pagesAgc Plant Management Installation Instructions 4189340926 UkFernando Souza castroNo ratings yet

- Operation Research Sec BDocument11 pagesOperation Research Sec BTremaine AllenNo ratings yet

- Project Portfolio Management & Strategic Alignment PDFDocument101 pagesProject Portfolio Management & Strategic Alignment PDFtitli123No ratings yet

- Characterization of AA6111 Aluminum Alloy Thin Strips Produced Via The Horizontal Single Belt Casting ProcessDocument8 pagesCharacterization of AA6111 Aluminum Alloy Thin Strips Produced Via The Horizontal Single Belt Casting ProcessUsman NiazNo ratings yet

- Lab3 CN1Document5 pagesLab3 CN1Diksha NasaNo ratings yet