
MANOVA: Multivariate

Analysis of Variance

Review of ANOVA: Univariate

Analysis of Variance

A univariate analysis of variance looks for the causal impact of a nominal-level independent variable (factor) on a single dependent variable measured at the interval level or better

The basic question you seek to answer is whether or not there is a difference in scores on the dependent variable attributable to membership in one or the other category of the independent variable

Analysis of Variance (ANOVA): Required when there are three

or more levels or conditions of the independent variable (but

can be done when there are only two)

What is the impact of ethnicity (IV) (Hispanic, African-American, Asian-Pacific Islander, Caucasian, etc.) on annual salary (DV)?

What is the impact of three different methods of meeting a potential mate (IV) (online dating service; speed dating; setup by friends) on likelihood of a second date (DV)?

Basic Analysis of Variance Concepts

We are going to make two estimates of the common population variance, σ²

The first estimate of the common variance σ² is called the between (or among) estimate, and it involves the variance of the IV category means about the grand mean

The second is called the within estimate, which is a weighted average of the variances within each of the IV categories. This is an unbiased estimate of σ²

The ANOVA test, called the F test, involves comparing the between estimate to the within estimate

If the null hypothesis is true (the population means on the DV for the levels of the IV are equal to one another), then the ratio of the between to the within estimate of σ² should be close to one (that is, the between and within estimates should be about the same)

If the null hypothesis is false, and the population means are not equal, then the F ratio will be significantly greater than unity (one).
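The two variance estimates and their ratio can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `f_ratio` and the scores are made up for the example and do not come from the slides.

```python
from statistics import mean, variance

def f_ratio(groups):
    """Return (between estimate, within estimate, F) for a list of score lists."""
    k = len(groups)                                  # number of IV levels
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Between estimate: variability of the group means about the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ms_between = ss_between / (k - 1)
    # Within estimate: weighted (pooled) average of the group variances
    ss_within = sum((len(g) - 1) * variance(g) for g in groups)
    ms_within = ss_within / (n_total - k)
    return ms_between, ms_within, ms_between / ms_within

# Hypothetical scores for three levels of an IV (illustrative only)
groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]
ms_between, ms_within, f = f_ratio(groups)
print(ms_between, ms_within, f)
```

When the group means are far apart relative to the spread inside each group, the between estimate is much larger than the within estimate and F is well above one.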

Basic ANOVA Output

Tests of Between-Subjects Effects

Dependent Variable: Respondent Socioeconomic Index

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. | Partial Eta Squared | Noncent. Parameter | Observed Power (a) |
|---|---|---|---|---|---|---|---|---|
| Corrected Model | 29791.484 (b) | 4 | 7447.871 | 22.332 | .000 | .072 | 89.329 | 1.000 |
| Intercept | 1006433.085 | 1 | 1006433.085 | 3017.774 | .000 | .724 | 3017.774 | 1.000 |
| PADEG | 29791.484 | 4 | 7447.871 | 22.332 | .000 | .072 | 89.329 | 1.000 |
| Error | 382860.051 | 1148 | 333.502 | | | | | |
| Total | 3073446.860 | 1153 | | | | | | |
| Corrected Total | 412651.535 | 1152 | | | | | | |

a. Computed using alpha = .05

b. R Squared = .072 (Adjusted R Squared = .069)

PADEG is the IV, father's highest degree

Some of the things that we learned to look for on the ANOVA output:

A. The value of the F ratio (same line as the IV or factor)

B. The significance of that F ratio (same line)

C. The partial eta squared, an estimate of the effect size attributable to between-group differences, that is, differences among levels of the IV (ranges from 0 to 1, where 1 is strongest)

D. The power to detect the effect (ranges from 0 to 1, where 1 is strongest)
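As a quick check on how these quantities relate, partial eta squared is the effect sum of squares divided by the sum of the effect and error sums of squares, and F is the ratio of the two mean squares. The sketch below plugs in the sums of squares and degrees of freedom from the output table above; the function name is an assumption chosen for illustration.

```python
def partial_eta_squared(ss_effect, ss_error):
    """Share of (effect + error) variability that is attributable to the effect."""
    return ss_effect / (ss_effect + ss_error)

# Values copied from the Tests of Between-Subjects Effects table
ss_padeg, df_padeg = 29791.484, 4
ss_intercept = 1006433.085
ss_error, df_error = 382860.051, 1148

eta_padeg = partial_eta_squared(ss_padeg, ss_error)
eta_intercept = partial_eta_squared(ss_intercept, ss_error)
f_padeg = (ss_padeg / df_padeg) / (ss_error / df_error)  # mean square ratio

print(round(eta_padeg, 3), round(eta_intercept, 3), round(f_padeg, 3))
```

The rounded values agree with the .072, .724, and 22.332 reported in the table.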

More Review of ANOVA

Even if we have obtained a significant value of F and the overall difference of means is significant, the F statistic isn't telling us anything about how the mean scores varied among the levels of the IV.

We can do some pairwise tests after the fact in which we compare the means of the levels of the IV

The type of test we do depends on whether or not the variances of the groups (conditions or levels of the IV) are equal

We test this using the Levene statistic.

If it is significant at p < .05 (group variances are significantly different), we use an alternative post-hoc test such as Tamhane's

If it is not significant (group variances are not significantly different), we can use the Scheffé or a similar test

In this example, variances are not significantly different (p > .05), so we use the Scheffé test
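The decision rule above can be sketched as follows. The classic Levene statistic is a one-way ANOVA F computed on each score's absolute deviation from its group mean; the function names and data here are hypothetical, and the p-value would normally come from the SPSS output or an F distribution routine rather than from this sketch.

```python
from statistics import mean

def levene_w(groups):
    """Levene's W: ANOVA F ratio on absolute deviations from each group's mean."""
    z = [[abs(x - mean(g)) for x in g] for g in groups]
    k = len(z)
    n = sum(len(g) for g in z)
    grand = mean([v for g in z for v in g])
    between = sum(len(g) * (mean(g) - grand) ** 2 for g in z) / (k - 1)
    within = sum((v - mean(g)) ** 2 for g in z for v in g) / (n - k)
    return between / within

def choose_post_hoc(levene_p, alpha=0.05):
    """Pick a post-hoc test following the rule described in the text."""
    return "Tamhane" if levene_p < alpha else "Scheffé"

# Hypothetical groups with identical spread, so W is zero
equal_spread = [[1, 2, 3, 4], [11, 12, 13, 14], [21, 22, 23, 24]]
# Hypothetical groups where the second group is far more spread out
unequal_spread = [[1, 2, 3, 4], [10, 20, 30, 40], [5, 6, 7, 8]]

w_equal = levene_w(equal_spread)
w_unequal = levene_w(unequal_spread)
print(w_equal, w_unequal)
print(choose_post_hoc(1.000))  # a Levene Sig. of 1.000 leads to Scheffé
```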

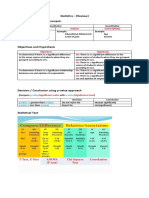

Test of Homogeneity of Variances

Self-disclosure

Levene

Statistic

.000

df1

df2

2

Sig.

1.000

Review of Factorial ANOVA

Two-way ANOVA is applied to a situation in which you

have two independent nominal-level variables and one

interval or better dependent variable

Each of the independent variables may have any number of levels or conditions (e.g., Treatment 1, Treatment 2, Treatment 3, No Treatment)

In a two-way ANOVA you will obtain 3 F ratios

One of these will tell you if your first independent

variable has a significant main effect on the DV

A second will tell you if your second independent

variable has a significant main effect on the DV

The third will tell you if the interaction of the two

independent variables has a significant effect on the

DV, that is, if the impact of one IV depends on the

level of the other

Review: Factorial ANOVA

Example

Tests of Hypotheses:

(1) There is no significant main effect for education level (F(2, 58) = 1.685, p = .194, partial eta squared = .055) (red dots)

(2) There is no significant main effect for marital status (F(1, 58) = .441, p = .509, partial eta squared = .008) (green dots)

(3) There is a significant interaction effect of marital status and education level (F(2, 58) = 3.586, p = .034, partial eta squared = .110) (blue dots)

Plots of Interaction Effects

[Figure: Estimated Marginal Means of TIMENET. Horizontal axis: CollegeorNot (HighSchool, SomePostHigh, CollegeorMore). Vertical axis: Estimated Marginal Means. Separate lines: MarriedorNot (Married/Partner, NotMarried/Partner).]

Education level is plotted along the horizontal axis and hours spent on the net is plotted along the vertical axis. The red and green lines show how marital status interacts with education level. Here we note that time spent on the Internet is highest among the Some Post-High School group for single people, but lowest among this group for married people

MANOVA: What Kinds of

Hypotheses Can it Test?

A MANOVA or multivariate analysis of variance is a

way to test the hypothesis that one or more

independent variables, or factors, have an effect on a

set of two or more dependent variables

For example, you might wish to test the hypothesis

that sex and ethnicity interact to influence a set of

job-related outcomes including attitudes toward coworkers, attitudes toward supervisors, feelings of

belonging in the work environment, and identification

with the corporate culture

As another example, you might want to test the

hypothesis that three different methods of teaching

writing result in significant differences in ratings of

student creativity, student acquisition of grammar,

and assessments of writing quality by an independent

panel of judges

Why Should You Do a MANOVA?

You do a MANOVA instead of a series of one-at-a-time

ANOVAs for two main reasons

Supposedly to reduce the experiment-wise level of Type I error. With 8 F tests at .05 each, the experiment-wise probability of making a Type I error (rejecting the null hypothesis when it is in fact true) can be as high as 40% (about 34% if the tests are independent)! The so-called overall test or omnibus test protects against this inflated error probability only when the null hypothesis is true. If you follow up a significant multivariate test with a bunch of ANOVAs on the individual variables without adjusting the error rates for the individual tests, there's no protection

Another reason to do MANOVA: none of the individual ANOVAs may produce a significant main effect on its DV, but in combination the DVs might, which suggests that the variables are more meaningful taken together than considered separately

MANOVA takes into account the intercorrelations among the

DVs
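The inflation of the experiment-wise Type I error rate across several tests can be worked out directly. The sketch below is illustrative, with function names chosen for the example; it computes the rate under the assumption that the tests are independent, together with the simple upper bound obtained by adding up the per-test alphas.

```python
def familywise_error(alpha, n_tests):
    """Probability of at least one Type I error across independent tests."""
    return 1 - (1 - alpha) ** n_tests

def additive_upper_bound(alpha, n_tests):
    """Upper bound on the experiment-wise error rate (sum of per-test alphas)."""
    return min(1.0, alpha * n_tests)

fw_independent = familywise_error(0.05, 8)
fw_bound = additive_upper_bound(0.05, 8)
print(round(fw_independent, 3), round(fw_bound, 2))
```

For 8 tests at .05 each, the bound is .40, while the rate for independent tests is closer to .34.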

Assumptions of MANOVA

1. Multivariate normality

All of the DVs must be distributed normally (can visualize

this with histograms; tests are available for checking this

out)

Any linear combination of the DVs must be distributed

normally

Check out pairwise relationships among the DVs for

nonlinear relationships using scatter plots

All subsets of the variables must have a multivariate

normal distribution

These requirements are rarely if ever tested in practice

MANOVA is assumed to be a robust test that can stand up to

departures from multivariate normality in terms of Type I error

rate

Statistical power (power to detect a main or interaction effect)

may be reduced when distributions are very plateau-like

(platykurtic)

Assumptions of MANOVA, cont'd

2. Homogeneity of the covariance matrices

In ANOVA we talked about the need for the variances of the

dependent variable to be equal across levels of the

independent variable

In MANOVA, the univariate requirement of equal variances

has to hold for each one of the dependent variables

In MANOVA we extend this concept and require that the

covariance matrices be homogeneous

Computations in MANOVA require the use of matrix algebra, and each person's score on the dependent variables is actually a vector of scores on DV1, DV2, DV3, ..., DVn

The matrices of the covariances (the variance shared between any two variables) have to be equal across all levels of the independent variable

Assumptions of MANOVA, cont'd

This homogeneity assumption is tested with a test that is similar to Levene's test for the ANOVA case. It is called Box's M, and it works the same way: it tests the null hypothesis that the covariance matrices of the dependent variables are equal across levels of the independent variable, so a significant result means they differ

Putting this in English, what you don't want is the case where, if your IV was, for example, ethnicity, all the people in the "other" category had scores on their 6 dependent variables clustered very tightly around their mean, whereas people in the "white" category had scores on the vector of 6 dependent variables clustered very loosely around the mean. You don't want a tightly clustered set of distributions for one level of the IV and a widely spread set for another level

If Box's M is significant, it means you have violated an assumption of MANOVA. This is not much of a problem if you have equal cell sizes and large N; it is a much bigger issue with small sample sizes and/or unequal cell sizes (in factorial ANOVA, if there are unequal cell sizes, the sums of squares for the three sources (two main effects and the interaction effect) won't add up to the Total SS)

Assumptions of MANOVA, cont'd

3. Independence of observations

Subjects' scores on the dependent measures should not be influenced by or related to scores of other subjects in the condition or level

Can be tested with an intraclass correlation coefficient if

lack of independence of observations is suspected

MANOVA Example

Let's test the hypothesis that region of the country (IV) has a significant impact on three DVs: percent of people who are Christian adherents, divorces per 1000 population, and abortions per 1000 population. The hypothesis is that there will be a significant multivariate main effect for region. Another way to put this is that the vectors of means for the three DVs are different among regions of the country

This is done with the General Linear Model/

Multivariate procedure in SPSS (we will look

first at an example where the analysis has

already been done)

Computations are done using matrix algebra

to find the ratio of the variability of B

(Between-Groups sums of squares and

cross-products (SSCP) matrix) to that of the

W (Within-Groups SSCP matrix)

Vectors of means on the three DVs (Y1, Y2, Y3) for the South and Midwest regions:

South: (M_Y1, M_Y2, M_Y3)

Midwest: (M_Y1, M_Y2, M_Y3)

MANOVA test of Our Hypothesis

Multivariate Tests (d)

| Effect | Statistic | Value | F | Hypothesis df | Error df | Sig. | Partial Eta Squared | Noncent. Parameter | Observed Power (a) |
|---|---|---|---|---|---|---|---|---|---|
| Intercept | Pillai's Trace | .984 | 818.987 (b) | 3.000 | 39.000 | .000 | .984 | 2456.960 | 1.000 |
| Intercept | Wilks' Lambda | .016 | 818.987 (b) | 3.000 | 39.000 | .000 | .984 | 2456.960 | 1.000 |
| Intercept | Hotelling's Trace | 62.999 | 818.987 (b) | 3.000 | 39.000 | .000 | .984 | 2456.960 | 1.000 |
| Intercept | Roy's Largest Root | 62.999 | 818.987 (b) | 3.000 | 39.000 | .000 | .984 | 2456.960 | 1.000 |
| REGION | Pillai's Trace | .620 | 3.562 | 9.000 | 123.000 | .001 | .207 | 32.057 | .986 |
| REGION | Wilks' Lambda | .465 | 3.900 | 9.000 | 95.066 | .000 | .225 | 27.605 | .964 |
| REGION | Hotelling's Trace | .971 | 4.062 | 9.000 | 113.000 | .000 | .244 | 36.561 | .994 |
| REGION | Roy's Largest Root | .754 | 10.299 (c) | 3.000 | 41.000 | .000 | .430 | 30.897 | .997 |

a. Computed using alpha = .05

b. Exact statistic

c. The statistic is an upper bound on F that yields a lower bound on the significance level.

d. Design: Intercept+REGION

First we will look at the overall F test (over all three dependent variables). What we are most interested in is a statistic called Wilks' lambda (Λ), and the F value associated with that. Lambda is a measure of the proportion of variance in the DVs that is *not explained* by differences in the level of the independent variable. Lambda varies between 1 and zero, and we want it to be near zero (i.e., little variance left unexplained by the IV). In the case of our IV, REGION, Wilks' lambda is .465, and has an associated F of 3.90, which is significant at p < .001. Lambda is the ratio of the determinant of W to the determinant of T (the Total SSCP matrix)
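The slides do not say how the F value is obtained from lambda. One standard route is Rao's F approximation, which is used here as an assumption; it appears to reproduce the REGION row of the output (F of about 3.9 on 9 and 95.066 degrees of freedom). The sketch takes Λ = .465, p = 3 dependent variables, hypothesis df = 3 (four regions), and error df = 41. The partial eta squared formula, 1 − Λ^(1/s) with s = min(p, hypothesis df), is likewise an assumption that happens to match the reported .225.

```python
import math

def wilks_to_f(lam, p, df_h, df_e):
    """Rao's F approximation for Wilks' lambda. Returns (F, df1, df2)."""
    pq = p * df_h
    denom = p ** 2 + df_h ** 2 - 5
    # s falls back to 1 when the usual expression is undefined
    s = math.sqrt((pq ** 2 - 4) / denom) if denom > 0 else 1.0
    m = df_e - (p - df_h + 1) / 2
    df1 = pq
    df2 = m * s - pq / 2 + 1
    root = lam ** (1 / s)
    f = (1 - root) / root * df2 / df1
    return f, df1, df2

def wilks_partial_eta_squared(lam, p, df_h):
    """Multivariate partial eta squared based on Wilks' lambda."""
    s = min(p, df_h)
    return 1 - lam ** (1 / s)

f, df1, df2 = wilks_to_f(0.465, p=3, df_h=3, df_e=41)
eta = wilks_partial_eta_squared(0.465, p=3, df_h=3)
print(round(f, 2), df1, round(df2, 3), round(eta, 3))
```

Because lambda is rounded to three decimals in the output, the recomputed F differs from the printed 3.900 in the third decimal place.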

MANOVA Test of Our Hypothesis, cont'd


Continuing to examine our output, we find that the partial eta squared associated with the main effect of region is .225 and the power to detect the main effect is .964. These are very good results!

We would write this up in the following way:

A one-way MANOVA revealed a significant multivariate main effect for region, Wilks' Λ = .465, F(9, 95.066) = 3.9, p < .001, partial eta squared = .225. Power to detect the effect was .964. Thus hypothesis 1 was confirmed.

Box's Test of Equality of Covariance Matrices

Box's Test of Equality of Covariance Matrices (a)

| Box's M | F | df1 | df2 | Sig. |
|---|---|---|---|---|
| 60.311 | 2.881 | 18 | 4805.078 | .000 |

Tests the null hypothesis that the observed covariance matrices of the dependent variables are equal across groups.

a. Design: Intercept+REGION

Checking out the Box's M test, we find that the test is significant (which means that there are significant differences among the regions in the covariance matrices). If we had low power that might be a problem, but we don't have low power. However, when Box's test finds that the covariance matrices are significantly different across levels of the IV, that may indicate an increased possibility of Type I error, so you might want to use a smaller alpha level. If you redid the analysis with an alpha level of .001, you would still get a significant result, so it's probably OK. You should report the results of the Box's M, though.

Looking at the Individual

Dependent Variables

If the overall F test is significant, then it's common practice to go ahead and look at the individual dependent variables with separate ANOVA tests

The experimentwise alpha protection provided by the overall or omnibus F test does not extend to the univariate tests. You should divide your alpha level by the number of tests you intend to perform, so in this case, if you expect to look at F tests for the three dependent variables, you should require that p < .017 (.05/3)

This procedure ignores the fact that the variables may be intercorrelated and that the separate ANOVAs do not take these intercorrelations into account

You could get three significant F ratios, but if the variables are highly correlated you're basically getting the same result over and over
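The adjusted criterion is simple arithmetic, sketched here for the three univariate tests. The p-values are the bounds reported in the write-up later in the deck for the two significant DVs (.015 and .001); the function name and dictionary layout are illustrative assumptions.

```python
def bonferroni_alpha(alpha, n_tests):
    """Per-test alpha that keeps the experiment-wise rate at or below alpha."""
    return alpha / n_tests

per_test_alpha = bonferroni_alpha(0.05, 3)

# Upper bounds on p as reported in the write-up (assumed exact for this sketch)
reported_p = {
    "Percent Christian adherents": 0.015,
    "Divorces per 1,000 pop": 0.001,
}
significant = {dv: p < per_test_alpha for dv, p in reported_p.items()}

print(round(per_test_alpha, 3))
print(significant)
```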

Univariate ANOVA tests of

Three Dependent Variables

A portion of the output table reports the ANOVA tests on the three dependent variables: abortions per 1000, divorces per 1000, and % Christian adherents. Note that only the F values for % Christian adherents and divorces per 1000 population are significant at your criterion of .017. (Note: the MANOVA procedure doesn't seem to let you set different p levels for the overall test and the univariate tests, so the power here is higher than it would be if you did these tests separately in an ANOVA procedure and set p to .017 before you did the tests.)

Writing up More of Your Results

So far you have written the following:

A one-way MANOVA revealed a significant multivariate main effect for region, Wilks' Λ = .465, F(9, 95.066) = 3.9, p < .001, partial eta squared = .225. Power to detect the effect was .964. Thus hypothesis 1 was confirmed.

You continue to write:

Given the significance of the overall test, the univariate main effects were examined. Significant univariate main effects for region were obtained for percentage of Christian adherents, F(3, 41) = 3.944, p < .015, partial eta squared = .224, power = .794; and number of divorces per 1000 population, F(3, 41) = 8.789, p < .001, partial eta squared = .391, power = .991

Finally, Post-hoc Comparisons with Scheffé Test for the DVs that had Significant Univariate ANOVAs

The Levene's statistics for the two DVs that had significant univariate ANOVAs are both non-significant, meaning that the group variances were not significantly different, so you can use the Scheffé tests for comparing pairwise group means, e.g., do the South and the West differ significantly on % of Christian adherents and number of divorces?

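Levene's Test of Equality of Error Variances (a)

| Dependent Variable | F | df1 | df2 | Sig. |
|---|---|---|---|---|
| Abortions per 1,000 women | 1.068 | 3 | 41 | .373 |
| Percent of pop who are Christian adherents | 1.015 | 3 | 41 | .396 |
| Divorces per 1,000 pop | 1.641 | 3 | 41 | .195 |

Tests the null hypothesis that the error variance of the dependent variable is equal across groups.

a. Design: Intercept+REGION

2. Census region

| Dependent Variable | Census region | Mean | Std. Error | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|
| Abortions per 1,000 women | Northeast | 23.333 | 3.188 | 16.895 | 29.772 |
| Abortions per 1,000 women | Midwest | 14.136 | 2.884 | 8.312 | 19.960 |
| Abortions per 1,000 women | South | 17.229 | 2.556 | 12.066 | 22.391 |
| Abortions per 1,000 women | West | 18.118 | 2.884 | 12.294 | 23.942 |
| Percent of pop who are Christian adherents | Northeast | 53.389 | 3.907 | 45.498 | 61.280 |
| Percent of pop who are Christian adherents | Midwest | 60.182 | 3.534 | 53.044 | 67.320 |
| Percent of pop who are Christian adherents | South | 55.921 | 3.133 | 49.594 | 62.248 |
| Percent of pop who are Christian adherents | West | 43.718 | 3.534 | 36.580 | 50.856 |
| Divorces per 1,000 pop | Northeast | 3.600 | .353 | 2.887 | 4.313 |
| Divorces per 1,000 pop | Midwest | 3.745 | .319 | 3.101 | 4.390 |
| Divorces per 1,000 pop | South | 4.964 | .283 | 4.393 | 5.536 |
| Divorces per 1,000 pop | West | 5.591 | .319 | 4.946 | 6.236 |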

Significant Pairwise Regional Differences

on the Two Significant DVs

You might want to set your significance cutoff even lower, since you are going to be doing 12 tests here: 6 pairwise comparisons (4(3)/2) for each of the two variables

Writing up All of Your MANOVA

Results

Your final paragraph will look like this

A one-way MANOVA revealed a significant multivariate main effect for region, Wilks' Λ = .465, F(9, 95.066) = 3.9, p < .001, partial eta squared = .225. Power to detect the effect was .964. Thus Hypothesis 1 was confirmed. Given the significance of the overall test, the univariate main effects were examined. Significant univariate main effects for region were obtained for percentage of Christian adherents, F(3, 41) = 3.944, p < .015, partial eta squared = .224, power = .794; and number of divorces per 1000 population, F(3, 41) = 8.789, p < .001, partial eta squared = .391, power = .991. Significant regional pairwise differences were obtained in number of divorces per 1000 population between the West and both the Northeast and Midwest. The mean numbers of divorces per 1000 population were 5.59 in the West, 3.6 in the Northeast, and 3.74 in the Midwest.

You can present the pairwise results, the MANOVA overall F results, and the univariate F results in separate tables

Now You Try It!

Go here to download the file

statelevelmodified.sav

Let's test the hypothesis that region of the country and availability of an educated workforce have an impact on three dependent variables: % union members, per capita income, and unemployment rate

Although a test will be performed for an

interaction between region and workforce

education level, no specific effect is

hypothesized

Go to SPSS Data Editor

Running a MANOVA in SPSS

Go to Analyze/General Linear Model/ Multivariate

Move Census Region and HS Educ into the Fixed Factors

category (this is where the IVs go)

Move per capita income, unemployment rate, and % of

workers who are union members into the Dependent Variables

category

Under Plots, create four plots, one for each of the two main

effects (region, HS educ) and two for their interaction. Use

the Add button to add each new plot

Move region into the horizontal axis window and click the Add

button

Move hscat4 (HS educ) into the horizontal axis window and click

the Add button

Move region into the horizontal axis window and hscat4 into the separate lines window and click Add

Move hscat4 (HS educ) into the horizontal axis window and region

into the separate lines window and click Add, then click Continue

Setting up MANOVA in SPSS

Under Options, move all of the factors including the interactions into the Display Means for window

Select descriptive statistics, estimates of effect size, observed power, and homogeneity tests

Set the significance level to .05 and click Continue

Click OK

Compare your output to the next several slides

MANOVA Main and Interaction

Effects

Box's Test of Equality of Covariance Matrices (a)

| Box's M | F | df1 | df2 | Sig. |
|---|---|---|---|---|
| 55.398 | 2.191 | 18 | 704.185 | .003 |

Tests the null hypothesis that the observed covariance matrices of the dependent variables are equal across groups.

a. Design: Intercept+REGION+HSCAT4+REGION * HSCAT4

Note that there are significant main effects for both region (green) and hscat4 (red) but not for their interaction (blue). Note the values of Wilks' lambda; only .237 of the variance is unexplained by region. That's a very good result. Box's M is significant, which is not so good, but we do have high power. If you redid the analysis with a lower significance level you would lose hscat4

Univariate Tests: ANOVAs on each of

the Three DVs for Region, HS Educ

Since we have obtained a significant multivariate main effect for each factor, we can go ahead and do the univariate F tests, where we look at each DV in turn to see if the two IVs have a significant impact on them separately. Since we are doing six tests here and we require an experiment-wise alpha rate of .05, we will divide it by six to get an acceptable alpha level for each of the six tests, so we will require p < .008. By that criterion, the only significant univariate result is for the effect of region on unemployment rate. With a more lenient criterion of .05 (and a greater probability of Type I error), three other univariate tests would have been significant

Pairwise Comparisons on the

Significant Univariate Tests

We found that the only significant univariate main effect was for the effect of region on unemployment rate. Now let's ask the question, what are the differences between regions in unemployment rate, considered two at a time?

What does the Levene's statistic say about the kind of post-hoc test we can do with respect to the region variable?

According to the output, the group variances on unemployment rate are not significantly different, so we can do a Scheffé test

Levene's Test of Equality of Error Variances (a)

| Dependent Variable | F | df1 | df2 | Sig. |
|---|---|---|---|---|
| Percent of workers who are union members | 2.645 | 12 | 37 | .012 |
| Unemployment rate | 1.281 | 12 | 37 | .270 |
| percap income | 2.573 | 12 | 37 | .014 |

Tests the null hypothesis that the error variance of the dependent variable is equal across groups.

a. Design: Intercept+REGION+HSCAT4+REGION * HSCAT4

Pairwise Difference of Means

Since we are doing 6 significance tests (k(k-1)/2, with k = 4 regions) in the pairwise comparisons of unemployment rate by region, we can use the smaller alpha level again to protect against inflated alpha error, so let's divide the .05 by 6 and set .008 as our error level. By this standard, the South and Midwest and the West and Midwest are significantly different in unemployment rate.
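The count of pairwise tests and the resulting per-test cutoff can be checked by enumerating the region pairs. This is a small sketch; the variable names are illustrative, and the region labels are taken from the output tables.

```python
from itertools import combinations

regions = ["Northeast", "Midwest", "South", "West"]

# Every unordered pair of regions is one pairwise comparison: k(k-1)/2
pairs = list(combinations(regions, 2))
per_test_alpha = 0.05 / len(pairs)

for a, b in pairs:
    print(a, "vs", b)
print(len(pairs), round(per_test_alpha, 3))
```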

Reporting the Differences

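2. Census region

| Dependent Variable | Census region | Mean | Std. Error | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|
| Percent of workers who are union members | Northeast | 16.254 | 1.851 | 12.504 | 20.004 |
| Percent of workers who are union members | Midwest | 14.454 (a) | 1.656 | 11.098 | 17.810 |
| Percent of workers who are union members | South | 9.447 (a) | 1.612 | 6.182 | 12.713 |
| Percent of workers who are union members | West | 13.861 (a) | 1.584 | 10.651 | 17.070 |
| Unemployment rate | Northeast | 5.108 | .334 | 4.433 | 5.784 |
| Unemployment rate | Midwest | 3.917 (a) | .299 | 3.312 | 4.521 |
| Unemployment rate | South | 5.076 (a) | .290 | 4.488 | 5.665 |
| Unemployment rate | West | 6.294 (a) | .285 | 5.716 | 6.872 |
| percap income | Northeast | 23822.583 | 1010.336 | 21775.447 | 25869.719 |
| percap income | Midwest | 20624.016 (a) | 904.224 | 18791.884 | 22456.147 |
| percap income | South | 21051.631 (a) | 879.836 | 19268.915 | 22834.348 |
| percap income | West | 21386.655 (a) | 864.768 | 19634.468 | 23138.841 |

a. Based on modified population marginal mean.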

Significant mean differences in unemployment rate were obtained between the Midwest (M = 3.917) and the West (M = 6.294), and between the Midwest and the South (M = 5.076)

Lab # 9

Duplicate the preceding data analysis in SPSS. Write up the results (the tests of the hypothesis about the main effects of region and HS Educ on the three dependent variables of per capita income, unemployment rate, and % union members) as if you were writing for publication. Put your paragraph in a Word document, and illustrate your results with tables from the output as appropriate (for example, the overall multivariate F table and the table of mean scores broken down by regions). You can also use plots to illustrate significant effects

You might also like

- 2 Storey Terrace HousesDocument11 pages2 Storey Terrace Housesrokiahhassan100% (1)

- Community Service Project ReportDocument12 pagesCommunity Service Project ReportTrophyson50% (2)

- CHYS 3P15 Final Exam ReviewDocument7 pagesCHYS 3P15 Final Exam ReviewAmanda ScottNo ratings yet

- T TestDocument16 pagesT TestMohammad Abdul OhabNo ratings yet

- Iri1 WDDocument12 pagesIri1 WDecplpraveen100% (2)

- A Study On The Effectiveness of Inventory Management at Ashok Leyland Private Limited in ChennaiDocument9 pagesA Study On The Effectiveness of Inventory Management at Ashok Leyland Private Limited in ChennaiGaytri AggarwalNo ratings yet

- MANOVADocument33 pagesMANOVAVishnu Prakash SinghNo ratings yet

- MANOVA - AnalysisDocument33 pagesMANOVA - Analysiskenshin1313No ratings yet

- Introduction To Analysis of Variance (ANOVA)Document51 pagesIntroduction To Analysis of Variance (ANOVA)Alyssa AmigoNo ratings yet

- Unit 7 2 Hypothesis Testing and Test of DifferencesDocument13 pagesUnit 7 2 Hypothesis Testing and Test of Differencesedselsalamanca2No ratings yet

- Glossary StatisticsDocument6 pagesGlossary Statisticssilviac.microsysNo ratings yet

- 8614 22Document13 pages8614 22Muhammad NaqeebNo ratings yet

- Statistics - (Review) Scales/Levels of Measurement:: Nominal Ordinal Interval/RatioDocument4 pagesStatistics - (Review) Scales/Levels of Measurement:: Nominal Ordinal Interval/RatioDaily TaxPHNo ratings yet

- Statistical Treatment of DataDocument27 pagesStatistical Treatment of Dataasdf ghjkNo ratings yet

- Statistics For Psychology 6th Edition Aron Solutions ManualDocument38 pagesStatistics For Psychology 6th Edition Aron Solutions Manualedithelizabeth5mz18100% (20)

- Kami Export - Brennan Murray - Chapter 13 (13.1 - 13.3) F Distribution and One-Way ANOVADocument3 pagesKami Export - Brennan Murray - Chapter 13 (13.1 - 13.3) F Distribution and One-Way ANOVABrennan MurrayNo ratings yet

- ANOVA de Dos VíasDocument17 pagesANOVA de Dos VíasFernando GutierrezNo ratings yet

- Anova Table in Research PaperDocument5 pagesAnova Table in Research Paperfyr90d7mNo ratings yet

- Writing The Results SectionDocument11 pagesWriting The Results SectionAlina CiabucaNo ratings yet

- Chapter 5 (Part Ii)Document20 pagesChapter 5 (Part Ii)Natasha GhazaliNo ratings yet

- Statistical TreatmentDocument16 pagesStatistical TreatmentMaria ArleneNo ratings yet

- An o Va (Anova) : Alysis F RianceDocument29 pagesAn o Va (Anova) : Alysis F RianceSajid AhmadNo ratings yet

- F TestDocument7 pagesF TestShamik MisraNo ratings yet

- Statistical TestsDocument9 pagesStatistical Testsalbao.elaine21No ratings yet

- Definition of A Measure of AssociationDocument9 pagesDefinition of A Measure of AssociationAlexis RenovillaNo ratings yet

- Chapter 11Document6 pagesChapter 11Khay OngNo ratings yet

- SmartPLS UtilitiesDocument11 pagesSmartPLS UtilitiesplsmanNo ratings yet

- Sample Thesis Using AnovaDocument6 pagesSample Thesis Using Anovapbfbkxgld100% (2)

- One Way AnovaDocument47 pagesOne Way AnovaMaimoona KayaniNo ratings yet

- Attachment 1 4Document10 pagesAttachment 1 4olga orbaseNo ratings yet

- Anovspss PDFDocument2 pagesAnovspss PDFkjithingNo ratings yet

- Textual Analysis of Big Data in Social Media Through Data Mining and Fuzzy Logic ProcessDocument9 pagesTextual Analysis of Big Data in Social Media Through Data Mining and Fuzzy Logic ProcessNers Nieva-Redan Pablo DitNo ratings yet
