Professional Documents

Culture Documents

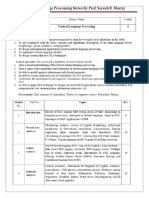

Natural Language Processing Lec 4

Natural language processing

Instructor: Touseef Sultan

Compositional Semantics

• Principle of Compositionality: the meaning of each whole phrase is derivable from the meaning of its parts.

• Sentence structure conveys some meaning.

• Deep grammars: model semantics alongside syntax, with one semantic composition rule per syntax rule.

Semantic composition is non-trivial

• Similar syntactic structures may have different meanings:

it barks

it rains; it snows (pleonastic pronouns)

• Different syntactic structures may have the same meaning:

Kim seems to sleep.

It seems that Kim sleeps.

• Not all phrases are interpreted compositionally, e.g. idioms:

red tape

kick the bucket

but they can be interpreted compositionally too, so we cannot simply block them.

Semantic composition is non-trivial

• Elliptical constructions where additional meaning arises through composition, e.g. logical metonymy:

fast programmer

fast plane

• Meaning transfer and additional connotations that arise through composition, e.g. metaphor:

I can't buy this story.

This sum will buy you a ride on the train.

• Recursion

Compositional semantic models

• 1. Compositional distributional semantics

I. model composition in a vector space

II. unsupervised

III. general-purpose representations

• 2. Compositional semantics in neural networks

I. supervised

II. (typically) task-specific representations

Compositional distributional semantics

Can distributional semantics be extended to account for the meaning of phrases and sentences?

• Language can have an infinite number of sentences, given a limited vocabulary

• So we cannot learn vectors for all phrases and sentences

• and need to do composition in a distributional space
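Composition in a distributional space can be sketched with element-wise vector operations. The example below assumes additive and multiplicative composition as the composition functions, which the slides do not specify. The co-occurrence counts are invented for illustration, and `compose_add`, `compose_mult` and `cosine` are hypothetical helper names.

```python
from math import sqrt

# Toy co-occurrence count vectors (invented numbers, three context dimensions).
VECTORS = {
    "fast": [4, 1, 2],
    "plane": [1, 5, 2],
    "programmer": [0, 2, 6],
}

def compose_add(u, v):
    """Additive composition: sum the two word vectors element-wise."""
    return [a + b for a, b in zip(u, v)]

def compose_mult(u, v):
    """Multiplicative composition: multiply the two word vectors element-wise."""
    return [a * b for a, b in zip(u, v)]

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

# Phrase vectors are built from word vectors, so no phrase vector has to be learned.
fast_plane = compose_add(VECTORS["fast"], VECTORS["plane"])
fast_programmer = compose_add(VECTORS["fast"], VECTORS["programmer"])
```

With these toy counts, the composed vector for "fast plane" stays closer to "plane" than the vector for "fast programmer" does, which is the kind of behaviour a composition function is expected to show.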

1) How do we learn a (task-specific) representation of a sentence with a neural network?

2) How do we make a prediction for a given task from that representation?

Semantic Role labeling

• In natural language processing, semantic role labeling (also called shallow semantic parsing or slot-filling) is the process that assigns labels to words or phrases in a sentence that indicate their semantic role in the sentence, such as that of an agent, goal, or result.

SRL

• SRL serves to find the meaning of a sentence. To do this, it detects the arguments associated with the predicate (the verb) of the sentence and classifies them into their specific roles. A common example is the sentence "Mary sold the book to John." The agent is "Mary," the predicate is "sold" (or rather, "to sell"), the theme is "the book," and the recipient is "John." Another example: "the book belongs to me" needs two labels such as "possessed" and "possessor," while "the book was sold to John" needs two other labels such as "theme" and "recipient," even though both clauses look alike in terms of the syntactic functions "subject" and "object."

SRL Uses

• Semantic role labeling is mostly used to let machines understand the roles of words within sentences. This benefits natural language processing applications that need to understand not just the words of a language but how they can be used in different sentences. A better understanding of semantic role labeling could lead to advances in question answering, information extraction, automatic text summarization, text data mining, and speech recognition.
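The role assignments from the "Mary sold the book to John" example can be written down as a small data structure. This is only a sketch of what SRL output might look like: the labels are assigned by hand rather than predicted by a system, and `Frame`, `label` and `phrases_with_role` are hypothetical names.

```python
from dataclasses import dataclass, field

@dataclass
class Frame:
    """A predicate together with its role-labelled arguments."""
    predicate: str
    roles: dict = field(default_factory=dict)

    def label(self, role, phrase):
        """Attach a semantic role to a phrase and return the frame for chaining."""
        self.roles[role] = phrase
        return self

# Hand-labelled frames for the sentences discussed in the slides.
sold = Frame("sell").label("agent", "Mary").label("theme", "the book").label("recipient", "John")
was_sold = Frame("sell").label("theme", "the book").label("recipient", "John")
belongs = Frame("belong").label("possessed", "the book").label("possessor", "me")

def phrases_with_role(frames, role):
    """Collect the phrases that carry a given role across several frames."""
    return [f.roles[role] for f in frames if role in f.roles]
```

"The book" is the syntactic subject in both "the book belongs to me" and "the book was sold to John", yet it is stored under different roles ("possessed" and "theme"), which is the distinction SRL is meant to capture.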

Word sense disambiguation

• Words have different meanings depending on the context in which they are used. Human languages are therefore ambiguous: many words can be interpreted in multiple ways depending on the context of their occurrence.

• Word sense disambiguation (WSD), in natural language processing (NLP), may be defined as the ability to determine which meaning of a word is activated by its use in a particular context. Lexical ambiguity, syntactic or semantic, is one of the very first problems that any NLP system faces. Part-of-speech (POS) taggers with a high level of accuracy can resolve a word's syntactic ambiguity. The problem of resolving semantic ambiguity, on the other hand, is called word sense disambiguation, and it is harder than resolving syntactic ambiguity.

• For example, consider two distinct senses of the word "bass":

• I can hear bass sound.

• He likes to eat grilled bass.

• The two occurrences of the word bass clearly have distinct meanings. In the first sentence it refers to frequency, and in the second it refers to a fish. After WSD, the sentences can be annotated with the correct senses as follows:

• I can hear bass/frequency sound.

• He likes to eat grilled bass/fish.

Evaluation of WSD

The evaluation of WSD requires the following two inputs:

A Dictionary

• The first input for the evaluation of WSD is a dictionary, which is used to specify the senses to be disambiguated.

Test Corpus

• The other input required is a sense-annotated test corpus that has the target (correct) senses. Test corpora can be of two types:

• Lexical sample: this kind of corpus is used when the system is required to disambiguate a small sample of words.

• All-words: this kind of corpus is used when the system is expected to disambiguate all the words in a piece of running text.
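Given the two inputs above, one common way to score a system is to compare its predicted senses with the gold senses in the test corpus. The sketch below assumes plain accuracy as the metric, which the slides do not name, and uses an invented lexical sample for the target word "bass".

```python
def accuracy(gold, predicted):
    """Fraction of test instances whose predicted sense equals the gold sense."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    if not gold:
        raise ValueError("the test corpus is empty")
    correct = sum(1 for g, p in zip(gold, predicted) if g == p)
    return correct / len(gold)

# Invented lexical-sample test corpus: four occurrences of "bass".
gold_senses = ["frequency", "fish", "fish", "frequency"]
predicted_senses = ["frequency", "fish", "frequency", "frequency"]

score = accuracy(gold_senses, predicted_senses)  # 3 of 4 instances are correct
```

The sense labels here must come from the same dictionary for both lists, otherwise the comparison is meaningless.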

Approaches and Methods to Word Sense Disambiguation (WSD)

• Approaches and methods to WSD are classified according to the source of knowledge used in word disambiguation.

• Let us now see the four conventional methods for WSD:

1-Dictionary-based or Knowledge-based Methods

• As the name suggests, these methods rely primarily on dictionaries, thesauri and lexical knowledge bases for disambiguation. They do not use corpus evidence. The Lesk method is the seminal dictionary-based method, introduced by Michael Lesk in 1986. The Lesk definition, on which the Lesk algorithm is based, is "measure overlap between sense definitions for all words in context". In 2000, Kilgarriff and Rosenzweig gave the simplified Lesk definition as "measure overlap between sense definitions of word and current context", which means identifying the correct sense for one word at a time. Here the current context is the set of words in the surrounding sentence or paragraph.
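The simplified Lesk definition can be sketched in a few lines: count the words shared by each sense definition and the current context, then pick the sense with the largest overlap. The two glosses for "bass" and the stopword list are written for this example and do not come from a real dictionary. `simplified_lesk` and `content_words` are hypothetical names.

```python
# Small hand-picked stopword list so that function words do not count as overlap.
STOPWORDS = {"a", "an", "the", "of", "to", "in", "and", "or", "i", "he", "can", "that", "is"}

# Hypothetical sense inventory: sense label -> dictionary-style definition.
SENSES = {
    "bass/frequency": "the lowest range of sound or musical tones that you hear",
    "bass/fish": "a fish that people catch and eat often grilled or fried",
}

def content_words(text):
    """Lower-case the text, strip punctuation and drop stopwords."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}

def simplified_lesk(context, senses):
    """Return the sense whose definition shares the most words with the context."""
    context_words = content_words(context)
    best_sense, best_overlap = None, -1
    for sense, gloss in senses.items():
        overlap = len(context_words & content_words(gloss))
        if overlap > best_overlap:  # ties keep the sense listed first
            best_sense, best_overlap = sense, overlap
    return best_sense

first = simplified_lesk("I can hear bass sound.", SENSES)
second = simplified_lesk("He likes to eat grilled bass.", SENSES)
```

Here the first sentence shares "hear" and "sound" with the frequency gloss, and the second shares "eat" and "grilled" with the fish gloss. With real dictionary definitions the overlap is often much smaller, so results depend heavily on how the glosses are worded.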

2-Supervised Methods

For disambiguation, supervised machine learning methods train on sense-annotated corpora. These methods assume that the context can provide enough evidence on its own to disambiguate the sense, so world knowledge and reasoning are deemed unnecessary. The context is represented as a set of "features" of the words, including information about the surrounding words. Support vector machines and memory-based learning are the most successful supervised learning approaches to WSD. These methods rely on a substantial amount of manually sense-tagged corpora, which are very expensive to create.
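Memory-based learning can be illustrated with a nearest-neighbour classifier over bag-of-words context features. This is a minimal sketch under strong assumptions: the four training sentences are invented, similarity is plain word overlap, and `features` and `classify` are hypothetical names. A realistic system would use far more data and richer features.

```python
from collections import Counter

def features(sentence, target):
    """Represent the context as the set of surrounding words (target excluded)."""
    words = [w.strip(".,;:!?").lower() for w in sentence.split()]
    return {w for w in words if w and w != target}

# Tiny invented sense-annotated corpus: (sentence, sense of "bass").
TRAINING = [
    ("The bass line shook the speakers", "frequency"),
    ("Turn up the bass on the stereo speakers", "frequency"),
    ("He caught a bass in the lake", "fish"),
    ("We grilled the bass we caught for dinner", "fish"),
]

def classify(sentence, target, training, k=1):
    """Vote among the k stored examples whose contexts overlap most with the query."""
    query = features(sentence, target)
    ranked = sorted(
        training,
        key=lambda example: len(query & features(example[0], target)),
        reverse=True,
    )
    votes = Counter(sense for _, sense in ranked[:k])
    return votes.most_common(1)[0][0]

fish_guess = classify("She grilled a bass from the lake", "bass", TRAINING)
sound_guess = classify("The stereo has too much bass", "bass", TRAINING)
```

The classifier stores the training examples as they are and does all its work at prediction time, which is why this family of methods is called memory-based.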

3-Semi-supervised Methods

Because sense-annotated training corpora are scarce, most word sense disambiguation algorithms use semi-supervised learning methods, which use both labelled and unlabelled data. These methods require a very small amount of annotated text and a large amount of plain unannotated text. The technique used by semi-supervised methods is bootstrapping from seed data.
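Bootstrapping from seed data can be sketched as a loop: label the sentences that contain a known indicator word, learn new indicator words from those sentences, and repeat. The version below is a deliberately simplified assumption of how such a loop might look. The seeds, sentences and stopword list are invented, and `bootstrap` is a hypothetical name.

```python
STOPWORDS = {"a", "the", "he", "my", "at", "while", "across"}

def tokens(sentence):
    """Lower-cased words of a sentence with punctuation stripped."""
    return {w.strip(".,;:!?").lower() for w in sentence.split()} - {""}

def bootstrap(seeds, unlabeled, target, rounds=3):
    """Grow sense labels from seed indicator words (word -> sense)."""
    indicators = dict(seeds)
    labels = {}
    for _ in range(rounds):
        newly_labeled = []
        for sentence in unlabeled:
            if sentence in labels:
                continue
            senses = {indicators[w] for w in tokens(sentence) if w in indicators}
            if len(senses) == 1:  # label only when the evidence points to one sense
                labels[sentence] = senses.pop()
                newly_labeled.append(sentence)
        if not newly_labeled:
            break
        # Words from newly labelled sentences become indicators for later rounds.
        for sentence in newly_labeled:
            for word in tokens(sentence) - STOPWORDS - {target}:
                indicators.setdefault(word, labels[sentence])
    return labels, indicators

UNLABELED = [
    "He caught a bass while fishing at the lake",
    "The bass swam across the lake",
    "The band played loud bass music",
    "The loud bass hurt my ears",
]
labels, indicators = bootstrap({"fishing": "fish", "music": "frequency"}, UNLABELED, "bass")
```

Only two seed words are given, yet the second and fourth sentences are labelled in the second round through the learned indicators "lake" and "loud". A wrong label early on would spread in the same way, which is the main risk of bootstrapping.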

4-Unsupervised Methods

These methods assume that similar senses occur in similar contexts. Senses can therefore be induced from text by clustering word occurrences using some measure of similarity of context. This task is called word sense induction or discrimination. Unsupervised methods have great potential to overcome the knowledge acquisition bottleneck because they do not depend on manual effort.
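Word sense induction can be sketched by clustering the contexts of a target word. The example assumes Jaccard overlap as the similarity measure and a greedy single-link clustering with a fixed threshold. Both choices are illustrative, not prescribed by the slides, and `induce_senses` is a hypothetical name.

```python
STOPWORDS = {"a", "the", "he", "my", "at", "in"}

def context(sentence, target):
    """Content words surrounding the target word."""
    words = {w.strip(".,;:!?").lower() for w in sentence.split()}
    return words - STOPWORDS - {target, ""}

def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def induce_senses(sentences, target, threshold=0.2):
    """Greedily group occurrences whose contexts are similar enough."""
    clusters = []  # each cluster is a list of (sentence, context) pairs
    for sentence in sentences:
        ctx = context(sentence, target)
        best, best_sim = None, threshold
        for cluster in clusters:
            sim = max(jaccard(ctx, other) for _, other in cluster)
            if sim >= best_sim:
                best, best_sim = cluster, sim
        if best is None:
            clusters.append([(sentence, ctx)])  # start a new induced sense
        else:
            best.append((sentence, ctx))
    return [[s for s, _ in cluster] for cluster in clusters]

OCCURRENCES = [
    "He caught a bass at the lake",
    "The band played loud bass music",
    "A bass swam in the lake",
    "Loud bass music hurt my ears",
]
induced = induce_senses(OCCURRENCES, "bass")
```

The clusters come out unnamed: the method separates the fish contexts from the music contexts without any sense labels, so mapping a cluster to a dictionary sense is a separate step.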

Applications of Word Sense Disambiguation (WSD)

Word sense disambiguation (WSD) is applied in almost every application of language technology.

Let us now see the scope of WSD:

• Machine Translation

Machine translation (MT) is the most obvious application of WSD. In MT, WSD performs lexical choice for words that have distinct translations for different senses. The senses in MT are represented as words in the target language. Most machine translation systems, however, do not use an explicit WSD module.

• Lexicography

WSD and lexicography can work together in a loop because modern lexicography is corpus-based. For lexicography, WSD provides rough empirical sense groupings as well as statistically significant contextual indicators of sense.

• Information Retrieval (IR)

An information retrieval (IR) system may be defined as a software program that deals with the organization, storage, retrieval and evaluation of information from document repositories, particularly textual information. The system assists users in finding the information they require, but it does not explicitly return answers to their questions. WSD is used to resolve ambiguities in the queries given to an IR system. As with MT, current IR systems do not explicitly use a WSD module; they rely on the user typing enough context in the query to retrieve only relevant documents.

• Text Mining and Information Extraction (IE)

In most applications, WSD is necessary for accurate analysis of text. For example, WSD helps an intelligent gathering system flag the correct words: a medical intelligent system might need to flag "illegal drugs" rather than "medical drugs".

Difficulties in Word Sense Disambiguation (WSD)

• The following are some difficulties faced by word sense disambiguation (WSD):

• Differences between dictionaries

The major problem of WSD is deciding the sense of a word, because different senses can be very closely related. Even different dictionaries and thesauri can provide different divisions of words into senses.

• Different algorithms for different applications

Another problem of WSD is that a completely different algorithm might be needed for different applications. For example, in machine translation WSD takes the form of target word selection, while in information retrieval a sense inventory is not required.

• Inter-judge variance

Another problem is that WSD systems are generally tested by comparing their results on a task against the judgments of human beings, and human judges do not always agree with one another. This is called the problem of inter-judge variance.

• Word-sense discreteness

Another difficulty in WSD is that words cannot be easily divided into discrete sub-meanings.
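Inter-judge variance can be quantified by measuring how often two human annotators assign the same sense. The sketch below computes raw agreement and Cohen's kappa, which corrects for agreement expected by chance. Kappa is one common choice and an assumption here, since the slides do not name a measure. The two annotation lists are invented.

```python
from collections import Counter

def observed_agreement(a, b):
    """Proportion of items on which the two annotators chose the same sense."""
    if len(a) != len(b) or not a:
        raise ValueError("annotations must be non-empty and of equal length")
    return sum(1 for x, y in zip(a, b) if x == y) / len(a)

def cohen_kappa(a, b):
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = observed_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability that both pick the same sense independently.
    p_e = sum(count_a[sense] * count_b[sense] for sense in count_a) / (n * n)
    if p_e == 1:
        return 1.0  # both annotators used one and the same label throughout
    return (p_o - p_e) / (1 - p_e)

# Invented sense tags from two annotators for six occurrences of "bass".
judge_a = ["fish", "fish", "frequency", "frequency", "fish", "frequency"]
judge_b = ["fish", "frequency", "frequency", "frequency", "fish", "fish"]

raw = observed_agreement(judge_a, judge_b)
kappa = cohen_kappa(judge_a, judge_b)
```

In this toy data the judges agree on four of six items, but because half of that agreement is expected by chance, kappa is only about 0.33. Human agreement of this kind puts a practical ceiling on the score a WSD system can be expected to reach on the same data.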
