Data Structures Using

C, 2e

Reema Thareja

© Oxford University Press 2014. All rights reserved.

Chapter 2

Introduction to Data

Structures and Algorithms


Introduction

• A data structure is an arrangement of data either in the computer's memory or on disk storage.

• Some common examples of data structures are arrays, linked

lists, queues, stacks, binary trees, graphs, and hash tables.

• Data structures are widely applied in areas like:

Compiler design

Operating system

Statistical analysis package

DBMS

Numerical analysis

Simulation

Artificial Intelligence


Classification of Data Structures

• Primitive data structures are the fundamental data types which

are supported by a programming language. Some basic data types

are integer, real, and boolean. The terms ‘data type’, ‘basic data

type’, and ‘primitive data type’ are often used interchangeably.

• Non-primitive data structures are those data structures which are

created using primitive data structures. Examples of such data

structures include linked lists, stacks, trees, and graphs.

• Non-primitive data structures can further be classified into two

categories: linear and non-linear data structures.


Classification of Data Structures

• If the elements of a data structure are stored in a linear or

sequential order, then it is a linear data structure. Examples are

arrays, linked lists, stacks, and queues.

• If the elements of a data structure are not stored in a sequential

order, then it is a non-linear data structure. Examples are trees

and graphs.


Arrays

• An array is a collection of similar data elements.

• The elements of an array are stored in consecutive memory locations

and are referenced by an index (also known as the subscript).

• Arrays are declared using the following syntax:

type name[size];

Figure: the array marks[10], whose ten elements are stored at indices 0 to 9.

1st      2nd      3rd      4th      5th      6th      7th      8th      9th      10th
marks[0] marks[1] marks[2] marks[3] marks[4] marks[5] marks[6] marks[7] marks[8] marks[9]
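As a minimal sketch of indexed access, the C function below walks an array such as marks from index 0 to n-1 and averages its contents. The helper name average_marks is an illustrative assumption and does not come from the slides.

```c
#include <stddef.h>

/* Illustrative helper (not from the source): returns the average of
   the first n elements of an int array, or 0.0 when n is 0. */
double average_marks(const int marks[], size_t n)
{
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum += marks[i];   /* marks[i] is the (i+1)-th element */
    }
    return n ? (double)sum / (double)n : 0.0;
}
```

A caller would declare the array with the syntax shown above, for example int marks[10];, fill it, and then call average_marks(marks, 10).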


Linked Lists

• A linked list is a very flexible dynamic data structure in which elements

can be added to or deleted from anywhere.

• In a linked list, each element (called a node) is allocated space as it is

added to the list.

• Every node in the list points to the next node in the list. Therefore, in a

linked list every node contains two types of information:

The data stored in the node

A pointer or link to the next node in the list
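The two pieces of information held by a node can be sketched in C as a self-referential structure. The names node, insert_front, list_length, and free_list are illustrative assumptions, and inserting at the front is only one of the positions where a node may be added.

```c
#include <stdlib.h>

/* A node holds the data and a pointer (link) to the next node. */
struct node {
    int data;
    struct node *next;
};

/* Allocates a node as it is added and links it in front of head.
   Returns the new head, or NULL if allocation fails (the original
   list is left untouched in that case). */
struct node *insert_front(struct node *head, int value)
{
    struct node *n = malloc(sizeof *n);
    if (n == NULL) {
        return NULL;
    }
    n->data = value;
    n->next = head;
    return n;
}

/* Counts the nodes by following the next pointers until NULL. */
size_t list_length(const struct node *head)
{
    size_t count = 0;
    for (; head != NULL; head = head->next) {
        count++;
    }
    return count;
}

/* Releases every node in the list. */
void free_list(struct node *head)
{
    while (head != NULL) {
        struct node *next = head->next;
        free(head);
        head = next;
    }
}
```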


Stack

• A stack is a last-in, first-out (LIFO) data structure in which insertion and deletion of elements are done only at one end, known as the TOP of the stack.

• Every stack has a variable TOP associated with it, which stores the position of the topmost element of the stack (an index in an array, or an address in a linked list).

• If TOP = NULL, the stack is empty. In an array-based implementation, where TOP is an index, the empty state is usually represented as TOP = -1.

• If TOP = MAX-1, where MAX is the capacity of the array, then the stack is full.

Figure: an array-based stack with ten slots (indices 0 to 9). Slots 0 to 4 hold A, AB, ABC, ABCD, and ABCDE, so TOP = 4.
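The empty and full conditions can be sketched with an array-based stack in C. This is one possible layout under stated assumptions: TOP is kept as an index with -1 standing for the empty state, MAX is fixed at 10, and the names struct stack, stack_push, and stack_pop are illustrative.

```c
#include <stdbool.h>

#define MAX 10   /* capacity; assumed value for this sketch */

struct stack {
    int items[MAX];
    int top;     /* index of the topmost element, -1 when empty */
};

void stack_init(struct stack *s)           { s->top = -1; }
bool stack_is_empty(const struct stack *s) { return s->top == -1; }
bool stack_is_full(const struct stack *s)  { return s->top == MAX - 1; }

/* Pushes value onto the top; returns false on overflow. */
bool stack_push(struct stack *s, int value)
{
    if (stack_is_full(s)) {
        return false;
    }
    s->items[++s->top] = value;
    return true;
}

/* Pops the topmost element into *out; returns false on underflow. */
bool stack_pop(struct stack *s, int *out)
{
    if (stack_is_empty(s)) {
        return false;
    }
    *out = s->items[s->top--];
    return true;
}
```

Both insertion and deletion touch only items[top], which is what makes the structure last-in, first-out.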


Queue

• A queue is a FIFO (first-in, first-out) data structure in which the

element that is inserted first is the first one to be taken out.

• The elements in a queue are added at one end, called the REAR, and removed from the other end, called the FRONT.

• When REAR = MAX - 1, the queue is full.

• If FRONT = NULL and REAR = NULL, there is no element in the queue.

Figure: an array-based queue with ten slots (indices 0 to 9). Slots 1 to 6 hold 9, 7, 18, 14, 36, and 45, so FRONT = 1 and REAR = 6.
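A simple linear queue over an array can be sketched as follows. The sketch assumes that FRONT and REAR are indices, that -1 stands in for the empty (NULL) state, and that MAX is 10; the names struct queue, enqueue, and dequeue are illustrative.

```c
#include <stdbool.h>

#define MAX 10   /* capacity; assumed value for this sketch */

struct queue {
    int items[MAX];
    int front;   /* index of the next element to remove, -1 when empty */
    int rear;    /* index of the last element added, -1 when empty */
};

void queue_init(struct queue *q)           { q->front = q->rear = -1; }
bool queue_is_empty(const struct queue *q) { return q->front == -1; }
bool queue_is_full(const struct queue *q)  { return q->rear == MAX - 1; }

/* Adds value at the REAR; returns false when REAR = MAX - 1. */
bool enqueue(struct queue *q, int value)
{
    if (queue_is_full(q)) {
        return false;
    }
    if (q->front == -1) {
        q->front = 0;            /* first element ever added */
    }
    q->items[++q->rear] = value;
    return true;
}

/* Removes the element at the FRONT into *out; false when empty. */
bool dequeue(struct queue *q, int *out)
{
    if (queue_is_empty(q)) {
        return false;
    }
    *out = q->items[q->front];
    if (q->front == q->rear) {
        q->front = q->rear = -1; /* last element removed: reset */
    } else {
        q->front++;
    }
    return true;
}
```

In this linear layout, slots freed at the front are not reused until the queue becomes empty, which is why the full test depends only on REAR.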


Tree

• A tree is a non-linear data structure which consists of a collection of nodes arranged in a

hierarchical order.

• One of the nodes is designated as the root node, and the remaining nodes can be

partitioned into disjoint sets such that each set is the sub-tree of the root.

• A binary tree is the simplest form of tree, consisting of a root node and left and right sub-trees.

• The root element is pointed to by the 'root' pointer.

• If root = NULL, then the tree is empty.

Figure: a binary tree with root node 1, sub-trees T1 and T2 rooted at its children 2 and 3, and further nodes numbered 4 to 12.
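A binary tree node and the empty-tree check can be sketched in C as below. The names tree_node, new_tree_node, count_nodes, and free_tree are illustrative assumptions; the recursion mirrors the definition of a tree as a root plus disjoint sub-trees.

```c
#include <stdlib.h>

/* A binary tree node: data plus links to the left and right sub-trees. */
struct tree_node {
    int data;
    struct tree_node *left;
    struct tree_node *right;
};

/* Allocates a leaf node; returns NULL if allocation fails. */
struct tree_node *new_tree_node(int value)
{
    struct tree_node *n = malloc(sizeof *n);
    if (n != NULL) {
        n->data = value;
        n->left = NULL;
        n->right = NULL;
    }
    return n;
}

/* root == NULL means the (sub-)tree is empty and contributes 0 nodes. */
size_t count_nodes(const struct tree_node *root)
{
    if (root == NULL) {
        return 0;
    }
    return 1 + count_nodes(root->left) + count_nodes(root->right);
}

/* Frees both sub-trees before the root itself. */
void free_tree(struct tree_node *root)
{
    if (root == NULL) {
        return;
    }
    free_tree(root->left);
    free_tree(root->right);
    free(root);
}
```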


Graph

• A graph is a non-linear data structure which is a collection of vertices (also

called nodes) and edges that connect these vertices.

• A graph is often viewed as a generalization of the tree structure in which, instead of a purely parent-to-child relationship between nodes, any kind of complex relationship can exist.

• Every node in the graph can be connected with any other node.

• When two nodes are connected via an edge, the two nodes are known as

neighbors.
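One common way to store such a graph is an adjacency matrix, sketched below for an undirected graph. The slides do not prescribe a representation, so the matrix layout, the fixed MAX_VERTICES of 5, and the function names are all assumptions made for illustration.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_VERTICES 5   /* assumed size for this sketch */

/* adj[u][v] is true when an edge connects vertices u and v. */
struct graph {
    bool adj[MAX_VERTICES][MAX_VERTICES];
};

/* Starts with no edges at all. */
void graph_init(struct graph *g)
{
    memset(g->adj, 0, sizeof g->adj);
}

static bool vertex_ok(int v)
{
    return v >= 0 && v < MAX_VERTICES;
}

/* Connects u and v in both directions; false if an index is invalid. */
bool graph_add_edge(struct graph *g, int u, int v)
{
    if (!vertex_ok(u) || !vertex_ok(v)) {
        return false;
    }
    g->adj[u][v] = true;
    g->adj[v][u] = true;
    return true;
}

/* Two vertices are neighbors when an edge connects them. */
bool graph_are_neighbors(const struct graph *g, int u, int v)
{
    return vertex_ok(u) && vertex_ok(v) && g->adj[u][v];
}
```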


Abstract Data Type

• An Abstract Data Type (ADT) is a way of looking at a data structure that focuses on what it does and ignores how it does its job.

• Stacks and queues are typical examples of abstract data types. Both can be implemented using either an array or a linked list, which demonstrates the "abstract" nature of stacks and queues.


Abstract Data Type

• In C, an Abstract Data Type can be a structure considered without

regard to its implementation. It can be thought of as a "description"

of the data in the structure with a list of operations that can be

performed on the data within that structure.

• The end user is not concerned about the details of how the methods

carry out their tasks. They are only aware of the methods that are

available to them and are concerned only about calling those

methods and getting back the results but not HOW they work.
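In C, this separation is often approximated with an opaque structure: callers see only the type name and the operations, while the layout stays private. The sketch below is one such arrangement for a stack of integers, here backed by a linked list. The int_stack name and its four functions are hypothetical, and in a real project the interface part would normally live in a header file.

```c
#include <stdbool.h>
#include <stdlib.h>

/* ---- Interface: everything a caller needs to know ---- */
typedef struct int_stack int_stack;          /* opaque type */
int_stack *int_stack_create(void);
bool int_stack_push(int_stack *s, int value);
bool int_stack_pop(int_stack *s, int *out);
void int_stack_destroy(int_stack *s);

/* ---- One possible implementation: a linked list ---- */
struct cell {
    int value;
    struct cell *below;
};

struct int_stack {
    struct cell *top;    /* NULL when the stack is empty */
};

int_stack *int_stack_create(void)
{
    int_stack *s = malloc(sizeof *s);
    if (s != NULL) {
        s->top = NULL;
    }
    return s;
}

bool int_stack_push(int_stack *s, int value)
{
    struct cell *c = malloc(sizeof *c);
    if (c == NULL) {
        return false;
    }
    c->value = value;
    c->below = s->top;
    s->top = c;
    return true;
}

bool int_stack_pop(int_stack *s, int *out)
{
    struct cell *c = s->top;
    if (c == NULL) {
        return false;
    }
    *out = c->value;
    s->top = c->below;
    free(c);
    return true;
}

void int_stack_destroy(int_stack *s)
{
    int ignored;
    while (int_stack_pop(s, &ignored)) {
        /* discard remaining elements */
    }
    free(s);
}
```

Replacing the linked list with an array would change only the lower half of the sketch; code written against the four interface functions would not need to change.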


Algorithm

• An "algorithm" is a formally defined procedure for performing some calculation. It provides a blueprint for writing a program to solve a particular problem.

• It is considered to be an effective procedure for solving a problem in a finite number of steps. That is, a well-defined algorithm always provides an answer and is guaranteed to terminate.

• Algorithms are mainly used to achieve software re-use. Once we have an idea or a blueprint of a solution, we can implement it in any high-level language such as C, C++, or Java.


Algorithm

Write an algorithm to find whether a number is even or odd.

Step 1: Input the number as A
Step 2: IF A % 2 = 0
            PRINT "EVEN"
        ELSE
            PRINT "ODD"
Step 3: END
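The steps above translate almost directly into C. In this sketch the input step is replaced by a function parameter so that the logic can be reused; the function names is_even and parity are illustrative assumptions.

```c
#include <stdbool.h>

/* Step 2 of the algorithm: A is even when A % 2 equals 0.
   In C99 and later, a negative odd A gives a remainder of -1,
   which is still non-zero, so the test also holds for negatives. */
bool is_even(int a)
{
    return a % 2 == 0;
}

/* Returns the text the algorithm would print for a. */
const char *parity(int a)
{
    return is_even(a) ? "EVEN" : "ODD";
}
```

A complete program would read A with scanf and print the result of parity(A) with printf.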


Time and Space Complexity of Algorithm

• To analyze an algorithm means determining the amount of

resources (such as time and storage) needed to execute it.

• Efficiency or complexity of an algorithm is stated in terms of time

complexity and space complexity.

• Time complexity of an algorithm is basically the running time of the

program as a function of the input size.

• Similarly, space complexity of an algorithm is the amount of

computer memory required during the program execution, as a

function of the input size.


Time and Space Complexity of Algorithm

• Time complexity of an algorithm depends on the number of

instructions executed. This number is primarily dependent on the

size of the program's input and the algorithm used.

• The space needed by a program has two parts:

A fixed part, which includes the space needed for storing instructions, constants, variables, and structured variables.

A variable part, which includes the space needed for the recursion stack and for structured variables that are allocated space dynamically during the run-time of the program.


Calculating Algorithm Efficiency

• The efficiency of an algorithm is expressed in terms of the number of elements that have to be processed. So, if n is the number of elements, then the efficiency can be stated as Efficiency = f(n).

• If a function is linear (that is, without any loops or recursion), its running time can be given as the number of instructions it contains.

• If an algorithm contains loops or recursive functions, its efficiency varies depending on the number of loops and the running time of each loop.


Calculating Algorithm Efficiency

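Linear loops

    for(i=0;i<100;i++)
        statement block;

The statement block runs 100 times. For a loop bound of n, f(n) = n.

Logarithmic loops

    for(i=1;i<1000;i*=2)
        statement block;

    for(i=1000;i>=1;i/=2)
        statement block;

Because i is doubled or halved on every pass, each statement block runs only 10 times, which is about log2 1000. For a loop bound of n, f(n) = log n.

Nested loops: linear logarithmic

    for(i=0;i<10;i++)
        for(j=1;j<10;j*=2)
            statement block;

The inner loop runs about log n times for each of the n passes of the outer loop, so f(n) = n log n.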
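To check these counts, the three loop shapes can be wrapped in C functions that count how often the statement block would run. This is a sketch with illustrative function names; the doubling loops assume n is at most half of ULONG_MAX so that i *= 2 cannot wrap around.

```c
/* Linear loop: the body runs once for each i in 0 .. n-1. */
unsigned long linear_iterations(unsigned long n)
{
    unsigned long count = 0;
    for (unsigned long i = 0; i < n; i++) {
        count++;
    }
    return count;
}

/* Logarithmic loop: i takes the values 1, 2, 4, ... while i < n.
   Assumes n <= ULONG_MAX / 2 so that doubling cannot overflow. */
unsigned long log_iterations(unsigned long n)
{
    unsigned long count = 0;
    for (unsigned long i = 1; i < n; i *= 2) {
        count++;
    }
    return count;
}

/* Linear logarithmic: a logarithmic loop nested in a linear loop. */
unsigned long linear_log_iterations(unsigned long n)
{
    unsigned long count = 0;
    for (unsigned long i = 0; i < n; i++) {
        for (unsigned long j = 1; j < n; j *= 2) {
            count++;
        }
    }
    return count;
}
```

With the bounds used on the slide, linear_iterations(100) gives 100, log_iterations(1000) gives 10, and linear_log_iterations(10) gives 40 (10 outer passes of 4 inner passes each).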


Big O Notation

• Big-O notation, where the "O" stands for "order of", is concerned

with what happens for very large values of n.

• For example, if a sorting algorithm performs n^2 operations to sort just n elements, then that algorithm would be described as an O(n^2) algorithm.

• When expressing complexity using Big O notation, constant multipliers are ignored. So an O(4n) algorithm is equivalent to O(n), which is how it should be written.


Big O Notation

• If f(n) and g(n) are functions defined on the positive integers n, then we write

f(n) = O(g(n))

(read "f of n is big O of g of n") if and only if there exist positive constants c and n_0 such that

f(n) ≤ c·g(n) for all n ≥ n_0

• This means that, for large amounts of data, f(n) grows no faster than a constant multiple of g(n). Hence, g provides an upper bound.
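As a short worked example of this definition, take f(n) = 4n + 3 and g(n) = n. The function and the constants c = 5 and n_0 = 3 are chosen here purely for illustration; other choices also work.

```latex
\begin{align*}
f(n) &= 4n + 3, \qquad g(n) = n \\
3 &\le n \quad \text{for all } n \ge 3 \\
\Rightarrow\quad 4n + 3 &\le 4n + n = 5n = c \cdot g(n)
  \quad \text{with } c = 5,\ n_0 = 3
\end{align*}
```

Hence 4n + 3 = O(n): the constant multiplier and the lower-order term do not affect the order of growth.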


Categories of Algorithms

• Constant time algorithms have running time complexity given as O(1)

• Linear time algorithms have running time complexity given as O(n)

• Logarithmic time algorithms have running time complexity given as O(log n)

• Polynomial time algorithms have running time complexity given as O(n^k) where k > 1

• Exponential time algorithms have running time complexity given as O(2^n)

Number of operations for different input sizes (logarithms are to base 2, so log 1 = 0):

n     O(1)   O(log n)   O(n)   O(n log n)   O(n^2)   O(n^3)
1     1      0          1      0            1        1
2     1      1          2      2            4        8
4     1      2          4      8            16       64
8     1      3          8      24           64       512
16    1      4          16     64           256      4,096


Omega Notation

• Omega notation provides an asymptotic lower bound for f(n). This means that the function can never do better than the specified value, but it may do worse.

• Ω notation is written as f(n) ∈ Ω(g(n)), where n is the problem size and Ω(g(n)) = {h(n): ∃ positive constants c and n_0 such that 0 ≤ c·g(n) ≤ h(n), ∀ n ≥ n_0}.

• Examples of functions in Ω(n^2) include: n^2, n^2.9, n^3 + n, 540n^2 + 10

• Examples of functions not in Ω(n^3) include: n, n^2.9, n^2


Theta Notation

• Theta notation provides an asymptotically tight bound for f(n).

• Θ notation is written as f(n) ∈ Θ(g(n)), where n is the problem size and Θ(g(n)) = {h(n): ∃ positive constants c_1, c_2, and n_0 such that 0 ≤ c_1·g(n) ≤ h(n) ≤ c_2·g(n), ∀ n ≥ n_0}.

• Hence, Θ(g(n)) is the set of all functions h(n) that lie between c_1·g(n) and c_2·g(n) for all values of n ≥ n_0.

• To summarize,

The best case in Θ notation is not used.

Worst case Θ describes asymptotic bounds for the worst-case combination of input values.

If we simply write Θ, it means the same as worst case Θ.
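As a worked example of the two-sided bound, take h(n) = 3n^2 + 2n and g(n) = n^2. The function and the constants c_1 = 3, c_2 = 5, and n_0 = 1 are chosen here for illustration only.

```latex
\begin{align*}
h(n) &= 3n^2 + 2n, \qquad g(n) = n^2 \\
3n^2 &\le 3n^2 + 2n
  && \text{since } 2n \ge 0 \\
3n^2 + 2n &\le 3n^2 + 2n^2 = 5n^2
  && \text{since } n \le n^2 \text{ for } n \ge 1
\end{align*}
```

So 0 ≤ 3·g(n) ≤ h(n) ≤ 5·g(n) for all n ≥ 1, which places 3n^2 + 2n in Θ(n^2).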


Little o Notation

• Little o notation provides an upper bound for f(n) that is not asymptotically tight.

• To express a function using this notation, we write f(n) ∈ o(g(n)), where o(g(n)) = {h(n): for any constant c > 0, ∃ a constant n_0 > 0 such that 0 ≤ h(n) ≤ c·g(n), ∀ n ≥ n_0}.

• This is unlike the Big O notation, where the bound need only hold for some c > 0 (not any).

• For example, 5n^3 = O(n^3) is an asymptotically tight upper bound, but 5n^2 = o(n^3) is an upper bound that is not asymptotically tight.

• Examples of functions in o(n^3) include: n^2.9, n^3 / log n, 2n^2

• Examples of functions not in o(n^3) include: 3n^3, n^3, n^3 / 1000


Little Omega Notation

• Little Omega notation provides a lower bound for f(n) that is not asymptotically tight.

• It is written as f(n) ∈ ω(g(n)), where ω(g(n)) = {h(n): for any constant c > 0, ∃ a constant n_0 > 0 such that 0 ≤ c·g(n) < h(n), ∀ n ≥ n_0}.

• This is unlike the Ω notation, where the bound need only hold for some c > 0 (not any).

• For example, 5n^3 = Ω(n^3) is an asymptotically tight lower bound, but 5n^3 = ω(n^2) is a lower bound that is not asymptotically tight.

• Examples of functions in ω(g(n)) include: n^3 = ω(n^2), n^3.001 = ω(n^3), n^2 log n = ω(n^2)

• An example of a function not in ω(g(n)) is 5n^2 ≠ ω(n^2), just as 5 is not strictly greater than 5.

© Oxford University Press 2014. All rights reserved.

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (589)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (842)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5807)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Confession GuideDocument8 pagesConfession Guideapi-231988404No ratings yet

- Sherry B. Ortner - Is Female To Male As Nature Is Tu Culture (Rosaldo & Lamphere, 1974)Document21 pagesSherry B. Ortner - Is Female To Male As Nature Is Tu Culture (Rosaldo & Lamphere, 1974)P. A. F. JiménezNo ratings yet

- Spouses Plaza V Lustiva Case DigestDocument2 pagesSpouses Plaza V Lustiva Case DigestLouNo ratings yet

- Mrunal Daily CurrentDocument764 pagesMrunal Daily CurrentShyam Ji RanaNo ratings yet

- Coding PDFDocument1 pageCoding PDFShyam Ji RanaNo ratings yet

- My Placement Status (Autosaved) DRIVEDocument250 pagesMy Placement Status (Autosaved) DRIVEShyam Ji RanaNo ratings yet

- Experiment Number 4 (A) Binary Frequency Shift Keying Modulator (B-FSK)Document4 pagesExperiment Number 4 (A) Binary Frequency Shift Keying Modulator (B-FSK)Shyam Ji RanaNo ratings yet

- Write Comment To Make Your Programs Readable. Use Descriptive Variables in Your Programs (Name of The Variables Should Show Their Purposes)Document6 pagesWrite Comment To Make Your Programs Readable. Use Descriptive Variables in Your Programs (Name of The Variables Should Show Their Purposes)Shyam Ji RanaNo ratings yet

- Experiment Number 1 (A) Amplitude Shift Keying Modulator (ASK)Document4 pagesExperiment Number 1 (A) Amplitude Shift Keying Modulator (ASK)Shyam Ji RanaNo ratings yet

- Assignment2 2Document6 pagesAssignment2 2Shyam Ji RanaNo ratings yet

- Assignment ON Graph Submission Date: 31 August 2018Document1 pageAssignment ON Graph Submission Date: 31 August 2018Shyam Ji RanaNo ratings yet

- Experiment No. 7: Speed Control of DC Shunt Motor Using (A) Field Control Method (B) Armature Voltage Control MethodDocument3 pagesExperiment No. 7: Speed Control of DC Shunt Motor Using (A) Field Control Method (B) Armature Voltage Control MethodShyam Ji RanaNo ratings yet

- NVS Upload UploadAdmissionNotificationFiles PDFDocument454 pagesNVS Upload UploadAdmissionNotificationFiles PDFShyam Ji RanaNo ratings yet

- NVS Upload UploadAdmissionNotificationFilesDocument454 pagesNVS Upload UploadAdmissionNotificationFilesShyam Ji RanaNo ratings yet

- 06 CALL & RET - PPSXDocument15 pages06 CALL & RET - PPSXShyam Ji RanaNo ratings yet

- 01 Logic Diag & Simplified Arch - PPSXDocument10 pages01 Logic Diag & Simplified Arch - PPSXShyam Ji RanaNo ratings yet

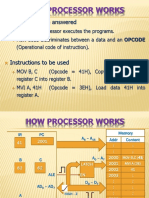

- How Processor Works: Questions To Be AnsweredDocument11 pagesHow Processor Works: Questions To Be AnsweredShyam Ji RanaNo ratings yet

- PDFDocument4 pagesPDFSantosh Reddy ChennuruNo ratings yet

- Radha The Beloved of JagannathDocument1 pageRadha The Beloved of Jagannathapi-26168166No ratings yet

- CRONUT Opposition 91215813 2014-04-08Document69 pagesCRONUT Opposition 91215813 2014-04-08mhintzesqNo ratings yet

- The Performance Diagnostic Checklist-Human Services: A CorrectionDocument6 pagesThe Performance Diagnostic Checklist-Human Services: A CorrectionBeto RV100% (1)

- Ok Ale Boet Al ManualDocument131 pagesOk Ale Boet Al ManualJaimeNo ratings yet

- Aitken J. 'Communication Technology' PDFDocument430 pagesAitken J. 'Communication Technology' PDFMadalina-Beatrice LightNo ratings yet

- 5.3 Family Law II Pranoy Goswami SM0117037 FinalDocument20 pages5.3 Family Law II Pranoy Goswami SM0117037 FinalPRANOY GOSWAMINo ratings yet

- RSPCA V Peter Hughes & Daughter Re 30 Dogs-Not GuiltyDocument2 pagesRSPCA V Peter Hughes & Daughter Re 30 Dogs-Not GuiltyGlynne SutcliffeNo ratings yet

- Prof. Elec. 5 Crowd & Crisis MGTDocument14 pagesProf. Elec. 5 Crowd & Crisis MGTGezelle NirzaNo ratings yet

- Battle of UhadDocument14 pagesBattle of UhadMuiz “Daddy” AyubiNo ratings yet

- Marketing Plan For Hello TelecomDocument5 pagesMarketing Plan For Hello Telecomshamsullah khanNo ratings yet

- Machine Theory عربيDocument695 pagesMachine Theory عربيahlamNo ratings yet

- Perdev Week 1 - Lesson 4Document3 pagesPerdev Week 1 - Lesson 4Namja Ileum Ozara GaabucayanNo ratings yet

- LSRW Skills: A Way To Enhance CommunicationDocument4 pagesLSRW Skills: A Way To Enhance CommunicationIJELS Research JournalNo ratings yet

- On Neutrosophic Feebly Open Set in Neutrosophic Topological SpacesDocument8 pagesOn Neutrosophic Feebly Open Set in Neutrosophic Topological SpacesMia AmaliaNo ratings yet

- Operational Management of Ford Motors CorporationDocument56 pagesOperational Management of Ford Motors CorporationMuhammad Hassan KhanNo ratings yet

- Titration - WikipediaDocument71 pagesTitration - WikipediaBxjdduNo ratings yet

- Students-Assignment-Sheet Nursing FormatDocument3 pagesStudents-Assignment-Sheet Nursing FormatMARY JOYCE S. HERNANDEZNo ratings yet

- 03 Activity.Document3 pages03 Activity.Philip John1 Gargar'sNo ratings yet

- Concept Attainment Lesson Plan 2Document5 pagesConcept Attainment Lesson Plan 2api-280736706No ratings yet

- First Aid Kit ContentsDocument8 pagesFirst Aid Kit Contentsalexis mae lavitoriaNo ratings yet

- Stronetz, A Faithful Physician, Is Also Very Loyal To The Prince Demitri. The KranitzkiDocument2 pagesStronetz, A Faithful Physician, Is Also Very Loyal To The Prince Demitri. The KranitzkiSahil PrasharNo ratings yet

- MARTINS Zoonpolitikon 2019Document37 pagesMARTINS Zoonpolitikon 2019Ridlo IlwafaNo ratings yet

- Ctri Data Base India IcmrDocument2,599 pagesCtri Data Base India Icmrdr sanjayNo ratings yet

- Mega Estudos - Links (Mega Servidor)Document3 pagesMega Estudos - Links (Mega Servidor)juao pedroNo ratings yet

- English 3 Periodical Exam Reviewer With AnswerDocument3 pagesEnglish 3 Periodical Exam Reviewer With AnswerPandesal UniversityNo ratings yet

- Maryland Technician - Initial - ApplicationDocument8 pagesMaryland Technician - Initial - ApplicationSun JinNo ratings yet