0% found this document useful (0 votes)

82 views101 pagesWeek 04

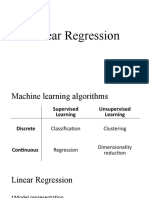

Linear regression finds the parameters that minimize the mean squared error between predictions and true targets. It does this using gradient descent, which iteratively updates the parameters in the opposite direction of the gradient of the cost function. For linear regression with multiple variables, gradient descent simultaneously updates all the parameters by taking a step in the opposite direction of each parameter's gradient. This allows linear regression to model relationships between an output and multiple input features.

Uploaded by

Osii CCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPTX, PDF, TXT or read online on Scribd

0% found this document useful (0 votes)

82 views101 pagesWeek 04

Linear regression finds the parameters that minimize the mean squared error between predictions and true targets. It does this using gradient descent, which iteratively updates the parameters in the opposite direction of the gradient of the cost function. For linear regression with multiple variables, gradient descent simultaneously updates all the parameters by taking a step in the opposite direction of each parameter's gradient. This allows linear regression to model relationships between an output and multiple input features.

Uploaded by

Osii CCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPTX, PDF, TXT or read online on Scribd

/ 101