Professional Documents

Culture Documents

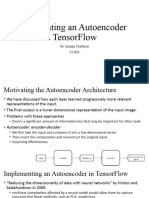

Chap 4 Beyond Gradient Descent

Beyond Gradient Descent

Dr. Sanjay Chatterji

CS 837

The Challenges with Gradient Descent

Deep neural networks are able to crack problems that were previously

deemed intractable.

Training deep neural networks end to end, however, is fraught with

difficult challenges that took many innovations to overcome, including:

– massive labelled datasets (ImageNet, CIFAR, etc.)

– better hardware in the form of GPU acceleration

– several algorithmic discoveries

More recently, breakthroughs in optimization methods have enabled us

to directly train models in an end-to-end fashion.

The primary challenge in optimizing deep learning models is that we are

forced to use minimal local information to infer the global structure of the

error surface.

This is a hard problem because there is usually very little correspondence

between local and global structure.

Organization of the chapter

• The next couple of sections will focus primarily

on local minima and whether they pose

hurdles for successfully training deep models.

• In subsequent sections, we will further explore

the nonconvex error surfaces induced by deep

models, why vanilla mini-batch gradient

descent falls short, and how modern

nonconvex optimizers overcome these pitfalls.

Local Minima in the Error Surfaces

Imagine an ant on a map of the continental United States whose goal

is to find the lowest point on the surface.

The surface of the US is extremely complex (a non convex

surface).

Even a mini-batch version of gradient descent won’t save us from a deep local

minimum.

Couple of critical questions

• Theoretically, local minima pose a significant

issue.

• But in practice, how common are local minima

in the error surfaces of deep networks?

• In which scenarios are they actually

problematic for training?

• In the following two sections, we’ll pick apart

common misconceptions about local minima.

Model Identifiability

The first source of local minima is tied to a concept

commonly referred to as model identifiability.

Deep neural networks have an infinite number of local minima, for two major reasons:

– Within a layer, any rearrangement of the neurons (a symmetry) gives the same final output. For l layers of n neurons each, there are (n!)^l equivalent configurations.

– Because ReLU is piecewise linear, we can multiply a neuron’s incoming weights by any k > 0 while scaling its outgoing weights by 1/k without changing the behavior of the network.
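The two symmetries above can be checked on a toy network. This is a minimal sketch, assuming a single hidden ReLU layer with scalar input and output and no biases; the weights and the names `forward`, `w_in`, and `w_out` are made up for illustration.

```python
import itertools

def relu(x):
    return max(0.0, x)

def forward(x, w_in, w_out):
    """Scalar input -> hidden ReLU units -> scalar output (no biases)."""
    hidden = [relu(w * x) for w in w_in]
    return sum(h * v for h, v in zip(hidden, w_out))

# Hypothetical weights for a 3-neuron hidden layer.
w_in = [0.5, -1.2, 2.0]
w_out = [1.0, 0.3, -0.7]
inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
baseline = [forward(x, w_in, w_out) for x in inputs]

# Symmetry 1: reorder the hidden neurons (each keeps its own outgoing weight).
perm_outputs = []
for perm in itertools.permutations(range(len(w_in))):
    p_in = [w_in[i] for i in perm]
    p_out = [w_out[i] for i in perm]
    perm_outputs.append([forward(x, p_in, p_out) for x in inputs])

# Symmetry 2: scale incoming weights by k > 0 and outgoing weights by 1/k.
k = 4.0
scaled = [forward(x, [k * w for w in w_in], [v / k for v in w_out])
          for x in inputs]

print(len(perm_outputs), "permutations, all matching the baseline:",
      all(abs(a - b) < 1e-12
          for outs in perm_outputs for a, b in zip(outs, baseline)))
```

With n = 3 neurons in one layer there are 3! = 6 orderings, matching the (n!)^l count for l = 1. If the layer had biases, they would need to be scaled by k as well.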

Continued…

Local minima that arise from non-identifiability are not

inherently problematic

• All non-identifiable configurations behave in

an indistinguishable fashion

• Local minima are only problematic when they are spurious: the configuration of weights at the local minimum incurs a higher error than the configuration at the global minimum.

How Pesky Are Spurious Local Minima in

Deep Networks?

• For many years, deep learning practitioners blamed all of their

troubles in training deep networks on spurious local minima.

• Recent studies indicate that most local minima have error rates

and generalization characteristics similar to global minima.

• We might try to tackle this problem by plotting the value of the

error function over time as we train a deep neural network.

• This strategy, however, doesn’t give us enough information about

the error surface.

• Instead of analyzing the error function over time, Goodfellow et al. (2014) investigated the error surface between an initial parameter vector θ_i and a successful final solution θ_f using linear interpolation:

$\theta_\alpha = \alpha \cdot \theta_f + (1 - \alpha) \cdot \theta_i$

Continued..

• If we run this experiment over and over again, we find that there

are no truly troublesome local minima that would get us stuck.

• Vary the value of alpha to see how the error surface changes as we

traverse the line between the randomly initialized point and the

final SGD solution.

• The true struggle of gradient descent isn’t the existence of troublesome local minima, but finding the appropriate direction to move in.
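The interpolation experiment can be sketched as follows. This is only an illustration: `toy_error` is a hypothetical stand-in for a network's error function, and `theta_i` and `theta_f` are made-up parameter vectors, not values from a trained model.

```python
def interpolate(theta_i, theta_f, alpha):
    """theta_alpha = alpha * theta_f + (1 - alpha) * theta_i, element-wise."""
    return [alpha * f + (1.0 - alpha) * i for i, f in zip(theta_i, theta_f)]

def toy_error(theta):
    # Hypothetical error surface: a quadratic bowl with its minimum at (1, -2).
    return (theta[0] - 1.0) ** 2 + (theta[1] + 2.0) ** 2

theta_i = [4.0, 3.0]    # stands in for the random initialization
theta_f = [1.0, -2.0]   # stands in for the final SGD solution

alphas = [j / 10 for j in range(11)]
errors = [toy_error(interpolate(theta_i, theta_f, a)) for a in alphas]

for a, e in zip(alphas, errors):
    print(f"alpha={a:.1f}  error={e:.3f}")
```

For a real network, `toy_error` would be replaced by the training or validation error evaluated at the interpolated weights. A bump along this curve would indicate an obstacle on the straight line between the two points.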

Flat Regions in the Error Surface

• The gradient approaches zero in a peculiar flat region (near alpha = 1) that is not a local minimum.

• A zero gradient might slow down learning.

• More generally, given an arbitrary function, a point at which

the gradient is the zero vector is called a critical point.

• These “flat” regions that are potentially pesky but not

necessarily deadly are called saddle points.

• As a function gains more and more dimensions, saddle points become exponentially more likely than local minima.

Continued..

• In d-dimensional parameter space, we can slice through a

critical point on d different axes.

• A critical point can only be a local minimum if it appears as a

local minimum in every single one of the d one-dimensional

subspaces

• A critical point can come in one of three different flavors in a one-dimensional subspace: a local minimum, a local maximum, or neither (an inflection point).

– If the three flavors are equally likely and independent across axes, the probability that a random critical point of a random function is a local minimum is 1/3^d.

– A random function with k critical points therefore has an expected k/3^d local minima.

– As the dimensionality of our parameter space increases, local minima become exponentially rarer.
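The counting argument above is easy to tabulate. This sketch assumes, as the heuristic does, that the three flavors are equally likely and independent along each axis; the function names are illustrative.

```python
from fractions import Fraction

def prob_local_minimum(d):
    """Probability that a critical point is a minimum along all d axes."""
    return Fraction(1, 3 ** d)

def expected_local_minima(k, d):
    """Expected number of local minima among k critical points."""
    return k * prob_local_minimum(d)

for d in (1, 2, 10, 100):
    print(f"d={d:<4} P(local minimum) = {float(prob_local_minimum(d)):.3e}")
```

Even a modest d = 100 makes the probability vanishingly small, while deep networks routinely have millions of parameters.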

When the Gradient Points in the Wrong

Direction

• Upon analyzing the error surfaces of deep networks, it seems like

the most critical challenge to optimizing deep networks is finding

the correct trajectory to move in.

• The gradient usually isn’t a very good indicator of the right trajectory.

• Only when the contours are perfectly circular does the gradient always point in the direction of the local minimum.

• If the contours are extremely elliptical, the gradient can be as

inaccurate as 90 degrees away from the correct direction!

• For every weight w_i in the parameter space, the gradient computes “how the value of the error changes as we change the value of w_i”: $\partial E / \partial w_i$

• Taken together over all weights, the negative gradient gives us the direction of steepest descent.

• If the contours are perfectly circular and we take a big step toward the minimum, the gradient doesn’t change direction as we move.
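To make the circular versus elliptical contrast concrete, the sketch below measures the angle between the steepest-descent direction and the straight line to the minimum. It assumes a hypothetical quadratic error E(x, y) = 0.5 (a x^2 + b y^2) with its minimum at the origin; the coefficients and the starting point are arbitrary.

```python
import math

def gradient(a, b, x, y):
    """Gradient of E(x, y) = 0.5 * (a*x**2 + b*y**2)."""
    return (a * x, b * y)

def angle_to_minimum_deg(a, b, x, y):
    """Angle between the negative gradient and the direction to the origin."""
    gx, gy = gradient(a, b, x, y)
    step = (-gx, -gy)      # steepest-descent direction
    to_min = (-x, -y)      # direction that actually leads to the minimum
    dot = step[0] * to_min[0] + step[1] * to_min[1]
    cross = step[0] * to_min[1] - step[1] * to_min[0]
    return math.degrees(math.atan2(abs(cross), dot))

circular = angle_to_minimum_deg(1.0, 1.0, 1.0, 0.1)      # a == b
elliptical = angle_to_minimum_deg(1.0, 100.0, 1.0, 0.1)  # b is 100 times a

print(f"circular contours:   {circular:.2f} degrees off")
print(f"elliptical contours: {elliptical:.2f} degrees off")
```

With equal curvatures the two directions coincide. With a 100:1 curvature ratio the descent direction at this point is already close to perpendicular to the path that leads to the minimum.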

Gradient using second derivatives

• The gradient can change under our feet as we move in a certain direction. To quantify this, we compute second derivatives.

• We can compile this information into a special matrix

known as the Hessian matrix (H).

• Computing the Hessian matrix exactly is a difficult task.
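One way to see what the Hessian contains, and why it is costly, is to estimate it with finite differences. This is a rough sketch on a hypothetical two-parameter error function, not how deep learning frameworks compute second derivatives; `error`, `shifted`, and `numerical_hessian` are illustrative names.

```python
def error(w):
    # Hypothetical error surface: an elongated quadratic bowl.
    return 0.5 * (w[0] ** 2 + 100.0 * w[1] ** 2)

def shifted(f, w, i, j, di, dj):
    """Evaluate f after nudging coordinate i by di and coordinate j by dj."""
    p = list(w)
    p[i] += di
    p[j] += dj
    return f(p)

def numerical_hessian(f, w, h=1e-3):
    """Central-difference estimate of H[i][j] = d^2 f / (dw_i dw_j)."""
    n = len(w)
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            H[i][j] = (shifted(f, w, i, j, h, h) - shifted(f, w, i, j, h, -h)
                       - shifted(f, w, i, j, -h, h) + shifted(f, w, i, j, -h, -h)
                       ) / (4.0 * h * h)
    return H

H = numerical_hessian(error, [1.0, 0.1])
# For this diagonal Hessian, the ratio of the diagonal entries is the
# condition number; a large value means an ill-conditioned surface.
condition = H[1][1] / H[0][0]
print(H, condition)
```

The estimate needs four function evaluations for each of the n^2 entries. That is negligible for n = 2, but it hints at why the full Hessian is out of reach for networks with millions of weights.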

Momentum-Based Optimization

• The problem of an ill-conditioned Hessian matrix

manifests itself in the form of gradients that fluctuate

wildly.

• One popular mechanism for dealing with ill-

conditioning bypasses the computation of the Hessian,

and focuses on how to cancel out these fluctuations

over the duration of training.

• There are two major components that determine how a

ball rolls down an error surface.

– Acceleration (what the gradient already models)

– Velocity (which more directly determines the ball’s motion)

Momentum-Based Optimization

• Our goal, then, is to somehow generate an analog for velocity

in our optimization algorithm.

• We can do this by keeping track of an exponentially weighted

decay of past gradients.

• We use the momentum hyperparameter m to determine what

fraction of the previous velocity to retain in the new update.

• Momentum significantly reduces the volatility of updates. The

larger the momentum, the less responsive we are to new

updates.
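The momentum update can be sketched as below. The slides do not give the equations, so this assumes the common formulation v ← m·v − ε·g followed by θ ← θ + v, with learning rate ε. The quadratic test surface and all hyperparameter values are arbitrary choices for illustration.

```python
def sgd_momentum(grad_fn, theta, learning_rate=0.01, momentum=0.9, steps=200):
    """Gradient descent with a velocity term; momentum=0.0 gives plain descent."""
    velocity = [0.0] * len(theta)
    for _ in range(steps):
        g = grad_fn(theta)
        # Retain a fraction of the previous velocity, then add the new gradient step.
        velocity = [momentum * v - learning_rate * gi
                    for v, gi in zip(velocity, g)]
        theta = [t + v for t, v in zip(theta, velocity)]
    return theta

def grad(theta):
    # Gradient of the ill-conditioned bowl E = 0.5 * (x**2 + 100 * y**2).
    return [theta[0], 100.0 * theta[1]]

plain = sgd_momentum(grad, [1.0, 0.1], momentum=0.0)
with_momentum = sgd_momentum(grad, [1.0, 0.1], momentum=0.9)

print("plain:        ", plain)
print("with momentum:", with_momentum)
```

On this surface the learning rate is capped by the steep y direction, so plain descent crawls along the shallow x direction. The accumulated velocity lets the momentum variant cover much more ground in the same number of steps.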

A Brief View of Second-Order Methods

• Computing the Hessian is a computationally difficult task.

• Momentum afforded us a significant speedup without having to worry about the Hessian at all.

• Several second-order methods that attempt to approximate the Hessian directly have been researched over the past several years.

– Conjugate gradient descent (chooses a direction conjugate to the previous choice)

– Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm (iteratively approximates the inverse of the Hessian matrix)

Second-Order Methods

• The conjugate direction is chosen by using an indirect

approximation of the Hessian to linearly combine the

gradient and our previous direction.

• With a slight modification, this method generalizes to

the nonconvex error surfaces we find in deep networks.

• BFGS has a significant memory footprint, but recent

work has produced a more memory-efficient version

known as L-BFGS.
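The idea of combining the gradient with the previous direction can be illustrated on a convex quadratic, where conjugate gradient is simplest. This sketch covers only that special case, E(w) = 0.5 wᵀAw − bᵀw with a known matrix A; it is not the nonconvex variant used for deep networks, and the matrix and vector below are made up.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def conjugate_gradient(A, b, w, iterations):
    """Minimize 0.5 * w.A.w - b.w for a symmetric positive definite A."""
    r = [bi - ai for bi, ai in zip(b, matvec(A, w))]   # negative gradient
    d = list(r)                                        # first search direction
    for _ in range(iterations):
        Ad = matvec(A, d)
        alpha = dot(r, r) / dot(d, Ad)                 # exact step along d
        w = [wi + alpha * di for wi, di in zip(w, d)]
        r_new = [ri - alpha * adi for ri, adi in zip(r, Ad)]
        beta = dot(r_new, r_new) / dot(r, r)
        # New direction: current negative gradient plus a multiple of the old direction.
        d = [rn + beta * di for rn, di in zip(r_new, d)]
        r = r_new
    return w

A = [[1.0, 0.0], [0.0, 100.0]]   # ill-conditioned curvature
b = [1.0, 100.0]                 # chosen so the minimum sits at (1, 1)
w = conjugate_gradient(A, b, [0.0, 0.0], iterations=2)
print(w)
```

In exact arithmetic this reaches the minimum of an n-dimensional quadratic in n steps, here two, regardless of how elongated the contours are.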

Learning Rate Adaptation

• One of the major breakthroughs in modern deep network

optimization was the advent of learning rate adaptation.

• The basic concept behind learning rate adaptation is that

the optimal learning rate is appropriately modified over

the span of learning to achieve good convergence

properties.

• Some popular adaptive learning rate algorithms

– AdaGrad

– RMSProp

– Adam

AdaGrad—Accumulating Historical

Gradients

• It attempts to adapt the global learning rate over time using an

accumulation of the historical gradients.

• Specifically, we keep track of a learning rate for each

parameter.

• This learning rate is inversely scaled with respect to the square root of the sum of squares of all the parameter’s historical gradients (the gradient accumulation vector r).

• Note that we add a tiny number δ (~10^-7) to the denominator in order to prevent division by zero.
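A minimal sketch of the AdaGrad update described above, assuming the usual form r ← r + g² and θ ← θ − ε/(δ + √r)·g applied per parameter. The learning rate and the constant gradients fed in are arbitrary illustration values.

```python
import math

def adagrad_step(theta, g, r, learning_rate=0.1, delta=1e-7):
    """One AdaGrad update; r accumulates squared gradients per parameter."""
    r = [ri + gi * gi for ri, gi in zip(r, g)]
    theta = [t - learning_rate / (delta + math.sqrt(ri)) * gi
             for t, ri, gi in zip(theta, r, g)]
    return theta, r

theta, r = [0.0, 0.0], [0.0, 0.0]
g = [10.0, 0.1]   # one parameter with large gradients, one with small

theta, r = adagrad_step(theta, g, r)
first_theta = list(theta)
for _ in range(99):
    theta, r = adagrad_step(theta, g, r)

# Per-parameter learning rate after 100 steps.
effective_rates = [0.1 / (1e-7 + math.sqrt(ri)) for ri in r]
print(first_theta, effective_rates)
```

The first step moves both parameters by about the same amount despite the 100-fold difference in gradient size. Because r only ever grows, the effective rate of the large-gradient parameter has shrunk far more after 100 steps.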

Simply using a naive accumulation

of gradients isn’t sufficient

• This update mechanism means that the parameters with the

largest gradients experience a rapid decrease in their learning

rates, while parameters with smaller gradients only observe a

small decrease in their learning rates.

• AdaGrad also has a tendency to cause a premature drop in

learning rate, and as a result doesn’t work particularly well for

some deep models.

• While AdaGrad works well for simple convex functions, it isn’t

designed to navigate the complex error surfaces of deep

networks.

• Flat regions may force AdaGrad to decrease the learning rate

before it reaches a minimum.

RMSProp—Exponentially Weighted Moving

Average of Gradients

• Let’s bring back a concept we introduced earlier while discussing

momentum to dampen fluctuations in the gradient.

• Compared to naive accumulation, exponentially weighted

moving averages also enable us to “toss out” measurements

that we made a long time ago.

• The decay factor ρ determines how long we keep old gradients.

• The smaller the decay factor, the shorter the effective window.

Plugging this modification into AdaGrad gives rise to the

RMSProp learning algorithm.
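The modification can be sketched as follows, assuming the accumulator update r ← ρ·r + (1 − ρ)·g² and the same per-parameter scaling as AdaGrad. Where exactly δ enters the denominator varies between presentations; the placement here is one common choice, and the hyperparameter values are illustrative.

```python
import math

def rmsprop_step(theta, g, r, learning_rate=0.01, rho=0.9, delta=1e-7):
    """One RMSProp update; r is a decaying average of squared gradients."""
    r = [rho * ri + (1.0 - rho) * gi * gi for ri, gi in zip(r, g)]
    theta = [t - learning_rate / (delta + math.sqrt(ri)) * gi
             for t, ri, gi in zip(theta, r, g)]
    return theta, r

theta, r = [0.0], [0.0]

# One large gradient, then 50 steps with zero gradient.
theta, r = rmsprop_step(theta, [2.0], r)
r_after_one = r[0]
for _ in range(50):
    theta, r = rmsprop_step(theta, [0.0], r)
r_after_decay = r[0]

print(r_after_one, r_after_decay)
```

Unlike AdaGrad's running sum, the accumulator forgets the old measurement: it decays by a factor of ρ at every step, so the learning rate can recover once gradients become small again.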

Adam—Combining Momentum and

RMSProp

• In spirit, we can think of Adam as a combination of RMSProp and momentum.

• We want to keep track of an exponentially weighted moving average of the gradient (the first moment of the gradient).

• Similarly to RMSProp, we can maintain an exponentially weighted moving average of the squared historical gradients (the second moment of the gradient).

Bias in Adam

• However, it turns out these estimations are biased relative to the real

moments because we start off by initializing both vectors to the zero

vector.

• In order to remedy this bias, we derive a correction factor for both

estimations.

• Recently, Adam has gained popularity because of its corrective measures

against the zero initialization bias (a weakness of RMSProp) and its ability

to combine the core concepts behind RMSProp with momentum more

effectively.

• The default hyperparameter settings for Adam in TensorFlow generally perform quite well, and Adam is also generally robust to choices in hyperparameters.

• The only exception is that the learning rate may need to be modified in

certain cases from the default value of 0.001.
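Putting the two moment estimates and the bias correction together gives the sketch below. The learning rate 0.001 is the default mentioned above; the decay rates 0.9 and 0.999 and the constant 1e-8 are assumed here from the commonly cited Adam defaults, and the function name is illustrative.

```python
import math

def adam_step(theta, g, m, v, t, learning_rate=0.001,
              beta1=0.9, beta2=0.999, delta=1e-8):
    """One Adam update at step t (t starts at 1)."""
    # Exponentially weighted averages of the gradient and squared gradient.
    m = [beta1 * mi + (1.0 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1.0 - beta2) * gi * gi for vi, gi in zip(v, g)]
    # Bias correction: both averages start at zero, so early values are too small.
    m_hat = [mi / (1.0 - beta1 ** t) for mi in m]
    v_hat = [vi / (1.0 - beta2 ** t) for vi in v]
    theta = [th - learning_rate * mh / (math.sqrt(vh) + delta)
             for th, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v

theta, m, v = adam_step([1.0], [3.0], [0.0], [0.0], t=1)
print(theta, m, v)
```

After the first step the raw first moment is only 0.3 even though the gradient was 3.0, which is the zero-initialization bias. Dividing by 1 − β1^t restores the estimate to 3.0, so the first update has a size of about one learning rate.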

The Philosophy Behind Optimizer Selection

• We’ve discussed several strategies that are used to make

navigating the complex error surfaces of deep networks more

tractable.

• These strategies have culminated in several optimization

algorithms.

• While it would be awfully nice to know when to use which

algorithm, there is very little consensus among expert

practitioners.

• Currently, the most popular algorithms are mini-batch gradient

descent, mini-batch gradient descent with momentum, RMSProp,

RMSProp with momentum, Adam, and AdaDelta.

Thank You
