Legal and Criminological Psychology (2005), 10, 103-108

© 2005 The British Psychological Society

www.bpsjournals.co.uk

Mixing sound and vision: The interaction of auditory and visual information for earwitnesses of a crime scene

Daniel B. Wright* and Gary Wareham

Psychology Department, University of Sussex, Brighton, UK

Purpose. Previous research has shown that visual information impairs the

perception of the sound of individual syllables, often called the McGurk effect. In

everyday life sounds are seldom heard as individual syllables, but are embedded in

words, and these words within sentences. The purpose of this research is to see

whether auditory and visual information interact in the perception of a contextually

rich scene that is of forensic importance.

Methods. Participants were shown a video of a man following a woman. The man

either says "He's got your boot" or "He's gonna shoot". Half the participants saw the

actor say the same phrase as they heard, and half saw a different phrase than they heard.

Results. When the visual and acoustic patterns did not match, people made

mistakes. Many reported the fusion: "He's got your shoe".

Conclusions. This is the first demonstration of the interaction of auditory and visual

information for complex scenes. The scene is one of forensic importance and therefore

the findings are of importance within the emerging field of earwitness testimony.

When people perceive a sound, they are influenced not just by the physical sound, but

also by visual and contextual information. For example, if you hear someone say the

syllable "ba", but you see them saying "ga", you may perceive a fusion of these, the sound

"da". This is called the McGurk effect (McGurk & MacDonald, 1976). It is a robust and

striking effect. The influence of contextual information on the perception of auditory

information is also easily demonstrated by asking a classroom of students: "How many

animals of each kind did Moses take on the ark?" The typical response is "two", the so-called Moses illusion (Erickson & Mattson, 1981). Similarly, the visual memories we have

of complex scenes can be altered by verbal post-event information (Loftus, Miller, &

Burns, 1978; Wright & Loftus, 1998). Here we report a study where participants view a

contextually rich scene. For some, the visual and auditory cues are congruent, for others

they are incongruent. By using complex scenes our findings are applicable to witness

testimony (see Wright & Davies, 1999, for a general review; see Yarmey, 1995, for a

review specifically of earwitness testimony).

* Correspondence should be addressed to Dr Daniel B. Wright, Psychology Department, University of Sussex, Falmer, Brighton BN1 9QH, UK (e-mail: DanW@Sussex.ac.uk).

DOI: 10.1348/135532504X15240

Recalling and interpreting what somebody said can be critical for criminal cases.

Consider the case of Derek Bentley. In 1952 Derek Bentley and Chris Craig were

involved in a robbery when they were confronted by police. According to police

officers, Bentley said, "Let him have it." The police claimed that this incited Craig, then

16, to shoot and kill Police Constable Sydney Miles. According to this interpretation

Bentley was also culpable. Craig was convicted of murder and as a juvenile served 10

years. Bentley, aged 19 but with a mental age of 11, was convicted and in 1953 was

hanged. After a long campaign, in 1998 his conviction was

posthumously quashed. There has been much dispute about what, if anything, Bentley

said, but if he had said, "Let him have it", the meaning is ambiguous. His trial lawyers

argued that he was urging Craig to hand the gun over to the police. This would have

meant Bentley should not have been convicted.

There are two types of information that are relevant to earwitness testimony: who

said the phrase and what was said. Sometimes the police are interested in the identity of the

speaker. In these cases, the police sometimes produce a voice identification parade.

Cook and Wilding (1997a, b) have done much research on this. They find that visual

information can interfere with voice identification, what they call the face

overshadowing effect. The police are also often interested in what was said, which is

the focus of this study. As in the Derek Bentley case, often the identity of the speaker is

not an issue.

The aim of the current study is to see what occurs when visual and auditory information are

incongruent in a contextually rich, but ambiguous setting. This is similar to testing

whether the McGurk effect, which is normally tested by presenting single syllables,

applies to more complex stimuli. The McGurk effect can occur even when the sound

and visual information are not well synchronized (180 ms out; Munhall, Gribble, Sacco,

& Ward, 1996) and when the visual information is quite coarse (MacDonald, Andersen,

& Bachmann, 2000). It has been shown to exist with prelinguistic infants (Rosenblum,

Schmuckler, & Johnson, 1997) and in other cultures, although sometimes the effects are

smaller (Sekiyama & Tohkura, 1991).

However, there are limitations to the McGurk effect. For example, Walker, Bruce, and

O'Malley (1995) have found that the effect is much less pronounced with familiar faces

than non-familiar faces. This may be due to there being more meaning in faces of people

who we know. Easton and Basala (1982) reported that it was more difficult to detect the

effect with meaningful stimuli. However, Deckle, Fowler, and Funnell (1992) did find

the effect for words. More recently, Sams, Manninen, Surakka, Helin, and Katto (1998)

found the effect with their Finnish sample for words embedded within three-word

sentences. The current study investigates whether the McGurk effect can occur for a

sentence within a complex scene.

Method

Eighty participants, half of whom were male, volunteered for this study. The ages ranged

from 9 to 66 years old, with a mean of 26 years. They were recruited from a supermarket

and the University of Sussex, and were tested individually. No reliable differences were

found by gender, age, or test location.

Four versions of a video were created for this study (see left side of Table 1). They

were all identical until the final scene, and all lasted approximately 1 minute. In the early

evening, a woman is shown walking along a street. A man is behind her. Thinking that he

might be following her she begins to walk faster. He is still behind her. She starts to run

and her boot falls off. The man picks it up and runs after her. A male bystander sees this

chase and then says either, "He's gonna shoot" or "He's got your boot". These phrases

were designed to be acoustically similar. Each is four syllables in length:

He's / gon / na / shoot

He's / got / your / boot

However, the two have very different meanings. Like Bentleys, Let him have it, one

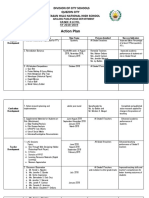

Table 1. Responses for what participants thought the bystander said

Responds with: : :

Video

Cond.

1

2

3

4

Heard

Hes

Hes

Hes

Hes

gonna shoot

got your boot

got your boot

gonna shoot

Saw

Hes

Hes

Hes

Hes

gonna shoot

got your boot

gonna shoot

got your boot

Total

Heard

Saw

Fuse

Other

Total

15

9

0

0

2

0

1

10

0

0

4

1

20

20

20

20

13

80

18

20

interpretation is that the man is committing a crime and one is not. Further, the final

words shoot and boot, could be fused into shoe. It is acoustically similar to shoot and

semantically similar to boot.

This final scene was shot from approximately one metre away and clearly showed

the actor saying the critical sentence. The scene was shot twice. In one the bystander

said, "He's gonna shoot", and in the other he said, "He's got your boot". The actor was

trained to say these at the same pace to allow them to be synchronized. Four versions

were created: the two original ones and two where the visual and acoustic materials

were incongruent. Care was taken to synchronize the lip movement and sounds for each

syllable.

Participants were randomly allocated to one of four conditions of a 2 × 2 design,

with the restriction that each film was seen by 20 people. All participants were tested

individually. They were told to watch the film carefully. The very last part of the film was

when the bystander said the critical phrase. Immediately (i.e. within a couple of seconds

of hearing the phrase) after this, participants were asked, "Can you tell me what the man

at the end of the film said?" Finally, participants were debriefed.
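The restricted random allocation described above (four films, each seen by exactly 20 people) could be sketched as follows. This is only an illustration: the paper does not say how the allocation was actually carried out, and the function name `allocate`, the `CONDITIONS` mapping, and the seed are assumptions introduced here.

```python
import random
from collections import Counter

# (heard, saw) final words for the four video conditions in Table 1.
CONDITIONS = {
    1: ("shoot", "shoot"),
    2: ("boot", "boot"),
    3: ("boot", "shoot"),
    4: ("shoot", "boot"),
}


def allocate(n_per_condition=20, seed=None):
    """Return a shuffled list of condition numbers, one per participant."""
    rng = random.Random(seed)
    # Build exactly n_per_condition slots for every condition, then shuffle,
    # so each film is seen by the same number of people in a random order.
    slots = [cond for cond in CONDITIONS for _ in range(n_per_condition)]
    rng.shuffle(slots)
    return slots


allocation = allocate(seed=2005)  # the seed value is arbitrary (assumption)
print(len(allocation), sorted(Counter(allocation).items()))
```

Shuffling a fixed pool of slots, rather than drawing a condition independently for each participant, is what guarantees the equal cell sizes.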

Results

Participants either heard and saw different phrases or the same phrase. If they heard and

saw different phrases their responses were coded as one of the following four options:

what was heard, what was seen, a fused response, or other incorrect response. We

defined a fused response as recalling the word "shoe" as the final word. If the

participants heard and saw the same phrase their responses were coded as one of three

options: heard and saw, a fused response, or other incorrect response. Fused responses

were determined in the same way. Of course these were not fused because the

participants heard and saw the same phrase, but the scoring was kept the same for

comparison purposes. The right side of Table 1 shows the responses for each condition.

Other coding strategies were also used and led to the same conclusions.
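The coding scheme just described can be written down as a small function. This is a sketch under the assumption that a response is coded by its final word alone. The paper states this only for the fused category, and the name `code_response` and the punctuation handling are hypothetical.

```python
def code_response(response, heard_word, saw_word):
    """Code a recalled phrase by its final word (an assumed simplification)."""
    words = response.lower().strip(" .?!\"'").split()
    final = words[-1] if words else ""
    if final == "shoe":
        return "fuse"   # scored the same way in congruent conditions
    if final == heard_word:
        return "heard"  # equals "heard and saw" when the video was congruent
    if final == saw_word:
        return "saw"
    return "other"


# Condition 4: heard "He's gonna shoot", saw "He's got your boot".
print(code_response("He's got your shoe", "shoot", "boot"))
print(code_response("He's gonna shoot.", "shoot", "boot"))
print(code_response("He's got your glue", "shoot", "boot"))
```

Checking for "shoe" first mirrors the text: the fused category is defined independently of which phrase was heard or seen.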

Two observations are clear from Table 1. First, the people in the congruent

conditions, where they heard and saw the same phrase, were very accurate (95%

correct). Second, when the people in the incongruent conditions made mistakes, they

never reported what the lips said, but mostly reported a fusion, stating that "shoe" was

the last word. Logistic regressions were run to examine these effects further. There were

two independent variables (congruent vs. incongruent, heard "shoot" vs. heard "boot"), and

the dependent variable was correct versus incorrect. The interaction was non-significant ($\chi^2(1) = 0.95$, $p = .33$) and was removed from the model. Both main effects

were significant. People who heard "shoot" were more likely to make an error

($\chi^2(1) = 5.75$, $p = .02$, conditional odds ratio = 4.41, with a 95% confidence interval of

1.23-15.80). More importantly, those participants given the incongruent stimuli were

much more likely to make an errant response ($\chi^2(1) = 16.61$, $p < .001$,

conditional odds ratio = 15.10, 95% CI = 3.02-75.54). Thus, visual information interferes

with voice perception.¹
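As a rough check on the main-effects model, the sketch below refits it from the error counts in Table 1 (errors = fused + other responses: 2, 0, 5 and 11 out of 20 in conditions 1 to 4). The software the authors used is not stated. The Newton-Raphson fit, the helper names `solve` and `fit_main_effects`, and the dummy coding are assumptions made for illustration. If the counts are read correctly, the two odds ratios should come out close to the reported 15.10 and 4.41.

```python
import math

# (incongruent, heard_shoot, errors, n) for conditions 1-4 of Table 1.
CELLS = [
    (0, 1, 2, 20),   # condition 1: heard "shoot", saw "shoot"
    (0, 0, 0, 20),   # condition 2: heard "boot", saw "boot"
    (1, 0, 5, 20),   # condition 3: heard "boot", saw "shoot"
    (1, 1, 11, 20),  # condition 4: heard "shoot", saw "boot"
]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def fit_main_effects(cells, iterations=50, tol=1e-10):
    """Fit logit(P(error)) = b0 + b1*incongruent + b2*heard_shoot."""
    beta = [0.0, 0.0, 0.0]
    for _ in range(iterations):
        grad = [0.0] * 3
        hess = [[0.0] * 3 for _ in range(3)]
        for incongruent, heard_shoot, errors, n in cells:
            x = [1.0, float(incongruent), float(heard_shoot)]
            eta = sum(b * xi for b, xi in zip(beta, x))
            p = 1.0 / (1.0 + math.exp(-eta))
            for i in range(3):
                grad[i] += x[i] * (errors - n * p)          # score vector
                for j in range(3):
                    hess[i][j] += n * p * (1.0 - p) * x[i] * x[j]  # information
        step = solve(hess, grad)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


b0, b_incongruent, b_shoot = fit_main_effects(CELLS)
or_incongruent = math.exp(b_incongruent)
or_shoot = math.exp(b_shoot)
print(f"odds ratio, incongruent vs congruent: {or_incongruent:.2f}")
print(f"odds ratio, heard 'shoot' vs 'boot':  {or_shoot:.2f}")
```

The sketch reproduces only the point estimates. The likelihood-ratio tests and confidence intervals reported in the text are not computed here.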

Eighteen people responded incorrectly. Some of these people gave errant phrases for

a few parts of the phrase, but every participant who made an error incorrectly produced

the final word. This is the word that determines whether the man is attacking the

woman or simply returning something of hers. Most of the errors had the word "shoe"

being recalled. However, there were other errors. Three errors were acoustically similar

to the correct responses (e.g. "booze" instead of "boot"), and two errors were not ("He's

got your food" and "He's got your glue"). "He's got your glue" was interesting because

although "glue" shares few acoustic or semantic features with "boot" and "shoot", it does

rhyme with the most popular error, the fusion "shoe". This is just a single case and while

it deserves mention, further examples are necessary before more is made of it.

Twelve of the erroneous participants also made errors in other parts of the phrase.

Three people said "got your", one said "got my", two said "got a", and six said "got her" in

response to having her boot/shoe. There was only one participant who did not get the

initial word: "he's". This individual said, "Is that your shoe?"

Discussion

Auditory and visual information can interact within a complex scene. While this has

been shown for individual syllables in the McGurk effect, in this study we demonstrated

that visual information interferes with the perception of complex phrases embedded in

a forensically relevant situation. It was not just that the visual information interfered

with perception, but that the majority of errors were a fusion of two words. Interestingly,

the fusion was between the acoustic characteristics of one word, "shoot", and the

semantic characteristics of another word, "boot". This fusion tended to occur when the

participant heard "shoot" but saw "boot". Calvert et al. (1997) have shown that lip reading

and auditory perception are processed by some of the same brain regions, but

that each evokes activity in other regions. It may be that the visual material activates the

semantic meaning of the word more than the acoustic material does. This is worth

further investigation.

¹ The analyses were repeated without the non-fused errors. The conclusions are the same. The conditional odds ratio for which word they heard is 19.24 with a 95% confidence interval of 2.20-168.36. The conditional odds ratio for being presented with congruent or incongruent stimuli is 10.88 with a 95% confidence interval of 2.02-58.67.

As stated earlier, one of our reasons for conducting this research was that we

wanted to see the extent to which non-acoustic information could influence the perception

of speech. This is critical for many criminal cases. In the condition where the effect was

most pronounced, participants heard the word "shoot", but they saw the lips say "boot"

and onscreen, the male actor was shown carrying a boot. The visual and contextual

information led to half of the participants responding "shoe". These participants were

not only using contextual information. If that were the case, the participants in the other

three conditions would have also frequently recalled "shoe".

The choice of stimuli was purposeful. We knew that the word "shoe" related to both

of the critical words, but in different ways. The particular phrases were chosen to show

that visual and auditory information can interact. With other phrases the interaction may

fuse in less predictable ways, depending on the particular characteristics of each phrase.

This study has important implications for assessing the accuracy of earwitness

testimony. It is important to realize that perception of complex sounds is influenced

both by other sensory input and by the situation within which the utterance is heard.

More research is necessary in trying to understand earwitness testimony (Wilding,

Cook, & Davis, 2000). In this study, the focus was on what was said rather than who said

it. Subtle differences between words can cause ambiguity, and this could potentially be

the difference between whether an individual is guilty or not guilty of a crime. This

research shows that errors in what people hear can easily be created by visual information.

References

Calvert, G. A., Bullmore, E. T., Brammer, M. J., Campbell, R., Williams, S. C. R., McGuire, P. K., Woodruff, P. W. R., Iversen, S. D., & David, A. S. (1997). Activation of auditory cortex during silent lipreading. Science, 276, 593-596.

Cook, S., & Wilding, J. (1997a). Earwitness testimony: Never mind the variety, hear the length. Applied Cognitive Psychology, 11, 95-111.

Cook, S., & Wilding, J. (1997b). Earwitness testimony: 2. Voices, faces, and context. Applied Cognitive Psychology, 11, 527-541.

Deckle, D. J., Fowler, C. A., & Funnell, M. G. (1992). Audiovisual integration in perception of real words. Perception and Psychophysics, 51, 355-362.

Easton, R. D., & Basala, M. (1982). Perceptual dominance during lipreading. Perception and Psychophysics, 32, 562-570.

Erickson, T. D., & Mattson, M. E. (1981). From words to meaning: A semantic illusion. Journal of Verbal Learning and Verbal Behavior, 20, 540-551.

Loftus, E. F., Miller, D. G., & Burns, H. J. (1978). Semantic integration of verbal information into a visual memory. Journal of Experimental Psychology: Human Learning and Memory, 4, 19-31.

MacDonald, J., Andersen, S., & Bachmann, T. (2000). Hearing by eye: How much spatial degradation can be tolerated? Perception, 29, 1155-1168.

McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264, 746-748.

Munhall, K. G., Gribble, P., Sacco, L., & Ward, M. (1996). Temporal constraints on the McGurk effect. Perception and Psychophysics, 58, 351-362.

Rosenblum, L. D., Schmuckler, M. A., & Johnson, J. A. (1997). The McGurk effect in infants. Perception and Psychophysics, 59, 347-357.

Sams, M., Manninen, P., Surakka, V., Helin, P., & Katto, R. (1998). McGurk effect in Finnish syllables, isolated words, and words in sentences: Effects of word meaning and sentence context. Speech Communication, 26, 75-87.

Sekiyama, K., & Tohkura, Y. (1991). McGurk effect in non-English listeners: Few visual effects for Japanese subjects hearing Japanese syllables of high auditory intelligibility. Journal of the Acoustical Society of America, 90, 1797-1805.

Walker, S., Bruce, V., & O'Malley, C. (1995). Facial identity and facial speech processing: Familiar faces and voices in the McGurk effect. Perception and Psychophysics, 57, 1124-1133.

Wilding, J., Cook, S., & Davis, J. (2000). Sound familiar? Psychologist, 13, 558-562.

Wright, D. B., & Davies, G. M. (1999). Eyewitness testimony. In F. T. Durso, R. S. Nickerson, R. W. Schvaneveldt, S. T. Dumais, D. S. Lindsay, & M. T. H. Chi (Eds.), Handbook of applied cognition (pp. 789-818). Chichester, UK: John Wiley & Sons.

Wright, D. B., & Loftus, E. F. (1998). How misinformation alters memories. Journal of Experimental Child Psychology, 71, 155-164.

Yarmey, A. D. (1995). Earwitness speaker identification. Psychology, Public Policy, and Law, 4, 792-816.

Received 13 October 2003; revised version received 25 February 2004
