If Software Update Is Offline Due To ATA Chassis: Path A

330584543.doc (184.00 KB)

9/22/2016 9:16 AM

Last saved by Frank Marchant

If software update is offline due to ATA chassis

.1

.2

upndu050_R011

To complete this procedure, select one of the following two paths:

Path A - If there are FLARE ATA LUNs that span more than one enclosure, follow the special instructions in the Path A procedure below.

Path B - If there are no FLARE ATA LUNs that span more than one enclosure, follow the Path B procedure.

Path A Procedure:

If there are ATA LUNs that span more than one enclosure, follow the special instructions in this section.

Reason: When a LUN spans more than one enclosure, one of its disks can be marked for rebuild if one of the chassis experiences a power failure or is temporarily out of service due to a glitch during the NDU. An ATA chassis being updated to FLARE code that includes FRUMON code 1.53 is subject to this type of glitch 1-2% of the time. If a chassis has problems during or immediately following an NDU update, refer to EMC Knowledgebase article emc88535.

Procedure when there are ATA LUNs that span more than one chassis:

a. Identify which ATA RAID groups span more than 1 chassis.

b. Identify one LUN on one of those RAID groups.

c. Check the sniff rate on that LUN. It will generally be indicative of all the LUNs on the array. If this array ever ran Release 11 FLARE, then the default sniff rate of 30 is most likely still set, which means it takes longer to sniff an entire disk. The sniff rate determines how far back you need to look for ATA disk errors in the next step. Use navicli to determine the sniff rate on the LUN as follows:

navicli -h <SP_ip_address> getsniffer <LUN#>

or

naviseccli -h <SP_ip_address> getsniffer <LUN#>

The output will include the latest sniffer results for that LUN and will give you the sniffer

settings for that LUN at the top of the report.

VERIFY RESULTS FOR UNIT 1

Sniffing state:ENABLED

Sniffing rate(100 ms/IO):5

Background verify priority:ASAP

Historical Total of all Non-Volatile Recovery Verifies(0 passes)

d. Search the SP event logs for the following types of error on any ATA drives:

Data Sector Reconstructed

Stripe Reconstructed

Sector Reconstructed

Event codes: 0x683, 0x687, 0x689

Check the past 30 days if the sniffing rate from above = 5 (default for Release 12 and higher)

Check the past 60 days if the sniffing rate from above = 6-20

Check the past 120 days if the sniffing rate from above = 20-30

e. If any of the above event codes are found within the designated time period, perform a background verify of all LUNs on any ATA drive reporting one of the above event codes. Start the background verify for each LUN by entering the applicable Navisphere CLI command as follows:

FLARE versions Release 19 and later:

navicli -h <SP_IP_address> setsniffer <lun_number> | -rg <raid_group_number> | -all -bv -bvtime ASAP

or

naviseccli -h <SP_IP_address> setsniffer <lun_number> | -rg <raid_group_number> | -all -bv -bvtime ASAP

where

SP_IP_address is the IP address of the SP

lun_number is the LUN number, in decimal, on which to start the background verify

raid_group_number is the RAID group number, in decimal, on which to start the background verify

-all applies the sniffer parameters to all LUNs in the storage system. The target SP must own at least one LUN.

The progress of the background verify can be monitored by entering the following

command:

navicli -h <SP_IP_address> getsniffer <lun_number> | -rg <raid_group_number> | -all

or

naviseccli -h <SP_IP_address> getsniffer <lun_number> | -rg <raid_group_number> | -all

FLARE versions prior to Release 19:

navicli -h <SP_IP_address> setsniffer <lun_number> 1 -bv -bvtime ASAP

or

naviseccli -h <SP_IP_address> setsniffer <lun_number> 1 -bv -bvtime ASAP

where SP_IP_address specifies the IP address or network name of the SP that owns the target LUN and lun_number specifies the logical unit number of the LUN.

NOTE: Using the command line option -cr when starting a background verify will create a new sniffer (or verify) report and reset all counters to 0.

The progress of the background verify can be monitored by entering the following

command:

navicli -h <SP_IP_address> getsniffer <lun_number>

or

naviseccli -h <SP_IP_address> getsniffer <lun_number>

NOTE: You cannot check information from the non-owning SP. The above command

has to be run from both SPs. If the command is only run with SPA's IP address, then

the output will only contain the report from the LUNs owned by SPA. The same must

be done for SPB.

f. Confirm that no new corrected or uncorrectable errors are encountered when the background verify is completed. If errors of the same types noted above occur, run a second background verify on just the LUN reporting the reconstruction. If that background verify reports another reconstruction, do not continue with the NDU; call CLARiiON Tech Support. Otherwise, continue to the next step.

g. Stop and prevent ALL I/O to the array.

h. Disable and zero write cache on the array for NDUs designated as offline in the table

in the prior section.

View and note all write cache settings under Array Properties. You will need to reset them later to the same settings. Disable the write cache in the Array Properties Cache tab. When the cache status has changed from disabling to disabled, zero the memory assigned to write cache in the Array Properties Memory tab. The memory cannot be changed to 0 MB until the cache is disabled.

Confirm that the write cache is completely disabled and zeroed by using Navisphere CLI to check the cache status. Use the CLI command:

navicli -h <SP_IP_address> getcache

or

naviseccli -h <SP_IP_address> getcache

Ensure that the CLI reports all caching states as DISABLED and all write cache sizes as 0 MB before continuing.

i. If the array is not a CDL (CLARiiON Disk Library), unbind all ATA hot spares (the RAID

group that held the hot spare can remain).

j. Confirm that there are no ATA drives with a current Status of stuck in power-up or slot

empty when a drive is installed. If there are, remove them by backing them out of their

fully inserted position, leaving them in the enclosure slot to be reinserted later.

k. Return to the previous module that brought you here, to complete the NDU procedure.

Return here after the NDU to determine if ATA FRUMON code has been updated

successfully prior to re-enabling write cache and allowing I/O.
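Steps c and d of Path A can be sketched in Python as follows. This is only an illustration, not part of the EMC procedure: the function names are hypothetical, the header line format is taken from the sample getsniffer report in step c, and because a sniff rate of exactly 20 appears in both the 60-day and 120-day ranges, the sketch assumes the longer window for it. Rates outside 5 to 30 are not covered by the procedure and are rejected.

```python
import re

# Matches the settings header line shown in the sample report,
# e.g. "Sniffing rate(100 ms/IO):5".
_RATE_RE = re.compile(r"Sniffing rate\(100 ms/IO\):\s*(\d+)")


def parse_sniff_rate(report: str) -> int:
    """Return the sniff rate found in a getsniffer report header."""
    match = _RATE_RE.search(report)
    if match is None:
        raise ValueError("no 'Sniffing rate' line found in report")
    return int(match.group(1))


def lookback_days(sniff_rate: int) -> int:
    """Map a sniff rate to the number of days of SP event log to search."""
    if sniff_rate == 5:
        return 30
    if 6 <= sniff_rate < 20:
        return 60
    # Assumption: a rate of exactly 20 is listed in both ranges of the
    # procedure, so the longer (more cautious) window is used for it.
    if 20 <= sniff_rate <= 30:
        return 120
    raise ValueError(f"sniff rate {sniff_rate} is not covered by the procedure")


# Header of the sample report from step c.
SAMPLE_REPORT = """\
VERIFY RESULTS FOR UNIT 1
Sniffing state:ENABLED
Sniffing rate(100 ms/IO):5
Background verify priority:ASAP
"""

if __name__ == "__main__":
    rate = parse_sniff_rate(SAMPLE_REPORT)
    print(f"sniff rate {rate}: search the last {lookback_days(rate)} days")
```

For an array that still carries the Release 11 default of 30, `lookback_days(30)` returns 120, which matches the longest window in step d.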

.3

Path B Procedure:

a. Stop and prevent ALL I/O to the array.

b. Disable and zero write cache on the array for NDUs designated as offline in the table

in the prior section.

View and note all write cache settings under Array Properties. You will need to reset them later to the same settings. Disable the write cache in the Array Properties Cache tab. When the cache status has changed from disabling to disabled, zero the memory assigned to write cache in the Array Properties Memory tab. The memory cannot be changed to 0 MB until the cache is disabled.

Confirm that the write cache is completely disabled and zeroed by using Navisphere CLI to check the cache status. Use the CLI command:

navicli -h <SP_IP_address> getcache

or

naviseccli -h <SP_IP_address> getcache

Ensure that the CLI reports all caching states as DISABLED and all write cache sizes as 0 MB before continuing.

c. If the array is not a CDL (CLARiiON Disk Library), unbind all ATA hot spares (the RAID

group that held the hot spare can remain).

d. Confirm that there are no ATA drives with a current Status of stuck in power-up or slot

empty when a drive is installed. If there are, remove them by backing them out of their

fully inserted position, leaving them in the enclosure slot to be reinserted later.

e. Return to the previous module that brought you here, to complete the NDU procedure.

Return here after the NDU to determine if ATA FRUMON code has been updated

successfully prior to re-enabling write cache and allowing I/O.

.4

Following the NDU:

WARNING: Write cache must not be re-enabled, hot spares must not be rebound, and host I/O

must not be allowed until the FRUMON code update has been confirmed.

a. Run the following Navisphere CLI commands to confirm that the BCCs now contain the

new FRUMON code:

navicli -h <SPA IP address> getcrus -lccreva -lccrevb

or

naviseccli -h <SPA IP address> getcrus -lccreva -lccrevb

navicli -h <SPB IP address> getcrus -lccreva -lccrevb

or

naviseccli -h <SPB IP address> getcrus -lccreva -lccrevb

This will report status of all LCCs and BCCs including the FRUMON revision listed as

Revision. ATA enclosures going to this new version should be at Revision 1.53.

b. Confirm that all ATA LCCs have been updated to the new FRUMON code before

continuing. Below is an example of 1 chassis. A navicli getcrus command will

display all the DAE2 chassis, FC and ATA.

DAE2-ATA Bus 1 Enclosure 1

Bus 1 Enclosure 1 Fan A State: Present

Bus 1 Enclosure 1 Fan B State: Present

Bus 1 Enclosure 1 Power A State: Present

Bus 1 Enclosure 1 Power B State: Present

Bus 1 Enclosure 1 LCC A State: Present

Bus 1 Enclosure 1 LCC B State: Present

Bus 1 Enclosure 1 LCC A Revision: 1.53

Bus 1 Enclosure 1 LCC B Revision: 1.53

Bus 1 Enclosure 1 LCC A Serial #: SCN00041900684

Bus 1 Enclosure 1 LCC B Serial #: SCN00042000067

c. When the SP event logs report completion and you have confirmed that the LCCs have the new FRUMON code as seen above, you must also observe all LCCs and ATA drives for fault LEDs before considering this update complete.

d. If drives were pulled because they were in a state of stuck in power up or slot empty,

reinsert them now.

e. Rebind any hot spares which were unbound for this procedure.

f. Reset and re-enable write cache to its original settings if you previously disabled it.

g. You can now return to the procedure in the previous module that instructed you to come to this ATA module.
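The revision check in steps a and b above could be scripted roughly as below. This is a sketch under stated assumptions, not an EMC tool: the function name is hypothetical, and the line formats ("DAE2-ATA Bus n Enclosure n" as an enclosure header, "Bus n Enclosure n LCC A Revision: x" for the revision) are inferred from the single-chassis example in this document. It also assumes each enclosure header appears before that enclosure's LCC lines. FC enclosures are skipped because the 1.53 target applies only to ATA enclosures.

```python
import re

# Enclosure header as shown in the example, e.g. "DAE2-ATA Bus 1 Enclosure 1".
_ATA_HEADER_RE = re.compile(r"^DAE2-ATA Bus (\d+) Enclosure (\d+)$")
# LCC revision line, e.g. "Bus 1 Enclosure 1 LCC A Revision: 1.53".
_LCC_REV_RE = re.compile(r"^Bus (\d+) Enclosure (\d+) LCC ([AB]) Revision:\s*(\S+)$")


def ata_lccs_not_at(output: str, expected: str = "1.53"):
    """Return (bus, enclosure, lcc, revision) for ATA LCCs not at `expected`."""
    ata_enclosures = set()
    stale = []
    for raw_line in output.splitlines():
        line = raw_line.strip()
        header = _ATA_HEADER_RE.match(line)
        if header:
            ata_enclosures.add((int(header.group(1)), int(header.group(2))))
            continue
        rev = _LCC_REV_RE.match(line)
        if rev:
            location = (int(rev.group(1)), int(rev.group(2)))
            # Only ATA enclosures are expected to reach the new revision.
            if location in ata_enclosures and rev.group(4) != expected:
                stale.append((*location, rev.group(3), rev.group(4)))
    return stale


# The one-chassis example from this document.
SAMPLE_OUTPUT = """\
DAE2-ATA Bus 1 Enclosure 1
Bus 1 Enclosure 1 Fan A State: Present
Bus 1 Enclosure 1 Fan B State: Present
Bus 1 Enclosure 1 Power A State: Present
Bus 1 Enclosure 1 Power B State: Present
Bus 1 Enclosure 1 LCC A State: Present
Bus 1 Enclosure 1 LCC B State: Present
Bus 1 Enclosure 1 LCC A Revision: 1.53
Bus 1 Enclosure 1 LCC B Revision: 1.53
Bus 1 Enclosure 1 LCC A Serial #: SCN00041900684
Bus 1 Enclosure 1 LCC B Serial #: SCN00042000067
"""

if __name__ == "__main__":
    stale = ata_lccs_not_at(SAMPLE_OUTPUT)
    print("all ATA LCCs updated" if not stale else f"not updated: {stale}")
```

An empty result only covers the revision check. The fault LED inspection in step c still has to be done at the array.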

See the event log examples below.

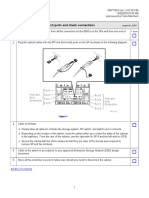

The following figure shows the completed upgrade on one ATA chassis. There will be an entry for each

chassis (ATA and FC):

The next figure is from an SPA event log that clearly shows that LCC Firmware Upgrades are

complete on all.

Detail of a FRUMON update:

Sequence of events:

a. FLARE will read the revision of the FRUMON code of each (DAE-2 and ATA chassis) FRUMON image file.

b. FLARE will initiate a request to do an upgrade of all LCCs that are lower revision.

c. FLARE will pause 8 minutes on SPB and 10 minutes on SPA to allow all parts of the NDU to complete.

d. Starting with the highest numbered enclosure on the highest numbered bus, FLARE will check the revision of FRUMON code on each LCC.

e. When an LCC is found with a revision lower than that included with the NDU package, FLARE will begin downloading the code to the LCC. This requires about 2 - 3 minutes to a DAE-2 (depending on distance from SP to LCC), and approximately a minute to an ATA DAE.

f. FLARE will issue the update command, wait for the LCC to reboot and come back online. This requires about one minute. The LCC is off line 9 to 15 seconds.

g. The process will pause approximately 85 seconds between upgrading ATA chassis and 40 seconds between DAE-2 chassis to allow I/O operations to catch up.

h. Continue to next enclosure.
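The traversal in steps d and e above can be illustrated with a short Python sketch. It is a model of the described ordering only, not FLARE code: the function names are hypothetical, revisions are assumed to be dotted numeric strings such as "1.53", and the enclosure data in the usage example is made up for illustration.

```python
def parse_revision(text):
    """Turn a dotted revision string such as '1.53' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def upgrade_plan(lcc_revisions, packaged):
    """Return the enclosures that need the upgrade, in the order described.

    lcc_revisions maps (bus, enclosure) to the current revision string.
    Enclosures are visited from the highest enclosure on the highest bus
    downward, and only those below the packaged revision are kept.
    """
    target = parse_revision(packaged)
    # Tuples sort by bus first, then enclosure, so reversing the sort gives
    # highest bus first and, within a bus, highest enclosure first.
    ordered = sorted(lcc_revisions, reverse=True)
    return [loc for loc in ordered if parse_revision(lcc_revisions[loc]) < target]


if __name__ == "__main__":
    # Hypothetical array: four enclosures, one already at the packaged revision.
    current = {(0, 0): "1.53", (0, 1): "1.40", (1, 0): "1.40", (1, 2): "1.40"}
    print(upgrade_plan(current, "1.53"))
```

With the hypothetical data shown, the plan lists Bus 1 Enclosure 2 first, then Bus 1 Enclosure 0, then Bus 0 Enclosure 1, and skips the enclosure that is already current.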

Timing Example:

Example 1 - If the ATA chassis is chassis 3 of 5 on Bus 1

All chassis higher on the loop than chassis 3 on Bus 1 will be done first.

10 minutes - to start.

3 minutes - to update Chassis 5

40 seconds - wait until next chassis starts

3 minutes - to do Chassis 4

40 seconds - to start the ATA chassis 3

1 minute - to update the ATA chassis

17 minutes total after the NDU completes, this ATA chassis could resume I/O.

Example 2 - ATA chassis is chassis 6 on Bus 0 and there are 5 DAE-2 chassis on Bus 1

10 minutes - to begin

18 minutes - 3 minutes to do each of the 5 chassis on Bus 1 with 40 seconds between

chassis updates

2 minutes - approximately 2 minutes to start and do the ATA chassis as it is the highest

chassis on Bus 0

30 minutes after the NDU completes, this ATA chassis could resume I/O.

Example 3 - ATA chassis is chassis 1 on Bus 0 and there are 240 drives total (8 chassis on

each backend bus)

10 minutes - to begin

29 minutes - 3 minutes to do each of the 8 DAE-2 chassis on Bus 1 with 40 seconds between chassis updates

21 minutes - to do the 5 DAE-2 chassis on Bus 0 with 40 seconds between each

1 minute - to complete the update of the ATA chassis

61 minutes after the NDU completes, this ATA chassis could resume I/O.
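The arithmetic behind these examples can be reproduced with a small Python estimate. It is a rough model built from the figures used in the examples (10 minutes to start on SPA, 3 minutes per DAE-2, 1 minute per ATA chassis) and the pauses given in the sequence of events. The names are hypothetical, every chassis ahead of the ATA chassis is assumed to need the upgrade, and the pause length is assumed to depend on the type of chassis just upgraded.

```python
START_DELAY_S = 10 * 60                  # pause on SPA before checking begins
UPDATE_S = {"DAE2": 3 * 60, "ATA": 60}   # per-chassis times used in the examples
PAUSE_AFTER_S = {"DAE2": 40, "ATA": 85}  # assumed pause after each chassis type


def seconds_until_done(chassis_types):
    """Estimate seconds from NDU completion until the last listed chassis is done.

    chassis_types lists the chassis in upgrade order, ending with the
    chassis of interest, e.g. ["DAE2", "DAE2", "ATA"].
    """
    total = START_DELAY_S
    last = len(chassis_types) - 1
    for index, kind in enumerate(chassis_types):
        total += UPDATE_S[kind]
        if index != last:
            # No pause is counted after the chassis of interest itself.
            total += PAUSE_AFTER_S[kind]
    return total


def as_min_sec(seconds):
    """Split a number of seconds into (minutes, seconds)."""
    return divmod(seconds, 60)


if __name__ == "__main__":
    # Example 1: chassis 5 and 4 (DAE-2) are upgraded before ATA chassis 3.
    print(as_min_sec(seconds_until_done(["DAE2", "DAE2", "ATA"])))
    # Example 2: five DAE-2 chassis on Bus 1, then the ATA chassis on Bus 0.
    print(as_min_sec(seconds_until_done(["DAE2"] * 5 + ["ATA"])))
```

For Example 1 the itemized durations sum to 18 minutes 20 seconds; the 17-minute total in the text appears to leave out the two 40-second waits. For Example 2 the model gives 29 minutes 20 seconds, close to the 30 minutes quoted, which allows about 2 minutes for the ATA chassis. Treat all of these as approximations.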
