Deep Reinforcement Learning for Flappy Bird

Kevin Chen

Stanford University

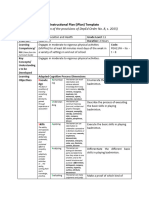

Abstract

Reinforcement learning is essential for training an agent to make smart decisions under uncertainty and to take small actions in order to achieve a higher overarching goal. In this project, we combined reinforcement learning and deep learning techniques to train an agent to play the game Flappy Bird. The challenge is that the agent only sees the pixels and the rewards, similar to a human player. Using just this information, it is able to successfully play the game at a human or sometimes super-human level.

Pipeline

[Figure: training pipeline. Initialize replay memory and DQN → new episode → next frame → update state and replay memory → choose action based on ε-greedy policy → minibatch from replay memory and update DQN → if crash, start a new episode; if not crash, go to the next frame.]
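The replay memory and the ε-greedy action choice in the pipeline can be sketched with the Python standard library alone. This is a minimal sketch, not the project's code: the names `ReplayMemory` and `epsilon_greedy`, the capacity, and uniform minibatch sampling are illustrative assumptions.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-size buffer of (state, action, reward, next_state, terminal) tuples."""

    def __init__(self, capacity):
        # Once full, deque(maxlen=...) discards the oldest transition on append.
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, terminal):
        self.buffer.append((state, action, reward, next_state, terminal))

    def sample(self, batch_size):
        # Uniformly random minibatch, drawn without replacement.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action with probability epsilon, otherwise argmax_a Q(s, a).

    q_values[a] is the network's Q-value for action a (0 = do nothing, 1 = flap).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


memory = ReplayMemory(capacity=3)
for t in range(5):
    memory.push(t, t % 2, 0.1, t + 1, False)
print(len(memory))                              # 3: the two oldest were evicted
print(epsilon_greedy([0.2, 0.5], epsilon=0.0))  # 1: greedy choice is "flap"
```

Sampling minibatches at random from the memory, rather than training on consecutive frames, breaks the strong correlation between successive transitions.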

Related Work

[1] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, D. Hassabis. Human-level control through deep reinforcement learning. Nature 518, 529-533 (2015).

[2] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, M. Riedmiller. Playing Atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602, 2013.

Feature extractor and Deep Q-Network (DQN)

[Figure: extract images from state → convert to grayscale → downsample image to 84x84 → n-channel image (n most recent frames) → Deep Q-Network (DQN) → Q-value for each action.]
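The feature-extractor stage (grayscale conversion, downsampling to 84x84, and stacking the n most recent frames into an n-channel image) can be sketched in NumPy. This is a minimal sketch under assumptions the poster does not state: BT.601 luminance weights, nearest-neighbour resizing, n = 4 (the value used in [1]), and repeating the first frame to fill the stack at the start of an episode. The raw frame size in the usage lines is hypothetical.

```python
from collections import deque

import numpy as np

# Assumed luminance weights (ITU-R BT.601); the poster only says "convert to grayscale".
GRAY_WEIGHTS = np.array([0.299, 0.587, 0.114], dtype=np.float32)


def to_grayscale(rgb):
    """Convert an (H, W, 3) RGB frame to an (H, W) float32 luminance image."""
    return rgb[..., :3].astype(np.float32) @ GRAY_WEIGHTS


def downsample(gray, size=84):
    """Nearest-neighbour resize of an (H, W) image to (size, size)."""
    h, w = gray.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return gray[rows[:, None], cols[None, :]]


class FrameStack:
    """Holds the n most recent preprocessed frames as an (n, 84, 84) state."""

    def __init__(self, n=4):
        self.n = n
        self.frames = deque(maxlen=n)

    def reset(self, rgb):
        # New episode: fill the whole stack with copies of the first frame.
        frame = downsample(to_grayscale(rgb))
        for _ in range(self.n):
            self.frames.append(frame)
        return self.state()

    def push(self, rgb):
        # Next frame: the oldest frame drops out of the deque automatically.
        self.frames.append(downsample(to_grayscale(rgb)))
        return self.state()

    def state(self):
        return np.stack(list(self.frames), axis=0)


frame = np.zeros((512, 288, 3), dtype=np.uint8)  # hypothetical raw frame size
stack = FrameStack(n=4)
print(stack.reset(frame).shape)  # (4, 84, 84)
```

Stacking several frames gives the network access to motion (for example, whether the bird is rising or falling), which a single still frame cannot convey.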

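Reinforcement Learning

State: sequence of frames and actions, $s_t = x_1, a_1, x_2, a_2, \ldots, x_{t-1}, a_{t-1}, x_t$

Action: Flap ($a = 1$) or Do nothing ($a = 0$)

Rewards: rewardAlive = +0.1, rewardPipe = +1.0, rewardDead = -1.0

Q-learning:

$$Q^*(s, a) = \mathbb{E}_{s' \sim \mathcal{E}}\left[r + \gamma \max_{a'} Q^*(s', a') \mid s, a\right]$$

$$Q_{i+1}(s, a) = \mathbb{E}_{s' \sim \mathcal{E}}\left[r + \gamma \max_{a'} Q_i(s', a') \mid s, a\right]$$

Loss:

$$L_i(\theta_i) = \mathbb{E}_{s, a \sim \rho(\cdot)}\left[\left(y_i - Q(s, a; \theta_i)\right)^2\right]$$

$$y_i = \mathbb{E}_{s' \sim \mathcal{E}}\left[r + \gamma \max_{a'} Q_{\text{target}}(s', a'; \theta_{\text{target}}) \mid s, a\right]$$

Here $\gamma$ is the discount factor, $\rho(\cdot)$ is the behaviour distribution over states and actions, and $\theta_{\text{target}}$ are the parameters of a separate target network.

Experimental Results

Average Score

| Game difficulty | Human | Baseline (flap every n) | DQN (easy) | DQN (medium) | DQN (hard) |
|---|---|---|---|---|---|
| Easy | Inf | Inf | Inf | Inf | Inf |
| Medium | Inf | Inf | 0.7 | Inf | Inf |
| Hard | 21 | 0.5 | 0.1 | 0.6 | 82.2 |

Highest Score Achieved

| Game difficulty | Human | Baseline (flap every n) | DQN (easy) | DQN (medium) | DQN (hard) |
|---|---|---|---|---|---|
| Easy | Inf | Inf | Inf | Inf | Inf |
| Medium | Inf | 11 | 2 | Inf | Inf |
| Hard | 65 | 1 | 1 | 1 | 215 |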
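The rewards, the target $y_i$, and the loss $L_i(\theta_i)$ from the Reinforcement Learning section can be sketched for a minibatch in NumPy. This is a minimal sketch under stated assumptions: the `reward` helper gives crashing priority over passing a pipe, terminal transitions use $y_i = r$ (as in [1]; the poster's formula does not show this case), and `gamma=0.99` is a hypothetical default, since the poster does not give $\gamma$.

```python
import numpy as np

# Reward values from the poster.
REWARD_ALIVE = 0.1
REWARD_PIPE = 1.0
REWARD_DEAD = -1.0


def reward(crashed, passed_pipe):
    """Per-frame reward; assumes a crash overrides passing a pipe on the same frame."""
    if crashed:
        return REWARD_DEAD
    if passed_pipe:
        return REWARD_PIPE
    return REWARD_ALIVE


def td_targets(rewards, next_q_target, terminals, gamma=0.99):
    """y_i = r + gamma * max_a' Q_target(s', a'); y_i = r for terminal transitions.

    rewards: (B,) floats; next_q_target: (B, num_actions) target-network outputs;
    terminals: (B,) booleans. gamma=0.99 is an assumed value.
    """
    best_next = next_q_target.max(axis=1)
    not_done = 1.0 - terminals.astype(np.float64)
    return rewards + gamma * best_next * not_done


def dqn_loss(q_values, actions, targets):
    """Mean squared error between y_i and Q(s, a; theta_i) for the actions taken."""
    q_taken = q_values[np.arange(len(actions)), actions]
    return np.mean((targets - q_taken) ** 2)


# One non-terminal and one terminal (crash) transition.
y = td_targets(np.array([0.1, -1.0]),
               np.array([[0.5, 2.0], [3.0, 1.0]]),
               np.array([False, True]), gamma=0.9)
loss = dqn_loss(np.array([[1.0, 1.9], [0.0, 0.0]]), np.array([1, 0]), y)
```

The targets are plain arrays here; in an autodiff framework they would be treated as constants, so gradients flow only through $Q(s, a; \theta_i)$ and not through the target network.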
