
Journal of Software Engineering and Applications, 2012, 5, 277-290

http://dx.doi.org/10.4236/jsea.2012.54033 Published Online April 2012 (http://www.SciRP.org/journal/jsea)

A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization

Zonghua Gu, Qingling Zhao

College of Computer Science, Zhejiang University, Hangzhou, China.

Email: {zgu, ada_zhao}@zju.edu.cn

Received January 1st, 2012; revised February 5th, 2012; accepted March 10th, 2012

ABSTRACT

Virtualization has gained great acceptance in the server and cloud computing arena. In recent years, it has also been widely applied to real-time embedded systems with stringent timing constraints. We present a comprehensive survey on real-time issues in virtualization for embedded systems, covering popular virtualization systems including KVM, Xen, L4 and others.

Keywords: Virtualization; Embedded Systems; Real-Time Scheduling

Copyright © 2012 SciRes. JSEA

1. Introduction

Platform virtualization refers to the creation of Virtual Machines (VMs), also called domains, guest OSes, or partitions, running on a physical machine managed by a Virtual Machine Monitor (VMM), also called a hypervisor. Virtualization technology enables concurrent execution of multiple VMs on the same hardware processor (single-core or multicore). It has been widely applied in the enterprise and cloud computing space. In recent years, it has been increasingly deployed in the embedded systems domain, including avionics systems, industrial automation, mobile phones, etc. Compared to the conventional application domain of enterprise systems, virtualization in embedded systems must place strong emphasis on issues like real-time performance, security and dependability.

A VMM can run either directly on the hardware (called bare-metal, or Type-1 virtualization), or on top of a host operating system (called hosted, or Type-2 virtualization). Another way to classify platform-level virtualization technologies is full virtualization vs para-virtualization. Full virtualization allows the guest OS to run on the VMM without any modification, while para-virtualization requires the guest OS to be modified by adding hypercalls into the VMM. Representative Type-2, full virtualization solutions include KVM, VirtualBox, Microsoft Virtual PC and VMware Workstation; representative Type-1, para-virtualization solutions include Xen, L4 and VMware ESX. There are some research attempts at constructing Type-1, full virtualization solutions, e.g., Kinebuchi et al. [1] implemented such a solution by porting the QEMU machine emulator to run as an application on the L4Ka::Pistachio microkernel; in turn, an unmodified guest OS can run on top of QEMU; Schild et al. [2] used Intel VT-d HW extensions to run an unmodified guest OS on L4. There are also Type-2, para-virtualization solutions, e.g., VMware MVP (Mobile Virtualization Platform) [3], as well as some attempts at adding para-virtualization features to Type-2 virtualization systems to improve performance, e.g., task-grain scheduling in KVM [4].

Since embedded systems often have stringent timing and performance constraints, virtualization for embedded systems must address real-time issues. We focus on real-time issues in virtualization for embedded systems and, due to space constraints, leave out certain topics that are more relevant to the server application space, including: power and energy-aware scheduling, dynamic adaptive scheduling, multicore scheduling on high-end NUMA (Non-Uniform Memory Access) machines, nested virtualization, etc. In addition, we focus on recent developments in this field, instead of presenting a historical perspective.

This paper is structured as follows: we discuss hard real-time virtualization solutions for safety-critical systems in Section 2; Xen-based solutions in Section 3, including hard real-time and soft real-time extensions to Xen; KVM-based solutions in Section 4; microkernel-based solutions represented by L4 in Section 5; other virtualization frameworks for embedded systems in Section 6; OS virtualization in Section 7; task-grain scheduling in Section 8; the Lock-Holder Preemption problem in Section 9; and finally, a brief conclusion in Section 10. (The topics of Sections 8 and 9 are cross-cutting issues


that are not specific to any virtualization approach, but we believe they are of sufficient importance to dedicate separate sections to cover them.)

2. Hard Real-Time Virtualization for Safety-Critical Systems

The ARINC 653 standard [5] defines a software architecture for spatial and temporal partitioning designed for safety-critical IMA (Integrated Modular Avionics) applications. It defines services such as partition management, process management, time management, and inter- and intra-partition communication. In the ARINC 653 specification for system partitioning and scheduling, each partition (virtual machine) is allocated a time slice, and partitions are scheduled in a TDMA (Time Division Multiple Access) manner. A related standard is the Multiple Independent Levels of Security (MILS) architecture, an enabling architecture for developing security-critical applications conforming to the Common Criteria security evaluation. The MILS architecture defines four conceptual layers of separation: separation kernel and hardware; middleware services; trusted applications; distributed communications. Authors from Lockheed Martin [6] presented a feasibility assessment toward applying the Microkernel Hypervisor architecture to enable virtualization for a representative set of avionics applications requiring multiple guest OS environments, including a mixture of safety-critical and non-safety-critical guest OSes. Several commercial RTOS products conform to the ARINC 653 standard, including LynuxWorks LynxOS-178, Green Hills INTEGRITY-178B, Wind River VxWorks 653, BAE Systems CsLEOS, and DDC-I DEOS. Many vendors also offer commercial virtualization products for safety-critical systems, many by adapting existing RTOS products. For example, LynuxWorks implemented a virtualization layer to host their LynxOS product, called the LynxSecure hypervisor, that supports MILS and ARINC 653. Wind River [7] provides a hypervisor product for both its VxWorks MILS and VxWorks 653 platforms, including support for multicore. Other similar products include: Green Hills INTEGRITY MultiVisor [8], Real-Time Systems GmbH Hypervisor [9], Tenasys eVM for Windows [10], National Instruments Real-Time Hypervisor [11], Open Synergy COQOS [12], Enea Hypervisor [13], SysGo PikeOS [14], and IBV Automation GmbH QWin. VanderLeest [15], from a company named DornerWorks, took a different approach by adapting the open-source Xen hypervisor to implement the ARINC 653 standard.

XtratuM [16] is a Type-1 hypervisor targeting safety-critical avionics embedded systems. It runs on the LEON3 SPARC V8 processor, a widely used CPU in space applications. It features temporal and spatial separation, an efficient scheduling mechanism, a low footprint with minimum computational overhead, efficient context switching of the partitions (domains), and deterministic hypervisor system calls. Zamorano et al. [17,18] ported the Ada-based Open Ravenscar Kernel (ORK+) to run as a partition on XtratuM, forming a software platform conforming to the ARINC 653 standard. Campagna et al. [19] implemented a dual-redundancy system on XtratuM to tolerate transient faults like Single-Event-Upset, common in the high-radiation space environment. Three partitions are executed concurrently: two of them run identical copies of the application software, and the third checks the consistency of their outputs. The OVERSEE (Open Vehicular Secure Platform) Project [20] aims to bring the avionics standard to automotive systems by porting FreeOSEK, an OSEK/VDX-compliant RTOS, as a paravirtualized guest OS running on top of the XtratuM hypervisor [21]. While most ARINC 653 compliant virtualization solutions are based on para-virtualization, Han et al. [22] presented an implementation of ARINC 653 based on Type-2, full virtualization architectures, including VMware and VirtualBox.

Next, we briefly mention some virtualization solutions for safety-critical systems that are not specifically designed for the avionics domain, and hence do not conform to the ARINC 653 standard. Authors from Indian Institute of Technology [23] developed SParK (Safety Partition Kernel), a Type-1 para-virtualization solution designed for safety-critical systems; an open-source RTOS, uC/OS-II, and a customized version of saRTL (stand-alone RT Linux) are ported as guest OSes on SParK. Authors from CEA (Atomic Energy Commission), France [24] developed PharOS, a dependable RTOS designed for automotive control systems featuring temporal and spatial isolation in the presence of a mixed workload of both time-triggered and event-triggered tasks. They adapted Trampoline, an OSEK/VDX-compliant RTOS, as a para-virtualized guest OS running on top of the PharOS host OS to form a Type-2 virtualization architecture. To ensure temporal predictability, Trampoline is run as a time-triggered task within PharOS. Authors from PUCRS, Brazil [25] developed Virtual-Hellfire Hypervisor, a Type-1 virtualization system based on the microkernel HellfireOS featuring spatial and temporal isolation for safety-critical applications. The target HW platform is the HERMES Network-on-Chip with MIPS-like processing elements [26].

3. Xen-Based Solutions

Cherkasova et al. [27] introduced and evaluated three CPU schedulers in Xen: BVT (Borrowed Virtual Time), SEDF (Simple Earliest Deadline First), and Credit. Since the BVT scheduler is now deprecated, we only discuss Credit and SEDF here.

The default scheduling algorithm in Xen is the Credit


Scheduler: it implements a proportional-share scheduling strategy where a user can adjust the CPU share for each VM. It also features automatic workload balancing of virtual CPUs (vCPUs) across physical cores (pCPUs) on a multicore processor. This algorithm guarantees that no pCPU will idle when there exists a runnable vCPU in the system. Each VM is associated with a weight and a cap. When the cap is 0, the VM can receive extra CPU time unused by other VMs, i.e., WC (Work-Conserving) mode; when the cap is nonzero (expressed as a percentage), it limits the amount of CPU time given to a VM to not exceed the cap, i.e., NWC (Non-Work-Conserving) mode. By default the credits of all runnable VMs are recalculated in intervals of 30 ms in proportion to each VM’s weight parameter, and the scheduling time slice is 10 ms, i.e., the credit of the running virtual CPU is decreased every 10 ms.

In the SEDF scheduler, each domain can specify a lower bound on the CPU reservations that it requests by specifying a tuple of (slice, period), so that the VM will receive at least slice time units in each period time units. A Boolean flag indicates whether the VM is eligible to receive extra CPU time. If true, then it is in WC (Work-Conserving) mode, and any available slack time is distributed in a fair manner after all the runnable VMs have received their specified slices. Unlike the Credit Scheduler, SEDF is a partitioned scheduling algorithm that does not allow VM migration across multiple cores, hence there is no global workload balancing on multicore processors.

Masrur et al. [28] presented improvements to Xen’s SEDF scheduler so that a domain can utilize its whole budget (slice) within its period even if it blocks for I/O before using up its whole slice (in the original SEDF scheduler, the unused budget is lost once a task blocks). In addition, certain critical domains can be designated as real-time domains and be given higher fixed-priority than other domains scheduled with SEDF. One limitation is that each real-time domain is constrained to contain a single real-time task. Masrur et al. [29] removed this limitation, and considered a hierarchical scheduling architecture in Xen, where both the Xen hypervisor and guest VMs adopt deadline-monotonic Fixed-Priority scheduling with a tuple of (period, slice) parameters (similar to SEDF, a VM is allowed to run for a maximum length of slice time units within each period), and proposed a method for selecting optimum time slices and periods for each VM in the system to achieve schedulability while minimizing the lengths of time slices.

The RT-Xen project [30,31] implemented a compositional and hierarchical scheduling architecture based on fixed-priority scheduling within Xen, along with extensions of the compositional scheduling framework [32] and periodic server design for fixed-priority scheduling. Similarly, Yoo et al. [33] implemented the Compositional Scheduling Framework [32] in Xen-ARM. Jeong et al. [34] developed PARFAIT, a hierarchical scheduling framework in Xen-ARM. At the bottom level (near the HW), SEDF is used to provide CPU bandwidth guarantees to Domain0 and real-time VMs; at the higher level, BVT (Borrowed Virtual Time) is used to schedule all non-real-time VMs to provide fair distribution of CPU time among them, by mapping all vCPUs in the non-real-time VMs to a single abstract vCPU scheduled by the underlying SEDF scheduler. Lee et al. [30], Xi et al. [31], Yoo et al. [33] and Jeong et al. [34] only addressed CPU scheduling issues, but did not consider I/O scheduling.

Xen has a split-driver architecture for handling I/O, where a special driver domain (Domain0) contains the backend driver, and user domains contain the frontend driver. Physical interrupts are first handled by the hypervisor, which then notifies the target guest domain with virtual interrupts, handled when the guest domain is scheduled by the hypervisor scheduler. Hong et al. [35] improved network I/O performance of Xen-ARM by performing dynamic load balancing of interrupts among the multiple cores by modifying the ARM11 MPCore interrupt distributor. In addition, each guest domain contains its own native device drivers, instead of putting all device drivers in Domain0. Yoo et al. [36] proposed several improvements to the Xen Credit Scheduler to improve interrupt response time, including: do not deschedule the driver domain when it disables virtual interrupts; exploit ARM processor support for FIQ, which has higher priority than regular IRQ, to support real-time I/O devices; ensure that the driver domain is always scheduled with the highest priority when it has pending interrupts.

Lee et al. [37] enhanced the soft real-time performance of the Xen Credit Scheduler. They defined a laxity value as the target scheduling latency that the workload desires, and preferentially scheduled the vCPU with the smallest laxity. Furthermore, they took cache affinity into account for real-time tasks when performing multicore load-balancing to prevent cache thrashing.

Yu et al. [38] presented real-time improvements to the Xen Credit Scheduler: real-time vCPUs are always given priority over non-real-time vCPUs, and can preempt any non-real-time vCPUs that may be running. When there are multiple real-time vCPUs, they are load-balanced across multiple cores to reduce their mutual interference.

Wang et al. [39] proposed to insert a workload monitor in each guest VM to monitor and calculate both the CPU and GPU resource utilization of all active guest VMs, and give priority to guest VMs that are GPU-intensive in the Xen Credit Scheduler, since those are likely the interactive tasks that need the shortest response time. Chen et al. [40] improved the Xen Credit Scheduler to


have better audio-playing performance. The real-time vCPUs are assigned a shorter time slice, say 1 ms, while the non-real-time vCPUs keep the regular time slice of 30 ms. The real-time vCPUs are also allowed to stay in the BOOST state for longer periods of time, while the regular Credit Scheduler only allows a vCPU to stay in the BOOST state for one time slice before demoting it to the UNDER state. Xen provides a mechanism to pin certain vCPUs on physical CPUs (pCPUs). Chen et al. [41] showed that when the number of vCPUs in a domain is greater than that of pinned pCPUs, the Xen Credit Scheduler may seriously impair the performance of I/O-intensive applications. To improve performance of pinned domains, they added a periodic runtime monitor in the VMM to monitor the length of time when each domain’s vCPUs go into the BOOST state, as well as the bus transactions with the help of the HW Performance Monitoring Unit, in order to distinguish between CPU-intensive and I/O-intensive domains. The former are assigned larger time slices, and the latter are assigned smaller time slices to improve their responsiveness.

Gupta et al. [42] presented a set of techniques for enforcing performance isolation among multiple VMs in Xen: XenMon measures per-VM resource consumption, including work done on behalf of a particular VM in Xen’s driver domains; a SEDF-DC (Debt Collector) scheduler periodically receives feedback from XenMon about the CPU consumed by driver domains for I/O processing on behalf of guest domains, and charges guest domains for the time spent in the driver domain on their behalf when allocating CPU time; ShareGuard limits the total amount of resources consumed in driver domains based on administrator-specified limits.

Ongaro et al. [43] studied the impact of the Xen VMM scheduler on performance using multiple guest VMs concurrently running different types of application workloads, i.e., different combinations of CPU-intensive, I/O-intensive, and latency-sensitive applications. Several optimizations are proposed, including disabling preemption for the privileged driver domain, and optimizing the Credit Scheduler by sorting VMs in the run queue based on remaining credits to give priority to short-running I/O VMs.

Govindan et al. [44] presented a communication-aware CPU scheduling algorithm and a CPU usage accounting mechanism for Xen. They modified the Xen SEDF scheduler to preferentially schedule I/O-intensive VMs over CPU-intensive VMs, by counting the number of packets flowing into or out of each VM, and selecting the VM with the highest count that has not yet consumed its entire slice during the current period.

Hu et al. [45] proposed a method for improving I/O performance of Xen on a multicore processor. The cores in the system are divided into 3 subsets: the driver core, which hosts the single driver domain and hence does not need VMM scheduling; fast-tick cores for handling I/O events, which employ preemptive scheduling with small time slices to ensure fast response to I/O events; and general cores for computation-intensive workloads, which use the default Xen Credit Scheduler for fair CPU sharing.

Lee et al. [46] improved performance of multimedia applications in Xen by assigning higher fixed-priorities to Domain0 and other real-time domains than to non-real-time domains, which are scheduled by the default credit scheduler.

4. KVM-Based Solutions

KVM is a Type-2 virtualization solution that uses Linux as the host OS, hence any real-time improvements to the Linux host OS kernel directly translate into real-time improvements to the KVM VMM. For example, real-time kernel patches like Ingo Molnar’s PREEMPT_RT patch can be applied to improve the real-time performance and predictability of the Linux host OS.

Kiszka [4] presented some real-time improvements to KVM. Real-time guest VM threads are given real-time priorities, including the main I/O thread and one or more vCPU threads, at the expense of giving lower priorities to threads in the host Linux kernel for various system-wide services. A paravirtualized scheduling interface was introduced to allow task-grain scheduling, by introducing two hypercalls for the guest VM to inform the host VMM about the priority of the currently running task in the VM, as well as when an interrupt handler has finished execution in the VM. Introduction of these hypercalls implies that the resulting system is no longer a strict full-virtualization system like conventional KVM. Zhang et al. [47] presented two real-time improvements to KVM with coexisting RTOS and GPOS guests: giving the guest RTOS vCPUs higher priority than GPOS vCPUs; and using CPU shielding to dedicate one CPU core to the RTOS guest and shield it from GPOS interrupts, by setting the CPU affinity parameter of both host OS processes and interrupts. Experimental results indicate that the RTOS interrupt response latencies are reduced. Based on the work of [47], Zuo et al. [48] presented additional improvements by adding two hypercalls that enable the guest OS to boost the priority of its vCPU when a high-priority task is started, e.g., an interrupt handler, and deboost it when the high-priority task is finished.

Cucinotta et al. [49] presented the IRMOS scheduler, which implements a hard reservation variant of CBS (Constant Bandwidth Server) on top of the EDF scheduler in Linux by extending the Linux CGroup interface. When applied in the context of KVM, inter-VM scheduling is CBS/EDF, while intra-VM task scheduling is Fixed-Priority. While Cucinotta et al. [49] only addressed


CPU scheduling, Cucinotta et al. [50] addressed I/O and Lin et al. [54] presented VSched, an user-space im-

networking issues by grouping both guest VM threads plementation of EDF-based scheduling algorithm in the

and interrupt handler threads in the KVM host OS kernel host OS of a Type-2 virtualization system VMware GSX

into the same CBS reservation, and over-provisioning the Server. The scheduler is a user-level process with highest

CPU budget assigned to each VM by an amount that is priority, and Linux SIGSTOP/SIGCONT signals are used

dependent on the overall networking traffic performed by to implement (optional) hard resource reservations, hence

VMs hosted on the same system. Cucinotta et al. [51] it does not require kernel modification, but carries large

improved the CBS scheduler in the KVM host OS for a runtime overhead due to excessive context-switches be-

mixed workload of both compute-intensive and network- tween the scheduler process and application processes

intensive workloads. In addition to the regular CPU CBS compared to RESCH. (Although VSched is not imple-

reservation of (Q, P), where the VM is guaranteed to re- mented on KVM, we place it here due to its similarity to

ceive budget Q time units of CPU time out of every P RESCH.)

time units, a spare CPU reservation of (Qs, Ps) is attached

to the VM and dynamically activated upon a new packet 5. MicroKernel-Based Solutions

arrival. The spare reservation (Qs, Ps) has much smaller

L4 is a representative microkernel operating system, ex-

budget and period than the regular reservation (Q, P) to

tended to be a Type-1 virtualization architecture. There

provide fast networking response time. Checconi et al.

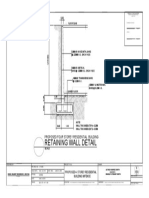

are multiple active variants of L4. Figure 1 shows the

[52] addressed real-time issues in live migration of KVM

evolution lineage of L4 variants.

virtual machines by using a probabilistic model of the

Authors from Avaya Labs [57] implemented a para-

migration process to select an appropriate policy for me-

virtualized Android kernel, and ran it alongside a para-

mory page migration, either based on a simple LRU

virtualized Linux kernel from OK Labs, L4Linux, on an

(Least-Recently Used) order, or a more complex one

ARM processor. Iqbal et al. [58] presented a detailed

based on observed page access frequencies in the past

comparison study between microkernel-based approach,

VM history.

represented by L4, and hypervisor-based approach, rep-

RESCH (REal-time SCHeduler framework) [53] is an

resented by Xen, to embedded virtualization, and con-

approach to implementing new scheduling algorithms

cluded that L4-based approaches have better perfor-

within Linux without modifying the kernel. In contrast to

mance and security properties. Gernot Heiser [59], foun-

typical user-level schedulers [54], RESCH consists of a

der of OKL4, argued for microkernel-based virtualization

loadable kernel module called RESCH core, and a user

for embedded systems. Performance evaluation of OKL4

library called RESCH library. In addition to the built-in

hypervisor on Beagle Board based on 500 MHz Cortex

Fixed-Priority (FP) scheduling algorithm in Linux, 4 new

A8 ARMv7 processor, running netperf benchmark shows

real-time FP-based scheduling algorithms have been im-

plemented with RESCH, including: FP-FF (partitioned that the TCP and UDP throughput degradation due to

scheduling with First-Fit heuristic for mapping tasks to virtualization is only 3% - 4%. Bruns et al. [60] and

processors), FP-PM (when a task cannot be assigned to

any CPU by first-fit allocation, the task can migrate

across multiple CPUs with highest priority on its allo-

cated CPU), G-FP (Global FP scheduling, where tasks

can freely migrate among different processors, and the

task with globally highest priority is executed), FP-US

(global FP scheduling, where tasks are classified into

heavy tasks and light tasks, based on their utilizations

factors. If the CPU utilization of a task is greater than or

equal to m/(3m−2), where m is the number of processors,

it is a heavy task. Otherwise, it is a light task. All heavy

tasks are statically assigned the highest priorities, while

light tasks have the original priorities). Other scheduling

algorithms can also be implemented. Asberg et al. [55]

applied RESCH to KVM to implement a real-time hier-

archical scheduling framework in a uni-processor envi-

ronment, where RESCH is used in both the host OS and

guest OS to implement FP scheduling. Asberg et al. [56]

used RESCH to implement 4 variants of multi-core glo-

bal hierarchical scheduling algorithms in Linux. Figure 1. L4 evolution lineage (from Acharya et al. [57]).


Lackorzynski et al. [61] performed performance evaluation of L4Linux. Using L4/Fiasco as the hypervisor, L4Linux as the guest OS, and an ARM-based Infineon X-GOLD618 processor for mobile phones, Bruns et al. [60] compared thread context-switching times and interrupt latencies of L4Linux to those of a stand-alone RTOS (FreeRTOS), and demonstrated that the L4-based system has relatively small runtime overhead, but requires significantly more cache resources than an RTOS, with cache contention as the main culprit for performance degradations.

One important application area of L4 is mobile phone virtualization. A typical usage scenario is co-existence of multiple OSes on the same HW platform, e.g., a “rich” OS like Android or Windows for handling user interface and other control-intensive tasks, and a “simple” real-time OS for handling performance and timing-critical tasks like baseband signal processing. Another goal is to save on hardware bill-of-material costs by having a single core instead of two processor cores. Open Kernel Labs implemented the first commercial virtualized phone in 2009, named the Motorola Evoke QA4, which runs two VMs on top of the L4 hypervisor on a single ARM processor: one is Linux for handling user interface tasks, and the other is BREW (Binary Runtime Environment for Wireless) for handling baseband signal processing tasks.

The company SysGo AG developed PikeOS, which evolved from an L4 microkernel and is designed for safety-critical applications. Kaiser et al. [62] described the scheduling algorithm in PikeOS, which is a combination of priority-based, time-driven and proportional share scheduling. Each real-time VM is assigned a fixed priority decided by the designer, hence a time-driven VM can have lower or higher priority values than an event-driven VM. Non-real-time VMs execute in the background when real-time VMs are not active. Yang et al. [63] developed a two-level Compositional Scheduling Architecture (CSA), using the L4/Fiasco microkernel as a VMM and L4Linux as a VM.

The NOVA Hypervisor [64] from Technical University of Dresden is a Type-1 hypervisor focusing on security issues that shares many similarities with L4, but it is a full-virtualization solution based on processor HW support that does not require modification of the guest OS.

6. Other Virtualization Frameworks for Embedded Systems

Authors from Motorola [65] argued for the use of Type-1 hypervisors as opposed to Type-2 hypervisors for mobile phone virtualization for their security benefits due to a small TCB (Trusted Computing Base). Representative mobile virtualization solutions include MVP (Mobile Virtualization Platform) from VMware [3,66], VLX from Red Bend [67,68], Xen-ARM from Samsung [69], etc. L4, VLX and Xen-ARM are all Type-1 hypervisors. VMware MVP is a Type-2 virtualization solution with Linux as both host and guest OSes, implemented on an ARMv7 processor. It adopts a lightweight para-virtualization approach: 1) the entire guest OS is run in CPU user-mode; most privileged instructions are handled via trap-and-emulate, e.g., privileged coprocessor access instructions mcr and mrc; other sensitive instructions are replaced with hypercalls; 2) all devices are para-virtualized; in particular, the paravirtualized TCP/IP differs from traditional para-virtualized networking by operating at the socket system call level instead of at the device interface level: when a guest VM calls a syscall to open a socket, the request is made directly to the offload engine in the host OS, which opens a socket and performs TCP/IP protocol processing within the host OS kernel.

SPUMONE (Software Processing Unit, Multiplexing ONE into two or more) [70,71] is a lightweight Type-1 virtualization software implemented on an SH-4A processor with the goal of running a GPOS (General-Purpose OS) like Linux and an RTOS like TOPPERS (an open source implementation of the ITRON RTOS standard [72]) on the same HW processor. It can either use fixed-priority scheduling, and always assign the RTOS higher priority than the GPOS, or adopt task-grain scheduling [73], where each task can be assigned a different priority to allow more fine-grained control, e.g., an important task in the GPOS can be assigned a higher priority than a less important task in the RTOS. Inter-OS communication is achieved with IPI (Inter-Processor Interrupts) and shared memory. Aalto [74] developed DynOS SPUMONE, an extension to SPUMONE to enable runtime migration of guest OSes to different cores on a 4-core RP1 processor from Renesas. Lin et al. [75] presented a redesign of SPUMONE as a multikernel architecture. (Multikernel means that a separate copy of the kernel or VMM runs on each core on a multicore processor.) Each processor core has its private local memory. To achieve better fault isolation, the VMM runs in the local memory, while the guest OSes run in the global shared main memory; both run in kernel mode. To enhance security, Li et al. [76] added a small trusted OS called xv6 to run a monitoring service to detect any integrity violations of the large and untrusted Linux OS. Upon detection of such integrity violations, the monitoring service invokes a recovery procedure to recover the integrity of the data structures, and if that fails, reboots Linux.

SIGMA System [77] assigns a dedicated CPU core to each OS, to build a multi-OS environment with native performance. Since there is no virtualization layer, guest OSes cannot share devices and interrupts among them, hence all interrupts are bound and directly delivered to

Copyright © 2012 SciRes. JSEA

A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization 283

their target OSes, e.g., for the Intel multiprocessor archi- [85], Linux VServer [86], Linux Containers LXC and

tecture, APIC (Advanced Peripheral Interrupt Controller) Solaris Zones. The key difference between OS virtualiza-

can be used to distribute I/O interrupts among processors tion and system-level virtualization is that the former

by interrupt numbers. only has a single copy of OS kernel at runtime shared

Gandalf [78,79] is a lightweight Type-1 VMM de- among multiple domains, while the latter has multiple

signed for resource-constrained embedded systems. It is OS kernels at runtime. As a result, OS virtualization is

called meso-virtualization, which is more lightweight and more lightweight, but can only run a single OS, e.g.,

requires less modification to the guest OS than typical Linux. Commercial products that incorporate OS virtua-

para-virtualization solutions. Similar to SIGMA, inter- lization technology include: Parallels Virtuozzo Con-

rupts are not virtualized, but instead delivered to each tainers [87] is a commercial version of the open-source

guest OS directly. OpenVZ software; the MontaVista Automotive Techno-

Yoo et al. [80] developed MobiVMM, a lightweight logy Platform [88] adopts Linux Containers in combina-

virtualization solution for mobile phones. It uses preemp- tion with SELinux (Security Enhanced Linux) to run

tive fixed-priority scheduling and assigns the highest multiple Linux or Android OSes on top of MontaVista

priority to the real-time VM (only a single real-time VM Linux host OS.

is supported), and uses pseudo-polling to provide low- Resource control and isolation in OS virtualization are

latency interrupt processing. Physical interrupts are stored often achieved with the Linux kernel mechanism CGroup.

For example, Linux VServer [86] enforces CPU isolation

temporarily inside the VMM, and delivered to the target

by overlaying a token bucket filter (TBF) on top of the

guest VM when it is scheduled to run. Real-time sche-

standard O(1) Linux CPU scheduler. Each VM has a

dulability test is used to determine if certain interrupts

token bucket that accumulates incoming tokens at a spe-

should be discarded to prevent overload. Yoo et al. [81]

cified rate; every timer tick, the VM that owns the run-

improved I/O performance of MobiVMM, by letting the

ning process is charged one token. The authors modified

non-real-time VM preempt the real-time VM whenever a

the TBF to provide fair sharing and/or work-conserving

device interrupt occurs targeting the non real-time VM.

CPU reservations. CPU capacity is effectively partitioned

Li et al. [82] developed Quest-V, a virtualized mul- between two classes of VMs: VMs with reservations get

tikernel for high-confidence systems. Memory virtualiza- what they have reserved, and VMs with shares split the

tion with shadow paging is used for fault isolation. It unreserved CPU capacity proportionally. For network

does not virtualize any other resources like CPU or I/O, I/O, the Hierarchical Token Bucket (HTB) queuing dis-

i.e., processing or I/O handling are performed within the cipline of the Linux Traffic Control facility is used to

kernels or user-spaces on each core without intervention provide network bandwidth reservations and fair service

from the VMM. vCPUs are the fundamental kernel ab- among VMs. Disk I/O is managed using the standard

straction for scheduling and temporal isolation. A hie- Linux CFQ (Completely-Fair Queuing) I/O scheduler,

rarchical approach is adopted where vCPUs are sche- which attempts to divide the bandwidth of each block

duled on pCPUs and threads are scheduled on vCPUs [83] device fairly among the VMs performing I/O to that de-

Quest-V defines two classes of vCPUs: Main vCPUs, vice.

configured as sporadic servers by default, are used to OS virtualization has been traditionally applied in ser-

schedule and track the pCPU usage of conventional ver virtualization, since it is essentially a name-space se-

software threads, while I/O vCPUs are used to account paration technique, but has limited support for I/O device

for, and schedule the execution of, interrupt handlers for virtualization (typically only disk and network devices).

I/O devices. Each device is assigned a separate I/O vCPU, Recently, Andrus et al. [89] developed Cells, an OS vir-

which may be shared among multiple main vCPUs. I/O tualization architecture for enabling multiple virtual An-

vCPUs are scheduled with a Priority-Inheritance Band- droid smart phones to run simultaneously on the same

width-Preserving (PIBS) server algorithm, where each physical cell phone in an isolated, secure manner. The

shared I/O vCPU inherits its priority from the main main technical challenges is to develop kernel-level and

vCPUs of tasks responsible for I/O requests, and is as- user-level device virtualization techniques for a variety

signed a certain CPU bandwidth share that should not be of handset devices like GPU, touch screen, GPS, etc.

exceeded.

8. Task-Grain Scheduling

7. OS Virtualization

Conventional VMM schedulers view each guest VM as

OS virtualization, also called container-based virtualiza- an opaque blackbox and unaware of individual tasks

tion, allows to partition an OS environment into multiple within each VM. This is true for both full-virtualization

domains with independent name spaces to achieve a cer- solutions like KVM and para-virtualization solutions like

tain level of security and protection between different Xen. This opacity is beneficial for system modularity,

domains. Examples include FreeBSD Jails [84], OpenVZ but can sometimes be a impediment to optimal perform-

Copyright © 2012 SciRes. JSEA

284 A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization

ance. Some authors have implemented task-grain sched- infer graybox knowledge about a VM by monitoring cer-

uling schemes, where the VMM scheduler has visibility tain HW events. Kim et al. [95] presented task-aware

into the internal task-grain details within each VM. This virtual machine scheduling for I/O Performance by in-

work can be further divided into two types: one approach troducing a partial boosting mechanism into the Xen

is to modify the guest OS to inform the VMM about its Credit Scheduler, which enables the VMM to boost the

internal tasks via hypercalls in a paravirtualization ar- priority of a vCPU that contains an inferred I/O-bound

chitecture; another approach is to let the VMM infer the task in response to an incoming event. The correlation

task-grain information transparently without guest OS mechanism for block and network I/O events is best-

modification in a full-virtualization architecture. effort and lightweight. It works by monitoring the CR3

register on x86 CPU for addressing the MMU, in order to

8.1. Approaches That Require Modifying the capture process context-switching events, which are cla-

Guest OS ssified into disjoint classes: positive evidence, negative

Augier et al. [90] implemented task-grain scheduling al- evidence, and ambiguity, to determine a degree of belief

gorithm within VirtualLogix VLX, by removing the on the I/O boundedness of tasks. Kim et al. [96] pre-

scheduler from each guest OS and letting the hypervisor sented an improved credit scheduler that transparently

handle scheduling of all tasks. estimates multimedia quality by inferring frame rates

KimD et al. [91] presented a guest-aware, task-grain from low-level hardware events, and dynamically adjusts

scheduling algorithm in Xen by selecting the next VM to CPU allocation based on the estimates as feedback to

be scheduled based on the highest-priority task within keep the video frame rate around a set point Desired

each VM and the I/O behavior of the guest VMs via in- Frame Rate. For memory-mapped display, the hypervi-

specting the I/O pending status. sor monitors the frequency of frame buffer writes in or-

Kiszka [4] developed a para-virtualized scheduling in- der to estimate the frame rates; for GPU-accelerated dis-

terface for KVM. Two hypercalls are added: one for let- play, the hypervisor monitors the rate of interrupts raised

ting the guest OS inform the VMM about the priority of by a video device, which is shown to exhibit good linear

currently running task in the guest OS, and the VMM correlation with frame rate.

schedules the guest VMs based on task priority informa- Tadokoro et al. [97] implemented the Monarch sched-

tion; another for the guest OS to inform the VMM that it uler in the Xen VMM, which works by monitoring and

has just finished handling an interrupt, and its priority manipulating the run queue and the process data structure

should be lowered to its nominal priority. in guest VMs without modifying guest OSes, using Vir-

Xia et al. [92] presented PaS (Preemption-aware Sche- tual Machine Intraspection (VMI) to monitor process

duling) with two interfaces: one to register VM preemp- execution in the guest VMs, and Direct Kernel Object

tion conditions, the other to check if a VM is preempting. Manipulation (DKOM) to modify the scheduler queue

VMs with incoming pending I/O events are given higher data structure in the guest VMs.

priorities and allowed to preempt the currently running

VM scheduled by the Credit Scheduler. Instead of adding 9. The Lock-Holder Preemption Problem

hypercalls, the PaS interface is implemented by adding Spinlocks are used extensively in the Linux kernel for

two fields in a data structure which is shared between the synchronizing access to shared data. When one thread is

hypervisor and guest VM. in its critical section holding a spinlock, another thread

Wang et al. [93] implemented task-grain scheduling trying to acquire the same spinlock to enter the critical

within Xen by adding a hypercall to inform the VMM section will busy-wait (spin in a polling loop and con-

about the timing attributes of a task at its creation time, tinuously check the locking condition) until the lock-

and using either fixed-priority or EDF to schedule the holding thread releases the spinlock. Since the typical

tasks directly, bypassing the guest VM scheduler. critical sections protected by the spinlocks are kept very

Kim et al. [94] presented a feedback control architec- short, the waiting times are acceptable for today’s Linux

ture for adaptive scheduling in Xen, by adding two hy- kernels, especially with real-time enhancements like the

percalls for the guest VMs to increase or decrease the PREEMPT_RT patch. However, in a virtualized envi-

CPU budget (slice size) requested per period based on ronment, Lock Holder Preemption (LHP) may cause ex-

CPU utilization and deadline miss ratios in the guest cessively long waiting times for guest OSes configured

VMs. with SMP support with multiple vCPUs in the same

guest VM. A VM is called overcommitted when its num-

8.2. Approaches That Do Not Require ber of vCPUs exceeds the available number of physical

Modifying the Guest OS cores. Since the VMM scheduler is not aware of any

To avoid modifying the guest OS, the VMM attempts to spinlocks held within the guest OSes, when thread A in a

Copyright © 2012 SciRes. JSEA

A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization 285

guest OS holding a spinlock being waited for by an- mentation is based on a L4-ka microkernel-based virtu-

other thread B on a different vCPU in the same guest OS alization system with a paravirtualized Linux as guest OS,

is preempted by the VMM scheduler to run another although their techniques do not depend on the specific

vCPU, possibly in a different guest OS, Thread B will virtualization software.

busy-wait for a long time until the VMM scheduler Friebel [99] extended the spinlock waiting code in the

switches back to this guest OS to allow thread A to con- guest OS to issue a yield() hypercall when a vCPU has

tinue execution and release the spinlock. When LHP oc- been waiting longer than a certain threshold of 216 cycles.

curs, the waiting times can be tens of milliseconds in- Upon receiving the hypercall, the VMM schedules an-

stead of the typical tens of microseconds in the Linux other VCPU of the same guest VM, giving preference to

kernel without virtualization. vCPUs preempted in kernel mode because they are likely

There are two general approaches to addressing the to be preempted lock-holders.

LHP problem: preventing it from occurring, or mitigating Coscheduling, also called Gang Scheduling, is a com-

it after detecting that it has occurred. mon technique for preventing the LHP problem, where

Pause Loop Exit (PLE) is an HW mechanism built-in all vCPUs of a VM are simultaneously scheduled on phy-

the latest Intel processors for detecting guest spin-lock. It sical processors (PCPUs) for an equal time slice. Strict

can be used by the VMM software to mitigate LHP after co-scheduling may lead to performance degradation due

detection. It enables the VMM to detect spin-lock by to fragmentation of processor availability. To improve

monitoring the execution of PAUSE instructions in the runtime efficiency, VMWare ESX 4.x implements re-

VM through the PLE_Gap and PLE_Window values. laxed co-scheduling to allow vCPUs to be co-scheduled

PLE_Gap denotes upper bound on the amount of time with slight skews. It maintain synchronous progress of

between two successive executions of PAUSE in a loop. vCPU siblings by deferring the advanced vCPUs until

PLE_Window denotes upper bound on the amount of the slower ones catch up. Several authors developed va-

time a guest is allowed to execute in a PAUSE loop. If rious versions of relaxed co-scheduling, as we discuss

the amount of time between this execution of PAUSE next.

and previous one exceeds the PLE_Gap, then processor Jiang et al. [100] presented several optimizations to

consider this PAUSE belongs to a new loop. Otherwise, the CFS (Completely Fair Scheduler) in KVM to help

processor determines the total execution time of this loop prevent or mitigate LHP. Three techniques were pro-

(since 1st PAUSE in this loop), and triggers a VM exit if posed for LHP prevention: 1) add two hypercalls that

total time exceeds PLE_Window. According to KVM’s enable the guest VM to inform the host VMM that one of

source code, PLE_Gap is set to 41 and PLE_Window is its vCPU threads is currently holding a spinlock or just

4096 by default, which means that this approach can de- released a spinlock. The host VMM will not de-schedule

tect a spinning loop that lasts around 55 microseconds on a vCPU that is currently holding a spinlock; 2) add two

a 3GHz CPU. AMD processors has similar HW support hypercalls for one vCPU thread to boost its priority when

called Pause-Filter-Count. (Since these HW mechanisms acquiring a spinlock, and resume its nominal priority

are relatively new, the techniques discussed below ge- when releasing it; 3) implement approximate co-sched-

nerally do not make use of them.) uling by temporarily changing a VM’s scheduling policy

Uhlig et al. [98] presented techniques to address LHP from SCHED_OTHER (default CFS scheduling class) to

for both fully virtualized and para-virtualized environ- SCHED_RR (real-time scheduling class) when the VM

ments. In the paravirtualization case, the guest OS is has high degree of internal concurrency, and changing it

modified to add a delayed preemption mechanism: before back a short period of time later. Two techniques were

acquiring a spinlock, the guest OS invokes a hypercall to proposed for LHP mitigation after it occurs: 1) Add a

indicate to the VMM that it should not be preempted for hypercall yield() in the vCPU thread source code to let it

the next n microseconds, where n is a configurable pa- give up the CPU when it has been spin-waiting for a

rameter depending how long the guest OS expects to be spinlock for too long, by adding a counter in the source

holding a lock. After releasing the lock, the guest OS in- code to record the spin-waiting time; 2) implement co-

dicates its willingness to be preempted again. In the full descheduling by slowing down or stopping all other

virtualization case where the guest OS cannot be modi- vCPUs of the same VM if one of its vCPUs is desched-

fied, the VMM transparently monitors guest OS switches uled, since if a vCPU thread requires a spinlock, it is

between user space and kernel space execution, and pre- possible for the other vCPUs to require the same spinlock

vents a vCPU from being preempted when it is running later.

in kernel space and may be holding kernel spinlocks. Weng et al. [101] presented a hybrid scheduling frame-

(The case when a user-level application is holding a spin- work in Xen by distinguishing between two types of

lock is not handled, and it is assumed that the application VMs: the high-throughput type and the concurrent type.

itself is responsible for handling this case.) The imple- A VM is set as the concurrent type when the majority of

Copyright © 2012 SciRes. JSEA

286 A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization

its workload is concurrent applications in order to reduce of GPOS issues a system call, or when an interrupt is

the cost of synchronization, and otherwise set as the raised to the vCPU of GPOS, the vCPU of GPOS is im-

high-throughput type by default. Weng et al. [102] im- mediately migrated away from to another core that does

plemented dynamic co-scheduling by adding vCPU Re- not host any RTOS vCPUs, thus achieving the effect of

lated Degree (VCRD) to reflect the degree of related- co-scheduling on different cores. This is a pessimistic

ness of vCPUs in a VM. When long waiting times occur approach, since the migration takes place even when

in a VM due to spinlocks, the VCRD of the VM is set as there may not be any lock contention.

HIGH, and all vCPUs should be co-scheduled; otherwise,

the VCRD of the VM is set as LOW, and vCPUs can be 10. Conclusion

scheduled asynchronously. A monitoring module is added

In this paper, we have presented a comprehensive survey

in each VM to detect long waiting times due to spinlocks,

on real-time issues in embedded systems virtualization.

and uses a hypercall to inform the host VMM about the

We hope this article can serve as a useful reference to

VCRD values of the guest VM.

researchers in this area.

Bai et al. [103] presented a task-aware co-scheduling

method, where the VMM infers gray-box knowledge

11. Acknowledgements

about the concurrency and synchronization of tasks with-

in guest VMs. By tracking the states of tasks within guest This work was supported by MoE-Intel Information Te-

VMs and combining this information with states of chnology Special Research Fund under Grant Number

vCPUs, they are able to infer occurrence of spinlock wait- MOE-INTEL-10-02; NSFC Project Grant #61070002;

ing without modifying the guest OS. the Fundamental Research Funds for the Central Univer-

Yu et al. [104] presented two approaches: Partial Co- sities.

scheduling and Boost Co-scheduling, both implemented

within the Xen credit scheduler. Partial Co-scheduling

REFERENCES

allows multiple VMs to be co-scheduled concurrently,

but only raises co-scheduling signals to the CPUs whose [1] Y. Kinebuchi, H. Koshimae and T. Nakajima, “Con-

run-queues contain the corresponding co-scheduled VCPUs, structing Machine Emulator on Portable Microkernel,”

Proceedings of the 2007 ACM Symposium on Applied

while other CPUs remain undisturbed; Boost Co-sched- Computing, 11-15 March 2007, Seoul, 2007, pp. 1197-

uling promotes the priorities of vCPUs to be co-sched- 1198. doi:10.1145/1244002.1244261

uled, instead of using co-scheduling signals to force them [2] H. Schild, A. Lackorzynski and A. Warg, “Faithful Virtu-

to be co-scheduled. They performed performance com- alization on a Realtime Operating System,” 11th Real-

parisons with hybrid co-scheduling [101] and co-de-sch- Time Linux Workshop, Dresden, 28-30 September 2009.

eduling [100] and showed clear advantages. [3] K. Barr, P. P. Bungale, S. Deasy, V. Gyuris, P. Hung, C.

Sukwong et al. [105] presented a balance scheduling Newell, H. Tuch and B. Zoppis, “The VMware Mobile

algorithm which attempts to balance vCPU siblings in Virtualization Platform: Is That a Hypervisor in Your

the same VM on different physical CPUs without forcing Pocket?” Operating Systems Review, Vol. 44, No. 4, 2101,

pp. 124-135. doi:10.1145/1899928.1899945

the vCPUs to be scheduled simultaneously by dynami-

[4] J. Kiszka, “Towards Linux as a Real-Time Hypervisor,”

cally setting CPU affinity of vCPUs so that no two vCPU

11th Real-Time Linux Workshop, Dresden, 28-30 September

siblings are in the same CPU run-queue. Balance sched- 2009.

uling is shown to significantly improve application per-

[5] Airlines Electronic Engineering Committee, “ARINC

formance without the complexity and drawbacks found 653—Avionics Application Software Standard Interface,”

in co-scheduling (CPU fragmentation, priority inversion 2003

and execution delay). Balance scheduling is similar to [6] T. Gaska, B. Werner and D. Flagg, “Applying Virtualiza-

relaxed co-scheduling in VMWare ESX 4.x [106], but it tion to Avoinics Systems—The Integration Challenge,”

never delays execution of a vCPU to wait for another Proceedings of the 29th Digital Avionics Systems Con-

vCPU in order to maintain synchronous progress of ference of the IEEE/AIAA, Salt Lake City, 3-7 October

vCPU siblings. It differs from the approximate co-sche- 2010, pp. 1-19. doi:10.1109/DASC.2010.5655297

duling algorithm in [100] in that it does not change [7] Wind River Hypervisor Product Overview.

vCPU scheduling class or priorities. http://www.windriver.com/products/hypervisor

SPUMONE [71] adopts a unique runtime migration [8] INTEGRITY Multivisor Datasheet. http://www.ghs.com

approach to address the LHP (Lock-Holder Preemption) [9] Real-Time Systems GmbH.

problem by migrating a vCPU away from a core where http://www.real-time-systems.com

LHP may occur with another co-located vCPU: if vCPUs [10] Tenasys eVM for Windows.

of a GPOS and an RTOS share the same pCPU core on a http://www.tenasys.com/products/evm.php

multicore processor, and the thread running on the vCPU [11] Quick Start Guide, NI Real-Time Hypervisor.


http://www.ni.com/pdf/manuals/375174b.pdf. doi:10.1109/ISQED.2011.5770725

[12] OpenSynergy COQOS. [26] F. Moraes, N. Calazans, A. Mello, L. Möller and L. Ost,

http://www.opensynergy.com/en/Products/COQOS “HERMES: An Infrastructure for Low Area Overhead

[13] Enea Hypervisor. Packet-Switching Networks on Chip,” Integration, the VLSI

http://www.enea.com/software/products/hypervisor Journal, Vol. 38, No. 1, 2004, pp. 69-93.

doi:10.1106/j.vlsi.2004.03.003

[14] SysGO GmbH. http://www.sysgo.com

[27] L. Cherkasova, D. Gupta and A. Vahdat, “Comparison of

[15] S. H. VanderLeest, “ARINC 653 Hypervisor,” Proceed- the Three CPU Schedulers in Xen,” ACM SIGMETRICS

ings of 29th Digital Avionics Systems Conference of IE- Performance Evaluation Review, Vol. 35, No. 2, 2007, pp.

EE/AIAA, Salt Lake City, 3-7 October 2010, pp. 1-20. 42-51. doi:10.1145/1330555.1330556

doi:10.1109/DASC.2010.5655298

[28] A. Masrur, S. Drössler, T. Pfeuffer and S. Chakraborty,

[16] M. Masmano, I. Ripoll, A. Crespo and J. J. Metge “Xtra- “VM-Based Real-Time Services for Automotive Control

tuM: A Hypervisor for Safety Critical Embedded Sys- Applications,” Proceedings of the 2010 IEEE 16th Inter-

tems,” Proceedings of Real-Time Linux Workshop, Dres- national Conference on Embedded and Real-Time Com-

den, 28-30 September 2009. puting Systems and Applications, Macau, 23-25 August

[17] J. Zamorano and J. A. de la Puente, “Open Source Im- 2010, pp. 218-223. doi:10.1109/RTCSA.2010.38

plementation of Hierarchical Scheduling for Integrated [29] A. Masrur, T. Pfeuffer, M. Geier, S. Drössler and S.

Modular Avionics,” Proceedings of Real-Time Linux Work-

Chakraborty, “Designing VM Schedulers for Embedded

shop, Nairobi, 25-27 October 2010.

Real-Time Applications,” Proceedings of the 7th IEEE/AC-

[18] Á. Esquinas, J. Zamorano, J. Antonio de la Puente, M. M/IFIP International Conference on Hardware/Software

Masmano, I. Ripoll and A. Crespo, “ORK+/XtratuM: An Codesign and System Synthesis, Taipei, 9-14 October 2011,

Open Partitioning Platform for Ada,” Lecture Notes in pp. 29-38. doi:10.1145/2039370.2039378

Computer Science, Vol. 6652, pp. 160-173.

[30] J. Lee, S. S. Xi, S. J. Chen, L. T. X. Phan, C. Gill, I. Lee,

doi:10.1007/978-3-642-21338-0_12

C. Y. Lu and O. Sokolsky, “Realizing Compositional Sch-

[19] S. Campagna, M. Hussain and M. Violante, “Hypervi- eduling through Virtualization,” Technical Report, Uni-

sor-Based Virtual Hardware for Fault Tolerance in COTS versity of Pennsylvania, Philadelphia, 2011.

Processors Targeting Space Applications,” Proceedings

of the 2010 IEEE 25th International Symposium on De- [31] S. S. Xi, J. Wilson, C. Y. Lu and C. Gill, “RT-Xen: To-

fect and Fault Tolerance in VLSI Systems, Kyoto, 6-8 wards Real-Time Hypervisor Scheduling in Xen,” Pro-

October 2010, pp. 44-51. doi:10.1109/DFT.2010.12 ceedings of the 2011 International Conference on Em-

bedded Software, Taipei, 9-14 October 2011, pp. 39-48.

[20] N. McGuire, A. Platschek and G. Schiesser, “OVER-

SEE—A Generic FLOSS Communication and Applica- [32] I. Shin and I. Lee, “Compositional Real-Time Scheduling

tion Platform for Vehicles,” Proceedings of 12th Real- Framework with Periodic Model,” ACM Transactions on

Time Linux Workshop, Nairobi, 25-27 October 2010. Embedded Computing Systems, Vol. 7, No. 3, 2008.

doi:10.1145/1347375.1347383

[21] A. Platschek and G. Schiesser, “Migrating a OSEK Run-

time environment to the OVERSEE Platform,” Proceed- [33] S. Yoo, Y.-P. Kim and C. Yoo, “Real-time Scheduling in

ings of 13th Real-Time Linux Workshop, Prague, 22-22 a Virtualized CE Device,” Proceedings of 2010 Digest of

October 2011. Technical Papers International Conference on Consumer

Electronics (ICCE), Las Vegas, 9-13 January 2010, pp.

[22] S. Han and H. W. Jin, “Full Virtualization Based ARINC 261-262. doi:10.1109/ICCE.2010.5418991

653 Partitioning,” Proceedings of the 30th Digital Avion-

ics Systems (DASC) Conference of the IEEE/AIAA, Seat- [34] J.-W. Jeong, S. Yoo and C. Yoo, “PARFAIT: A New Sch-

tle, 16-20 October 2011, pp. 1-11. eduler Framework Supporting Heterogeneous Xen-ARM

doi:10.1109/DASC.2011.6096132 Schedulers,” Proceedings of 2011 Consumer Communi-

cations and Networking Conference of the IEEE CCNC,

[23] S. Ghaisas, G. Karmakar, D. Shenai, S. Tirodkar and K. Las Vegas, 9-12 January 2011, pp. 1192-1196.

Ramamritham, “SParK: Safety Partition Kernel for Inte- doi:10.1109/CCNC.2011.5766431

grated Real-Time Systems,” Lecture Notes in Computer

Science, Vol. 6462, 2010, pp. 159-174. [35] C.-H. Hong, M. Park, S. Yoo, C. Yoo and H. D. Consid-

doi:10.1007/978-3-642-17226-7_10 ering, “Hypervisor Design Considering Network Per-

formance for Multi-Core CE Devices,” Proceedings of

[24] M. Lemerre, E. Ohayon, D. Chabrol, M. Jan and M.-B.

2010 Digest of Technical Papers International Confer-

Jacques, “Method and Tools for Mixed-Criticality Real-

ence on Consumer Electronics of the IEEE ICCE, Las

Time Applications within PharOS,” Proceedings of IEEE

Vegas, 9-13 January 2010, pp. 263-264.

International Symposium on Object/Component/Service-Ori-

doi:10.1109/ICCE.2010.5418708

ented Real-Time Distributed Computing Workshops, New-

port Beach, 28-31 March 2011, pp. 41-48. [36] S. Yoo, K.-H. Kwak, J.-H. Jo and C. Yoo, “Toward Un-

doi:10.1109/ISORCW.2011.15 der-Millisecond I/O Latency in Xen-ARM,” The 2nd ACM

[25] A. Aguiar and F. Hessel, “Virtual Hellfire Hypervisor: SIGOPS Asia-Pacific Workshop on Systems, Shanghai,

Extending Hellfire Framework for Embedded Virtualiza- 11-12 July 2011, p. 14.

tion Support,” Proceedings of the 12th International Sym- [37] M. Lee, A. S. Krishnakumar, P. Krishnan, N. Singh and S.

posium on Quality Electronic Design (ISQED), Santa Yajnik, “Supporting Soft Real-Time Tasks in the Xen

Clara, 14-16 March 2011, pp. 129-203. Hypervisor,” Proceedings of the 6th ACM SIGPLAN/SIGO-

Copyright © 2012 SciRes. JSEA

288 A State-of-the-Art Survey on Real-Time Issues in Embedded Systems Virtualization

PS International Conference on Virtual Execution Envi- tion Engineering and Computer Science (ICIECS), Wu-

ronments, Pittsburgh, March 2010, pp. 97-108. han, 25-26 December 2010, pp. 1-4.

doi:10.1145/1837854.1736012 doi:10.1109/ICIECS.2010.5678357

[38] P. J. Yu, M. Y. Xia, Q. Lin, M. Zhu, S. Gao, Z. W. Qi, K. [49] T. Cucinotta, G. Anastasi and L. Abeni, “Respecting Tem-

Chen and H. B. Guan, “Real-Time Enhancement for Xen poral Constraints in Virtualised Services,” Proceedings of

Hypervisor,” Proceedings of the 8th International Confer- the 33rd Annual IEEE International Conference on Com-

ence on Embedded and Ubiquitous Computing of the puter Software and Applications, Seattle, Washington DC,

IEEE/IFIP, Hong Kong, 11-13 December 2010, pp. 23-30. 20-24 July 2009. pp. 73-78.

[39] Y. X. Wang, X. G. Wang and H. Guo, “An Optimized doi:10.1109/COMPSAC.2009.118

Scheduling Strategy Based on Task Type In Xen,” Lec- [50] T. Cucinotta, D. Giani, D. Faggioli and F. Checconi, “Pro-

ture Notes in Electrical Engineering, Vol. 123, 2011, pp. viding Performance Guarantees to Virtual Machines Us-

515-522. doi:10.1007/978-3-642-25646-2_67 ing Real-Time Scheduling,” Proceedings of Euro-Par

[40] H. C. Chen, H. Jin, K. Hu and M. H. Yuan, “Adaptive Workshops, Naples, 31 August-3 September 2010, pp. 657-

Audio-Aware Scheduling in Xen Virtual Environment,” 664.

Proceedings of the International Conference on Computer [51] T. Cucinotta, F. Checconi and D. Giani, “Improving Re-

Systems and Applications (AICCSA) of the IEEE/ACS, sponsiveness for Virtualized Networking under Intensive

Hammamet, 16-19 May 2010, pp. 1-8. Computing Workloads,” Proceedings of the 13th Real-Time

doi:10.1109/AICCSA.2010.5586974 Linux Workshop, Prague, 20-22 October 2011.

[41] H. C. Chen, H. Jin and K. Hu, “Affinity-Aware Propor- [52] F. Checconi, T. Cucinotta and M. Stein, “Real-Time Is-

tional Share Scheduling for Virtual Machine System,” sues in Live Migration of Virtual Machines,” Proceedings

Proceedings of the 9th International Conference on Grid of Euro-Par Workshops, Delft, 29-28 August 2009, pp. 454-

and Cooperative Computing (GCC), Nanjing, 1-5 No- 466.

vember 2010, pp. 75-80. doi:10.1109/GCC.2010.27

[42] D. Gupta, L. Cherkasova, R. Gardner and A. Vahdat, “Enforcing Performance Isolation across Virtual Machines in Xen,” Proceedings of the ACM/IFIP/USENIX 2006 International Conference on Middleware, Melbourne, 27 November-1 December 2006, pp. 342-362.

[43] D. Ongaro, A. L. Cox and S. Rixner, “Scheduling I/O in Virtual Machine Monitors,” Proceedings of the 4th ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environments, Seattle, 5-7 March 2008, pp. 1-10. doi:10.1145/1346256.1346258

[44] S. Govindan, J. Choi, A. R. Nath, A. Das, B. Urgaonkar, and A. Sivasubramaniam, “Xen and Co.: Communication-Aware CPU Management in Consolidated Xen-Based Hosting Platforms,” IEEE Transactions on Computers, Vol. 58, No. 8, 2009, pp. 1111-1125. doi:10.1109/TC.2009.53

[45] Y. Y. Hu, X. Long, J. Zhang, J. He and L. Xia, “I/O Scheduling Model of Virtual Machine Based on Multi-Core Dynamic Partitioning,” Proceedings of the 19th ACM International Symposium on High Performance Distributed Computing, Chicago, 21-25 June 2010, pp. 142-154. doi:10.1145/1851476.1851494

[46] J. G. Lee, K. W. Hur and Y. W. Ko, “Minimizing Scheduling Delay for Multimedia in Xen Hypervisor,” Communications in Computer and Information Science, Vol. 199, 2011, pp. 96-108. doi:10.1007/978-3-642-23312-8_12

[47] J. Zhang, K. Chen, B. J. Zuo, R. H. Ma, Y. Z. Dong and H. B. Guan, “Performance Analysis towards a KVM-Based Embedded Real-Time Virtualization Architecture,” Proceedings of the 5th International Conference on Computer Sciences and Convergence Information Technology (ICCIT), Seoul, 30 November-2 December 2010, pp. 421-426. doi:10.1109/ICCIT.2010.5711095

[48] B. J. Zuo, K. Chen, A. Liang, H. B. Guan, J. Zhang, R. H. Ma and H. B. Yang, “Performance Tuning towards a KVM-Based Low Latency Virtualization System,” Proceedings of the 2nd International Conference on Informa-

[53] S. Kato, R. (Raj) Rajkumar and Y. Ishikawa, “A Loadable Real-Time Scheduler Framework for Multicore Platforms,” Proceedings of the 6th IEEE International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Macau, 23-25 August 2010.

[54] B. Lin and P. A. Dinda, “VSched: Mixing Batch and Interactive Virtual Machines Using Periodic Real-time Scheduling,” Proceedings of the ACM/IEEE SC 2005 Conference Supercomputing, Seattle, 12-18 November 2005, p. 8. doi:10.1109/SC.2005.80

[55] M. Asberg, N. Forsberg, T. Nolte and S. Kato, “Towards Real-Time Scheduling of Virtual Machines without Kernel Modifications,” Proceedings of the 2011 IEEE 16th Conference on Emerging Technologies & Factory Automation (ETFA), Toulouse, 5-9 September 2011, pp. 1-4. doi:10.1109/ETFA.2011.6059185

[56] M. Asberg, T. Nolte and S. Kato, “Towards Hierarchical Scheduling in Linux/Multi-Core Platform,” Proceedings of the 2010 IEEE Conference on Emerging Technologies and Factory Automation (ETFA), Bilbao, 13-16 September 2010, pp. 1-4. doi:10.1109/ETFA.2010.5640999

[57] A. Acharya, J. Buford and V. Krishnaswamy, “Phone Virtualization Using a Microkernel Hypervisor,” Proceedings of the 2009 IEEE International Conference on Internet Multimedia Services Architecture and Applications (IMSAA), Bangalore, 9-11 December 2009, pp. 1-6. doi:10.1109/IMSAA.2009.5439460

[58] A. Iqbal, N. Sadeque and R. I. Mutia, “An Overview of Microkernel, Hypervisor and Microvisor Virtualization Approaches for Embedded Systems,” Technical Report, Lund University, Lund, 2009.

[59] G. Heiser, “Virtualizing Embedded Systems: Why Bother?” Proceedings of the 2011 48th ACM/EDAC/IEEE Conference on Design Automation, San Diego, 5-9 June 2011, pp. 901-905.

[60] F. Bruns, S. Traboulsi, D. Szczesny, M. E. Gonzalez, Y. Xu and A. Bilgic, “An Evaluation of Microkernel-Based


Virtualization for Embedded Real-Time Systems,” Proceedings of the 22nd Euromicro Conference on Real-Time Systems (ECRTS), Dublin, 6-9 July 2010, pp. 57-65. doi:10.1109/ECRTS.2010.28

[61] A. Lackorzynski, J. Danisevskis, J. Nordholz and M. Peter, “Real-Time Performance of L4Linux,” Proceedings of the 13th Real-Time Linux Workshop, Prague, 20-22 October 2011.

[62] R. Kaiser, “Alternatives for Scheduling Virtual Machines in Real-Time Embedded Systems,” Proceedings of the 1st Workshop on Isolation and Integration in Embedded Systems, Glasgow, 1 April 2008, pp. 5-10. doi:10.1145/1435458.1435460

[63] J. Yang, H. Kim, S. Park, C. K. Hong and I. Shin, “Implementation of Compositional Scheduling Framework on Virtualization,” SIGBED Review, Vol. 8, No. 1, 2011, pp. 30-37. doi:10.1145/1967021.1967025

[64] U. Steinberg and B. Kauer, “NOVA: A Microhypervisor-Based Secure Virtualization Architecture,” Proceedings of the 5th European Conference on Computer Systems, Paris, 13-16 April 2010, pp. 209-222. doi:10.1145/1755913.1755935

[65] K. Gudeth, M. Pirretti, K. Hoeper and R. Buskey, “Delivering Secure Applications on Commercial Mobile Devices: The Case for Bare Metal Hypervisors,” Proceedings of the 1st ACM Workshop on Security and Privacy in Smartphones and Mobile Devices, Chicago, 17-21 October 2011, pp. 33-38. doi:10.1145/2046614.2046622

[66] VMware MVP (Mobile Virtualization Platform). http://www.vmware.com/products/mobile

[67] F. Armand and M. Gien, “A Practical Look at Micro-Kernels and Virtual Machine Monitors,” Proceedings of the 6th IEEE Consumer Communications and Networking Conference, Las Vegas, 10-13 January 2009, pp. 1-7. doi:10.1109/CCNC.2009.4784874

[68] Red Bend VLX for Mobile Handsets. http://www.virtuallogix.com/products/vlx-for-mobile-handsets.html

[69] J.-Y. Hwang, S.-B. Suh, S.-K. Heo, C.-J. Park, J.-M. Ryu, S.-Y. Park and C.-R. Kim, “Xen on ARM: System Virtualization Using Xen Hypervisor for ARM-Based Secure Mobile Phones,” Proceedings of the 5th IEEE Consumer Communications and Networking Conference, Las Vegas, 10-12 January 2008, pp. 257-261. doi:10.1109/ccnc08.2007.64

[70] W. Kanda, Y. Yumura, Y. Kinebuchi, K. Makijima and T. Nakajima, “SPUMONE: Lightweight CPU Virtualization Layer for Embedded Systems,” Proceedings of the IEEE/IFIP International Conference on Embedded and Ubiquitous Computing, Shanghai, 17-20 December 2008, pp. 144-151. doi:10.1109/EUC.2008.157

[71] H. Mitake, Y. Kinebuchi, A. Courbot and T. Nakajima, “Coexisting Real-Time OS and General Purpose OS on an Embedded Virtualization Layer for a Multicore Processor,” Proceedings of the 2011 ACM Symposium on Applied Computing, Taichung, 21-24 March 2011, pp. 629-630. doi:10.1145/1982185.1982322

[72] H. Tadokoro, K. Kourai and S. Chiba, “A Secure System-wide Process Scheduler across Virtual Machines,” Proceedings of the 16th Pacific Rim International Symposium on Dependable Computing (PRDC), Tokyo, 13-15 December 2010, pp. 27-36. doi:10.1109/PRDC.2010.34

[73] Y. Kinebuchi, M. Sugaya, S. Oikawa and T. Nakajima, “Task Grain Scheduling for Hypervisor-Based Embedded System,” Proceedings of the 10th IEEE International Conference on High Performance Computing and Communications, Dalian, 25-27 September 2008, pp. 190-197. doi:10.1109/HPCC.2008.144

[74] A. Aalto, “Dynamic Management of Multiple Operating Systems in an Embedded Multi-Core Environment,” Master’s Thesis, Aalto University, Finland, 2010.

[75] T.-H. Lin, Y. Kinebuchi, H. Shimada, H. Mitake, C.-Y. Lee and T. Nakajima, “Hardware-Assisted Reliability Enhancement for Embedded Multi-Core Virtualization Design,” Proceedings of the 17th International Conference on Embedded and Real-Time Computing Systems and Applications of the IEEE RTCSA, Toyama, 28-31 August 2011, pp. 241-249. doi:10.1109/RTCSA.2011.24

[76] N. Li, Y. Kinebuchi and T. Nakajima, “Enhancing Security of Embedded Linux on a Multi-Core Processor,” Proceedings of the 17th International Conference on Embedded and Real-Time Computing Systems and Applications of the IEEE RTCSA, Toyama, 28-31 August 2011, pp. 117-121. doi:10.1109/RTCSA.2011.36

[77] W. Kanda, Y. Murata and T. Nakajima, “SIGMA System: A Multi-OS Environment for Embedded Systems,” Journal of Signal Processing Systems, Vol. 59, No. 1, 2010, pp. 33-43. doi:10.1007/s11265-008-0272-9

[78] M. Ito and S. Oikawa, “Mesovirtualization: Lightweight Virtualization Technique for Embedded Systems,” Lecture Notes in Computer Science, Vol. 4761, 2007, pp. 496-505.

[79] M. Ito and S. Oikawa, “Making a Virtual Machine Monitor Interruptible,” Journal of Information Processing, Vol. 19, 2011, pp. 411-420.

[80] S.-H. Yoo, Y. X. Liu, C.-H. Hong, C. Yoo and Y. G. Zhang, “MobiVMM: A Virtual Machine Monitor for Mobile Phones,” Proceedings of the 1st Workshop on Virtualization in Mobile Computing, Breckenridge, 17 June 2008, pp. 1-5.

[81] S. Yoo, M. Park and C. Yoo, “A Step to Support Real-Time in Virtual Machine,” Proceedings of the 6th International Conference on Consumer Communications and Networking of the IEEE CCNC, Las Vegas, 10-13 January 2009, pp. 1-7. doi:10.1109/CCNC.2009.4784876

[82] Y. Li, M. Danish and R. West, “Quest-V: A Virtualized Multikernel for High-Confidence Systems,” Technical Report, Boston University, Boston, 2011.

[83] M. Danish, Y. Li and R. West, “Virtual-CPU Scheduling in the Quest Operating System,” Proceedings of the 17th International Conference on Real-Time and Embedded Technology and Applications Symposium of the IEEE RTAS, Chicago, 11-14 April 2011, pp. 169-179. doi:10.1109/RTAS.2011.24

[84] P.-H. Kamp and R. N. M. Watson, “Jails: Confining the Omnipotent Root,” Proceedings of the 2nd International System Administration and Networking Conference, Maastricht, 22-25 May 2000.

[85] OpenVZ Linux Containers. http://wiki.openvz.org

[86] S. Soltesz, H. Pötzl, M. E. Fiuczynski, A. C. Bavier and L. L. Peterson, “Container-Based Operating System Virtualization: A Scalable, High-Performance Alternative to Hypervisors,” Proceedings of EuroSys 2007, Lisbon, 21-23 March 2007, pp. 275-287.

[87] Parallels Virtuozzo Containers. http://www.parallels.com/products/pvc

[88] MontaVista Automotive Technology Platform. http://mvista.com/sol_detail_ivi.php

[89] J. Andrus, C. Dall, A. V. Hof, O. Laadan and J. Nieh, “Cells: A Virtual Mobile Smartphone Architecture,” Columbia University Computer Science Technical Reports, Columbia University, Columbia, 2011, pp. 173-187.

[90] C. Augier, “Real-Time Scheduling in a Virtual Machine Environment,” Proceedings of Junior Researcher Workshop on Real-Time Computing (JRWRTC), Nancy, 29-30 March 2007.

Systems Conference, Orange County, 22 February 2012. doi:10.1145/2155555.2155566

[97] H. Tadokoro, K. Kourai and S. Chiba, “A Secure System-wide Process Scheduler across Virtual Machines,” Proceedings of the 16th Pacific Rim International Symposium on Dependable Computing of the IEEE PRDC, Tokyo, 13-15 December 2010, pp. 27-36. doi:10.1109/PRDC.2010.34

[98] V. Uhlig, J. LeVasseur, E. Skoglund and U. Dannowski, “Towards Scalable Multiprocessor Virtual Machines,” Proceedings of the 3rd Conference on Virtual Machine Research and Technology Symposium, San Jose, 6-12 May 2004, pp. 43-56.

[99] T. Friebel and S. Biemueller, “How to Deal with Lock Holder Preemption,” Proceedings of the Xen Summit, Boston, 23-24 June 2008.

[100] W. Jiang, Y. S. Zhou, Y. Cui, W. Feng, Y. Chen, Y. C. Shi and Q. B. Wu, “CFS Optimizations to KVM Threads on Multi-Core Environment,” Proceedings of the 15th International Conference on Parallel and Distributed Systems of the IEEE ICPADS, Shenzhen, 8-11 December 2009, pp. 348-354. doi:10.1109/ICPADS.2009.83

[91] D. Kim, H. Kim, M. Jeon, E. Seo and J. Lee, “Guest-Aware Priority-Based Virtual Machine Scheduling for