GESTURE CONTROLLED VIDEO PLAYBACK

G. Anil Kumar1, B. Sathwik2

1Asst. Professor, 2UG Scholar

Department of Electronics and Communication Engineering, Anurag Group of Institutions

INTERNATIONAL JOURNAL OF CURRENT ENGINEERING AND SCIENTIFIC RESEARCH (IJCESR)

ISSN (PRINT): 2393-8374, (ONLINE): 2394-0697, VOLUME-5, ISSUE-4, 2018

Abstract

Recently, human-computer interaction (HCI) has become increasingly influential in both research and daily life. Human hand gesture recognition (HGR) is one of the main topics in computer science as an effective approach to HCI. HGR can significantly facilitate applications in many fields, such as automobiles (driving assistance and safety), smart houses, wearable devices and virtual reality. In these application scenarios, where haptic controls and touch screens may be physically or mentally limiting, the touchless input modality of hand gestures is more convenient and attractive.

This project describes how to control video playback using gestures. It is based on a combination of Arduino and Python. Instead of using a keyboard, mouse or joystick, we can use hand gestures to control certain functions of a computer, such as playing or pausing a video, increasing and decreasing the volume, and moving the video forward and backward. The idea behind the project is simple: it uses two ultrasonic sensors (HC-SR04)[1] with an Arduino. We place the two sensors on top of a laptop screen and calculate the distance between the hand and each sensor. The Arduino sends this information through the serial port to a Python program running on the computer, which reads it and performs the corresponding action.

Key Words: Gestures, HCI, HGR, haptic, Ultrasonic

I.INTRODUCTION

Overview of the project:

Gesture recognition is an effortless aspect of interaction among humans, but human-computer interaction remains based upon signals and behaviors which are not natural for us. Although the keyboard and mouse are undeniable improvements over the tabular switch and the punch card, the development of more natural and intuitive interfaces that do not require humans to acquire specialized and esoteric training remains an elusive goal. To facilitate a more fluid interface, we would prefer to enable machines to acquire human-like skills, such as recognizing faces, gestures and speech, rather than continuing to demand that humans acquire machine-like skills.

This kind of gesture-based video playback can avoid the problems caused by keyboards and mice. Besides being very easy to use, it relies on simple gestures to control the video. People therefore do not have to learn machine-like skills, which are a burden most of the time; they only need to remember a set of gestures to control video playback.

Objectives:

1. To minimize the use of the keyboard and mouse with a computer.
2. To use ultrasonic sensors to recognize gestures.
3. To integrate gesture recognition features into any computer at low cost.
4. To help in the development of touchless displays.

Background:

Massive improvement in computer development can be clearly seen, including in the interaction between human and computer itself.

Human computer interaction (HCI) is defined as Working:

the relation between the human and computer First the arduino will be energized using

(machine), represented with the emerging of 5v power supply which is taken from the laptop

computer itself. Vital endeavor of hand gesture using serial port. Whenever anyone puts their

recognition system is to create a natural hands in front of the ultrasonic sensor, the

interaction between human and computer when arduino calculates the distance between the

controlling and conveying meaningful hand and the sensor. The calculation of the

information. distance is carried out by echo and trigger pin.

Connectivity & input: First the trigger pin sends a burst of 8 ultrasonic

For giving the gestures to the computer signals. Whenever an obstacle occur in front of

we are using two ultrasonic sensors. The the sensor, the signal reflects back. This

ultrasonic sensors are attached to the arduino reflected signal is taken by the echo pin. Then

board to receive the gestures. Different types of the time for the signal to get reflected is

gestures are characterized based on the distance calculated by the sensor. Then this time is

between the hand and sensors. This calculated converted into distance. Based on the distance

distance is sent to computer to control the the arduino processes which controlling action

video. It is designed for low power consumption to be performed. This controlling action is sent

as it is uses power from laptop itself. This to the computer using serial port. That action is

module can be easily fitted with any computer performed by python in laptop.

and for any module for extension.

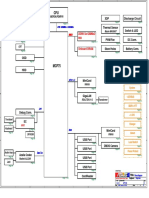

Purpose: Circuit diagram:

The purpose of our research is to

provide simple gesture recognition system but

with various features so that the user can easily

use this for controlling the video and audio

purposes. This simple architecture is also useful

for interfacing with other features added to it.

Students can build their own gesture recognition

system with low cost and use them as platform

Fig. 2. Circuit diagram

for experiments in several projects. The main

purpose of this project is to provide a better Circuit description:

gesture control system using ultrasonic sensors In this circuit we have used two

and a efficient system using digital technology. ultrasonic sensors (HC-SR 04) to recognize the

There is a need to eliminate the traditional gestures. Gestures using ultrasonic sensors

Human Computer Interaction(HCI) which uses using Doppler shift.[2] In this arduino based

keyboards and mouse as they are not suitable in project we have used distance based gestures[3]

real life situations. Using the gesture control so we do not need any cameras to recognize the

technology our interaction with computer can gestures.

be more improvised and simple.

Circuit of this project is very simple

II.PROPOSED METHOD which contains Arduino, and two ultrasonic

sensors. Arduino controls the complete

Blockdiagram:

processes like converting the time into distance,

what action to be done based on the distance

and sends it to the serial monitor. Ultrasonic

sensors are connected to the digital pins 2, 3,4,

5 and the GND pins of two sensors are

connected to two ground pins on the arduino

uno board. One VCC pin of sensor is connected

to 5v pin on arduino board and another VCC

pin of the sensor is connected to IORESET pin.

This pin is used because extra 5v pin is not

Fig. 1. Block Diagram Of The Project there in arduino. The IORESET pin gives the

ISSN (PRINT): 2393-8374, (ONLINE): 2394-0697, VOLUME-5, ISSUE-4, 2018

305

INTERNATIONAL JOURNAL OF CURRENT ENGINEERING AND SCIENTIFIC RESEARCH (IJCESR)

supply voltage based on the difference voltage B. Prototype of the project:

between 5v pin and 3.3v pin.

Working:

In this project, a 5V supply to the

Ultrasonic sensors and arduino. When the

circuit is energized, the ultrasonic sensors

continuously calculates the distance between

the sensor and the hand[4]. Initially, we have

given five gestures to control the video.

First hand gesture: It allows us to 'Play/Pause'

VLC by placing the two hands in front of the

right and left Ultrasonic Sensor at a particular

far distance. Fig. 3. Prototype Of The Project (Front View)

Second gesture: It allows us to 'Rewind' the

video by placing a hand in front of the right

sensor at a particular far distance and get near

to the sensor.

Third gesture: It allows us to 'Forward' the

video by placing a hand in front of the right

sensor at a particular far distance. And get far

way from the sensor

Forth gesture: It allows us to 'Increase Volume'

of the video by placing a hand in front of the

left sensor at a particular far distance and

moving away from the Sensor. Fig. 4. Prototype Of The Project (Rear View)

Fifth gesture: It allows us to 'Decrease Volume' C. Advantages:

of the video by placing a hand in front of the 1. Low cost.

left sensor at a particular far distance and get 2. The given system is handy and portable,

near to the sensor. and thus can be easily carried from one

place to another.

III.RESULTS & DISCUSSIONS 3. The circuitry is not that complicated and

A. Result and simulation : thus can be easily troubleshooted.

The project is being tested and is 4. There are no moving parts, so device

working properly. It is tested on two different wear is not an issue.

laptops and of different brands and is properly 5. There is no direct contact between the

working. It has lots of application like we can user and the device, so there is no

use this project for video controlling not only in hygiene problem.

laptops but also we can integrate it to many 6. Less cost for making a gesture control

displays. Here we can easily control the computer.

playback of the video without using keyboard 7. This system would be designed to be

and mouse. In this project we have used very those that seem natural to users, thereby

less component so it is cost effective and it is decreasing the learning time required.

less complicated than a simple micro controller 8. They will reduce our need for devices

based project. like mouse, keys, remote control or

keys for interaction with the electronic

devices.
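The two Working descriptions in Section II (echo time converted to distance, then a distance-based choice among the five gestures) can be sketched in Python as follows. This is only an illustrative sketch, not the authors' Arduino code: the paper gives no numeric thresholds, so `FAR_MIN_CM`, `FAR_MAX_CM` and `STEP_CM`, as well as the function names, are assumptions chosen for the example. The conversion halves the round-trip time because the sound travels to the hand and back.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # roughly 343 m/s in air at about 20 C

# Assumed values, not taken from the paper: the band (in cm) that counts as
# the "particular far distance", and the minimum change treated as movement.
FAR_MIN_CM = 40.0
FAR_MAX_CM = 50.0
STEP_CM = 5.0


def echo_to_cm(echo_us):
    """Convert a round-trip echo time (microseconds) to a one-way distance (cm)."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def in_far_band(distance_cm):
    """True if a hand is detected inside the assumed far band."""
    return distance_cm is not None and FAR_MIN_CM <= distance_cm <= FAR_MAX_CM


def classify(start, end):
    """Pick a gesture from two (left_cm, right_cm) readings taken a moment apart.

    None in a reading means that sensor saw no hand.
    Returns the gesture name used in the paper, or None if nothing matches.
    """
    left_start, right_start = start
    left_end, right_end = end

    # Both hands held at the far distance: first gesture.
    if in_far_band(left_start) and in_far_band(right_start):
        return "Play/Pause"

    # Right sensor: moving nearer rewinds, moving away forwards.
    if in_far_band(right_start) and right_end is not None:
        if right_end <= right_start - STEP_CM:
            return "Rewind"
        if right_end >= right_start + STEP_CM:
            return "Forward"

    # Left sensor: moving away raises the volume, moving nearer lowers it.
    if in_far_band(left_start) and left_end is not None:
        if left_end >= left_start + STEP_CM:
            return "Increase Volume"
        if left_end <= left_start - STEP_CM:
            return "Decrease Volume"

    return None


if __name__ == "__main__":
    print(echo_to_cm(2624))                       # one-way distance in cm
    print(classify((None, 45.0), (None, 30.0)))   # hand approaching the right sensor
```

In a real build the thresholds would need tuning against the sensor placement on the laptop lid, which is consistent with the limited and accuracy-sensitive working distance the paper itself reports.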


D. Disadvantages:

1. The distance between the ultrasonic sensors and the user is limited.
2. The distance between the sensor and the user must be accurate.

E. Applications:

1. This project can be used for gesture-controlled gaming in the gaming industry.
2. This system can be used for making touchless displays at low cost.
3. This project can be implemented in the medical field for making gesture-controlled displays.

IV.CONCLUSION & FUTURE SCOPE

A. Conclusion:

The presented approach shows that it is possible to use inexpensive ultrasonic range-finders for gesture recognition to control video. This is important because these sensors are easily available and no additional ultrasonic system needs to be constructed. Unfortunately, the set of gestures which can be recognized in this way is very limited. Nevertheless, they are useful for extending the communication channel between a human and a machine. In this context the obtained results are preliminary and show that recognition of some classes of gestures is possible using very simple range-finders.

B. Future development:

1. This project can be further implemented on platforms like AVR, ARM microcontrollers, etc.
2. We can add many video control features just by modifying the Python code.
3. We can integrate this type of module into many applications, like browsers and designing and editing applications.

Knowledge is ever expanding, and so are the problems which mankind strives to solve.

REFERENCES:

1. Sidhant Gupta, Dan Morris, Shwetak N. Patel, Desney Tan, “SoundWave: Using the Doppler Effect to Sense Gestures,” ACM, 2012.
2. Kaustubh Kalgaonkar, Bhiksha Raj, “One-handed gesture recognition using ultrasonic sonar,” IEEE, 2009.
3. S. P. Tarzia, R. P. Dick, P. A. Dinda, and G. Memik, “Sonar-based measurement of user presence and attention,” in Proceedings of the 11th international conference on Ubiquitous computing. ACM, 2009.
4. H. Watanabe, T. Terada, and M. Tsukamoto, “Ultrasound-based movement sensing, gesture-, and context-recognition,” in Proceedings of the 2013 International Symposium on Wearable Computers. ACM, 2013.
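As a rough illustration of the Python side described in the abstract, and of the remark under Future development that features can be added by modifying the Python code, the sketch below maps command words received over the serial port to key presses. The paper does not list its command words or key bindings, so the `KEYMAP` entries and the `dispatch` function are hypothetical. The key-press function is passed in as a parameter so the example runs without any hardware or third-party library.

```python
# Hypothetical command words and key combinations; neither is given in the paper.
KEYMAP = {
    "Play/Pause": ("space",),
    "Rewind": ("ctrl", "left"),
    "Forward": ("ctrl", "right"),
    "Vup": ("ctrl", "up"),
    "Vdown": ("ctrl", "down"),
}


def dispatch(lines, press):
    """Call press(*keys) for every recognised command line.

    lines: iterable of str or bytes, one command per line (for example, lines
           read from the serial port).
    press: callable that performs the key combination it is given.
    Returns the list of commands that were handled, in order.
    """
    handled = []
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("ascii", errors="ignore")
        command = raw.strip()
        keys = KEYMAP.get(command)
        if keys is None:
            continue  # ignore noise or unknown commands
        press(*keys)
        handled.append(command)
    return handled


if __name__ == "__main__":
    # Stand-in for real key presses: just print what would be sent.
    dispatch([b"Play/Pause\r\n", "Vup\n"], lambda *keys: print("press", keys))
```

Adding a new video control then amounts to adding one entry to `KEYMAP`. In an actual setup, the lines would likely come from a serial-port library such as pySerial and `press` from a keyboard-automation library such as PyAutoGUI; both choices are assumptions here rather than details confirmed by the paper.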

