Markov Decision Processes

• The Markov Property

• The Markov Decision Process

• Partially Observable MDPs

The Premise

Much of the time, statistics are treated as if they fixed the outcome in advance, for example:

79.8% of Stanford students graduate in 4 years. (www.collegeresults.org, 2014)

It’s very tempting to read this sort of statistic as if graduating from Stanford in four years is a randomly determined event. In fact, it’s a combination of two things:

• random chance, which does play a role
• the actions of the student

Situations like this, in which outcomes are a combination of random chance and active choices, can still be analyzed and optimized.

You can’t optimize the part that happens randomly, but you can still find the best actions to take: in other words, you can optimize your actions to find the best outcome in spite of the random element.

One way to do this is by using a Markov Decision Process.

The Markov Property

Markov Decision Processes (MDPs) are stochastic processes that exhibit the Markov Property.

• Recall that stochastic processes, in unit 2, were processes that involve randomness. The examples in unit 2 were not influenced by any active choices – everything was random. This is why they could be analyzed without using MDPs.

• The Markov Property refers to situations where the probabilities of different outcomes do not depend on past states: the current state is all you need to know. This property is sometimes called “memorylessness”.
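Stated as a formula, the property might be written as follows. The symbols are notation assumed for this sketch, not taken from the slides: S_t is the state at time step t, A_t is the action chosen at that step, and s' is any possible next state.

```latex
P(S_{t+1} = s' \mid S_t, A_t, S_{t-1}, A_{t-1}, \dots, S_0, A_0)
  = P(S_{t+1} = s' \mid S_t, A_t)
```

In words: once the current state and action are known, the earlier history adds no further information about what happens next.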

For example, if an unmanned aircraft is trying to remain level, all it needs to know is its current state, which might include how level it currently is and what influences (momentum, wind, gravity) are acting upon its state of level-ness. This analysis displays the Markov Property.

In contrast, if an unmanned aircraft is trying to figure out how long until its battery dies, it would need to know not only how much charge it has now, but also how fast that charge has declined from a past state. Therefore, this does not display the Markov Property.

Practice Problem 1

1. For each scenario:

• tell whether the outcome is influenced by chance only or by a combination of chance and action
• tell whether the outcomes’ probabilities depend only on the present or partially on the past as well
• argue whether this scenario can or cannot be analyzed with an MDP

a. A Stanford freshman wants to graduate in 4 years.
b. An unmanned vehicle wants to avoid collision.
c. A gambler wants to win at roulette.
d. A truck driver wants to get from his current location to Los Angeles.

Aspects of an MDP

Some important aspects of a Markov Decision Process:

State: a set of existing or theoretical conditions, like position, color, velocity, environment, amount of resources, etc.

One of the challenges in designing an MDP is to figure out what all the possible states are. The current state is only one of a large set of possible states, some more desirable than others.

Even though an object only has one current state, it will probably end up in a different state at some point.

Action: just as there is a large set of possible states, there is also a large set of possible actions that might be taken.

The current state often influences which actions are available. For example, if you are driving a car, your options for turning left or right are often restricted by what lane you are in.

A Probability Distribution is used to determine the transition from the current state to the next state.

The probability of one state (flying sideways) leading to another (crashing horribly) is influenced by both action and chance. The same state (flying sideways) may also lead to other states (recovering, turning, landing safely) with different probabilities, which also depend on both actions and chance.

Together, these probabilities make up the probability distribution, which is often stored in a matrix.
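As a rough illustration of how a probability distribution determines the next state, here is a minimal Python sketch (the course itself uses Julia). The action names and every probability below are hypothetical, invented only for this example; the slides give no numbers for the aircraft scenario.

```python
import random

# Hypothetical probabilities for illustration only; the slides give no numbers.
# Keys are (current state, action); values map each next state to its probability.
TRANSITIONS = {
    ("flying sideways", "level the wings"): {
        "recovering": 0.70,
        "turning": 0.15,
        "landing safely": 0.05,
        "crashing": 0.10,
    },
    ("flying sideways", "do nothing"): {
        "recovering": 0.10,
        "turning": 0.30,
        "landing safely": 0.00,
        "crashing": 0.60,
    },
}


def next_state(state, action, rng=random):
    """Draw the next state from the distribution for (state, action)."""
    distribution = TRANSITIONS[(state, action)]
    states = list(distribution)
    weights = list(distribution.values())
    # choices() picks one item with probability proportional to its weight.
    return rng.choices(states, weights=weights, k=1)[0]


if __name__ == "__main__":
    print(next_state("flying sideways", "level the wings"))
```

The same starting state appears under two different actions, so the outcome depends on both the action chosen and on chance.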

The last aspect of an MDP is an artificially generated reward. This reward is calculated based on the value of the next state compared to the current state. More favorable states generate better rewards.

For example, if a plane is flying sideways, the reward for recovering would be much higher than the reward for crashing horribly.
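One simple way to turn "the value of the next state compared to the current state" into a number is to subtract the two values. The Python sketch below assumes that reading; the scores in STATE_VALUE are hypothetical and chosen only so that crashing is far worse than recovering.

```python
# Hypothetical desirability scores; higher means a more favorable state.
STATE_VALUE = {
    "flying sideways": 0,
    "recovering": 5,
    "crashing": -100,
}


def reward(current_state, next_state):
    """Reward = how much better (or worse) the next state is than the current one."""
    return STATE_VALUE[next_state] - STATE_VALUE[current_state]
```

With these scores, moving from "flying sideways" to "recovering" earns a positive reward, while moving to "crashing" earns a large negative one.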

The Markov Decision Process

Once the states, actions, probability distribution, and rewards have been determined, the last task is to run the process. A time step is determined and the state is monitored at each time step.

In a simulation:

1. The initial state is chosen randomly from the set of possible states.
2. Based on that state, an action is chosen.
3. The next state is determined based on the probability distribution for the given state and the action chosen.
4. A reward is granted for the next state.
5. The entire process is repeated from step 2.
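The five steps above can be sketched as a short loop. This is a minimal Python illustration, not the course's Julia code. The toy system (a machine that is either "working" or "broken", with actions "run" and "repair"), its probabilities, and its rewards are all hypothetical.

```python
import random

STATES = ["working", "broken"]

# Hypothetical toy MDP, not taken from the slides.
# TRANSITIONS[state][action] maps each next state to its probability.
TRANSITIONS = {
    "working": {"run": {"working": 0.9, "broken": 0.1},
                "repair": {"working": 1.0, "broken": 0.0}},
    "broken": {"run": {"working": 0.0, "broken": 1.0},
               "repair": {"working": 0.8, "broken": 0.2}},
}
REWARDS = {"working": 1, "broken": 0}  # reward granted for landing in each state


def choose_action(state):
    """A fixed rule for step 2: repair when broken, otherwise keep running."""
    return "repair" if state == "broken" else "run"


def simulate(steps, rng):
    state = rng.choice(STATES)               # 1. random initial state
    total_reward = 0
    history = [state]
    for _ in range(steps):
        action = choose_action(state)        # 2. choose an action for this state
        dist = TRANSITIONS[state][action]
        # 3. draw the next state from the distribution for (state, action)
        state = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        total_reward += REWARDS[state]       # 4. reward for the next state
        history.append(state)                # 5. repeat from step 2
    return total_reward, history


if __name__ == "__main__":
    total, history = simulate(steps=10, rng=random.Random(1))
    print(total, history)
```

Passing in a seeded random.Random makes a run repeatable, which helps when comparing several simulations.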

Practice Problem 2

2. Suppose a system has two states, A (good) and B (bad). There are also two actions, x and y.

From state A, x leads to A (60%) or B (40%); y leads to A (50%) or B (50%).

From state B, x leads to A (30%) or B (70%); y leads to A (80%) or B (20%).

a) Run a simulation starting in state A and repeating action x ten times. Use rand() to generate the random numbers that decide each transition. For each time step you are in state A, give yourself 1 point.
b) Run the same simulation using action y ten times.
c) Run the same simulation alternating x and y.

Practice Problem 3

Write two functions in Julia, actionx(k) and actiony(k), that take an input k (the state A or B) and return an output “A” or “B” based on the probabilities from the preceding problem:

From state A, x leads to A (60%) or B (40%); y leads to A (50%) or B (50%). From state B, x leads to A (30%) or B (70%); y leads to A (80%) or B (20%).

Use your program in a simulation to find the total reward value of repeating x 100 times or repeating y 100 times. Use multiple simulations. Which is better?

Probability Distribution Matrices

In the previous problem, the probabilities for action x were as follows: From state A, x leads to A (60%) or B (40%); from state B, x leads to A (30%) or B (70%).

This information can be summarized more briefly in a matrix:

                    next state
                    A     B
current state   A   .6    .4
                B   .3    .7

Matrices enable neat probability summaries even for very large sets of possible events or “event spaces”.
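In code, a matrix like this can be stored as a list of rows. The Python sketch below uses the probabilities for action x given above; the function names transition_prob and is_valid are hypothetical helpers introduced for this illustration.

```python
STATES = ["A", "B"]

# Action x from the slides: rows are the current state, columns the next state.
P_X = [
    [0.6, 0.4],  # from A: to A, to B
    [0.3, 0.7],  # from B: to A, to B
]


def transition_prob(matrix, current, nxt):
    """Look up the probability of moving from `current` to `nxt`."""
    return matrix[STATES.index(current)][STATES.index(nxt)]


def is_valid(matrix, tol=1e-9):
    """Check that every entry lies in [0, 1] and that each row sums to 1."""
    return all(
        all(0.0 <= p <= 1.0 for p in row) and abs(sum(row) - 1.0) < tol
        for row in matrix
    )
```

For instance, transition_prob(P_X, "B", "A") reads the entry in row B, column A, which is the 30% chance that x moves the system from B to A.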

Practice Problem 4

Given this probability distribution for action k:

      A     B     C
A    .1    .2    .7
B    .8     0    .2
C     0    .4    .6

tell the probability that action k will move from state…

a) A to B   b) A to C   c) C to A
d) C to C   e) B to C   f) B to A

g) Why do the rows have to add up to 1, but the columns not?

Intelligent Systems

In practice problem 2, the action taken did not depend on the state: x was chosen or y was chosen regardless of the current state.

However, given that A was the desired state, the most intelligent course of action would be the one most likely to return A, which was different depending on whether the current state was A or B.

A system that monitors its own state and chooses the best action based on its state is said to display intelligence.
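The idea of picking the action most likely to return the desired state can be sketched as a lookup over the action matrices. The Python example below uses two hypothetical actions, "p" and "q", with made-up probabilities, so it does not give away the practice problems. It looks only one step ahead, which is a simplification.

```python
# Hypothetical matrices for two actions, "p" and "q", over states A and B.
# Rows: current state (A, B). Columns: next state (A, B).
ACTIONS = {
    "p": [[0.9, 0.1], [0.2, 0.8]],
    "q": [[0.7, 0.3], [0.6, 0.4]],
}
STATES = ["A", "B"]


def best_action(current, desired="A"):
    """Pick the action with the highest probability of reaching `desired`."""
    row = STATES.index(current)
    col = STATES.index(desired)
    return max(ACTIONS, key=lambda name: ACTIONS[name][row][col])
```

With these numbers the rule picks "p" in state A (0.9 versus 0.7) and "q" in state B (0.6 versus 0.2), so the chosen action changes with the state.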

Practice Problem 5

Here are the matrices for actions x and y again (rows: current state A, B; columns: next state A, B):

action x:   .6   .4        action y:   .5   .5
            .3   .7                    .8   .2

a. In state A, what action is the best?
b. In state B, what action is the best?
c. Write a program in Julia that chooses the best action based on the current state. Run several 100-time-step simulations using your intelligent program.

Applications of MDPs

Most systems, of course, are far more complex than the two-state, two-action example given here. As you can imagine, running simulations for every possible action in every possible state requires some powerful computing.

MDPs are used extensively in robotics, automated systems, manufacturing, and economics, among other fields.

POMDPs

A variation on the traditional MDP is a Partially Observable Markov Decision Process (POMDP, pronounced “Pom D.P.”). In these scenarios, the system does not know exactly what state it is currently in, and therefore has to guess.

This is like the difference between thinking,

“I’m going in the right direction”

and

“I think I’m going in the right direction”.

POMDPs have the same elements as a traditional MDP, plus two more.

The first is the belief state, which is the state the system believes it is in. The belief state is a probability distribution.

For example,

“I think I’m going in the right direction”

might really mean:

• 80% chance this is the right direction
• 15% mostly-right direction
• 5% completely wrong direction

This is a probability distribution!

The second additional element is a set of observations. After the system takes an action based on its belief state, it observes what happens next and updates its belief state accordingly.

For example, if you took a right turn and didn’t see the freeway you expected, you would then change your probability distribution for whether you were going in the right direction.
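The slides do not say how the belief state is updated. One standard choice is Bayes' rule, sketched below in Python. The starting belief is the 80% / 15% / 5% example from the slides; the chances of not seeing the freeway in each state are hypothetical. As a simplification, the sketch also ignores any change in the true state caused by the action itself.

```python
def update_belief(belief, likelihood):
    """Bayes' rule: posterior is proportional to prior * P(observation | state)."""
    unnormalized = {s: belief[s] * likelihood[s] for s in belief}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation is impossible under the current belief")
    # Divide by the total so the updated belief sums to 1 again.
    return {s: p / total for s, p in unnormalized.items()}


# Prior belief from the slides' example.
belief = {"right": 0.80, "mostly right": 0.15, "wrong": 0.05}

# Hypothetical chance of NOT seeing the expected freeway in each state.
no_freeway = {"right": 0.1, "mostly right": 0.5, "wrong": 0.9}

posterior = update_belief(belief, no_freeway)
```

Because missing the freeway is assumed to be unlikely when the direction is right, the updated belief puts less weight on "right" and more on the other two states.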

Otherwise, a POMDP works much like a traditional MDP:

• An action is chosen based on the belief state (traditional MDP: based on the current state).
• The next state is reached and the reward granted.
• An observation is made and the belief state updated (traditional MDP: this step is absent).

Then the next action is chosen based on the belief state and the process repeats.

Applications of POMDPs

POMDPs are used in robotics, automated systems, medicine, machine maintenance, biology, networks, linguistics, and many other fields.

There are also many variations on POMDP frameworks depending on the application. Some rely on Monte Carlo-style simulations. Others are used in machine learning applications (in which computers learn from trial and error rather than relying only on programmers’ instructions).

Because POMDPs are widely applicable and also fairly new on the scene, fresh variations are in constant development.

Practice Problem 6

Imagine that you are a doctor trying to help a sneezy patient.

a. Name three possible states for “Why this patient sneezes” and assign a probability to each state. (This is your belief state.)
b. Choose an action based on your belief state.
c. Suppose the patient is still sneezing after your action. Update your belief state.
d. Write a paragraph explaining your reasoning in a through c, and what other steps you might take (including questions to ask or other actions to try) to refine your belief state.

You might also like

- Trauma Stewardship: An Everyday Guide To Caring For Self While Caring For Others - Laura Van Dernoot LipskyDocument5 pagesTrauma Stewardship: An Everyday Guide To Caring For Self While Caring For Others - Laura Van Dernoot Lipskyhyficypo0% (3)

- E-Health Care ManagementDocument92 pagesE-Health Care ManagementbsudheertecNo ratings yet

- Solution Manual Automata Computability PDFDocument20 pagesSolution Manual Automata Computability PDFpraneeth kumar reddyNo ratings yet

- Advamce Diploma in Industrial Safety & Security Management (FF)Document21 pagesAdvamce Diploma in Industrial Safety & Security Management (FF)Dr Shabbir75% (4)

- Reinforcement Learning ExplainedDocument64 pagesReinforcement Learning ExplainedChandra Prakash MeenaNo ratings yet

- Rich Automata SolnsDocument196 pagesRich Automata SolnsAshaNo ratings yet

- Reinforcement Learning: A Short CutDocument7 pagesReinforcement Learning: A Short CutSon KrishnaNo ratings yet

- H5a - Markov Analysis - TheoryDocument15 pagesH5a - Markov Analysis - TheoryChadi AboukrrroumNo ratings yet

- FTI 2021 Tech Trends Volume AllDocument504 pagesFTI 2021 Tech Trends Volume AlljapamecNo ratings yet

- Unit 4Document56 pagesUnit 4randyyNo ratings yet

- Markovian Decision ProcessDocument27 pagesMarkovian Decision Processkoookie barNo ratings yet

- RL Unit 2Document11 pagesRL Unit 2HarshithaNo ratings yet

- CSD311: Artificial IntelligenceDocument11 pagesCSD311: Artificial IntelligenceAyaan KhanNo ratings yet

- DW 01Document14 pagesDW 01Seyed Hossein KhastehNo ratings yet

- Markov Decision Processes & Reinforcement Learning: Megan Smith Lehigh University, Fall 2006Document40 pagesMarkov Decision Processes & Reinforcement Learning: Megan Smith Lehigh University, Fall 2006Sanja Lazarova-MolnarNo ratings yet

- A Tutorial For Reinforcement LearningDocument14 pagesA Tutorial For Reinforcement Learning2guitar2No ratings yet

- A Brief Introduction To Reinforcement LearningDocument4 pagesA Brief Introduction To Reinforcement LearningNarendra PatelNo ratings yet

- System Sequence of Events State: e Relates TheDocument4 pagesSystem Sequence of Events State: e Relates TheHong JooNo ratings yet

- Bandit-Based Monte-Carlo PlanningDocument12 pagesBandit-Based Monte-Carlo PlanningArias JoseNo ratings yet

- Unit-5 Genetic Reinforcement Markov Q-LearningDocument39 pagesUnit-5 Genetic Reinforcement Markov Q-LearningAyush Rao RajputNo ratings yet

- Solving Problems by Searching StrategiesDocument82 pagesSolving Problems by Searching StrategiesJoyfulness EphaNo ratings yet

- 5.4-Reinforcement Learning-Part2-Learning-AlgorithmsDocument15 pages5.4-Reinforcement Learning-Part2-Learning-Algorithmspolinati.vinesh2023No ratings yet

- Unit 3 - Machine Learning - WWW - Rgpvnotes.in PDFDocument18 pagesUnit 3 - Machine Learning - WWW - Rgpvnotes.in PDFYouth MakerNo ratings yet

- Topic13-Markov AnalysisDocument14 pagesTopic13-Markov AnalysisMG MoanaNo ratings yet

- RL TutorialDocument17 pagesRL TutorialNirajDhotreNo ratings yet

- AnwermlDocument10 pagesAnwermlrajeswari reddypatilNo ratings yet

- Bayesian, Markov, Poisson processes & genetic algorithmsDocument3 pagesBayesian, Markov, Poisson processes & genetic algorithmsAkshay YadavNo ratings yet

- Reinforcement LearningDocument32 pagesReinforcement Learningvedang maheshwariNo ratings yet

- Assignment 2, EDAP01: 1 StatementDocument4 pagesAssignment 2, EDAP01: 1 StatementAxel RosenqvistNo ratings yet

- Textbook Solutions Expert Q&A Practice: Find Solutions For Your HomeworkDocument6 pagesTextbook Solutions Expert Q&A Practice: Find Solutions For Your HomeworkMalik AsadNo ratings yet

- Assignment 2, EDAP01: 1 StatementDocument3 pagesAssignment 2, EDAP01: 1 StatementAxel RosenqvistNo ratings yet

- Reinforcement Learning-1Document13 pagesReinforcement Learning-1Sushant VyasNo ratings yet

- Files 1 2019 June NotesHubDocument 1559416363Document6 pagesFiles 1 2019 June NotesHubDocument 1559416363mail.sushilk8403No ratings yet

- DD2431 Machine Learning Lab 4: Reinforcement Learning Python VersionDocument9 pagesDD2431 Machine Learning Lab 4: Reinforcement Learning Python VersionbboyvnNo ratings yet

- Machine Learning Mod 5Document15 pagesMachine Learning Mod 5Vishnu ChNo ratings yet

- Chapter15 1Document36 pagesChapter15 1Reyazul HasanNo ratings yet

- Markov Decision Process TutorialDocument22 pagesMarkov Decision Process TutorialVinitha VasudevanNo ratings yet

- Part 1 - Key Concepts in RL - Spinning Up DocumentationDocument9 pagesPart 1 - Key Concepts in RL - Spinning Up DocumentationFahim SaNo ratings yet

- Syllabus of Machine LearningDocument19 pagesSyllabus of Machine Learninggauri krishnamoorthyNo ratings yet

- CS6700___Reinforcement_Learning_PA1_Jan_May_2024_Document4 pagesCS6700___Reinforcement_Learning_PA1_Jan_May_2024_Rahul me20b145No ratings yet

- The Implementation of A Hidden Markov MoDocument14 pagesThe Implementation of A Hidden Markov MoFito RhNo ratings yet

- Reinforcement LN-6Document13 pagesReinforcement LN-6M S PrasadNo ratings yet

- Reinforcement Learning Robot Walks with Q-LearningDocument5 pagesReinforcement Learning Robot Walks with Q-LearningMohamedLahouaouiNo ratings yet

- Introduction To PlanningDocument44 pagesIntroduction To PlanningKhushbu TandonNo ratings yet

- Dynamic Programming (DP)Document32 pagesDynamic Programming (DP)Lyka AlvarezNo ratings yet

- pptDocument92 pagespptsanudantal42003No ratings yet

- Multiobjective Genetic Algorithm gamultiobj ExplainedDocument6 pagesMultiobjective Genetic Algorithm gamultiobj ExplainedPaolo FlorentinNo ratings yet

- Stochastic Optimization Lecture NotesDocument23 pagesStochastic Optimization Lecture NotesShipra AgrawalNo ratings yet

- Lecture 2: Problem Solving: Delivered by Joel Anandraj.E Ap/ItDocument32 pagesLecture 2: Problem Solving: Delivered by Joel Anandraj.E Ap/ItjoelanandrajNo ratings yet

- ML Unit 4Document9 pagesML Unit 4themojlvlNo ratings yet

- Unit III. Heuristic Search TechniqueDocument15 pagesUnit III. Heuristic Search Techniqueszckhw2mmcNo ratings yet

- Ee126 Project 1Document5 pagesEe126 Project 1api-286637373No ratings yet

- Markov Chain Monte Carlo in RDocument14 pagesMarkov Chain Monte Carlo in RCươngNguyễnBáNo ratings yet

- RL QB Ise-1Document10 pagesRL QB Ise-1Lavkush yadavNo ratings yet

- Metropolis HastingsDocument9 pagesMetropolis HastingsMingyu LiuNo ratings yet

- Chapter 2 Problem SolvingDocument205 pagesChapter 2 Problem SolvingheadaidsNo ratings yet

- Solving Problems by Searching: Reflex Agent Is SimpleDocument91 pagesSolving Problems by Searching: Reflex Agent Is Simplepratima depaNo ratings yet

- Greg Czerniak's Website - Kalman Filters For Undergrads 1 PDFDocument9 pagesGreg Czerniak's Website - Kalman Filters For Undergrads 1 PDFMuhammad ShahidNo ratings yet

- Solving Problems Through Search TechniquesDocument78 pagesSolving Problems Through Search TechniquesTariq IqbalNo ratings yet

- A Short Tutorial On Reinforcement Learning: Review and ApplicationsDocument5 pagesA Short Tutorial On Reinforcement Learning: Review and ApplicationsDanelysNo ratings yet

- RL FrraDocument9 pagesRL FrraVishal TarwatkarNo ratings yet

- Linear Programming, Decision Theory, Monte Carlo SimulationDocument4 pagesLinear Programming, Decision Theory, Monte Carlo SimulationApurva JawadeNo ratings yet

- SPPM Unit 1Document11 pagesSPPM Unit 1bsudheertecNo ratings yet

- Trends and Research Issues in SOA Validation: January 2011Document23 pagesTrends and Research Issues in SOA Validation: January 2011bsudheertecNo ratings yet

- M.E (FT) 2021 Regulation-Cse SyllabusDocument88 pagesM.E (FT) 2021 Regulation-Cse SyllabusbsudheertecNo ratings yet

- Unit - I: 1. Conventional Software ManagementDocument10 pagesUnit - I: 1. Conventional Software ManagementAkash SanjeevNo ratings yet

- Webinar On AI Using MatlabDocument1 pageWebinar On AI Using MatlabbsudheertecNo ratings yet

- Smart Social Distancing TechniqueDocument86 pagesSmart Social Distancing TechniquebsudheertecNo ratings yet

- M.E (FT) 2021 Regulation-Ece SyllabusDocument64 pagesM.E (FT) 2021 Regulation-Ece SyllabusbsudheertecNo ratings yet

- Question Paper Code:: (10×2 20 Marks)Document2 pagesQuestion Paper Code:: (10×2 20 Marks)bsudheertecNo ratings yet

- MDP PDFDocument37 pagesMDP PDFbsudheertecNo ratings yet

- CS554 - Advanced Database Systems Homework 8: Undo-Log RecordsDocument15 pagesCS554 - Advanced Database Systems Homework 8: Undo-Log RecordsbsudheertecNo ratings yet

- A Network Architecture For Heterogeneous Mobile ComputingDocument19 pagesA Network Architecture For Heterogeneous Mobile ComputingYuvraj SahniNo ratings yet

- Cmu CS 99 143 PDFDocument150 pagesCmu CS 99 143 PDFbsudheertecNo ratings yet

- CS8261 - C Programming Laboratory - MCQDocument6 pagesCS8261 - C Programming Laboratory - MCQbsudheertecNo ratings yet

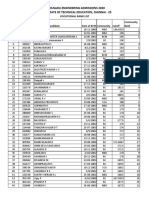

- Tamilnadu Engineering Admissions 2020 Vocational Rank ListDocument35 pagesTamilnadu Engineering Admissions 2020 Vocational Rank ListbsudheertecNo ratings yet

- OOAD exam questions on use cases, UML diagramsDocument2 pagesOOAD exam questions on use cases, UML diagramsbsudheertecNo ratings yet

- Fork System Call and Processes: CS449 Spring 2016Document21 pagesFork System Call and Processes: CS449 Spring 2016Raven MercadoNo ratings yet

- HW3 Sol PDFDocument44 pagesHW3 Sol PDFbsudheertecNo ratings yet

- Index of algorithms and concepts in theoretical computer scienceDocument19 pagesIndex of algorithms and concepts in theoretical computer sciencebsudheertecNo ratings yet

- Academic Allotments PDFDocument259 pagesAcademic Allotments PDFbsudheertecNo ratings yet

- Waside Graphs PDFDocument4 pagesWaside Graphs PDFbsudheertecNo ratings yet

- Deepiris: Iris Recognition Using A Deep Learning ApproachDocument4 pagesDeepiris: Iris Recognition Using A Deep Learning ApproachbsudheertecNo ratings yet

- Maths assignment on subtraction from Srimathi Sundaravalli Memorial SchoolDocument2 pagesMaths assignment on subtraction from Srimathi Sundaravalli Memorial SchoolbsudheertecNo ratings yet

- Lecture 13-14: Pipelines Hazards": Suggested Reading:" (HP Chapter 4.5-4.7) "Document51 pagesLecture 13-14: Pipelines Hazards": Suggested Reading:" (HP Chapter 4.5-4.7) "Max MarkNo ratings yet

- Youngjoong PDFDocument161 pagesYoungjoong PDFbsudheertecNo ratings yet

- Pipeline Review: Here Is The Example Instruction Sequence Used To Illustrate Pipelining On The Previous PageDocument11 pagesPipeline Review: Here Is The Example Instruction Sequence Used To Illustrate Pipelining On The Previous PagebsudheertecNo ratings yet

- ECE 341 Final Exam Solution: Problem No. 1 (10 Points)Document9 pagesECE 341 Final Exam Solution: Problem No. 1 (10 Points)bsudheertecNo ratings yet

- Sanei SK1-31 Technical ManualDocument60 pagesSanei SK1-31 Technical Manualcompu sanaNo ratings yet

- Unit SpaceDocument7 pagesUnit SpaceEnglish DepNo ratings yet

- School of Mathematics and Statistics Spring Semester 2013-2014 Mathematical Methods For Statistics 2 HoursDocument2 pagesSchool of Mathematics and Statistics Spring Semester 2013-2014 Mathematical Methods For Statistics 2 HoursNico NicoNo ratings yet

- Quarter 4 - MELC 11: Mathematics Activity SheetDocument9 pagesQuarter 4 - MELC 11: Mathematics Activity SheetSHAIREL GESIMNo ratings yet

- Ucla Dissertation CommitteeDocument7 pagesUcla Dissertation CommitteeWriteMyPapersDiscountCodeCleveland100% (1)

- Comparing Male and Female Reproductive SystemsDocument11 pagesComparing Male and Female Reproductive SystemsRia Biong TaripeNo ratings yet

- QC OF SUPPOSITORIESDocument23 pagesQC OF SUPPOSITORIESHassan kamalNo ratings yet

- COVID-19 vs WorldDocument2 pagesCOVID-19 vs WorldSJ RimasNo ratings yet

- MTH002 Core MathematicsDocument11 pagesMTH002 Core MathematicsRicardo HoustonNo ratings yet

- Tokenomics Expert - Optimize AI Network ModelDocument3 pagesTokenomics Expert - Optimize AI Network Modelsudhakar kNo ratings yet

- Green MarketingDocument20 pagesGreen MarketingMukesh PrajapatiNo ratings yet

- Research Leadership As A Predictor of Research CompetenceDocument7 pagesResearch Leadership As A Predictor of Research CompetenceMonika GuptaNo ratings yet

- Understanding Philosophy Through Different MethodsDocument5 pagesUnderstanding Philosophy Through Different MethodsRivera DyanaNo ratings yet

- WorkbookDocument7 pagesWorkbookᜃᜋᜒᜎ᜔ ᜀᜂᜇᜒᜈᜓ ᜋᜓᜆ᜔ᜑNo ratings yet

- Apollo / Saturn V: Insert Disk Q and The Fold OverDocument4 pagesApollo / Saturn V: Insert Disk Q and The Fold OverRob ClarkNo ratings yet

- SPEX 201 Lab 1 - Motion Analysis Techniques - STUDENT - 2023Document8 pagesSPEX 201 Lab 1 - Motion Analysis Techniques - STUDENT - 2023tom mallardNo ratings yet

- Fy2023 CBLD-9 RevisedDocument11 pagesFy2023 CBLD-9 Revisedbengalyadama15No ratings yet

- Jul Dec 2021 SK HRH Crec v8 2Document2,264 pagesJul Dec 2021 SK HRH Crec v8 2San DeeNo ratings yet

- Catholic High School of Pilar Brix James DoralDocument3 pagesCatholic High School of Pilar Brix James DoralMarlon James TobiasNo ratings yet

- Élan VitalDocument3 pagesÉlan Vitalleclub IntelligenteNo ratings yet

- Energy Resources Conversion and Utilization: Liq-Liq Extract. & Other Liq-Liq Op. and EquipDocument3 pagesEnergy Resources Conversion and Utilization: Liq-Liq Extract. & Other Liq-Liq Op. and EquipyanyanNo ratings yet

- Volvo - A40F-A40G - Parts CatalogDocument1,586 pagesVolvo - A40F-A40G - Parts Catalog7crwhczghzNo ratings yet

- Power AdministrationDocument17 pagesPower AdministrationWaseem KhanNo ratings yet

- Probability TheoryDocument9 pagesProbability TheoryAlamin AhmadNo ratings yet

- Probability and Statistics Tutorial for EQT 272Document2 pagesProbability and Statistics Tutorial for EQT 272Jared KuyislNo ratings yet

- Adoc - Pub - Bagaimana Motivasi Berprestasi Mendorong KeberhasiDocument18 pagesAdoc - Pub - Bagaimana Motivasi Berprestasi Mendorong KeberhasiDWIKI RIYADINo ratings yet

- Tutorial - Time Series Analysis With Pandas - DataquestDocument32 pagesTutorial - Time Series Analysis With Pandas - DataquestHoussem ZEKIRINo ratings yet