Psychological Assessment - Validity

Uploaded by Ai Huasca

I. The Concept of Validity

• Validity: A judgment or estimate of how well a test measures what it purports to measure in a particular context

• Validation: The process of gathering and evaluating evidence about validity

• Both test developers and test users may play a role in the validation of a test

• Local validation: Test users may validate a test with their own group of testtakers

• Validity is often conceptualized according to three categories:

1. Content validity - A measure of validity based on an evaluation of the subjects, topics, or content covered by the items in the test

2. Criterion-related validity - A measure of validity obtained by evaluating the relationship of scores obtained on the test to scores on other tests or measures

3. Construct validity - A measure of validity arrived at by a comprehensive analysis of:

a. How scores on the test relate to other test scores and measures

b. How scores on the test can be understood within some theoretical framework for understanding the construct that the test was designed to measure

A. Face Validity

• Face validity: A judgment concerning how relevant the test items appear to be

• If a test appears to measure what it purports to measure “on the face of it,” it could be said to be high in face validity

B. Content Validity

• Content validity: A judgment of how adequately a test samples behavior representative of the universe of behavior that the test was designed to sample

• Do the test items adequately represent the content that should be included in the test?

• Test blueprint: A plan regarding the types of information to be covered by the items, the number of items tapping each area of coverage, and the organization of the items in the test
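A test blueprint can be written down as a small table. The sketch below is only an illustration: the content areas, the item counts, and the helper names `coverage` and `check_items` are all hypothetical, not taken from any actual test.

```python
# Hypothetical blueprint: content area -> planned number of items.
blueprint = {
    "Face and content validity": 6,
    "Criterion-related validity": 8,
    "Construct validity": 10,
    "Bias and fairness": 6,
}

def coverage(blueprint):
    """Return each area's share of the total planned item count."""
    total = sum(blueprint.values())
    return {area: count / total for area, count in blueprint.items()}

def check_items(blueprint, item_areas):
    """Compare drafted items (a list of area labels) against the plan.

    Returns area -> (planned, drafted) for every area that is off plan.
    """
    drafted = {area: 0 for area in blueprint}
    for area in item_areas:
        drafted[area] = drafted.get(area, 0) + 1
    return {
        area: (blueprint.get(area, 0), n)
        for area, n in drafted.items()
        if n != blueprint.get(area, 0)
    }

shares = coverage(blueprint)
```

Calling `check_items` on a draft item pool shows which areas are over- or under-sampled relative to the plan, which is the kind of question a content validity review asks.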

C. Culture and the Relativity of Content Validity

• The content validity of a test varies across cultures and time

• Political considerations may also play a role

II. Criterion-Related Validity

A. Criterion-Related Validity

• Criterion-related validity: A judgment of how adequately a test score can be used to infer an individual’s most probable standing on some measure of interest (i.e., the criterion)

• Criterion: The standard against which a test or a test score is evaluated

• Characteristics of a criterion

o An adequate criterion should be relevant for the matter at hand, valid for the purpose for which it is being used, and uncontaminated, meaning it is not based on predictor measures

B. Concurrent Validity

• Concurrent validity: A form of criterion-related validity that is an index of the degree to which a test score is related to some criterion measure obtained at the same time (concurrently)
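In practice the "degree to which a test score is related" to a concurrent criterion is usually summarized with a correlation. The sketch below assumes a Pearson correlation is appropriate; the data, the variable names, and the helper `pearson_r` are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical data: a new screening test and a clinician rating
# collected from the same six people in the same session.
test_scores = [10, 12, 15, 18, 20, 25]
clinician_ratings = [2, 3, 3, 4, 5, 6]

r = pearson_r(test_scores, clinician_ratings)
print(f"concurrent validity estimate: r = {r:.2f}")
```

With only six cases this is a toy example; a real validation study would need a much larger and representative sample.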

C. Predictive Validity

• Predictive validity: A form of criterion-related validity that is an index of the degree to which a test score predicts some criterion measure obtained at a later time

• Validity coefficient: A correlation coefficient that provides a measure of the relationship between test scores and scores on the criterion measure

• Validity coefficients are affected by restriction or inflation of range

• Incremental validity: The degree to which an additional predictor explains something about the criterion measure that is not explained by predictors already in use

• To what extent does a test predict the criterion over and above other variables?
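Two points from the list above, restriction of range and incremental validity, can be illustrated numerically. This is a minimal sketch under stated assumptions: the scores are invented, incremental validity is expressed as the gain in R² when a second predictor is added, and the two-predictor R² is computed from the three pairwise correlations. The function names are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Restriction of range: invented test and criterion scores.
test = [1, 2, 3, 4, 5, 6, 7, 8]
criterion = [2, 1, 4, 3, 6, 5, 8, 7]
r_full = pearson_r(test, criterion)  # about 0.90

# Keep only people who scored 5 or higher on the test (e.g., those hired).
kept = [(t, c) for t, c in zip(test, criterion) if t >= 5]
r_restricted = pearson_r([t for t, _ in kept], [c for _, c in kept])  # 0.60

def r_squared_two_predictors(r_y1, r_y2, r_12):
    """R^2 for a criterion regressed on two predictors.

    r_y1, r_y2: validity coefficients of predictors 1 and 2.
    r_12: correlation between the two predictors (must not be +/-1).
    """
    return (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)

def incremental_validity(r_y1, r_y2, r_12):
    """Gain in R^2 from adding predictor 2 to predictor 1."""
    return r_squared_two_predictors(r_y1, r_y2, r_12) - r_y1**2
```

For example, `incremental_validity(0.5, 0.4, 0.0)` is about 0.16, because an uncorrelated second predictor contributes all of its own variance. `incremental_validity(0.5, 0.4, 0.8)` is about 0, because a second predictor that largely duplicates the first adds nothing new.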

III. Construct Validity

• Judgment about the appropriateness of inferences drawn from test scores regarding individual standings on a construct

• If a test is a valid measure of a construct, then high scorers and low scorers should behave as theorized

• All types of validity evidence, including evidence from the content- and criterion-related varieties of validity, come under the umbrella of construct validity

A. Evidence of Construct Validity

• Evidence of homogeneity - How uniform a test is in measuring a single concept

• Evidence of changes with age - Some constructs are expected to change over time (e.g., reading rate)

• Evidence of pretest–posttest changes - Test scores change as a result of some experience between a pretest and a posttest (e.g., therapy)

• Evidence from distinct groups - Scores on a test vary in a predictable way as a function of membership in some group

• Convergent evidence: Scores on the test undergoing construct validation tend to correlate highly in the predicted direction with scores on older, more established tests designed to measure the same (or a similar) construct

• Discriminant evidence: A validity coefficient showing little relationship between test scores and variables with which scores on the test should not theoretically be correlated

• Factor analysis: A class of mathematical procedures designed to identify factors, that is, specific variables on which people may differ
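For the first item in the list above, homogeneity, one common index (not named in these notes) is coefficient alpha. The sketch below assumes that index and uses invented item scores; `coefficient_alpha` is a hypothetical helper name.

```python
from statistics import variance

def coefficient_alpha(items):
    """Coefficient (Cronbach's) alpha.

    items: one list of scores per item, with respondents in the same
    order in every list. Uses sample variances throughout.
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per person
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))

# Invented data: three items answered by four respondents.
items = [
    [1, 2, 3, 4],
    [2, 3, 4, 5],
    [1, 3, 3, 5],
]
alpha = coefficient_alpha(items)
print(f"alpha = {alpha:.2f}")
```

A high value suggests the items vary together, which is consistent with (but does not prove) the test measuring a single concept.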

IV. Validity, Bias, and Fairness

A. Test Bias

• A factor inherent in a test that systematically prevents accurate, impartial measurement

• Bias implies systematic variation in test scores

• Prevention during test development is the best cure for test bias

B. Rating Error

• A judgment resulting from the intentional or unintentional misuse of a rating scale

• Raters may be too lenient (leniency error), too severe (severity error), or reluctant to give ratings at the extremes (central tendency error)

• Halo effect: A tendency to give a particular person a higher rating than he or she objectively deserves because of a favorable overall impression
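The leniency, severity, and central tendency patterns described above can be screened for very roughly by looking at each rater's mean and spread. This is an illustrative heuristic only: the function name `screen_rater` and the thresholds `shift` and `min_spread` are arbitrary assumptions, not established cutoffs.

```python
from statistics import mean, pstdev

def screen_rater(ratings, low=1, high=5, shift=1.0, min_spread=0.5):
    """Crude screen of one rater's ratings on a low..high scale.

    shift and min_spread are arbitrary, illustrative thresholds.
    """
    midpoint = (low + high) / 2
    m, s = mean(ratings), pstdev(ratings)
    if m >= midpoint + shift:
        return "possible leniency"
    if m <= midpoint - shift:
        return "possible severity"
    if s < min_spread:
        return "possible central tendency"
    return "no flag"

print(screen_rater([5, 5, 4, 5, 4]))
print(screen_rater([3, 3, 3, 3, 3]))
```

A flag is only a prompt to look closer: a rater with a high mean may simply have rated a strong group, so the pattern should be compared with other raters who judged the same people.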

C. Test Fairness

• Fairness: The extent to which a test is used in an impartial, just, and equitable way

Term to Learn

• Validity: As applied to a test, validity is a judgment or estimate of how well a test measures what it purports to measure in a particular context.

From <https://adamson.blackboard.com/ultra/courses/_3408_1/outline/edit/document/_359375_1?courseId=_3408_1>

You might also like

- Chapter 5: Validity For TeachersDocument31 pagesChapter 5: Validity For TeachersmargotNo ratings yet

- Validity LectureDocument4 pagesValidity LectureMaica Ann Joy SimbulanNo ratings yet

- Validity: PSY 112: Psychological AssessmentDocument61 pagesValidity: PSY 112: Psychological AssessmentAngel Love HernandezNo ratings yet

- Chapter 6 ValidityDocument39 pagesChapter 6 ValidityLikith Kumar LikithNo ratings yet

- ValidityDocument17 pagesValidityifatimanaqvi1472100% (1)

- Unit 4: Qualities of A Good Test: Validity, Reliability, and UsabilityDocument18 pagesUnit 4: Qualities of A Good Test: Validity, Reliability, and Usabilitymekit bekeleNo ratings yet

- Validity and Reliability of Research InstrumentDocument47 pagesValidity and Reliability of Research Instrumentjeffersonsubiate100% (4)

- RMM Lecture 17 Criteria For Good Measurement 2006Document31 pagesRMM Lecture 17 Criteria For Good Measurement 2006Shaheena SanaNo ratings yet

- MeasurementDocument34 pagesMeasurementsnehalNo ratings yet

- Evaluation: Prepared By: Usha Kiran Poudel SBA, 2079Document121 pagesEvaluation: Prepared By: Usha Kiran Poudel SBA, 2079Sushila Hamal ThakuriNo ratings yet

- What Is TestDocument35 pagesWhat Is TestZane FavilaNo ratings yet

- Validity and ReliabilityDocument70 pagesValidity and ReliabilitysreenivasnkgNo ratings yet

- Lecture 1 - Introd. Reliability - and - ValiditDocument15 pagesLecture 1 - Introd. Reliability - and - Validitnickokinyunyu11No ratings yet

- Validity and Reliability in ResearchDocument18 pagesValidity and Reliability in ResearchMoud KhalfaniNo ratings yet

- How Are Reliability and Validity AssessedDocument9 pagesHow Are Reliability and Validity AssessedEiya BaharudinNo ratings yet

- Chap 6 (1) April 20Document59 pagesChap 6 (1) April 20sumayyah ariffinNo ratings yet

- 5 Reserach DesignsDocument33 pages5 Reserach DesignsPrakash NaikNo ratings yet

- Validity and ReliabilityDocument3 pagesValidity and Reliabilitysunshinesamares28No ratings yet

- Research ppt2Document31 pagesResearch ppt2sana shabirNo ratings yet

- 4 Reliability ValidityDocument47 pages4 Reliability ValidityMin MinNo ratings yet

- Validation, CH 8Document19 pagesValidation, CH 8Veronica FeltesNo ratings yet

- ReliabilityDocument10 pagesReliabilitykhyatiNo ratings yet

- Data Collection, Reliability and Validity Metpen 2017Document44 pagesData Collection, Reliability and Validity Metpen 2017Miranda MetriaNo ratings yet

- 601 4 Research Reliability & ValidityDocument13 pages601 4 Research Reliability & ValidityDe ZerNo ratings yet

- Test Construction and Administration Suman VPDocument34 pagesTest Construction and Administration Suman VPRihana DilshadNo ratings yet

- W4 Validity and ReliabilityDocument28 pagesW4 Validity and ReliabilityNgọc A.ThưNo ratings yet

- BRM Measurement and Scale 2019Document50 pagesBRM Measurement and Scale 2019MEDISHETTY MANICHANDANANo ratings yet

- M2L7 - Establishing Validity and Reliability of TestsDocument37 pagesM2L7 - Establishing Validity and Reliability of TestsAVEGAIL SALUDONo ratings yet

- Psychometric Properties of A Good TestDocument44 pagesPsychometric Properties of A Good TestJobelle MalihanNo ratings yet

- Unit 9 Measurements - ShortDocument27 pagesUnit 9 Measurements - ShortMitiku TekaNo ratings yet

- Importance of Validity Presentation RealDocument11 pagesImportance of Validity Presentation RealNurul SaniahNo ratings yet

- Concept of Test Validity: Sowunmi E. TDocument14 pagesConcept of Test Validity: Sowunmi E. TJohnry DayupayNo ratings yet

- Lecture-10 (Reliability & Validity)Document17 pagesLecture-10 (Reliability & Validity)Kamran Yousaf AwanNo ratings yet

- P-7 Econ PratishthaDocument28 pagesP-7 Econ Pratishthaप्रतिष्ठा वर्माNo ratings yet

- Characteristics of A Good TestDocument5 pagesCharacteristics of A Good TestAileen Cañones100% (1)

- Chapter 6 ValidityDocument2 pagesChapter 6 Validityd092184No ratings yet

- Lecture 8 - Measurement X 6 PDFDocument10 pagesLecture 8 - Measurement X 6 PDFjstgivemesleepNo ratings yet

- Week 016 Validity of Measurement and ReliabilityDocument7 pagesWeek 016 Validity of Measurement and ReliabilityIrene PayadNo ratings yet

- Educational Research Validity & Types of Validity: Ayaz Muhammad KhanDocument17 pagesEducational Research Validity & Types of Validity: Ayaz Muhammad KhanFahad MushtaqNo ratings yet

- ValidityDocument36 pagesValidityMuhammad FaisalNo ratings yet

- Aie DR OoiDocument19 pagesAie DR OoiThivyah ThiruchelvanNo ratings yet

- Reliabiity and ValidityDocument23 pagesReliabiity and ValiditySyed AliNo ratings yet

- Types of NormDocument9 pagesTypes of NormMARICRIS AQUINONo ratings yet

- 5 A Assessment PracticesDocument24 pages5 A Assessment Practicesstephanie dawn cachuelaNo ratings yet

- ReliabilityDocument2 pagesReliabilityAnna CrisNo ratings yet

- Lucyana Simarmata Edres C Week 11Document4 pagesLucyana Simarmata Edres C Week 11Lucyana SimarmataNo ratings yet

- Chapter 6.assessment of Learning 1Document11 pagesChapter 6.assessment of Learning 1Emmanuel MolinaNo ratings yet

- Quantitative Analysis Quantitative Analysis: Prepared By: AUDRHEY N. RAMIREZDocument33 pagesQuantitative Analysis Quantitative Analysis: Prepared By: AUDRHEY N. RAMIREZLyth LythNo ratings yet

- Item Analysis and ValidationDocument18 pagesItem Analysis and ValidationRemy SaclausaNo ratings yet

- CH IV. Measurement and ScalingDocument51 pagesCH IV. Measurement and ScalingBandana SigdelNo ratings yet

- What Is ResearchDocument8 pagesWhat Is ResearchsayyedparasaliNo ratings yet

- Course Code 503 Achievement Test Microsoft PowerPoint PresentationDocument49 pagesCourse Code 503 Achievement Test Microsoft PowerPoint PresentationMi KhanNo ratings yet

- Tahapan Konseptual Penyusunan Alat Ukur (Kuesioner)Document21 pagesTahapan Konseptual Penyusunan Alat Ukur (Kuesioner)Anton Thon BotaxNo ratings yet

- Chapter 5 - Measurement TechniquesDocument46 pagesChapter 5 - Measurement TechniquesFarah A. RadiNo ratings yet

- Research Methodology: ValidityDocument24 pagesResearch Methodology: ValidityVJ SoniNo ratings yet

- Module 5 ValidityDocument17 pagesModule 5 ValidityVismaya RaghavanNo ratings yet

- Chapter 5 ReliabilityDocument38 pagesChapter 5 ReliabilityJomar SayamanNo ratings yet

- Validity: MEAM 607 - Advanced Test and Measurement By: Sherwin TrinidadDocument38 pagesValidity: MEAM 607 - Advanced Test and Measurement By: Sherwin TrinidadShear WindNo ratings yet

- CISA EXAM-Testing Concept-Knowledge of Compliance & Substantive Testing AspectsFrom EverandCISA EXAM-Testing Concept-Knowledge of Compliance & Substantive Testing AspectsRating: 3 out of 5 stars3/5 (4)

- Healthcare Staffing Candidate Screening and Interviewing HandbookFrom EverandHealthcare Staffing Candidate Screening and Interviewing HandbookNo ratings yet

- MCO-3 ENG CompressedDocument2 pagesMCO-3 ENG CompressedKripa Shankar MishraNo ratings yet

- Moral IntuitionismDocument24 pagesMoral IntuitionismAshjasheen JamenourNo ratings yet

- Blonote Vocabulary BookDocument74 pagesBlonote Vocabulary BookNurul Hikmah100% (7)

- Holistic Grammar TeachingDocument3 pagesHolistic Grammar Teachingmangum1No ratings yet

- Chisquare Indep Sol1Document13 pagesChisquare Indep Sol1Munawar100% (1)

- Besiness Management ExamDocument7 pagesBesiness Management ExamBrenda HarveyNo ratings yet

- Avon Cosmetics Inc F. Pimentel Ave., Purok 1, Bgry. Cobangbang, Daet, Camarines Norte 4600 PH. VAT Reg.: TIN 000-107-629-014Document4 pagesAvon Cosmetics Inc F. Pimentel Ave., Purok 1, Bgry. Cobangbang, Daet, Camarines Norte 4600 PH. VAT Reg.: TIN 000-107-629-014Patricia Nicole De JuanNo ratings yet

- DURAND, Gilbert. The Anthropological Structures of The Imaginary.Document226 pagesDURAND, Gilbert. The Anthropological Structures of The Imaginary.Haku Macan100% (2)

- Assessment of LearningDocument2 pagesAssessment of LearningHannah JuanitasNo ratings yet

- Comprehensive Care PlanDocument10 pagesComprehensive Care Planapi-381124066No ratings yet

- ResourcesDocument104 pagesResourcesAbdoul Hakim BeyNo ratings yet

- Scale For Measuring Transformational Leadership inDocument13 pagesScale For Measuring Transformational Leadership ingedleNo ratings yet

- Innovation Culture at 3M: by 5FMDocument9 pagesInnovation Culture at 3M: by 5FMindratetsuNo ratings yet

- Ridera DLP Eng g10 q1 Melc 5 Week 5Document7 pagesRidera DLP Eng g10 q1 Melc 5 Week 5Lalaine RideraNo ratings yet

- Yigitmert Karaman: Prof IleDocument1 pageYigitmert Karaman: Prof IleAnonymous hNKOU31No ratings yet

- Chanakya National Law UniversityDocument20 pagesChanakya National Law UniversityManini JaiswalNo ratings yet

- When I Look at A Strawberry I Think of A TongueDocument6 pagesWhen I Look at A Strawberry I Think of A TongueYusuf Ersel BalNo ratings yet

- The Snowball Effect: Communication Techniques To Make You UnstoppableDocument29 pagesThe Snowball Effect: Communication Techniques To Make You UnstoppableCapstone PublishingNo ratings yet

- Caselet 1Document3 pagesCaselet 1123nickil100% (1)

- Digital Lesson PlanDocument5 pagesDigital Lesson Planapi-325767293No ratings yet

- Consumer InvolvementDocument14 pagesConsumer InvolvementkaranchouhanNo ratings yet

- A Thousand Years of Good Prayers Dialectical JournalDocument2 pagesA Thousand Years of Good Prayers Dialectical Journalapi-327824054No ratings yet

- An Analysis of Kelly McGonigals TED Talk - How To Make Stress YouDocument7 pagesAn Analysis of Kelly McGonigals TED Talk - How To Make Stress YouTeffi Boyer MontoyaNo ratings yet

- English 10 - Q1 - Module 3 - Identify The Features of Persuasive Texts - PDF - 13pagesDocument15 pagesEnglish 10 - Q1 - Module 3 - Identify The Features of Persuasive Texts - PDF - 13pagesMarife Magsino100% (4)

- Evoluia Creierului Prin Encefalizare Sau de Ce Schimbarea e DureroasDocument7 pagesEvoluia Creierului Prin Encefalizare Sau de Ce Schimbarea e DureroasCatalin PopNo ratings yet

- Forgiveness and InterpretationDocument24 pagesForgiveness and InterpretationCarlos PerezNo ratings yet

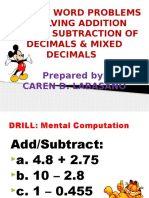

- Solving Word Problems Involving Addition andDocument11 pagesSolving Word Problems Involving Addition andJeffrey Catacutan Flores100% (2)

- Activity 1.1-Erika Joie PelagiaDocument2 pagesActivity 1.1-Erika Joie PelagiaErika Joie PelagiaNo ratings yet

- Cadbury SMDocument8 pagesCadbury SMSmrutiranjan MeherNo ratings yet

- The Spheres of Influence and Wisdom 3Document2 pagesThe Spheres of Influence and Wisdom 3idonotconsent1957No ratings yet