10 Error in Measurement

Uploaded by Mehmet Akif Demirlek

MEE104 Measurement and Evaluation Techniques

Errors in measurement

Errors will creep into all experiments regardless of the care exerted. Some of these errors are of a random nature,

and some will be due to gross blunders on the part of the experimenter. Bad data due to obvious blunders may

be discarded immediately. But what of the data points that just “look” bad? We cannot throw out data because

they do not conform with our hopes and expectations unless we see something obviously wrong. They may, however,

be discarded on the basis of some consistent statistical data analysis.

1. Errors

Error is the difference between the measured value and the ‘true value’ of the thing being measured. The word

'error' does not imply that any mistakes have been made. There are two general categories of error: systematic

(or bias) errors and random (or precision) errors.

Systematic errors (also called bias errors) are consistent, repeatable errors. Systematic errors can be

revealed when the conditions are varied, whether deliberately or unintentionally. For example,

suppose the first two millimeters of a ruler are broken off, and the user is not aware of it. Every

reading will then be off by the same two millimeters, which is a systematic error. The measured

values with systematic errors can be related to true values via some algebraic equation.

Random errors (also called precision errors) are caused by a lack of repeatability in the output of the

measuring system. Random errors may arise from uncontrolled test conditions in the measurement system,

measurement methods, environmental conditions, or data reduction techniques. The most common sign of

random errors is scatter in the measured data. For example, background electrical noise often results

in small random errors in the measured output.

A. Systematic (or Bias) Errors

The systematic error is defined as the average of measured values minus the true value.

Systematic errors arise for many reasons:

Calibration errors – perhaps due to nonlinearity or errors in the calibration method.

Loading or intrusion errors – the sensor may actually change the very thing it is trying to measure.

Spatial errors – these arise when a quantity varies in space, but a measurement is taken only at one

location (e.g. temperature in a room - usually the top of a room is warmer than the bottom).

Human errors – these can arise if a person consistently reads a scale on the low side, for example.

Defective equipment errors – these arise if the instrument consistently reads too high or too low due to

some internal problem or damage (such as our defective ruler example above).

The following four methods are reported to reduce systematic errors [1]:

Calibration, by checking the output(s) for known input(s).

Concomitant method, by using different methods of estimating the same thing and comparing the results.

Inter-laboratory comparison, by comparing the results of similar measurements.

Experience.
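The calibration method listed above can be sketched in a few lines. This is only an illustration under stated assumptions: the reference inputs and indicated outputs are hypothetical numbers, the instrument is assumed to respond linearly, and the helper names `fit_line` and `correct` are not from the course notes.

```python
# Hypothetical calibration data: known reference inputs and the values
# the instrument indicated for them (assumed linear response).
reference = [0.0, 10.0, 20.0, 30.0]
indicated = [0.5, 10.7, 20.9, 31.1]


def fit_line(xs, ys):
    """Least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(reference, indicated)


def correct(reading):
    """Invert the fitted calibration line to estimate the true input."""
    return (reading - intercept) / slope


print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
print(f"corrected value for a reading of 15.8: {correct(15.8):.2f}")
```

In this sketch the intercept plays the role of a constant offset and the slope that of a sensitivity error; once both are known, the systematic part of a reading can be removed.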

B. Random (or Precision) Errors

Random errors are unrepeatable, inconsistent errors in the measurements, resulting in scatter in the data. The

random error of one data point is defined as the reading minus the average of readings.

Thus, precision error is identical to random error.

Example:

Given: Five temperature readings are taken of some snow: -1.30, -1.50, -1.20, -1.60, and -1.50 °C.

To do: Find the maximum magnitude of random error in °C.
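One way to work this example is sketched below, using the definition given above (random error of a reading = reading minus the average of readings). The variable names are illustrative, not from the notes.

```python
# Readings from the example, in degrees Celsius.
readings = [-1.30, -1.50, -1.20, -1.60, -1.50]

# Average of the readings.
mean = sum(readings) / len(readings)

# Random (precision) error of each reading: reading minus the average.
random_errors = [x - mean for x in readings]

# The random error with the largest magnitude, keeping its sign.
max_random_error = max(random_errors, key=abs)

print(f"mean = {mean:.2f} °C")
print(f"maximum random error magnitude = {abs(max_random_error):.2f} °C")
```

The average is -1.42 °C, and the third reading (-1.20 °C) deviates most from it, by 0.22 °C.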

C. Accuracy revisited

Accuracy is the closeness of agreement between a measured value and the true value. Accuracy error is formally

defined as the measured value minus the true value.

The accuracy error of a reading (which may also be called inaccuracy or uncertainty) represents a combination of

bias and precision errors.

The overall accuracy error (or the overall inaccuracy) of a set of readings is defined as the average of all readings

minus the true value. Thus, overall accuracy error is identical to systematic or bias error.
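The statement that the accuracy error of a reading combines bias and precision errors can be written out explicitly. In the notation assumed here (not used in the notes themselves), x_i is one reading, x̄ is the average of the readings, and x_true is the true value:

```latex
\underbrace{x_i - x_{\text{true}}}_{\text{accuracy error}}
  = \underbrace{\left(\bar{x} - x_{\text{true}}\right)}_{\text{systematic (bias) error}}
  + \underbrace{\left(x_i - \bar{x}\right)}_{\text{random (precision) error}}
```

The identity follows from adding and subtracting x̄ on the right-hand side, so it holds for every reading in the set.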

Example:

Given: Consider the same five temperature readings as in the previous example, i.e., -1.30, -1.50, -1.20, -1.60, and -1.50 °C. Also suppose that the true temperature of the snow was -1.45 °C.

To do: Calculate the accuracy error of the third data point in °C. What is the overall accuracy error?
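A possible worked solution, applying the definitions above directly (accuracy error = measured value minus true value; overall accuracy error = average of readings minus true value). The variable names are illustrative.

```python
# Readings and true value from the example, in degrees Celsius.
readings = [-1.30, -1.50, -1.20, -1.60, -1.50]
true_value = -1.45

mean = sum(readings) / len(readings)

# Accuracy error of the third reading: measured value minus true value.
accuracy_error_third = readings[2] - true_value

# Overall accuracy error (the bias): average of readings minus true value.
overall_accuracy_error = mean - true_value

# Precision error of the third reading, for comparison.
precision_error_third = readings[2] - mean

print(f"accuracy error of third reading = {accuracy_error_third:.2f} °C")
print(f"overall accuracy error = {overall_accuracy_error:.2f} °C")
```

The third reading has an accuracy error of 0.25 °C, which is the sum of the overall accuracy (bias) error of 0.03 °C and that reading's precision error of 0.22 °C.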

Precision characterizes the random error of the instrument’s output. Precision error (of one reading) is defined as

the reading minus the average of readings.

D. Other Sources for Errors

There are many other errors, which all have technical names, as defined here:

Linearity error: The output deviates from the calibrated linear relationship between the input and the

output (see further discussion in the next section on calibration). Linearity error is a type of bias error, but

unlike zero error, the degree of error varies with the magnitude of the reading.

Sensitivity error: The slope of the output vs. input curve is not calibrated exactly in the first place. Since

this affects all readings by the instrument, this is a type of systematic or bias error.

Resolution error: The output precision is limited to discrete steps (e.g., if one reads to the nearest

millimeter on a ruler, the resolution error is around +/- 1 mm). Resolution error is a type of random or

precision error.

Hysteresis error: The output is different, depending on whether the input is increasing or decreasing at the

time of measurement. This is a separate error from instrument repeatability error.

For example, a motor-driven traverse may fall short of its reading due to friction, and the effect would be of

opposite sign when the traverse arrives at the same point from the opposite direction; thus, hysteresis error is a

systematic error, not a random error.

Instrument repeatability error: The instrument gives a different output, when the input returns to the

same value, and the procedure to get to that value is the same. The reasons for the differences are usually

random, so instrument repeatability error is a type of random error.

Drift error: The output changes (drifts) from its correct value, even though the input remains constant.

Drift error can often be seen in the zero reading, which may fluctuate randomly due to electrical noise and

other random causes, or it can drift higher or lower (zero drift) due to nonrandom causes, such as a slow

increase in air temperature in the room. Thus, drift error can be either random or systematic.

2. Statistical Representation of Experimental Data

Statistics is the area of science that deals with the collection, organization, analysis, and interpretation of data. Suppose a large box contains a population of thousands of similar-valued resistors. To get an idea of the value of the resistors, we might measure a certain number of samples from the thousands. The measured resistor values form a data set, which can be used to infer something about the entire population of resistors in the box, such as the average value and the variation.

Probability and statistics allow us to convert (or reduce) raw data into results that provide information for a large class of engineering judgments.

A. Basic Definitions for Data Analysis using Statistics

Basic terms that define the groups that are subject of statistical analysis are as follows:

Population: defined collection about which we want to draw a conclusion

Sample: a random subset of the population

Element: an individual member of the sample set

Parameter: numerical quantity measuring some aspect of a population

Data: All measured values

Statistic: quantity calculated from data collected

A population consists of the series of measurements (or readings) available for some variable x. Variable x can be

anything that is measurable, such as a length, time, voltage, current, resistance, etc.

A sampling of the variable x under controlled, fixed operating conditions renders a finite number of data points.

As a result, a sample of these measurements consists of some portion of the population that is to be analyzed

statistically. The measurements are x1, x2, x3, ..., xn, where n is the number of measurements in the sample under

consideration.

Data is the collection of the observations or measurements on a variable and refers to quantitative or qualitative

attributes of a variable or set of variables (e.g., the scores of students).

A data set obtained from observations usually looks uninformative in its original form and is called raw data (also called ungrouped data).

When summarizing large quantities of raw data, it is often useful to distribute the data into groups (grouped data).

B. Statistics Definitions Associated with Random Error

Statistics are numerical values used to summarize and compare sets of data. Two important

types of statistics are measures of central tendency and measures of dispersion.

A measure of central tendency is a number used to represent the center or middle of a set of data values.

The mean, median, and mode are three commonly used measures of central tendency.

A measure of dispersion is a statistic that tells you how dispersed, or spread out, the data values are.

Measures Of Central Tendency

Mean: the sample mean is simply the arithmetic average, as is commonly calculated

$$\bar{x} = x_{\text{avg}} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

where x_i is one of the n measurements of the sample.

• The MEAN of a set of values or measurements is the sum of all the measurements divided by the number

of measurements in the set. The mean is the most popular and widely used. It is sometimes called the

arithmetic mean.

• If we compute the mean of the sample, we call it the sample mean and it is denoted by x̄ (read “x bar”).

• If we compute the mean of the population, we call it the parametric or population mean, denoted by μ

(read “mu”).

Weighted mean is the mean of a set of values in which each value or measurement has a different weight or degree of importance. Its formula is:

$$\text{mean} = \frac{\sum x\,w}{\sum w}$$

where x is a measurement or value and w is its weight (for grouped data, the number of measurements of that value). The weighted mean can be used to calculate the mean of grouped data.
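As a small illustration of the grouped-data case, the sketch below computes a weighted mean from distinct values and their counts. The numbers and the function name `weighted_mean` are hypothetical, chosen only to show that the result matches the ordinary mean of the ungrouped data.

```python
def weighted_mean(values, weights):
    """Return sum(x * w) / sum(w) for paired values and weights."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("sum of weights must be nonzero")
    return sum(x * w for x, w in zip(values, weights)) / total_weight


# Hypothetical grouped data: each value and how many times it was measured.
values = [10, 20, 30]
counts = [2, 5, 3]

grouped_mean = weighted_mean(values, counts)

# The same data written out as raw (ungrouped) measurements.
raw = [10, 10, 20, 20, 20, 20, 20, 30, 30, 30]
raw_mean = sum(raw) / len(raw)

print(grouped_mean, raw_mean)
```

Both approaches give 21.0 here, since (10·2 + 20·5 + 30·3) / (2 + 5 + 3) = 210 / 10.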

Trimmed Mean: If there are extreme values (also called outliers) in a sample, the mean is influenced greatly by those values. To offset the effect of those extreme values, we can use the concept of the trimmed mean. The trimmed mean is defined as the mean obtained after chopping off values at the high and low extremes.
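A minimal sketch of a trimmed mean follows. The notes do not say how many values to chop off, so the parameter `k` (the number of values dropped from each end) is an assumption, as are the function name and the sample data.

```python
def trimmed_mean(values, k):
    """Mean after dropping the k lowest and k highest values (k is an assumed parameter)."""
    if k < 0 or 2 * k >= len(values):
        raise ValueError("k must be non-negative and leave at least one value")
    ordered = sorted(values)
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Hypothetical sample with one obvious outlier (25.0).
sample = [9.8, 10.1, 10.0, 9.9, 10.2, 25.0]

plain_mean = sum(sample) / len(sample)
trimmed = trimmed_mean(sample, 1)

print(f"plain mean = {plain_mean:.2f}")
print(f"trimmed mean (k=1) = {trimmed:.2f}")
```

With the outlier included the plain mean is 12.50, while dropping one value from each end gives 10.05, much closer to the bulk of the data.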

You might also like

- 15confidence LevelDocument1 page15confidence LevelMehmet Akif DemirlekNo ratings yet

- Histograms: Range 65-12 53 Choose To Have 6 Groups Bin WidthDocument2 pagesHistograms: Range 65-12 53 Choose To Have 6 Groups Bin WidthMehmet Akif DemirlekNo ratings yet

- 14what Graphical Representation ProvidesDocument2 pages14what Graphical Representation ProvidesMehmet Akif DemirlekNo ratings yet

- 12frequency Distributions and Their GraphsDocument3 pages12frequency Distributions and Their GraphsMehmet Akif DemirlekNo ratings yet

- Uncertaintiy Analysis: A. How Can We Describe Our Process or Products When They Are Random?Document3 pagesUncertaintiy Analysis: A. How Can We Describe Our Process or Products When They Are Random?Mehmet Akif DemirlekNo ratings yet

- 6graphical Representation of Measurement ResultsDocument5 pages6graphical Representation of Measurement ResultsMehmet Akif DemirlekNo ratings yet

- Measures of Central Tendency:: Median and ModeDocument2 pagesMeasures of Central Tendency:: Median and ModeMehmet Akif DemirlekNo ratings yet

- 3dimesional AnalysisDocument3 pages3dimesional AnalysisMehmet Akif DemirlekNo ratings yet

- A. Introduction: The Experiment and Observation Require Direct or Indirect Interaction With Physical System or ObjectDocument7 pagesA. Introduction: The Experiment and Observation Require Direct or Indirect Interaction With Physical System or ObjectMehmet Akif DemirlekNo ratings yet

- 2intro To Physical SystemDocument4 pages2intro To Physical SystemMehmet Akif DemirlekNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- PPB 3193 Operation Management - Group 10Document11 pagesPPB 3193 Operation Management - Group 10树荫世界No ratings yet

- Floor Paln ModelDocument15 pagesFloor Paln ModelSaurav RanjanNo ratings yet

- Labor CasesDocument47 pagesLabor CasesAnna Marie DayanghirangNo ratings yet

- Gravity Based Foundations For Offshore Wind FarmsDocument121 pagesGravity Based Foundations For Offshore Wind FarmsBent1988No ratings yet

- Liga NG Mga Barangay: Resolution No. 30Document2 pagesLiga NG Mga Barangay: Resolution No. 30Rey PerezNo ratings yet

- Study of Risk Perception and Potfolio Management of Equity InvestorsDocument58 pagesStudy of Risk Perception and Potfolio Management of Equity InvestorsAqshay Bachhav100% (1)

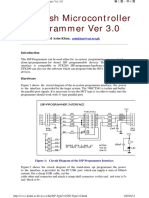

- ISP Flash Microcontroller Programmer Ver 3.0: M Asim KhanDocument4 pagesISP Flash Microcontroller Programmer Ver 3.0: M Asim KhanSrđan PavićNo ratings yet

- 1980WB58Document167 pages1980WB58AKSNo ratings yet

- Assessment 21GES1475Document4 pagesAssessment 21GES1475kavindupunsara02No ratings yet

- UBITX V6 MainDocument15 pagesUBITX V6 MainEngaf ProcurementNo ratings yet

- MEMORANDUMDocument8 pagesMEMORANDUMAdee JocsonNo ratings yet

- Notice For AsssingmentDocument21 pagesNotice For AsssingmentViraj HibareNo ratings yet

- Tendernotice 1Document42 pagesTendernotice 1Hanu MittalNo ratings yet

- Time-Dependent Deformation of Shaly Rocks in Southern Ontario 1978Document11 pagesTime-Dependent Deformation of Shaly Rocks in Southern Ontario 1978myplaxisNo ratings yet

- Intelligent Smoke & Heat Detectors: Open, Digital Protocol Addressed by The Patented XPERT Card Electronics Free BaseDocument4 pagesIntelligent Smoke & Heat Detectors: Open, Digital Protocol Addressed by The Patented XPERT Card Electronics Free BaseBabali MedNo ratings yet

- Electrical Estimate Template PDFDocument1 pageElectrical Estimate Template PDFMEGAWATT CONTRACTING AND ELECTRICITY COMPANYNo ratings yet

- 88 - 02 Exhaust Manifold Gasket Service BulletinDocument3 pages88 - 02 Exhaust Manifold Gasket Service BulletinGerrit DekkerNo ratings yet

- Ssasaaaxaaa11111......... Desingconstructionof33kv11kvlines 150329033645 Conversion Gate01Document167 pagesSsasaaaxaaa11111......... Desingconstructionof33kv11kvlines 150329033645 Conversion Gate01Sunil Singh100% (1)

- Week 7 Apple Case Study FinalDocument18 pagesWeek 7 Apple Case Study Finalgopika surendranathNo ratings yet

- Kompetensi Sumber Daya Manusia SDM Dalam Meningkatkan Kinerja Tenaga Kependidika PDFDocument13 pagesKompetensi Sumber Daya Manusia SDM Dalam Meningkatkan Kinerja Tenaga Kependidika PDFEka IdrisNo ratings yet

- Microeconomics: Production, Cost Minimisation, Profit MaximisationDocument19 pagesMicroeconomics: Production, Cost Minimisation, Profit Maximisationhishamsauk50% (2)

- Service Manual Lumenis Pulse 30HDocument99 pagesService Manual Lumenis Pulse 30HNodir AkhundjanovNo ratings yet

- MSA Chair's Report 2012Document56 pagesMSA Chair's Report 2012Imaad IsaacsNo ratings yet

- Question Paper: Hygiene, Health and SafetyDocument2 pagesQuestion Paper: Hygiene, Health and Safetywf4sr4rNo ratings yet

- Active Directory FactsDocument171 pagesActive Directory FactsVincent HiltonNo ratings yet

- Gis Tabels 2014 15Document24 pagesGis Tabels 2014 15seprwglNo ratings yet

- Interest Rates and Bond Valuation: All Rights ReservedDocument22 pagesInterest Rates and Bond Valuation: All Rights ReservedAnonymous f7wV1lQKRNo ratings yet

- CT018 3 1itcpDocument31 pagesCT018 3 1itcpraghav rajNo ratings yet

- COGELSA Food Industry Catalogue LDDocument9 pagesCOGELSA Food Industry Catalogue LDandriyanto.wisnuNo ratings yet

- Aman 5Document1 pageAman 5HamidNo ratings yet