Booth's Multiplication Algorithm

Uploaded by

Nikita verma

Booth’s Multiplication Algorithm

Booth's algorithm gives a procedure for multiplying binary integers in signed 2's complement representation efficiently, i.e., with fewer additions and subtractions. It relies on two facts: a string of 0's in the multiplier requires no addition, only shifting, and a string of 1's in the multiplier from bit weight 2^k down to weight 2^m can be treated as 2^(k+1) - 2^m. For example, the multiplier 001110 (+14) has a string of 1's from 2^3 to 2^1, so it can be written as 2^4 - 2^1 = 16 - 2 = 14.

As in all multiplication schemes, Booth's algorithm requires examination of the multiplier bits and shifting of the partial product. Prior to the shifting, the multiplicand may be added to the partial product, subtracted from the partial product, or left unchanged according to the following rules:

1. The multiplicand is subtracted from the partial product upon encountering the first least significant 1 in a string of 1's in the multiplier.

2. The multiplicand is added to the partial product upon encountering the first 0 (provided that there was a previous 1) in a string of 0's in the multiplier.

3. The partial product does not change when the multiplier bit is identical to the previous multiplier bit.
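The three rules amount to recoding each multiplier bit as a signed digit: -1 where a string of 1's starts, +1 just past its end, and 0 elsewhere. A minimal Python sketch of that recoding follows; the function name `booth_recode` and the least-significant-first digit order are choices made for this illustration, not part of the notes.

```python
def booth_recode(multiplier: int, n: int) -> list[int]:
    """Return the Booth digits (+1, 0, -1) of an n-bit two's-complement
    multiplier, least significant digit first (illustrative helper)."""
    digits = []
    prev = 0  # implied bit to the right of the least significant bit
    for i in range(n):
        bit = (multiplier >> i) & 1  # also works for negative Python ints
        # (bit, prev) = (1, 0) -> -1 (subtract), (0, 1) -> +1 (add), equal -> 0
        digits.append(prev - bit)
        prev = bit
    return digits


def recoded_value(digits: list[int]) -> int:
    """Weighted sum of the recoded digits; equals the original multiplier."""
    return sum(d * (1 << i) for i, d in enumerate(digits))
```

For the 7-bit multiplier 0011110 (30) the recoded digits contain a single -1 (weight 2^1) and a single +1 (weight 2^5), so two add/subtract operations replace the four additions that the four 1's would otherwise require.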

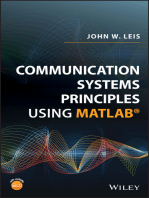

Hardware Implementation of Booth's Algorithm – The hardware implementation of Booth's algorithm requires the register configuration shown in the figure below.

Booth’s Algorithm Flowchart

SSIPMT RAIPUR | Computer System Architecture Notes

We name the registers A, B and Q as AC, BR and QR respectively. Qn designates the least significant bit of the multiplier in register QR. An extra flip-flop Qn+1 is appended to QR to facilitate a double inspection of the multiplier. The flowchart for Booth's algorithm is shown below.

AC and the appended bit Qn+1 are initially cleared to 0, and the sequence counter SC is set to a number n equal to the number of bits in the multiplier. The two bits of the multiplier in Qn and Qn+1 are inspected. If the two bits are equal to 10, the first 1 in a string of 1's has been encountered. This requires subtraction of the multiplicand from the partial product in AC. If the two bits are equal to 01, the first 0 in a string of 0's has been encountered. This requires the addition of the multiplicand to the partial product in AC.

When the two bits are equal, the partial product does not change. An overflow cannot occur because the addition and subtraction of the multiplicand follow each other. As a consequence, the two numbers that are added always have opposite signs, a condition that excludes an overflow. The next step is to shift right the partial product and the multiplier (including Qn+1). This is an arithmetic shift right (ashr) operation, which shifts AC and QR to the right and leaves the sign bit in AC unchanged. The sequence counter is decremented and the computational loop is repeated n times.
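The register-level procedure can be sketched in Python as follows. This is an illustrative simulation, not a hardware description: the function name `booth_multiply` is hypothetical, registers are modelled as n-bit integers, and the operands are assumed to fit in n bits of 2's complement (with the multiplicand not equal to the most negative value).

```python
def booth_multiply(multiplicand: int, multiplier: int, n: int) -> int:
    """Simulate Booth's algorithm on n-bit registers AC, BR, QR and Qn+1."""
    mask = (1 << n) - 1
    br = multiplicand & mask   # BR holds the multiplicand
    qr = multiplier & mask     # QR holds the multiplier
    ac = 0                     # AC is cleared
    q_extra = 0                # the appended flip-flop Qn+1

    for _ in range(n):         # SC counts down from n to 0
        qn = qr & 1
        if (qn, q_extra) == (1, 0):      # first 1 of a string: subtract
            ac = (ac - br) & mask
        elif (qn, q_extra) == (0, 1):    # first 0 after a string: add
            ac = (ac + br) & mask
        # Arithmetic shift right of AC, QR and Qn+1 as one unit
        q_extra = qn
        qr = (qr >> 1) | ((ac & 1) << (n - 1))
        sign = ac >> (n - 1)
        ac = (ac >> 1) | (sign << (n - 1))  # sign bit of AC is preserved

    product = (ac << n) | qr              # 2n-bit result in AC:QR
    if product >> (2 * n - 1):            # interpret as signed
        product -= 1 << (2 * n)
    return product
```

Calling `booth_multiply(-5, -7, 4)` walks through the same register states as a hand trace with AC = 0000 and QR = 1001 and returns 35.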

Example – A numerical example of Booth's algorithm is shown below for n = 4. It shows the step-by-step multiplication of -5 and -7.

MD = -5 = 1011, MD' + 1 = 0101

MR = -7 = 1001

The explanation of the first step is as follows:

AC = 0000, MR = 1001, Qn+1 = 0, SC = 4

Qn Qn+1 = 10

So we compute AC + MD' + 1, which gives AC = 0101.

On right shifting AC and MR, we get

AC = 0010, MR = 1100 and Qn+1 = 1

| OPERATION | AC | MR | Qn+1 | SC |
|---|---|---|---|---|
| Initial | 0000 | 1001 | 0 | 4 |
| AC + MD' + 1 | 0101 | 1001 | 0 | |
| ASHR | 0010 | 1100 | 1 | 3 |
| AC + MD | 1101 | 1100 | 1 | |
| ASHR | 1110 | 1110 | 0 | 2 |
| ASHR | 1111 | 0111 | 0 | 1 |
| AC + MD' + 1 | 0100 | 0111 | 0 | |
| ASHR | 0010 | 0011 | 1 | 0 |

The product is calculated as follows:

Product = AC MR

Product = 0010 0011 = 35


Example: Multiply the two numbers 7 and 3 by using Booth's multiplication algorithm.

Ans. Here we have two numbers, 7 and 3. First, we convert 7 and 3 into binary: 7 = (0111) and 3 = (0011). Set 7 (0111) as the multiplicand (M) and 3 (0011) as the multiplier (Q). SC (Sequence Count) is the number of bits; here we have 4 bits, so set SC = 4. SC also gives the number of iteration cycles of Booth's algorithm: each cycle ends with SC = SC - 1, and the algorithm stops when SC reaches 0.

M = (0111), M' + 1 = (1001)

| Qn Qn+1 | Operation | AC | Q | Qn+1 | SC |
|---|---|---|---|---|---|
| 1 0 | Initial | 0000 | 0011 | 0 | 4 |
| | Subtract M (add M' + 1 = 1001) | 1001 | 0011 | 0 | |
| | Arithmetic shift right (ashr) | 1100 | 1001 | 1 | 3 |
| 1 1 | Arithmetic shift right (ashr) | 1110 | 0100 | 1 | 2 |
| 0 1 | Addition (AC + M, add 0111) | 0101 | 0100 | 1 | |
| | Arithmetic shift right (ashr) | 0010 | 1010 | 0 | 1 |
| 0 0 | Arithmetic shift right (ashr) | 0001 | 0101 | 0 | 0 |

The result of this numerical example is 7 x 3 = 21, and the binary representation of 21 is 10101. Here, the 8-bit result held in AC and Q is 00010101. Converting it into decimal, (00010101)2 = 2^4 + 2^2 + 2^0 = 16 + 4 + 1 = 21.


Example: Multiply the two numbers 23 and -9 by using Booth's multiplication algorithm.

Here, M = 23 = (010111) and Q = -9 = (110111), so M' + 1 = 101001.

| Qn Qn+1 | Operation | AC | Q | Qn+1 | SC |
|---|---|---|---|---|---|
| | Initially | 000000 | 110111 | 0 | 6 |
| 1 0 | Subtract M (add 101001) | 101001 | 110111 | 0 | |
| | Arithmetic shift right | 110100 | 111011 | 1 | 5 |
| 1 1 | Arithmetic shift right | 111010 | 011101 | 1 | 4 |
| 1 1 | Arithmetic shift right | 111101 | 001110 | 1 | 3 |
| 0 1 | Addition (AC + M, add 010111) | 010100 | 001110 | 1 | |
| | Arithmetic shift right | 001010 | 000111 | 0 | 2 |
| 1 0 | Subtract M (add 101001) | 110011 | 000111 | 0 | |
| | Arithmetic shift right | 111001 | 100011 | 1 | 1 |
| 1 1 | Arithmetic shift right | 111100 | 110001 | 1 | 0 |

The most significant bit of AC is 1, which means the product is negative.

Hence, 23 x -9 = -(2's complement of 111100110001) = -(000011001111) = -207.


Bit pair recoding

Bit-pair recoding halves the maximum number of summands. Group the Booth-recoded multiplier bits in pairs and observe the following: the pair (+1 -1) is equivalent to the pair (0 +1). That is, instead of adding -1 times the multiplicand M at shift position i to +1 x M at position i+1, the same result is obtained by adding +1 x M at position i. E.g., for the multiplier 11010 the bit-pair recoded value is 0 -1 -2.

Bit-pair recoding of the multiplier results in using at most one summand for each pair of bits in the multiplier. It is derived directly from the Booth algorithm: grouping the Booth-recoded multiplier bits in pairs reduces the number of summands to at most n/2 for an n-bit multiplier.

Fast multiplication (bit-pair recoding of the multiplier) - This is derived from Booth's algorithm. It pairs the multiplier bits and gives one recoded multiplier digit per pair, thus reducing the number of summands by half. This is shown below.
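As a rough illustration of the pairing, the recoded digit for bits (i+1, i) can be computed directly from the original multiplier as -2·b(i+1) + b(i) + b(i-1), with an implied 0 to the right of the least significant bit. The Python sketch below uses that formula; the function names are hypothetical, n is assumed to be even, and the multiplier is assumed to be sign-extended to n bits.

```python
def bit_pair_recode(multiplier: int, n: int) -> list[int]:
    """Return bit-pair recoded digits in {-2, -1, 0, +1, +2},
    least significant pair first, for an n-bit multiplier (n even)."""
    if n % 2:
        raise ValueError("n must be even; sign-extend the multiplier first")

    def bit(i: int) -> int:
        # Bit i of the multiplier; the position right of the LSB is 0.
        return (multiplier >> i) & 1 if i >= 0 else 0

    return [-2 * bit(i + 1) + bit(i) + bit(i - 1) for i in range(0, n, 2)]


def bit_pair_multiply(multiplicand: int, multiplier: int, n: int) -> int:
    """Sum one summand per digit pair: digit * multiplicand * 4**k."""
    digits = bit_pair_recode(multiplier, n)
    return sum(d * multiplicand * (1 << (2 * k)) for k, d in enumerate(digits))
```

For the multiplier 11010 (-6, sign-extended to 6 bits) this yields the digits -2, -1, 0 from the least significant pair upward, which is the value 0 -1 -2 when written most significant pair first. Only three summands are needed for a 6-bit multiplier.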


Multiplication requiring only n/2 summands

Example: Multiply each of the following pairs of signed 2's-complement numbers using the Booth algorithm. In each case, assume that A is the multiplicand and B is the multiplier.

(a) A = 010111 and B = 110110

(b) A = 110011 and B = 101100

(a) Consider the binary numbers A = 010111 (+23) and B = 110110 (-10).

Multiply the signed 2's complement numbers using the bit-pair recoding of the multiplier.

Thus, the resultant value is 111100011010 (-230).

(b) Consider the binary numbers A = 110011 (-13) and B = 101100 (-20).

Multiply the signed 2's complement numbers using the bit-pair recoding of the multiplier.

Thus, the resultant value is 000100000100 (+260).


Restoring Division Algorithm for Unsigned Integer

A division algorithm provides a quotient and a remainder when we divide two numbers. Division algorithms are generally of two types: slow algorithms and fast algorithms. Slow division algorithms include restoring, non-restoring, non-performing restoring and SRT division; fast division algorithms include Newton-Raphson and Goldschmidt.

This section covers the restoring algorithm for unsigned integers. The term "restoring" comes from the fact that the value of register A is restored whenever a trial subtraction gives a negative result.

Here, register Q holds the quotient and register A holds the remainder. The n-bit dividend is loaded in Q and the divisor is loaded in M. Register A is initially set to 0; this is the register whose value is restored during the iterations, which gives the method its name.

The steps involved are:

Step-1: First the registers are initialized with the corresponding values (Q = Dividend, M = Divisor, A = 0, n = number of bits in the dividend).

Step-2: Then the contents of registers A and Q are shifted left as if they were a single unit.

Step-3: Then the content of register M is subtracted from A and the result is stored in A.

Step-4: Then the most significant bit of A is checked. If it is 0, the least significant bit of Q is set to 1. Otherwise, if it is 1, the least significant bit of Q is set to 0 and the value of register A is restored, i.e. set back to the value of A before the subtraction of M.

Step-5: The value of counter n is decremented.

Step-6: If the value of n becomes zero, we exit the loop; otherwise we repeat from Step-2.

Step-7: Finally, register Q contains the quotient and A contains the remainder.
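The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the function name `restoring_divide` is hypothetical, and A is held as a signed Python integer, so "most significant bit of A is 1" is tested as `a < 0` rather than on a fixed-width register.

```python
def restoring_divide(dividend: int, divisor: int, n: int) -> tuple[int, int]:
    """Restoring division of an n-bit unsigned dividend.
    Returns (quotient, remainder)."""
    if divisor == 0:
        raise ZeroDivisionError("divisor must be non-zero")
    mask = (1 << n) - 1
    a, q, m = 0, dividend & mask, divisor    # Step-1: initialise A, Q, M

    for _ in range(n):                       # Step-5/6: repeat n times
        # Step-2: shift AQ left as a single unit
        a = (a << 1) | ((q >> (n - 1)) & 1)
        q = (q << 1) & mask
        a -= m                               # Step-3: A = A - M
        if a < 0:                            # Step-4: sign bit of A is 1
            a += m                           # restore A, Q[0] stays 0
        else:
            q |= 1                           # Q[0] = 1
    return q, a                              # Step-7: quotient, remainder
```

For a dividend of 11 and a divisor of 3 with n = 4, the function returns (3, 2).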


Examples:

Perform Division Restoring Algorithm

Dividend = 11

Divisor = 3

| n | M | A | Q | Operation |
|---|---|---|---|---|
| 4 | 00011 | 00000 | 1011 | initialize |
| | 00011 | 00001 | 011_ | shift left AQ |
| | 00011 | 11110 | 011_ | A = A - M |
| | 00011 | 00001 | 0110 | Q[0] = 0 and restore A |
| 3 | 00011 | 00010 | 110_ | shift left AQ |
| | 00011 | 11111 | 110_ | A = A - M |
| | 00011 | 00010 | 1100 | Q[0] = 0 and restore A |
| 2 | 00011 | 00101 | 100_ | shift left AQ |
| | 00011 | 00010 | 100_ | A = A - M |
| | 00011 | 00010 | 1001 | Q[0] = 1 |
| 1 | 00011 | 00101 | 001_ | shift left AQ |
| | 00011 | 00010 | 001_ | A = A - M |
| | 00011 | 00010 | 0011 | Q[0] = 1 |

Remember to restore the value of A whenever the most significant bit of A is 1 after the subtraction. At the end, register Q contains the quotient, i.e. 3, and register A contains the remainder, 2.

Non-Restoring Division Algorithm for Unsigned Integer

Non-restoring division is less complex than restoring division because only simpler operations are involved, i.e. addition and subtraction, and no restoring step is performed. The method relies on the sign bit of register A, which initially contains zero.

The flowchart is given below.


The steps involved are:

Step-1: First the registers are initialized with the corresponding values (Q = Dividend, M = Divisor, A = 0, N = number of bits in the dividend).

Step-2: Check the sign bit of register A.

Step-3: If it is 1, shift left the content of AQ and perform A = A + M; otherwise shift left AQ and perform A = A - M (i.e. add the 2's complement of M to A and store the result in A).

Step-4: Check the sign bit of register A again.

Step-5: If the sign bit is 1, Q[0] becomes 0; otherwise Q[0] becomes 1 (Q[0] is the least significant bit of register Q).

Step-6: Decrement the value of N by 1.

Step-7: If N is not equal to zero, go to Step-2; otherwise go to the next step.

Step-8: If the sign bit of A is 1, perform A = A + M.

Step-9: Register Q contains the quotient and A contains the remainder.
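A minimal Python sketch of these steps is given below. As assumptions for illustration, the function name `nonrestoring_divide` is hypothetical and A is kept as a signed Python integer, so the sign-bit checks become `a < 0`; Python's shift and OR operators on negative integers behave like an unbounded 2's-complement register, which matches the left shift of AQ.

```python
def nonrestoring_divide(dividend: int, divisor: int, n: int) -> tuple[int, int]:
    """Non-restoring division of an n-bit unsigned dividend.
    Returns (quotient, remainder)."""
    if divisor == 0:
        raise ZeroDivisionError("divisor must be non-zero")
    mask = (1 << n) - 1
    a, q, m = 0, dividend & mask, divisor    # Step-1

    for _ in range(n):                       # Step-6/7: repeat N times
        a_was_negative = a < 0               # Step-2: sign bit of A
        # Step-3: shift AQ left, then add or subtract M
        a = (a << 1) | ((q >> (n - 1)) & 1)
        q = (q << 1) & mask
        a = a + m if a_was_negative else a - m
        if a >= 0:                           # Step-4/5: set Q[0]
            q |= 1
    if a < 0:                                # Step-8: final correction
        a += m
    return q, a                              # Step-9
```

For a dividend of 11 and a divisor of 3 with n = 4, the intermediate values of A are -2, -1, 2, 2 (11110, 11111, 00010, 00010 as 5-bit registers) and the result is (3, 2).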

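Examples: Perform Non-Restoring Division for Unsigned Integer

Dividend = 11

Divisor = 3

-M = 11101

| N | M | A | Q | Action |
|---|---|---|---|---|
| 4 | 00011 | 00000 | 1011 | Start |
| | | 00001 | 011_ | Left shift AQ |
| | | 11110 | 011_ | A = A - M |
| 3 | | 11110 | 0110 | Q[0] = 0 |
| | | 11100 | 110_ | Left shift AQ |
| | | 11111 | 110_ | A = A + M |
| 2 | | 11111 | 1100 | Q[0] = 0 |
| | | 11111 | 100_ | Left shift AQ |
| | | 00010 | 100_ | A = A + M |
| 1 | | 00010 | 1001 | Q[0] = 1 |
| | | 00101 | 001_ | Left shift AQ |
| | | 00010 | 001_ | A = A - M |
| 0 | | 00010 | 0011 | Q[0] = 1 |

Quotient = 3 (Q), Remainder = 2 (A)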


IEEE Standard 754 Floating Point Numbers

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point computation which was established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and reduced their portability. IEEE Standard 754 floating point is the most common representation today for real numbers on computers, including Intel-based PCs, Macs, and most Unix platforms.

There are several ways to represent floating-point numbers, but IEEE 754 is the most widely used. IEEE 754 has 3 basic components:

1. The Sign of Mantissa –

This is as simple as the name. 0 represents a positive number while 1 represents a negative number.

2. The Biased Exponent –

The exponent field needs to represent both positive and negative exponents. A bias is added to the actual exponent in order to get the stored exponent.

3. The Normalized Mantissa –

The mantissa is the part of a number in scientific notation, or of a floating-point number, that consists of its significant digits. In binary we have only 2 digits, i.e. 0 and 1, so a normalized mantissa is one with exactly one 1 to the left of the binary point.

IEEE 754 numbers are divided into two formats based on the sizes of the above three components: single precision and double precision.


| TYPES | SIGN | BIASED EXPONENT | NORMALISED MANTISSA | BIAS |
|---|---|---|---|---|
| Single precision | 1 (bit 31) | 8 (bits 30-23) | 23 (bits 22-0) | 127 |
| Double precision | 1 (bit 63) | 11 (bits 62-52) | 52 (bits 51-0) | 1023 |

Example –

85.125

85 = 1010101

0.125 = 0.001 (binary)

85.125 = 1010101.001 = 1.010101001 x 2^6

sign = 0

1. Single precision:

biased exponent = 127 + 6 = 133

133 = 10000101

Normalised mantissa = 010101001

We add 0's on the right to complete the 23 bits.

The IEEE 754 single precision representation is:

0 10000101 01010100100000000000000

This can be written in hexadecimal form as 42AA4000.

2. Double precision:

biased exponent = 1023 + 6 = 1029

1029 = 10000000101

Normalised mantissa = 010101001

We add 0's on the right to complete the 52 bits.

The IEEE 754 double precision representation is:

0 10000000101 0101010010000000000000000000000000000000000000000000

This can be written in hexadecimal form as 4055480000000000.
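The encoding of 85.125 can be checked with Python's standard `struct` module, which packs a float into its IEEE 754 byte pattern. The helper names `float_to_hex` and `split_single` below are hypothetical and only serve this illustration.

```python
import struct


def float_to_hex(value: float, double: bool = False) -> str:
    """Return the big-endian IEEE 754 bit pattern of value as hex digits."""
    fmt = ">d" if double else ">f"   # 'd' = binary64, 'f' = binary32
    return struct.pack(fmt, value).hex().upper()


def split_single(value: float) -> tuple[int, int, int]:
    """Split a single-precision value into (sign, biased exponent, fraction)."""
    bits = int.from_bytes(struct.pack(">f", value), "big")
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF   # 8-bit biased exponent
    fraction = bits & 0x7FFFFF       # 23-bit stored mantissa
    return sign, exponent, fraction
```

Here `float_to_hex(85.125)` gives `42AA4000` and `float_to_hex(85.125, double=True)` gives `4055480000000000`, while `split_single(85.125)` returns a sign of 0, a biased exponent of 133 and the 23-bit fraction 01010100100000000000000.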

FLOATING POINT ADDITION AND SUBTRACTION

FLOATING POINT ADDITION

To understand floating-point addition, we first look at the addition of real numbers in decimal, as the same logic applies in both cases.

For example, we have to add 1.1 * 10^3 and 50.

We cannot add these numbers directly. First, we need to align the exponents, and then we can add the significands.

After aligning the exponents, we get 50 = 0.05 * 10^3

Now adding the significands, 0.05 + 1.1 = 1.15

So, finally we get (1.1 * 10^3 + 50) = 1.15 * 10^3

Here, notice that we shifted 50 and made it 0.05 to add these numbers.

Now let us take an example of floating-point number addition.

We follow these steps to add two numbers:


1. Align the significand

2. Add the significands

3. Normalize the result

Let the two numbers be

x = 9.75

y = 0.5625

Converting them into 32-bit floating-point representation:

9.75 in 32-bit format = 0 10000010 00111000000000000000000

0.5625 in 32-bit format = 0 01111110 00100000000000000000000

Now we take the difference of the exponents to know how much shifting is required.

(10000010 - 01111110)2 = (4)10

Now, we shift the mantissa of the smaller number to the right by 4 places.

Mantissa of 0.5625 = 1.00100000000000000000000

(note that the 1 before the binary point is implicit in the 32-bit representation)

Shifting right by 4 places, we get 0.00010010000000000000000

Mantissa of 9.75 = 1.00111000000000000000000

Adding the two mantissas:

0.00010010000000000000000

+ 1.00111000000000000000000

= 1.01001010000000000000000

In the final answer, we take the exponent of the bigger number.

So, the final answer consists of:

Sign bit = 0

Exponent of bigger number = 10000010

Mantissa = 01001010000000000000000

32-bit representation of the answer = x + y = 0 10000010 01001010000000000000000
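The align, add and normalize steps can be sketched on the raw bit fields in Python. This is a simplified illustration, not a full IEEE 754 adder: the function names are hypothetical, both operands are assumed to be positive normalized single-precision values, and bits shifted out during alignment are truncated rather than rounded.

```python
import struct


def fields(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, 23-bit fraction) of a binary32 value."""
    bits = int.from_bytes(struct.pack(">f", x), "big")
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF


def add_positive(x: float, y: float) -> float:
    """Add two positive normalized binary32 values field by field."""
    _, ex, fx = fields(x)
    _, ey, fy = fields(y)
    sx = (1 << 23) | fx              # restore the implicit leading 1
    sy = (1 << 23) | fy
    if ex < ey:                      # make x the operand with the larger exponent
        ex, ey, sx, sy = ey, ex, sy, sx
    sy >>= ex - ey                   # 1. align: shift the smaller significand right
    total = sx + sy                  # 2. add the significands
    if total >> 24:                  # 3. normalize if the sum reached 2.0 or more
        total >>= 1
        ex += 1
    bits = (ex << 23) | (total & 0x7FFFFF)   # sign bit is 0
    return struct.unpack(">f", bits.to_bytes(4, "big"))[0]
```

With x = 9.75 and y = 0.5625 the exponent difference is 4, the aligned sum of the significands is 1.0100101 in binary, and the function returns 10.3125.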


FLOATING POINT SUBTRACTION

Subtraction is similar to addition, with some differences: we subtract the mantissas instead of adding them, and the sign bit of the result is the sign of the number with the greater magnitude.

Let the two numbers be

x = 9.75

y = -0.5625

Converting them into 32-bit floating-point representation:

9.75 in 32-bit format = 0 10000010 00111000000000000000000

-0.5625 in 32-bit format = 1 01111110 00100000000000000000000

Now, we find the difference of the exponents to know how much shifting is required.

(10000010 - 01111110)2 = (4)10

Now, we shift the mantissa of the smaller number to the right by 4 places.

Mantissa of -0.5625 = 1.00100000000000000000000

(note that the 1 before the binary point is implicit in the 32-bit representation)

Shifting right by 4 places, we get 0.00010010000000000000000

Mantissa of 9.75 = 1.00111000000000000000000

Subtracting the smaller mantissa from the larger one:

1.00111000000000000000000

- 0.00010010000000000000000

= 1.00100110000000000000000

Sign bit of the bigger number = 0

So, finally the answer = x + y = 9.75 - 0.5625 = 0 10000010 00100110000000000000000 (9.1875)


Multiplication and Division

Multiplication and division are simple because the mantissas and exponents can be processed independently. FP multiplication requires fixed-point multiplication of the mantissas and fixed-point addition of the exponents. As discussed in chapter 3 (Data representation), the exponents are stored in biased form. The bias is +127 for IEEE single precision and +1023 for double precision. During multiplication, when the two biased exponents are added, the bias is included twice (the sum is in excess 254 for single precision). Hence the bias is adjusted by subtracting 127 or 1023 from the resulting exponent.

Floating-point division requires fixed-point division of the mantissas and fixed-point subtraction of the exponents. Subtracting the biased exponents cancels the bias, so the adjustment is done by adding 127 (or 1023) to the resulting exponent. Normalization of the result is necessary in both multiplication and division. Thus FP multiplication and division are not very complicated to implement.

All the examples are in base 10 (decimal) to enhance understanding. Doing them in binary is similar.
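The bias adjustment for multiplication can be illustrated in binary with a short Python sketch on single-precision fields. It is a simplified model under stated assumptions: the function names are hypothetical, both operands are positive normalized values, the result is assumed to stay within the normalized range, and low-order product bits are truncated rather than rounded.

```python
import struct

BIAS = 127  # single-precision exponent bias


def fields(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, 23-bit fraction) of a binary32 value."""
    bits = int.from_bytes(struct.pack(">f", x), "big")
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF


def multiply_positive(x: float, y: float) -> float:
    """Multiply two positive normalized binary32 values field by field."""
    _, ex, fx = fields(x)
    _, ey, fy = fields(y)
    exponent = ex + ey - BIAS                       # remove the doubled bias
    product = ((1 << 23) | fx) * ((1 << 23) | fy)   # 48-bit significand product
    if product >> 47:                               # product >= 2.0: normalize
        product >>= 1
        exponent += 1
    fraction = (product >> 23) & 0x7FFFFF           # drop the implicit 1, truncate
    bits = (exponent << 23) | fraction
    return struct.unpack(">f", bits.to_bytes(4, "big"))[0]
```

For 9.75 x 0.5625 the stored exponents are 130 and 126; their sum 256 carries the bias twice, so subtracting 127 gives the stored exponent 129 (true exponent 2), and the function returns 5.484375. For division the corresponding exponent step would be `ex - ey + BIAS`.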


Carry-Lookahead Adder

In a parallel adder, all the bits of the augend and the addend must be available at the same time to perform the computation. In a parallel adder circuit, the carry output of each full adder stage is connected to the carry input of the next higher-order stage, hence it is also called a ripple-carry adder.

In such adder circuits, it is not possible to produce the sum and carry outputs of any stage until the input carry occurs. So there is a considerable time delay in the addition process, which is known as carry propagation delay. In any combinational circuit, signals must propagate through the gates before the correct output sum is available at the output terminals.

Consider the above figure, in which the sum S4 is produced by the corresponding full adder as soon as the input signals are applied to it. But the carry input C4 does not reach its final steady-state value until carry C3 is available at its steady-state value. Similarly, C3 depends on C2 and C2 on C1. Therefore, the carry must propagate through all the stages before output S4 and carry C5 settle to their final steady-state values.

The propagation time is equal to the propagation delay of a typical gate times the number of gate levels in the circuit. For example, if each full adder stage has a propagation delay of 20 ns, then S4 will reach its final correct value after 80 ns (20 × 4). If we extend the number of stages to add more bits, the situation becomes much worse.

So the speed at which numbers can be added in a parallel adder depends on the carry propagation time, because the signals must be given enough time to propagate through the gates to produce the correct output.

The following are the methods to speed up binary addition in a parallel adder:

1. By employing faster gates with reduced delays, we can reduce the propagation delay. But there is a capability limit for every physical logic gate.

2. Another way is to increase the circuit complexity in order to reduce the carry delay time. There are several methods available for speeding up the parallel adder; one commonly used method employs the principle of carry-lookahead addition, which eliminates the inter-stage carry logic.

Carry-Lookahead Adder

A carry-lookahead adder is a fast parallel adder: it reduces the propagation delay by using more complex hardware, hence it is costlier. In this design, the carry logic over fixed groups of bits of the adder is reduced to two-level logic, which is nothing but a transformation of the ripple-carry design.

This method uses logic gates to look at the lower-order bits of the augend and addend to see whether a higher-order carry is to be generated or not. Let us discuss this in detail.

Consider the full adder circuit shown above with the corresponding truth table. If we define two variables, carry generate Gi and carry propagate Pi, then

Pi = Ai ⊕ Bi

Gi = Ai Bi

The sum output and carry output can be expressed as

Si = Pi ⊕ Ci

Ci+1 = Gi + Pi Ci

where Gi is the carry generate, which produces a carry when both Ai and Bi are 1 regardless of the input carry, and Pi is the carry propagate, which is associated with the propagation of the carry from Ci to Ci+1.


The carry output Boolean function of each stage in a 4-stage carry-lookahead adder can be expressed as

C1 = G0 + P0 Cin

C2 = G1 + P1 C1

= G1 + P1 G0 + P1 P0 Cin

C3 = G2 + P2 C2

= G2 + P2 G1 + P2 P1 G0 + P2 P1 P0 Cin

C4 = G3 + P3 C3

= G3 + P3 G2 + P3 P2 G1 + P3 P2 P1 G0 + P3 P2 P1 P0 Cin

From the above Boolean equations we can observe that C4 does not have to wait for C3 and C2 to propagate; in fact, C4 is produced at the same time as C3 and C2. Since the Boolean expression for each carry output is a sum of products, each can be implemented with one level of AND gates followed by an OR gate.
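The expanded carry equations can be checked with a small bit-level model in Python. This is a sketch for illustration only (the function name `carry_lookahead_add4` is hypothetical); each carry is computed directly from the P, G and Cin signals, without using the previous carry.

```python
def carry_lookahead_add4(a: int, b: int, cin: int = 0) -> tuple[int, int]:
    """Add two 4-bit numbers using the expanded carry-lookahead equations.
    Returns (4-bit sum, carry out C4)."""
    A = [(a >> i) & 1 for i in range(4)]
    B = [(b >> i) & 1 for i in range(4)]
    p = [x ^ y for x, y in zip(A, B)]   # Pi = Ai XOR Bi
    g = [x & y for x, y in zip(A, B)]   # Gi = Ai AND Bi

    # Every carry is a two-level sum of products of P, G and Cin.
    c1 = g[0] | (p[0] & cin)
    c2 = g[1] | (p[1] & g[0]) | (p[1] & p[0] & cin)
    c3 = g[2] | (p[2] & g[1]) | (p[2] & p[1] & g[0]) | (p[2] & p[1] & p[0] & cin)
    c4 = (g[3] | (p[3] & g[2]) | (p[3] & p[2] & g[1])
          | (p[3] & p[2] & p[1] & g[0]) | (p[3] & p[2] & p[1] & p[0] & cin))

    carries = [cin, c1, c2, c3]
    total = sum((p[i] ^ carries[i]) << i for i in range(4))  # Si = Pi XOR Ci
    return total, c4
```

For example, `carry_lookahead_add4(0b1011, 0b0110)` returns the sum 0001 with a carry out of 1, i.e. 11 + 6 = 17.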

The implementation of the three Boolean functions for the carry outputs (C2, C3 and C4) of a carry-lookahead carry generator is shown in the figure below.


Therefore, a 4-bit parallel adder can be implemented with the carry-lookahead scheme to increase the speed of binary addition, as shown in the figure below. In this, two Ex-OR gates are required by each sum output. The first Ex-OR gate generates the Pi variable output and the AND gate generates the Gi variable.

Hence, in two gate levels all these P's and G's are generated. The carry-lookahead generator allows all these P and G signals to propagate after they settle into their steady-state values and produces the output carries at a delay of two levels of gates. Therefore, the sum outputs S2 to S4 have equal propagation delay times.

It is also possible to construct 16-bit and 32-bit parallel adders by cascading a number of 4-bit adders with carry logic. A 16-bit carry-lookahead adder is constructed by cascading four 4-bit adders with two more gate delays, whereas the 32-bit carry-lookahead adder is formed by cascading two 16-bit adders. In a 16-bit carry-lookahead adder, 5 and 8 gate delays are required to get C16 and S15 respectively, which is less than the 9 and 10 gate delays for C16 and S15 respectively in cascaded 4-bit carry-lookahead adder blocks. Similarly, in a 32-bit adder, 7 and 10 gate delays are required by C32 and S31, which is less than the 18 and 17 gate delays for the same outputs if the 32-bit adder is implemented by eight 4-bit adders.
