Machine Learning Practicals for Government Engineering College

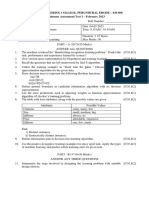

Government Engineering College, Modasa

B.E. – Computer Engineering (Semester – VII)

3170724 – Machine Learning

LIST OF PRACTICALS

# Description

1 Given the following vectors:

A = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

B = [4, 8, 12, 16, 20, 24, 28, 32, 36, 40]

C = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]

Ex. 1: Find the arithmetic mean of vectors A, B and C

Ex. 2: Find the variance of vectors A, B and C

Ex. 3: Find the Euclidean distance between vectors A and B

Ex. 4: Find the correlation between vectors A & B and A & C
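
The four quantities in Practical 1 can be checked with a few lines of plain Python. This is only a minimal sketch: the helper names (`mean`, `variance`, `euclidean`, `correlation`) are illustrative, A is read as the integers 1 to 10, and since the handout does not say whether the population or the sample variance is wanted, the `ddof` argument (an assumption) covers both.

```python
import math

A = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
B = [4, 8, 12, 16, 20, 24, 28, 32, 36, 40]
C = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]

def mean(v):
    """Arithmetic mean of a list of numbers."""
    return sum(v) / len(v)

def variance(v, ddof=0):
    """Variance; ddof=0 is the population form, ddof=1 the sample form."""
    m = mean(v)
    return sum((x - m) ** 2 for x in v) / (len(v) - ddof)

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def correlation(u, v):
    """Pearson correlation coefficient of two equal-length vectors."""
    mu, mv = mean(u), mean(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)

for name, v in (("A", A), ("B", B), ("C", C)):
    print(name, "mean:", mean(v), "variance:", variance(v))
print("d(A, B):", euclidean(A, B))
print("corr(A, B):", correlation(A, B), "corr(A, C):", correlation(A, C))
```

Because B = 4A and C = 11 − A, the correlations should come out as +1 and −1 respectively, which is a quick sanity check on any implementation.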

2 Load the breast cancer dataset and perform classification using Euclidean distance. Use 70% of the data for training and 30% for testing.
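
One way to read "classification using Euclidean distance" is the nearest-neighbour rule: a test sample gets the label of the closest training sample. The sketch below assumes that reading. The function names are illustrative, and the tiny two-cluster dataset is hypothetical, standing in for the breast cancer data (which could be loaded, for example, with scikit-learn's `load_breast_cancer`).

```python
import math
import random

def split_70_30(samples, labels, seed=0):
    """Shuffle the data and return (train, test) lists of (x, y) pairs."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    cut = (7 * len(idx)) // 10          # first 70% of the shuffled indices
    pairs = [(samples[i], labels[i]) for i in idx]
    return pairs[:cut], pairs[cut:]

def predict_1nn(train, x):
    """Label of the training sample closest to x in Euclidean distance."""
    nearest = min(train, key=lambda pair: math.dist(pair[0], x))
    return nearest[1]

def accuracy(train, test):
    """Fraction of test samples whose predicted label is correct."""
    hits = sum(predict_1nn(train, x) == y for x, y in test)
    return hits / len(test)

# Hypothetical toy data: two well-separated groups in 2-D.
X = [(0.0, 0.1 * i) for i in range(10)] + [(5.0, 0.1 * i) for i in range(10)]
y = [0] * 10 + [1] * 10

train, test = split_70_30(X, y)
print("train:", len(train), "test:", len(test), "accuracy:", accuracy(train, test))
```

On real data the features usually need scaling before distances are compared, since Euclidean distance is dominated by the attributes with the largest numeric range.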

3 Repeat the above experiment with 10-fold cross-validation and find the standard deviation of the accuracy.
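
The fold bookkeeping for Practical 3 can be sketched as follows. This is an illustration under assumptions: the classifier is again taken to be the nearest-neighbour rule, the data are a hypothetical toy set, and the population standard deviation (`statistics.pstdev`) is used because the handout does not specify which form is expected.

```python
import math
import statistics

def predict_1nn(train, x):
    """Label of the training sample closest to x in Euclidean distance."""
    return min(train, key=lambda pair: math.dist(pair[0], x))[1]

def k_fold_accuracies(data, k=10):
    """Accuracy of the 1-NN rule on each of k folds; data is a list of (x, y)."""
    scores = []
    for fold in range(k):
        # Fold `fold` holds every k-th sample starting at index `fold`.
        test = [p for i, p in enumerate(data) if i % k == fold]
        train = [p for i, p in enumerate(data) if i % k != fold]
        hits = sum(predict_1nn(train, x) == y for x, y in test)
        scores.append(hits / len(test))
    return scores

# Hypothetical toy data: class 0 near x = 0, class 1 near x = 5.
# Real data should be shuffled before the folds are formed.
data = [((float(i % 2) * 5.0, 0.1 * i), i % 2) for i in range(40)]

scores = k_fold_accuracies(data)
print("mean accuracy:", statistics.mean(scores))
print("std of accuracy:", statistics.pstdev(scores))
```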

4 Repeat experiment 2 and build the confusion matrix. Also derive the precision, recall, and specificity of the algorithm.
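
For a two-class problem the three measures follow directly from the four confusion-matrix counts: precision = TP / (TP + FP), recall = TP / (TP + FN), specificity = TN / (TN + FP). A small sketch, with hypothetical label lists and illustrative function names:

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN) for a binary problem."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

def metrics(tp, fp, tn, fn):
    """Precision, recall and specificity from the confusion-matrix counts."""
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Hypothetical true and predicted labels (1 = positive class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

counts = confusion_counts(y_true, y_pred)
result = metrics(*counts)
print("TP, FP, TN, FN =", counts)
print(result)
```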

5 Predict the class for X = <Sunny, Cool, High, Strong> using the Naïve Bayes classifier for the given data:

$$P(C \mid X) = \frac{P(X \mid C)\, P(C)}{P(X)}$$

| # | Outlook | Temp. | Humidity | Windy | Play |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | False | No |
| D2 | Sunny | Hot | High | True | No |
| D3 | Overcast | Hot | High | False | Yes |
| D4 | Rainy | Mild | High | False | Yes |
| D5 | Rainy | Cool | Normal | False | Yes |
| D6 | Rainy | Cool | Normal | True | No |
| D7 | Overcast | Cool | Normal | True | Yes |
| D8 | Sunny | Mild | High | False | No |
| D9 | Sunny | Cool | Normal | False | Yes |
| D10 | Rainy | Mild | Normal | False | Yes |
| D11 | Sunny | Mild | Normal | True | Yes |
| D12 | Overcast | Mild | High | True | Yes |
| D13 | Overcast | Hot | Normal | False | Yes |
| D14 | Rainy | Mild | High | True | No |

Ans: Label = NO
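
The stated answer can be reproduced by counting. The sketch below compares the numerators P(X | C) · P(C) for the two classes, since P(X) is common to both. Two assumptions are made here: the query value "Strong" is taken to mean Windy = True, and no smoothing is applied to the frequency estimates. The function name is illustrative.

```python
from collections import Counter

# Rows D1..D14 copied from the table: (Outlook, Temp., Humidity, Windy, Play).
DATA = [
    ("Sunny", "Hot", "High", False, "No"),
    ("Sunny", "Hot", "High", True, "No"),
    ("Overcast", "Hot", "High", False, "Yes"),
    ("Rainy", "Mild", "High", False, "Yes"),
    ("Rainy", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Cool", "Normal", True, "No"),
    ("Overcast", "Cool", "Normal", True, "Yes"),
    ("Sunny", "Mild", "High", False, "No"),
    ("Sunny", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Mild", "Normal", False, "Yes"),
    ("Sunny", "Mild", "Normal", True, "Yes"),
    ("Overcast", "Mild", "High", True, "Yes"),
    ("Overcast", "Hot", "Normal", False, "Yes"),
    ("Rainy", "Mild", "High", True, "No"),
]

def naive_bayes_scores(data, x):
    """Unnormalised posteriors P(X | C) * P(C) for every class C."""
    class_counts = Counter(row[-1] for row in data)
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / len(data)                       # prior P(C)
        for j, value in enumerate(x):
            match = sum(1 for row in data if row[-1] == c and row[j] == value)
            score *= match / n_c                      # likelihood P(x_j | C)
        scores[c] = score
    return scores

# Assumption: "Strong" wind corresponds to Windy = True.
x = ("Sunny", "Cool", "High", True)
scores = naive_bayes_scores(DATA, x)
label = max(scores, key=scores.get)
print(scores, "->", label)
```

With these counts the score for "No" is (5/14)(3/5)(1/5)(4/5)(3/5) ≈ 0.0206 and the score for "Yes" is (9/14)(2/9)(3/9)(3/9)(3/9) ≈ 0.0053, so the predicted label is No.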

6 For the data given in Exercise 5, find the splitting attribute at the first level:

Information Gain: $I(P, N) = -\frac{P}{S}\log_2\frac{P}{S} - \frac{N}{S}\log_2\frac{N}{S} = 0.940$

Entropy: $E(\text{Outlook}) = \sum_{i=1}^{v} \frac{P_i + N_i}{P + N}\, I(P_i, N_i) = 0.694$

$\text{Gain}(\text{Outlook}) = I(P, N) - E(\text{Outlook}) = 0.246$

Ans:

| Attribute | Gain |
|---|---|
| Outlook | 0.246 |
| Temperature | 0.029 |
| Humidity | 0.151 |
| Windy | 0.048 |
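
The gains can be recomputed from the Exercise 5 table as a check. In this sketch S is taken to be P + N (the total number of examples), and the function names `info` and `gain` are illustrative. Computed at full precision, the values agree with the tabulated ones up to rounding in the last digit (for example 0.2467 for Outlook).

```python
import math
from collections import Counter

ATTRS = ["Outlook", "Temperature", "Humidity", "Windy"]

# Rows D1..D14 from Exercise 5: (Outlook, Temp., Humidity, Windy, Play).
DATA = [
    ("Sunny", "Hot", "High", False, "No"),
    ("Sunny", "Hot", "High", True, "No"),
    ("Overcast", "Hot", "High", False, "Yes"),
    ("Rainy", "Mild", "High", False, "Yes"),
    ("Rainy", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Cool", "Normal", True, "No"),
    ("Overcast", "Cool", "Normal", True, "Yes"),
    ("Sunny", "Mild", "High", False, "No"),
    ("Sunny", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Mild", "Normal", False, "Yes"),
    ("Sunny", "Mild", "Normal", True, "Yes"),
    ("Overcast", "Mild", "High", True, "Yes"),
    ("Overcast", "Hot", "Normal", False, "Yes"),
    ("Rainy", "Mild", "High", True, "No"),
]

def info(labels):
    """I(P, N): entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def gain(data, j):
    """Information gain obtained by splitting on attribute column j."""
    labels = [row[-1] for row in data]
    expected = 0.0                                    # E(attribute)
    for value in set(row[j] for row in data):
        subset = [row[-1] for row in data if row[j] == value]
        expected += len(subset) / len(data) * info(subset)
    return info(labels) - expected

gains = {name: gain(DATA, j) for j, name in enumerate(ATTRS)}
for name, g in gains.items():
    print(f"{name:12s} {g:.3f}")
print("split on:", max(gains, key=gains.get))
```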

7 Generate and test a decision tree for the dataset in Exercise 5.

8 Find the clusters for the following data with k = 2. Start with points 1 and 4 as two separate clusters.

| i | A | B |
|---|---|---|
| 1 | 1.0 | 1.0 |
| 2 | 1.5 | 2.0 |
| 3 | 3.0 | 4.0 |
| 4 | 5.0 | 7.0 |
| 5 | 3.5 | 5.0 |
| 6 | 4.5 | 5.0 |
| 7 | 3.5 | 4.5 |

Ans:

| Cluster | Points |
|---|---|
| C1 | 1, 2 |
| C2 | 3, 4, 5, 6, 7 |
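
The clustering can be traced with a short batch k-means loop. This is a sketch under assumptions: the handout does not say whether centroids are updated after every point or once per pass, so the version below uses the batch (Lloyd) variant, and the function names are illustrative. It does not handle a cluster becoming empty, which does not happen for this data.

```python
import math

POINTS = [(1.0, 1.0), (1.5, 2.0), (3.0, 4.0), (5.0, 7.0),
          (3.5, 5.0), (4.5, 5.0), (3.5, 4.5)]

def nearest(p, centroids):
    """Index of the centroid closest to point p (first one wins on a tie)."""
    return min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))

def mean_point(members):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coord) / len(members) for coord in zip(*members))

def kmeans(points, centroids, max_iter=100):
    """Batch k-means; returns (cluster index per point, final centroids)."""
    assign = None
    for _ in range(max_iter):
        new_assign = [nearest(p, centroids) for p in points]
        if new_assign == assign:          # assignments stable: converged
            break
        assign = new_assign
        centroids = [
            mean_point([p for p, a in zip(points, assign) if a == c])
            for c in range(len(centroids))
        ]
    return assign, centroids

# Start with points 1 and 4 as the initial centroids.
assign, centroids = kmeans(POINTS, [POINTS[0], POINTS[3]])
for c in range(2):
    members = [i + 1 for i, a in enumerate(assign) if a == c]
    print(f"C{c + 1}: points {members}, centroid {centroids[c]}")
```

Point 3 is equidistant from the two initial centroids, so the first pass depends on the tie-break, but the loop settles on the same final clusters as the answer above either way.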


9 Find the following statistics for the data given in Exercise 1:

Within Class Scatter: $S_W = \sum_{i=1}^{C} \sum_{x \in w_i} (x - m_i)(x - m_i)^T$

Between Class Scatter: $S_B = \sum_{i=1}^{C} n_i (m_i - m)(m_i - m)^T$

Total Scatter: $S_T = \sum_{i=1}^{M} (x_i - m)(x_i - m)^T$
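
The handout does not say how the Exercise 1 vectors map onto classes. The sketch below makes one possible assumption, stated loudly: A, B and C are treated as three classes of ten one-dimensional samples each, so every scatter "matrix" reduces to a single number. Under any such grouping the identity S_T = S_W + S_B should hold, which makes a convenient check.

```python
A = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
B = [4, 8, 12, 16, 20, 24, 28, 32, 36, 40]
C = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]

# Assumption: each vector is one class of scalar samples.
classes = {"A": A, "B": B, "C": C}

def mean(v):
    """Arithmetic mean of a list of numbers."""
    return sum(v) / len(v)

all_x = [x for v in classes.values() for x in v]
m = mean(all_x)                                   # overall mean

# Within-class scatter: squared deviations from each class mean m_i.
S_W = sum((x - mean(v)) ** 2 for v in classes.values() for x in v)

# Between-class scatter: class size n_i times squared distance of m_i from m.
S_B = sum(len(v) * (mean(v) - m) ** 2 for v in classes.values())

# Total scatter: squared deviations of every sample from the overall mean.
S_T = sum((x - m) ** 2 for x in all_x)

print("S_W =", S_W, "S_B =", S_B, "S_T =", S_T)
```

For multi-dimensional samples the squares become outer products (x − m)(x − m)^T, but the structure of the three sums is unchanged.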

10 Given the following vectors:

X = [340, 230, 405, 325, 280, 195, 265, 300, 350, 310]; %sale

Y = [71, 65, 83, 74, 67, 56, 57, 78, 84, 65];

Ex. 1: Find the Linear Regression model for independent variable X and dependent variable Y.

Ex. 2: Predict the value of y for x = 250. Also find the residual for y4.
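
A least-squares line y = b0 + b1·x can be fitted with the closed-form formulas b1 = Sxy / Sxx and b0 = ȳ − b1·x̄. The sketch below assumes ordinary least squares is the intended model, takes the residual as observed minus predicted, and reads y4 as the fourth observation; the function name `fit_line` is illustrative.

```python
X = [340, 230, 405, 325, 280, 195, 265, 300, 350, 310]
Y = [71, 65, 83, 74, 67, 56, 57, 78, 84, 65]

def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1

b0, b1 = fit_line(X, Y)
y_250 = b0 + b1 * 250                      # prediction for x = 250
residual_4 = Y[3] - (b0 + b1 * X[3])       # y4 is the 4th observation (index 3)

print(f"y = {b0:.4f} + {b1:.4f} x")
print(f"prediction at x = 250: {y_250:.4f}")
print(f"residual for y4: {residual_4:.4f}")
```

For this data the means are x̄ = 300 and ȳ = 70, with Sxy = 4490 and Sxx = 33400, giving a slope of about 0.1344 and an intercept of about 29.67.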

Additional Tasks:

Mini Project in a group of max. 3 students

MACHINE LEARNING | DR. MAHESH GOYANI
