
Proceedings of the World Congress on Engineering 2009 Vol II

WCE 2009, July 1 - 3, 2009, London, U.K.

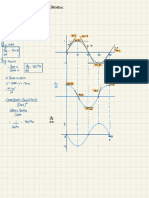

An Algorithm For Minimization Of A Nondifferentiable Convex Function

Nada DJURANOVIC-MILICIC¹, Milanka GARDASEVIC-FILIPOVIC²

Manuscript received February 27, 2009. This work was supported by Science Fund of Serbia, grant number 144015G, through Institute of Mathematics, SANU.

¹ Nada DJURANOVIC-MILICIC is with Faculty of Technology and Metallurgy, University of Belgrade, Serbia (corresponding author; phone: +381-11-33-03-865; e-mail: nmilicic@elab.tmf.bg.ac.rs).

² Milanka GARDASEVIC-FILIPOVIC is with Faculty of Architecture, University of Belgrade, Serbia; e-mail: milanka@arh.bg.ac.rs.

Abstract— In this paper an algorithm for minimization of a nondifferentiable function is presented. The algorithm uses the Moreau-Yoshida regularization of the objective function and its second order Dini upper directional derivative. It is proved that the algorithm is well defined, and that the sequence of points generated by the algorithm converges to an optimal point. An estimate of the rate of convergence is given, too.

Index Terms— Moreau-Yoshida regularization, non-smooth convex optimization, second order Dini upper directional derivative.

I. INTRODUCTION

The following minimization problem is considered:

$$\min_{x \in \mathbb{R}^n} f(x) \tag{1}$$

where $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is a convex and not necessarily differentiable function with a nonempty set $X^*$ of minima.

For nonsmooth programs, many approaches have been presented so far and they are often restricted to the convex unconstrained case. In general, the various approaches are based on combinations of the following methods: subgradient methods, bundle techniques and the Moreau-Yoshida regularization.

An important property of the Moreau-Yoshida regularization of a function $f$ is that it is a new function which has the same set of minima as $f$ and is differentiable with a Lipschitz continuous gradient, even when $f$ is not differentiable. In [10], [11] and [19] the second order properties of the Moreau-Yoshida regularization of a given function $f$ are considered.

Having in mind that the Moreau-Yoshida regularization of a proper closed convex function is an $LC^1$ function, we present an optimization algorithm (using the second order Dini upper directional derivative, described in [1] and [2]) based on the results from [3]. That is the main idea of this paper.

We shall present an iterative algorithm for finding an optimal solution of problem (1) by generating the sequence of points $\{x_k\}$ of the following form:

$$x_{k+1} = x_k + \alpha_k d_k, \quad k = 0, 1, \ldots, \quad d_k \neq 0 \tag{2}$$

where the step-size $\alpha_k$ and the directional vector $d_k$ are defined by the particular algorithms.

The paper is organized as follows: in the second section some basic theoretical preliminaries are given; in the third section the Moreau-Yoshida regularization and its properties are described; in the fourth section the definition of the second order Dini upper directional derivative and its basic properties are given; in the fifth section the semi-smooth functions and conditions for their minimization are described. Finally, in the sixth section a model algorithm is suggested, its convergence is proved, and an estimate of its rate of convergence is given.

II. THEORETICAL PRELIMINARIES

Throughout the paper we will use the following notation. A vector $s$ refers to a column vector, and $\nabla$ denotes the gradient operator $\left(\frac{\partial}{\partial x_1}, \frac{\partial}{\partial x_2}, \ldots, \frac{\partial}{\partial x_n}\right)^T$. The Euclidean product is denoted by $\langle \cdot, \cdot \rangle$ and $\|\cdot\|$ is the associated norm; $B(x, \rho)$ is the ball centred at $x$ with radius $\rho$. For a given symmetric positive definite linear operator $M$ we set $\langle \cdot, \cdot \rangle_M := \langle M \cdot, \cdot \rangle$ and, for short, $\|x\|_M^2 := \langle x, x \rangle_M$. The smallest and the largest eigenvalue of $M$ we denote by $\lambda$ and $\Lambda$ respectively.

The domain of a given function $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is the set $\mathrm{dom}(f) = \{x \in \mathbb{R}^n \mid f(x) < +\infty\}$. We say that $f$ is proper if its domain is nonempty.

The point $x^* = \arg\min_{x \in \mathbb{R}^n} f(x)$ refers to the minimum point of a given function $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$.

The epigraph of a given function $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is the set $\mathrm{epi}\, f = \{(\alpha, x) \in \mathbb{R} \times \mathbb{R}^n \mid \alpha \geq f(x)\}$. The concept of the epigraph gives us a possibility to define convexity and closure of a function in a new way. We say that $f$ is convex if its epigraph is a convex set, and $f$ is closed if its epigraph is a closed set.

In this section we will give the definitions and statements necessary in this work.
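The weighted inner product $\langle \cdot, \cdot \rangle_M$ and the eigenvalues $\lambda$, $\Lambda$ introduced in the notation of Section II can be illustrated numerically. The sketch below is not part of the original paper: the matrix `M`, the vector `x` and the helper names `m_inner` and `m_norm_sq` are assumptions chosen for illustration. It checks the standard bound $\lambda \|x\|^2 \leq \|x\|_M^2 \leq \Lambda \|x\|^2$, which holds for any symmetric positive definite $M$.

```python
import numpy as np

def m_inner(M, x, y):
    """<x, y>_M := <M x, y> for a symmetric positive definite M."""
    return float((M @ x) @ y)

def m_norm_sq(M, x):
    """||x||_M^2 := <x, x>_M."""
    return m_inner(M, x, x)

# Hypothetical 2x2 symmetric positive definite operator (illustration only).
M = np.array([[2.0, 0.5],
              [0.5, 1.0]])

# eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
eigenvalues = np.linalg.eigvalsh(M)
lam, Lam = eigenvalues[0], eigenvalues[-1]   # smallest and largest eigenvalue

x = np.array([1.0, -2.0])
euclid_sq = float(x @ x)                     # Euclidean ||x||^2

# Rayleigh-quotient bound: lam * ||x||^2 <= ||x||_M^2 <= Lam * ||x||^2
assert lam * euclid_sq <= m_norm_sq(M, x) <= Lam * euclid_sq
```

With $M$ equal to the identity matrix, both helpers reduce to the ordinary Euclidean product and norm.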

ISBN:978-988-18210-1-0 WCE 2009


Definition 1. A vector $g \in \mathbb{R}^n$ is said to be a subgradient of a given proper convex function $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ at a point $x \in \mathbb{R}^n$ if the inequality

$$f(z) \geq f(x) + g^T (z - x) \tag{3}$$

holds for all $z \in \mathbb{R}^n$. The set of all subgradients of $f$ at the point $x$, called the subdifferential at the point $x$, is denoted by $\partial f(x)$. The subdifferential $\partial f(x)$ is a nonempty set if and only if $x \in \mathrm{dom}(f)$.

For a convex function $f$ it follows that $f(x) = \max_{z \in \mathbb{R}^n} \{ f(z) + g^T (x - z) \}$ holds, where $g \in \partial f(z)$ (see [4]).

The concept of the subgradient is a simple generalization of the gradient for nondifferentiable convex functions.

Lemma 1. Let $f : S \to \mathbb{R} \cup \{+\infty\}$ be a convex function defined on a convex set $S \subseteq \mathbb{R}^n$, and $x' \in \mathrm{int}\, S$. Let $\{x_k\}$ be a sequence such that $x_k \to x'$, where $x_k = x' + \varepsilon_k s_k$, $\varepsilon_k > 0$, $\varepsilon_k \to 0$ and $s_k \to s$, and $g_k \in \partial f(x_k)$. Then all accumulation points of the sequence $\{g_k\}$ lie in the set $\partial f(x')$.

Proof. See [7] or [6].

Definition 2. The directional derivative of a real function $f$ defined on $\mathbb{R}^n$ at the point $x' \in \mathbb{R}^n$ in the direction $s \in \mathbb{R}^n$, denoted by $f'(x', s)$, is

$$f'(x', s) = \lim_{t \downarrow 0} \frac{f(x' + t s) - f(x')}{t} \tag{4}$$

when this limit exists.

Hence, it follows that if the function $f$ is convex and $x' \in \mathrm{dom}\, f$, then

$$f(x' + t s) = f(x') + t f'(x', s) + o(t) \tag{5}$$

holds, which can be considered as one linearization of the function $f$ (see [5]).

Lemma 2. Let $f : S \to \mathbb{R} \cup \{+\infty\}$ be a convex function defined on a convex set $S \subseteq \mathbb{R}^n$, and $x' \in \mathrm{int}\, S$. If the sequence $x_k \to x'$, where $x_k = x' + \varepsilon_k s_k$, $\varepsilon_k > 0$, $\varepsilon_k \to 0$ and $s_k \to s$, then the formula

$$f'(x', s) = \lim_{k \to \infty} \frac{f(x_k) - f(x')}{\varepsilon_k} = \max_{g \in \partial f(x')} s^T g \tag{6}$$

holds.

Proof. See [6] or [14].

Lemma 3. Let $f : S \to \mathbb{R} \cup \{+\infty\}$ be a convex function defined on a convex set $S \subseteq \mathbb{R}^n$. Then $\partial f(x)$ is bounded for all $x \in B \subset \mathrm{int}\, S$, where $B$ is a compact set.

Proof. See [7] or [9].

Proposition 1. Let $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ be a proper convex function. The condition

$$0 \in \partial f(x) \tag{7}$$

is a first order necessary and sufficient condition for a global minimizer at $x \in \mathbb{R}^n$. This can be stated alternatively as:

$$\forall s \in \mathbb{R}^n, \ \|s\| = 1: \quad \max_{g \in \partial f(x)} s^T g \geq 0. \tag{8}$$

Proof. See [13].

Lemma 4. If a proper convex function $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is differentiable at a point $x \in \mathrm{dom}(f)$, then:

$$\partial f(x) = \{\nabla f(x)\}. \tag{9}$$

Proof. The statement follows directly from Definition 2.

Definition 3. The real function $f$ defined on $\mathbb{R}^n$ is an $LC^1$ function on the open set $D \subseteq \mathbb{R}^n$ if it is continuously differentiable and its gradient $\nabla f$ is locally Lipschitz, i.e.

$$\|\nabla f(x) - \nabla f(y)\| \leq L \|x - y\| \quad \text{for } x, y \in D \tag{10}$$

for some $L > 0$.

III. THE MOREAU-YOSHIDA REGULARIZATION

Definition 4. Let $f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ be a proper closed convex function. The Moreau-Yoshida regularization of a given function $f$, associated to the metric defined by $M$, denoted by $F$, is defined as follows:

$$F(x) := \min_{y \in \mathbb{R}^n} \left\{ f(y) + \frac{1}{2} \|y - x\|_M^2 \right\} \tag{11}$$

The above function is an infimal convolution. In [15] it is proved that the infimal convolution of a convex function is also a convex function. Hence the function defined by (11) is a convex function and has the same set of minima as the function $f$ (see [5]), so the motivation for the study of the Moreau-Yoshida regularization is the fact that $\min_{x \in \mathbb{R}^n} f(x)$ is equal to $\min_{x \in \mathbb{R}^n} F(x)$.

Definition 5. The minimum point $p(x)$ of the function (11):

$$p(x) := \operatorname*{argmin}_{y \in \mathbb{R}^n} \left\{ f(y) + \frac{1}{2} \|y - x\|_M^2 \right\} \tag{12}$$

is called the proximal point of $x$.

Proposition 2. The function $F$ defined by (11) is always differentiable.

Proof. See [5].
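Definitions 4 and 5 can be made concrete on a one-dimensional example. The sketch below is an illustration under stated assumptions, not the authors' code: it takes $f(y) = |y|$ on $\mathbb{R}$ with the scalar metric $M = m > 0$, for which the proximal point (12) has the well-known closed form of soft-thresholding, $p(x) = \mathrm{sign}(x)\max(|x| - 1/m, 0)$. The function names and the brute-force grid check are hypothetical helpers. The code compares the closed form of (11) with a direct minimization over a fine grid, and then checks by finite differences that $F$ has matching one-sided slopes at $x = 0$, where $f$ itself has a kink.

```python
import numpy as np

def prox_abs(x, m=1.0):
    """Proximal point p(x) of f(y) = |y| for the scalar metric M = m > 0."""
    return float(np.sign(x) * max(abs(x) - 1.0 / m, 0.0))

def moreau_yoshida_abs(x, m=1.0):
    """F(x) from (11), evaluated at the minimizer p(x) from (12)."""
    p = prox_abs(x, m)
    return abs(p) + 0.5 * m * (p - x) ** 2

def brute_force_envelope(x, m=1.0):
    """Direct minimization of |y| + (m/2)(y - x)^2 over a fine grid (check only)."""
    ys = np.linspace(-5.0, 5.0, 200001)
    return float(np.min(np.abs(ys) + 0.5 * m * (ys - x) ** 2))

# The closed form agrees with the grid minimization at several sample points.
for x in (-2.0, -0.3, 0.0, 0.7, 3.0):
    assert abs(moreau_yoshida_abs(x) - brute_force_envelope(x)) < 1e-6

# f(y) = |y| has one-sided slopes -1 and +1 at 0; the slopes of F both vanish.
h = 1e-4
slope_right = (moreau_yoshida_abs(h) - moreau_yoshida_abs(0.0)) / h
slope_left = (moreau_yoshida_abs(0.0) - moreau_yoshida_abs(-h)) / h
assert abs(slope_right) < 1e-3 and abs(slope_left) < 1e-3

# f and F share the minimizer x = 0 and the minimal value 0.
assert moreau_yoshida_abs(0.0) == 0.0 and prox_abs(0.0) == 0.0
```

For this choice of $f$, the regularization $F$ is the Huber function: quadratic for $|x| \leq 1/m$ and equal to $|x| - 1/(2m)$ outside that interval.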

The first order regularity of F is well known (see in [5] and


[10]): without any further assumptions, $F$ has a Lipschitzian gradient on the whole space $\mathbb{R}^n$. More precisely, for all $x_1, x_2 \in \mathbb{R}^n$ the formula

$$\|\nabla F(x_1) - \nabla F(x_2)\|^2 \leq \Lambda \langle \nabla F(x_1) - \nabla F(x_2), x_1 - x_2 \rangle \tag{13}$$

holds (see [10]), where $\nabla F(x)$ has the following form:

$$G := \nabla F(x) = M(x - p(x)) \in \partial f(p(x)) \tag{14}$$

and $p(x)$ is the unique minimum in (11). So, according to the above consideration and Definition 3, we conclude that $F$ is an $LC^1$ function (see [11]).

Note that the function $f$ has a nonempty subdifferential at any point $p$ of the form $p(x)$. Since $p(x)$ is the minimum point of the function (11), then (see [5] and [10]):

$$p(x) = x - M^{-1} g \quad \text{where } g \in \partial f(p(x)). \tag{15}$$

In [10] it is also proved that for all $x_1, x_2 \in \mathbb{R}^n$ the formula

$$\|p(x_1) - p(x_2)\|_M^2 \leq \langle M(x_1 - x_2), p(x_1) - p(x_2) \rangle \tag{16}$$

is valid, namely the mapping $x \to p(x)$, where $p(x)$ is defined by (12), is Lipschitzian with constant $\Lambda / \lambda$ (see Proposition 2.3 in [10]).

Lemma 5. The following statements are equivalent:

(i) $x$ minimizes $f$;

(ii) $p(x) = x$;

(iii) $\nabla F(x) = 0$;

(iv) $x$ minimizes $F$;

(v) $f(p(x)) = f(x)$;

(vi) $F(x) = f(x)$.

Proof. See [5] or [19].

IV. DINI SECOND UPPER DIRECTIONAL DERIVATIVE

We shall give some preliminaries that will be used in the remainder of the paper.

Definition 6. [18] The second order Dini upper directional derivative of the function $f \in LC^1$ at the point $x \in \mathbb{R}^n$ in the direction $d \in \mathbb{R}^n$ is defined to be

$$f_D''(x, d) = \limsup_{\alpha \downarrow 0} \frac{[\nabla f(x + \alpha d) - \nabla f(x)]^T d}{\alpha}.$$

If $\nabla f$ is directionally differentiable at $x_k$, we have

$$f_D''(x_k, d) = f''(x_k, d) = \lim_{\alpha \downarrow 0} \frac{[\nabla f(x_k + \alpha d) - \nabla f(x_k)]^T d}{\alpha}$$

for all $d \in \mathbb{R}^n$.

Since the Moreau-Yoshida regularization of a proper closed convex function $f$ is an $LC^1$ function, we can consider its second order Dini upper directional derivative at the point $x \in \mathbb{R}^n$ in the direction $d \in \mathbb{R}^n$, i.e.:

$$F_D''(x, d) = \limsup_{\alpha \downarrow 0} \left\langle \frac{g_1 - g_2}{\alpha}, d \right\rangle, \quad g_1 \in \partial f(p(x + \alpha d)), \ g_2 \in \partial f(p(x))$$

where $F(x)$ is defined by (11).

Lemma 6. Let $f : \mathbb{R}^n \to \mathbb{R}$ be a closed convex proper function and let $F$ be its Moreau-Yoshida regularization in the sense of Definition 4. Then the following statements are valid.

(i) $F_D''(x_k, kd) = k^2 F_D''(x_k, d)$

(ii) $F_D''(x_k, d_1 + d_2) \leq 2\left(F_D''(x_k, d_1) + F_D''(x_k, d_2)\right)$

(iii) $|F_D''(x_k, d)| \leq K \|d\|^2$, where $K$ is some constant.

Proof. See [18] and [2].

Lemma 7. Let $f : \mathbb{R}^n \to \mathbb{R}$ be a closed convex proper function and let $F$ be its Moreau-Yoshida regularization. Then the following statements are valid.

(i) $F_D''(x, d)$ is upper semicontinuous with respect to $(x, d)$, i.e. if $x_i \to x$ and $d_i \to d$, then $\limsup_{i \to \infty} F_D''(x_i, d_i) \leq F_D''(x, d)$

(ii) $F_D''(x, d) = \max \{ d^T V d \mid V \in \partial^2 F(x) \}$

Proof. See [18] and [2].

V. SEMI-SMOOTH FUNCTIONS AND OPTIMALITY CONDITIONS

Definition 7. A function $\nabla F : \mathbb{R}^n \to \mathbb{R}^n$ is said to be semi-smooth at the point $x \in \mathbb{R}^n$ if $\nabla F$ is locally Lipschitzian at $x \in \mathbb{R}^n$ and the limit $\lim_{h \to d,\ \lambda \downarrow 0} \{Vh\}$, $V \in \partial^2 F(x + \lambda h)$, exists for any $d \in \mathbb{R}^n$.

Note that for a closed convex proper function, the gradient of its Moreau-Yoshida regularization is a semi-smooth function.

Lemma 8. [18] If $\nabla F : \mathbb{R}^n \to \mathbb{R}^n$ is semi-smooth at the point $x \in \mathbb{R}^n$, then $\nabla F$ is directionally differentiable at $x \in \mathbb{R}^n$ and for any $V \in \partial^2 F(x + h)$, $h \to 0$, we have $Vh - (\nabla F)'(x, h) = o(\|h\|)$. Similarly we have that $h^T V h - F''(x, h) = o(\|h\|^2)$.

Lemma 9. Let $f : \mathbb{R}^n \to \mathbb{R}$ be a proper closed convex function and let $F$ be its Moreau-Yoshida regularization. If $x \in \mathbb{R}^n$ is a solution of the problem (1), then $F'(x, d) = 0$ and $F_D''(x, d) \geq 0$ for all $d \in \mathbb{R}^n$.

Proof. From the definition of the directional derivative and by Lemma 5 we have that $F'(x, d) = \nabla F(x)^T d = 0$. Since $x \in \mathbb{R}^n$ is a solution of the problem (1), then according


to Lemma 5, Theorem 23.1 in [15] and the fact that the inequalities

$$F'(x + td, d) \geq \frac{1}{t}\left(F(x + td) - F(x)\right) \geq 0$$

hold, we have

$$F_D''(x, d) = \limsup_{t \downarrow 0} \frac{F'(x + td, d) - F'(x, d)}{t} \geq 0.$$

■

Lemma 10. Let $f : \mathbb{R}^n \to \mathbb{R}$ be a proper closed convex function, $F$ its Moreau-Yoshida regularization, and $x$ a point from $\mathbb{R}^n$. If $F'(x, d) = 0$ and $F_D''(x, d) > 0$ for all $d \in \mathbb{R}^n$, then $x \in \mathbb{R}^n$ is a strict local minimizer of the problem (1).

Proof. Suppose that $x \in \mathbb{R}^n$ is not a strict minimum of the function $f$. According to Lemma 5 that means that $x \in \mathbb{R}^n$ is not a strict minimum of the function $F$, nor a proximal point of the function $F$. Then there exists a sequence $\{x_k\}$, $x_k \to x$, such that $F(x_k) \leq F(x)$ holds for every $k$. If we define the sequence $\{x_k\}$, $x_k \to x$, by $x_k = x + t_k d$, where $t_k = \frac{\|x_k - x\|}{\|d\|}$, then by Lemma 8 and Lemma 6 it follows that

$$F(x_k) - F(x) - t_k \nabla F(x)^T d = \frac{1}{2} t_k^2 F_D''(x, d) + o\left(\|d\|^2\right)$$

holds. Since $\nabla F(x) = 0$ (from the assumption of Lemma 10) it follows that $\frac{1}{2} t_k^2 F_D''(x, d) \leq 0$, which contradicts the assumption. ■

VI. A MODEL ALGORITHM

In this section an algorithm for solving the problem (1) is introduced. We suppose that at each $x \in \mathbb{R}^n$ it is possible to compute $f(x)$, $F(x)$, $\nabla F(x)$ and $F_D''(x, d)$ for a given $d \in \mathbb{R}^n$.

At the $k$-th iteration we consider the following problem

$$\min_{d \in \mathbb{R}^n} \Phi_k(d), \quad \Phi_k(d) = \nabla F(x_k)^T d + \frac{1}{2} F_D''(x_k, d) \tag{17}$$

where $F_D''(x_k, d)$ stands for the second order Dini upper directional derivative at $x_k$ in the direction $d$. Note that if $\Lambda$ is a Lipschitzian constant for $F$ it is also a Lipschitzian constant for $\nabla F$. The function $\Phi_k(d)$ is called an iteration function. It is easy to see that $\Phi_k(0) = 0$ and $\Phi_k(d)$ is Lipschitzian on $\mathbb{R}^n$. We generate the sequence $\{x_k\}$ of the form $x_{k+1} = x_k + \alpha_k d_k$, where the direction vector $d_k$ is a solution of the problem (17), and the step-size $\alpha_k$ is a number satisfying $\alpha_k = q^{i(k)}$, $0 < q < 1$, where $i(k)$ is the smallest integer from $\{0, 1, 2, \ldots\}$ such that

$$F\left(x_k + q^{i(k)} d_k\right) - F(x_k) \leq -\frac{1}{2} q^{i(k)} \sigma\left(F_D''(x_k, d_k)\right) \tag{18}$$

where $\sigma : [0, +\infty) \to [0, +\infty)$ is a continuous function satisfying $\delta_1 t \leq \sigma(t) \leq \delta_2 t$ and $0 < \delta_1 < \delta_2 < 1$.

We suppose that

$$c_1 \|d\|^2 \leq F_D''(x_k, d) \leq c_2 \|d\|^2 \tag{19}$$

holds for some $c_1$ and $c_2$ such that $0 < c_1 < c_2$.

Lemma 11. Under the assumption (19) the function $\Phi_k(\cdot)$ is coercive.

Proof. From the assumption there exists $K > 0$ ($0 < c_1 \leq K \leq c_2$) such that $F_D''(x, d) = \max_{V \in \partial^2 F(x)} d^T V d = K \|d\|^2$. Since $\nabla F$ is locally Lipschitzian we have that $\left|\nabla F(x_k)^T d\right| \leq \Lambda \|d\|$ holds (see [17]), therefore $\left|\nabla F(x_k)^T d + \frac{1}{2} \cdot 0\right| \leq \Lambda \|d\|$ holds and hence we have that

$$\left|\nabla F(x_k)^T d + \frac{1}{2} F_D''(x, d) - \frac{1}{2} K \|d\|^2\right| \leq \Lambda \|d\|.$$

Hence, we have:

$$-\Lambda \|d\| + \frac{1}{2} K \|d\|^2 \leq \nabla F(x_k)^T d + \frac{1}{2} F_D''(x, d) \leq \Lambda \|d\| + \frac{1}{2} K \|d\|^2$$

and

$$-\Lambda + \frac{1}{2} K \|d\| \leq \frac{\Phi_k(d)}{\|d\|} \leq \Lambda + \frac{1}{2} K \|d\|.$$

This establishes coercivity of the function $\Phi_k$. ■

Remark. Coercivity of the function $\Phi_k$ assures that the optimal solution of problem (17) exists (see [18]). It also means that, under the assumption (19), the direction sequence $\{d_k\}$ is a bounded sequence on $\mathbb{R}^n$ (the proof is analogous to the proof in [18]).

Proposition 3. If the Moreau-Yoshida regularization $F(\cdot)$ of the proper closed convex function $f(\cdot)$ satisfies the condition (19), then:

(i) the function $F(\cdot)$ is uniformly and, hence, strictly convex;

(ii) the level set $L(x_0) = \{x \in \mathbb{R}^n : F(x) \leq F(x_0)\}$ is a compact convex set, and

(iii) there exists a unique point $x^*$ such that $F(x^*) = \min_{x \in L(x_0)} F(x)$.

Proof. (i) From the assumption (19) and the mean value theorem it follows that for all $x \in L(x_0)$ ($x \neq x_0$) there exists $\theta \in (0, 1)$ such that:

$$F(x) - F(x_0) = \nabla F(x_0)^T (x - x_0) + \frac{1}{2} F_D''\left(x_0 + \theta(x - x_0), x - x_0\right) \geq \nabla F(x_0)^T (x - x_0) + \frac{1}{2} c_1 \|x - x_0\|^2 > \nabla F(x_0)^T (x - x_0)$$


that is, $F(\cdot)$ is uniformly and consequently strictly convex on $L(x_0)$.

(ii) From [16] it follows that the level set $L(x_0)$ is bounded. The set $L(x_0)$ is closed and convex because the function $F(\cdot)$ is continuous and convex. Therefore the set $L(x_0)$ is a compact convex set.

(iii) The existence of $x^*$ follows from the continuity of the function $f(\cdot)$, and therefore of $F(\cdot)$, on the bounded set $L(x_0)$. From the definition of the level set it follows that:

$$F(x^*) = \min_{x \in L(x_0)} F(x) = \min_{x \in D} F(x)$$

Since $F(\cdot)$ is strictly convex it follows from [15] that $x^*$ is a unique minimizer. ■

Lemma 12. The following statements are equivalent:

(i) $d = 0$ is a globally optimal solution of the problem (17);

(ii) 0 is the optimum of the objective function in (17);

(iii) the corresponding $x_k$ is such that $0 \in \partial f(x_k)$.

Proof. (i) ⇒ (ii) is obvious.

(ii) ⇒ (iii): Let 0 be a global optimum value of (17); then for any $\lambda > 0$ and $d \in \mathbb{R}^n$:

$$0 = \Phi_k(0) \leq \Phi_k(\lambda d) = \lambda \nabla F(x_k)^T d + \frac{1}{2} F_D''(x_k, \lambda d) = \lambda \nabla F(x_k)^T d + \frac{1}{2} \lambda^2 F_D''(x_k, d)$$

where the last equality holds by Lemma 6. Hence, dividing both sides by $\lambda$ and letting $\lambda \downarrow 0$, we have that $\nabla F(x_k)^T d \geq 0$ holds for any $d \in \mathbb{R}^n$, and consequently it follows that $x_k$ is a stationary point of the function $F$, i.e. $\nabla F(x_k) = 0$. Hence, by Lemma 5 it follows that $x_k$ is a minimum point of the function $f$.

(iii) ⇒ (i): Let $x_k$ be a point such that $0 \in \partial f(x_k)$. Then by (14), Lemma 5 and Lemma 9 it follows that

$$\nabla F(x_k)^T d \geq 0 \tag{20}$$

for any $d \in \mathbb{R}^n$. Suppose that $d_k \neq 0$ is the optimal solution of the problem (17). From the property of the iterative function $\Phi_k$ it follows that:

$$\nabla F(x_k)^T d_k \leq -\frac{1}{2} F_D''(x_k, d_k) \tag{21}$$

Hence, (21) implies:

$$\nabla F(x_k)^T d_k < 0. \tag{22}$$

The above two inequalities (20) and (22) are contradictory. ■

Now we shall prove that there exists a finite $i(k)$, i.e., since $d_k$ is defined by (17), that the algorithm is well-defined.

Proposition 4. If $d_k \neq 0$ is a solution of (17), then for any continuous function $\sigma : [0, +\infty) \to [0, +\infty)$ satisfying $\delta_1 t \leq \sigma(t) \leq \delta_2 t$ (where $0 < \delta_1 < \delta_2 < 1$) there exists a finite $i^*(k)$ such that for all $q^{i(k)} \in \left(0, q^{i^*(k)}\right)$

$$F\left(x_k + q^{i(k)} d_k\right) - F(x_k) \leq -\frac{1}{2} q^{i(k)} \sigma\left(F_D''(x_k, d_k)\right)$$

holds.

Proof. According to Lemma 9.3 from [8] and from the definition of $d_k$ it follows that for $x_{k+1} = x_k + t d_k$, $t > 0$, we have

$$F(x_{k+1}) - F(x_k) \leq t \nabla F(x_k)^T d_k + \frac{\Lambda}{2} t^2 \|d_k\|^2 \leq -\frac{1}{2} t F_D''(x_k, d_k) + \frac{\Lambda}{2} t^2 \|d_k\|^2 \leq -\frac{1}{2\delta_2} t\, \sigma\left(F_D''(x_k, d_k)\right) + \frac{\Lambda}{2} t^2 \|d_k\|^2 \tag{23}$$

If we choose $t = \frac{\sigma\left(F_D''(x_k, d_k)\right)}{\Lambda \|d_k\|^2}$ and put it in (23), we get

$$F(x_{k+1}) - F(x_k) \leq \frac{1}{2} \cdot \frac{\delta_2 - 1}{\delta_2} \cdot \frac{\sigma^2\left(F_D''(x_k, d_k)\right)}{\Lambda \|d_k\|^2} = -\frac{K}{2} t\, \sigma\left(F_D''(x_k, d_k)\right)$$

where $\frac{\delta_2 - 1}{\delta_2} = -K < 0$. Taking $q^{i^*(k)} = [Kt]_q$, i.e. $i^*(k) = \log_q [Kt]_q$, we have that the claim of the theorem holds for all $q^{i(k)} \in \left(0, q^{i^*(k)}\right)$. ■

Convergence theorem. Suppose that $f$ is a proper closed convex function and that its Moreau-Yoshida regularization $F$ satisfies (19). Then for any initial point $x_0 \in \mathbb{R}^n$, $x_k \to x_\infty$ as $k \to +\infty$, where $x_\infty$ is a unique minimal point of the function $f$.

Proof. If $d_k \neq 0$ is a solution of (17), it follows that $\Phi_k(d_k) \leq 0 = \Phi_k(0)$. Consequently, we have by the condition (19) that

$$\nabla F(x_k)^T d_k \leq -\frac{1}{2} F_D''(x_k, d_k) \leq -\frac{1}{2} c_1 \|d_k\|^2 < 0$$

From the above inequality it follows that the vector $d_k$ is a descent direction at $x_k$, i.e. from the relations (18) and (19) we get

$$F(x_{k+1}) - F(x_k) = F\left(x_k + q^{i(k)} d_k\right) - F(x_k) \leq -\frac{1}{2} q^{i(k)} \sigma\left(F_D''(x_k, d_k)\right) \leq -\frac{1}{2} q^{i(k)} \delta_1 F_D''(x_k, d_k) \leq -\frac{1}{2} q^{i(k)} \delta_1 c_1 \|d_k\|^2$$

for every $d_k \neq 0$. Hence the sequence $\{F(x_k)\}$ has the descent property, and, consequently, the sequence $\{x_k\} \subset L(x_0)$. Since $L(x_0)$ is by Proposition 3 a compact convex set, it follows that the sequence $\{x_k\}$ is


bounded. Therefore there exist accumulation points of the sequence $\{x_k\}$.

Since $\nabla F$ is continuous, then, if $\nabla F(x_k) \to 0$, $k \to +\infty$, it follows that every accumulation point $x_\infty$ of the sequence $\{x_k\}$ satisfies $\nabla F(x_\infty) = 0$. Since $F$ is (by Proposition 3) strictly convex, there exists a unique point $x_\infty \in L(x_0)$ such that $\nabla F(x_\infty) = 0$. Hence, the sequence $\{x_k\}$ has a unique limit point $x_\infty$ and it is a global minimizer of $F$ and, by Lemma 5, a global minimizer of the function $f$.

Therefore we have to prove that $\nabla F(x_k) \to 0$, $k \to +\infty$. Let $K_1$ be a set of indices such that $\lim_{k \in K_1} x_k = x_\infty$. Then there are two cases to consider:

a) The set of indices $\{i(k)\}$ for $k \in K_1$ is uniformly bounded above by a number $I$. Because of the descent property, it follows that all points of accumulation have the same function value and

$$F(x_\infty) - F(x_0) = \sum_{k=0}^{+\infty} \left[F(x_{k+1}) - F(x_k)\right] \leq \sum_{k \in K_1} \left[F(x_{k+1}) - F(x_k)\right] \leq -\frac{1}{2} \sum_{k \in K_1} q^{i(k)} \sigma\left(F_D''(x_k, d_k)\right) \leq -\frac{1}{2} q^I \delta_1 \sum_{k \in K_1} F_D''(x_k, d_k) \leq -\frac{1}{2} q^I \delta_1 c_1 \sum_{k \in K_1} \|d_k\|^2$$

Since $F(x_\infty)$ is finite, it follows that $d_k \to 0$, $k \to \infty$, $k \in K_1$. By Lemma 12 it follows that $d_\infty = 0$ is a globally optimal point of the problem (17) and that the corresponding accumulation point $x_\infty$ is a stationary point of the objective function $F$, i.e. $\nabla F(x_\infty) = 0$. From Lemma 5 and Lemma 12 it follows that $x_\infty$ is a unique optimal point of the function $f$.

b) There is a subset $K_2 \subset K_1$ such that $\lim_{k \to \infty} i(k) = +\infty$. By the definition of $i(k)$, we have for $k \in K_2$ that

$$F\left(x_k + q^{i(k)-1} d_k\right) - F(x_k) > -\frac{1}{2} q^{i(k)-1} \sigma\left(F_D''(x_k, d_k)\right) \tag{24}$$

Suppose that $x_\infty$ is an arbitrary accumulation point of $\{x_k\}$, but not a stationary point of $F$, i.e. of $f$. Then, from Lemma 12 it follows that the corresponding direction vector $d_\infty \neq 0$. Now, dividing both sides in the expression (24) by $q^{i(k)-1}$ and using $\lim_{k \to \infty} q^{i(k)-1} = 0$, $k \in K_2$, we get

$$\nabla F(x_\infty)^T d_\infty > -\frac{1}{2} \sigma\left(F_D''(x_\infty, d_\infty)\right) > -\frac{1}{2} \delta_2 F_D''(x_\infty, d_\infty) > -\frac{1}{2} F_D''(x_\infty, d_\infty)$$

But, from the property of the iterative function in (17), we have $\nabla F(x_\infty)^T d_\infty \leq -\frac{1}{2} F_D''(x_\infty, d_\infty)$. Therefore, we get a contradiction. ■

Convergence rate theorem. Under the assumptions of the previous theorem the following estimate holds for the sequence $\{x_k\}$ generated by the algorithm:

$$F(x_n) - F(x_\infty) \leq \mu_0 \left[1 + \mu_0 \frac{1}{\eta^2} \sum_{k=0}^{n-1} \frac{F(x_k) - F(x_{k+1})}{\|\nabla F(x_k)\|^2}\right]^{-1} \quad \text{for } n = 1, 2, 3, \ldots$$

where $\mu_0 = F(x_0) - F(x_\infty)$ and $\mathrm{diam}\, L(x_0) = \eta < +\infty$ (since by Proposition 3 it follows that $L(x_0)$ is bounded).

Proof. The proof directly follows from Theorem 9.2, page 167, in [8].

REFERENCES

[1] Borwein J., Lewis A.: "Convex Analysis and Nonlinear Optimization", Canada, 1999.

[2] Clarke F. H.: "Optimization and Nonsmooth Analysis", Wiley, New York, 1983.

[3] Djuranovic Milicic Nada: "On An Optimization Algorithm For LC¹ Unconstrained Optimization", FACTA UNIVERSITATIS Ser. Math. Inform. 20, pp. 129-135, 2005.

[4] Demyanov V. F., Vasiliev: "Nondifferentiable Optimization", Nauka, Moscow, 1981 (in Russian).

[5] Fuduli A.: "Metodi Numerici per la Minimizzazione di Funzioni Convesse Nondifferenziabili", PhD Thesis, Dipartimento di Elettronica Informatica e Sistemistica, Università della Calabria, 1998.

[6] Fletcher R.: "Practical Methods of Optimization, Volume 2, Constrained Optimization", Department of Mathematics, University of Dundee, Scotland, U.K., Wiley-Interscience Publication, 1981.

[7] Ioffe A. D., Tikhomirov V. M.: "Theory of Extremal Problems", Nauka, Moscow, 1974 (in Russian).

[8] Karmanov V. G.: "Mathematical Programming", Nauka, Moscow, 1975 (in Russian).

[9] Kusraev A. G., Kutateladze S. S.: "Subdifferential Calculus", Nauka, Novosibirsk, 1987 (in Russian).

[10] Lemaréchal C., Sagastizábal C.: "Practical aspects of the Moreau-Yoshida regularization I: theoretical preliminaries", SIAM Journal on Optimization, Vol. 7, pp. 367-385, 1997.

[11] Meng F., Zhao G.: "On Second-order Properties of the Moreau-Yoshida Regularization for Constrained Nonsmooth Convex Programs", Numer. Funct. Anal. Optim., Vol. 25, No. 5/6, pp. 515-530, 2004.

[12] Pshenichny B. N.: "Convex Analysis and Extremal Problems", Nauka, Moscow, 1980 (in Russian).

[13] Pshenichny B. N.: "Necessary Conditions for an Extremum", Nauka, Moscow, 1982 (in Russian).

[14] Pshenichny B. N.: "The Linearization Method", Nauka, Moscow, 1983 (in Russian).

[15] Rockafellar R.: "Convex Analysis", Princeton University Press, New Jersey, 1970 (Russ. trans.: Mir, Moscow, 1973).

[16] Polak E.: "Computational Methods in Optimization. A Unified Approach", Mathematics in Science and Engineering, Vol. 77, Academic Press, New York - London, 1971 (Russ. trans.: Mir, Moscow, 1974).

[17] Pytlak R.: "Conjugate Gradient Algorithms in Nonconvex Optimization", Library of Congress Control Number: 2008937497, Springer-Verlag Berlin Heidelberg, 2009.

[18] Sun W., Sampaio R. J. B., Yuan J.: "Two Algorithms for LC¹ Unconstrained Optimization", Journal of Computational Mathematics, Vol. 18, No. 6, pp. 621-632, 2000.

[19] Qi L.: "Second Order Analysis of the Moreau-Yoshida Regularization", School of Mathematics, The University of New South Wales, Sydney, New South Wales 2052, Australia, 1998.


- Matrix Methods For Field Problems: Roger F. HarringtonDocument14 pagesMatrix Methods For Field Problems: Roger F. HarringtondilaawaizNo ratings yet

- Aqm - Rotations in Quantum Mechanics PDFDocument4 pagesAqm - Rotations in Quantum Mechanics PDFTudor PatuleanuNo ratings yet

- An Algorithm For The Computation of EigenvaluesDocument44 pagesAn Algorithm For The Computation of Eigenvalues孔翔鸿No ratings yet

- Barendregt Barendsen - 2000 - Introduction To Lambda CalculusDocument53 pagesBarendregt Barendsen - 2000 - Introduction To Lambda CalculusAnatoly RessinNo ratings yet

- Zhi-Hua Zhou (Auth.) - Machine Learning (2021, Springer) (10.1007 - 978-981!15!1967-3) - Libgen - LiDocument460 pagesZhi-Hua Zhou (Auth.) - Machine Learning (2021, Springer) (10.1007 - 978-981!15!1967-3) - Libgen - LiAldo Gutiérrez ConchaNo ratings yet

- Partial Differential Equations 2020 Solutions To CW 2: 1 Week 5, Problem 5Document6 pagesPartial Differential Equations 2020 Solutions To CW 2: 1 Week 5, Problem 5Aldo Gutiérrez ConchaNo ratings yet

- Nonlinear Partial Differential Equations Their Solutions and PRDocument65 pagesNonlinear Partial Differential Equations Their Solutions and PRAldo Gutiérrez ConchaNo ratings yet

- McOwen PDE Chapters 1 Through 5Document95 pagesMcOwen PDE Chapters 1 Through 5Aldo Gutiérrez Concha0% (1)

- MATH 534H: Introduction To Partial Differential Equations Homework SolutionsDocument61 pagesMATH 534H: Introduction To Partial Differential Equations Homework SolutionsAldo Gutiérrez ConchaNo ratings yet

- HKU MATH1853 - Brief Linear Algebra Notes: 1 Eigenvalues and EigenvectorsDocument6 pagesHKU MATH1853 - Brief Linear Algebra Notes: 1 Eigenvalues and EigenvectorsfirelumiaNo ratings yet

- Quadratic Graphic OrganizerDocument2 pagesQuadratic Graphic Organizerjessicarrudolph100% (4)

- TTK4115 SummaryDocument5 pagesTTK4115 SummaryManoj GuptaNo ratings yet

- 3.3 ExerciseDocument47 pages3.3 ExerciseAvnish BhasinNo ratings yet

- Calculus With Analytic Geometry 1Document2 pagesCalculus With Analytic Geometry 1Queen Ann JumuadNo ratings yet

- Engineering Mathematics With SolutionsDocument35 pagesEngineering Mathematics With SolutionsSindhu Velayudham100% (1)

- A Calculus Misprint Ten Years LaterDocument4 pagesA Calculus Misprint Ten Years Latersobaseki1No ratings yet

- Insight 2017 Mathematical Methods Examination 1 SolutionsDocument15 pagesInsight 2017 Mathematical Methods Examination 1 Solutionsnochnoch50% (2)

- Logarithms and Exponential Functions (Solutions)Document8 pagesLogarithms and Exponential Functions (Solutions)HanishqNo ratings yet

- Differentiation Formula MathongoDocument3 pagesDifferentiation Formula MathongoArya NairNo ratings yet

- Rounding Errors and Stability of AlgorithmsDocument24 pagesRounding Errors and Stability of AlgorithmsmahfooNo ratings yet

- 1 SolnDocument3 pages1 SolnDhruv RaiNo ratings yet

- MATH 304 Linear Algebra Lecture 9 - Subspaces of Vector Spaces (Continued) - Span. Spanning Set PDFDocument20 pagesMATH 304 Linear Algebra Lecture 9 - Subspaces of Vector Spaces (Continued) - Span. Spanning Set PDFmurugan2284No ratings yet

- Practice MidtermDocument1 pagePractice Midterm3dd2ef652ed6f7No ratings yet

- Advanced Algebra and Trigonometry Quarter 1: Self-Learning Module 16Document14 pagesAdvanced Algebra and Trigonometry Quarter 1: Self-Learning Module 16Dog GodNo ratings yet

- Morse Homology 2010-2011Document137 pagesMorse Homology 2010-2011Eric ParkerNo ratings yet

- 01 Handout 1Document16 pages01 Handout 1Aou Kuaim NohNo ratings yet

- Differentiation Logs Trig EquationsDocument4 pagesDifferentiation Logs Trig EquationsVerdict On WeedNo ratings yet

- Torpedo ManualDocument30 pagesTorpedo ManualKent StateNo ratings yet

- Conformal MappingDocument22 pagesConformal Mappingwaku74No ratings yet

- Basic Simulation Lab ManualDocument97 pagesBasic Simulation Lab Manualshiva4105100% (1)

- Lesson Plans ALGEBRASeptember 20221Document2 pagesLesson Plans ALGEBRASeptember 20221Dev MauniNo ratings yet

- Nptel OptimizationDocument486 pagesNptel OptimizationDebajyoti DattaNo ratings yet

- Existence and Multiplicity Results For Some Nonlinear Elliptic Equations: A SurveyDocument32 pagesExistence and Multiplicity Results For Some Nonlinear Elliptic Equations: A SurveyChris00No ratings yet

- Exercise Set 2.7: Functions and GraphsDocument3 pagesExercise Set 2.7: Functions and Graphsunknown :)No ratings yet

- Chain Rule, Implicit Differentiation and Linear Approximation and DifferentialsDocument39 pagesChain Rule, Implicit Differentiation and Linear Approximation and Differentialsanon-923611100% (1)

- 2010 Summer SchoolDocument31 pages2010 Summer SchoolAlbanita MendesNo ratings yet

- Real Analysis II HW 2 - Bahran - University of MinnesotaDocument6 pagesReal Analysis II HW 2 - Bahran - University of MinnesotaLinden JavaScriptNo ratings yet

- Hw9+Solutions 1Document3 pagesHw9+Solutions 1skazlaNo ratings yet

- Lecture Three LU Decomposition: Numerical Analysis Math351/352Document15 pagesLecture Three LU Decomposition: Numerical Analysis Math351/352Samuel Mawutor GamorNo ratings yet