
AdaBoost with R

Uploaded by sasha benedict


EXAMPLE IMPLEMENTATION OF ADABOOST IN R

# adabag provides boosting(), predict() and boosting.cv(); it also loads rpart and caret

library(adabag)

## Warning: package 'adabag' was built under R version 4.1.3

## Loading required package: rpart

## Loading required package: caret

## Warning: package 'caret' was built under R version 4.1.3

## Loading required package: ggplot2

## Warning: package 'ggplot2' was built under R version 4.1.3

## Loading required package: lattice

## Loading required package: foreach

## Warning: package 'foreach' was built under R version 4.1.3

## Loading required package: doParallel

## Warning: package 'doParallel' was built under R version 4.1.3

## Loading required package: iterators

## Warning: package 'iterators' was built under R version 4.1.3

## Loading required package: parallel

library(caret)  # createDataPartition() for the train/test split

library(car)

## Warning: package 'car' was built under R version 4.1.3

## Loading required package: carData

## Warning: package 'carData' was built under R version 4.1.3

## Example Code in R

# use the built-in iris data set

data1 <- iris

head(data1)

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

# create a random partition, stratified by Species: 90% training data, 10% test data
# (no seed is set, so the split and the results below change from run to run)

parts = createDataPartition(data1$Species, p = 0.9, list = FALSE)

train = data1[parts, ]

test = data1[-parts, ]
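For a factor outcome, createDataPartition() samples within each level of Species, so both parts keep the 1:1:1 class balance of iris. A minimal base-R sketch of that idea follows, assuming the per-class size is rounded up with ceiling(); the helper name stratified_split is hypothetical and not part of caret.

```r
# Hypothetical helper: stratified random split in base R.
# For every class, keep ceiling(p * class size) row indices for training.
stratified_split <- function(y, p) {
  by_class <- split(seq_along(y), y)        # row indices grouped by class
  picked <- lapply(by_class, function(idx) {
    n_take <- ceiling(length(idx) * p)
    idx[sample.int(length(idx), n_take)]    # avoids sample()'s length-1 pitfall
  })
  sort(unlist(picked, use.names = FALSE))
}

set.seed(1)                                 # fix the RNG so the split is reproducible
train_idx <- stratified_split(iris$Species, p = 0.9)
table(iris$Species[train_idx])              # 45 rows per species
table(iris$Species[-train_idx])             # 5 rows per species
```

This gives 135 training rows and 15 test rows, which is consistent with n= 135 in the trees and the 5/5/5 test confusion matrix that follow.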

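# fit AdaBoost on the training data: mfinal = 2 boosting iterations (2 trees);
# boos = TRUE fits each tree on a bootstrap sample drawn with the current observation weights

model_adaboost <- boosting(Species~., data=train, boos=TRUE, mfinal=2)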

model_adaboost$trees

## [[1]]

## n= 135

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 135 84 setosa (0.37777778 0.28148148 0.34074074)

##   2) Petal.Length< 2.35 51 0 setosa (1.00000000 0.00000000 0.00000000) *

##   3) Petal.Length>=2.35 84 38 virginica (0.00000000 0.45238095 0.54761905)

##     6) Petal.Width< 1.7 41 3 versicolor (0.00000000 0.92682927 0.07317073) *

##     7) Petal.Width>=1.7 43 0 virginica (0.00000000 0.00000000 1.00000000) *

##

## [[2]]

## n= 135

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 135 80 virginica (0.29629630 0.29629630 0.40740741)

##   2) Petal.Length< 2.35 40 0 setosa (1.00000000 0.00000000 0.00000000) *

##   3) Petal.Length>=2.35 95 40 virginica (0.00000000 0.42105263 0.57894737)

##     6) Petal.Length< 4.85 47 7 versicolor (0.00000000 0.85106383 0.14893617)

##       12) Sepal.Length>=4.95 39 1 versicolor (0.00000000 0.97435897 0.02564103) *

##       13) Sepal.Length< 4.95 8 2 virginica (0.00000000 0.25000000 0.75000000) *

##     7) Petal.Length>=4.85 48 0 virginica (0.00000000 0.00000000 1.00000000) *
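Each node line reads node), split, n, loss, yval, (yprob). Here n is the number of rows reaching the node, yval is the majority class, loss is the number of those rows that do not belong to yval, and yprob gives the class proportions. The roots also show the effect of boos = TRUE. Each tree is grown on a bootstrap sample, so neither root is split 45/45/45, even though the training set is balanced.

The sketch below back-computes those fields from class counts. It assumes unit case weights and rpart's default 0-1 loss, and the helper name node_summary is hypothetical. The counts 51/38/46 are inferred from the printed proportions multiplied by 135. They were not read from the model object.

```r
# Hypothetical helper: rebuild the n / loss / yval / yprob fields of an
# rpart classification node from the class counts of the rows in that node
# (assumes unit case weights and the default 0-1 loss).
node_summary <- function(counts) {
  n <- sum(counts)
  list(n = n,
       loss = n - max(counts),                  # rows not in the majority class
       yval = names(counts)[which.max(counts)], # majority class
       yprob = round(counts / n, 8))            # class proportions
}

# Counts inferred from the root of tree [[1]]: 0.37777778 * 135 = 51, etc.
root1 <- node_summary(c(setosa = 51, versicolor = 38, virginica = 46))
str(root1)
```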

model_adaboost$weights

## [1] 1.534026 1.531765
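model_adaboost$weights holds one voting weight (alpha) per tree. The adabag documentation lists three options for coeflearn:

- "Breiman" (the default) uses alpha = 1/2 ln((1 - err)/err), where err is the tree's weighted training error.
- "Freund" drops the factor 1/2.
- "Zhu" (SAMME) adds ln(nclasses - 1).

The sketch below computes that coefficient and then applies a textbook AdaBoost.M1-style reweighting: misclassified rows are multiplied by exp(alpha) and all weights are renormalised. The helper names are hypothetical, and the update is a generic form, not a copy of adabag's source.

As an arithmetic check, err = 6/135 ≈ 0.0444 gives alpha ≈ 1.534, the first printed weight. That suggests, but does not prove, that tree 1 misclassified 6 of the 135 training rows.

```r
# Voting coefficient for the three coeflearn options described in the adabag docs.
boost_alpha <- function(err, coeflearn = c("Breiman", "Freund", "Zhu"), nclasses = 2) {
  coeflearn <- match.arg(coeflearn)
  a <- log((1 - err) / err)
  switch(coeflearn,
         Breiman = 0.5 * a,
         Freund  = a,
         Zhu     = a + log(nclasses - 1))
}

# Hypothetical helper: one reweighting step. Rows the current tree got wrong
# are up-weighted by exp(alpha); weights are then renormalised to sum to 1.
update_weights <- function(w, misclassified, alpha) {
  w_new <- w * exp(alpha * misclassified)
  w_new / sum(w_new)
}

# Toy round: 135 rows with uniform starting weights, 6 rows misclassified.
w <- rep(1 / 135, 135)
miss <- c(rep(1, 6), rep(0, 129))
err <- sum(w * miss)                     # weighted error = 6/135
alpha <- boost_alpha(err, "Breiman")     # about 1.534
w <- update_weights(w, miss, alpha)
sum(w[miss == 1])                        # misclassified rows now carry more total weight
```

The next tree is therefore pushed toward the rows the previous tree got wrong, either through the weighted bootstrap (boos = TRUE) or directly through the weights (boos = FALSE).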

## Accuracy

# use the model to predict the test data

pred_test = predict(model_adaboost, test)

pred_test$confusion

## Observed Class

## Predicted Class setosa versicolor virginica

## setosa 5 0 0

## versicolor 0 5 0

## virginica 0 0 5

pred_test$error

## [1] 0
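predict() on a boosting object combines the trees by weighted majority vote. Each tree adds its weight from model_adaboost$weights to the class it predicts, and the class with the largest total wins. The returned object also exposes these totals as votes, together with prob, class, confusion and error. Here all 15 test rows are classified correctly, hence an error of 0.

Below is a base-R sketch of the voting rule, with a hypothetical helper name and a toy set of tree predictions and weights (not the fitted model's).

```r
# Hypothetical helper: weighted majority vote over an ensemble.
# preds: n x M matrix of class labels (one column per tree)
# alpha: length-M vector of tree weights
weighted_vote <- function(preds, alpha, classes) {
  votes <- matrix(0, nrow(preds), length(classes),
                  dimnames = list(NULL, classes))
  for (m in seq_len(ncol(preds))) {
    for (k in classes) {
      votes[, k] <- votes[, k] + alpha[m] * (preds[, m] == k)
    }
  }
  list(votes = votes,
       class = classes[max.col(votes, ties.method = "first")])
}

# Toy ensemble of three trees voting on two observations.
preds <- rbind(c("setosa", "virginica", "virginica"),
               c("versicolor", "versicolor", "virginica"))
alpha <- c(1.5, 1.0, 0.8)
res <- weighted_vote(preds, alpha, levels(iris$Species))
res$class  # "virginica" "versicolor"
```

In the first row, the single heaviest tree (1.5) is outvoted by two lighter trees whose weights add up to 1.8.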

# estimate the error with 10-fold cross-validation (v = 10) on the full iris data

cvmodel <- boosting.cv(Species~., data=data1, boos=TRUE, mfinal=2, v=10)

## i: 1 Sun Sep 18 22:42:07 2022

## i: 2 Sun Sep 18 22:42:07 2022

## i: 3 Sun Sep 18 22:42:07 2022

## i: 4 Sun Sep 18 22:42:07 2022

## i: 5 Sun Sep 18 22:42:07 2022

## i: 6 Sun Sep 18 22:42:07 2022

## i: 7 Sun Sep 18 22:42:07 2022

## i: 8 Sun Sep 18 22:42:07 2022

## i: 9 Sun Sep 18 22:42:07 2022

## i: 10 Sun Sep 18 22:42:08 2022
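With v = 10, boosting.cv() splits the 150 rows into 10 folds. It fits a model on 9 folds, predicts the held-out fold, and repeats until every row has been predicted exactly once. The confusion matrix printed next is built from those out-of-fold predictions, which is why its counts add up to 150.

The sketch below shows a plain random fold assignment in base R. It only illustrates the idea and may not match how boosting.cv assigns rows internally.

```r
# Assign each of n rows to one of v folds at random (fold sizes differ by at most 1).
make_folds <- function(n, v) sample(rep(seq_len(v), length.out = n))

set.seed(42)
folds <- make_folds(nrow(iris), v = 10)
table(folds)                       # 15 rows in each of the 10 folds

# Skeleton of the CV loop: each fold is held out exactly once.
held_out <- integer(0)
for (k in 1:10) {
  test_rows  <- which(folds == k)
  train_rows <- which(folds != k)
  # a model would be fit on iris[train_rows, ] and used to predict iris[test_rows, ]
  held_out <- c(held_out, test_rows)
}
length(unique(held_out))           # 150: every row is predicted once
```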

# drop the first element (the per-row predicted classes) and print the confusion matrix and error

print(cvmodel[-1])

## $confusion

## Observed Class

## Predicted Class setosa versicolor virginica

## setosa 50 0 0

## versicolor 0 46 6

## virginica 0 4 44

##

## $error

## [1] 0.06666667
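The $error value is the share of off-diagonal entries in the confusion matrix. Here 6 + 4 = 10 of the 150 rows are misclassified, giving 10/150 ≈ 0.0667, or about 93.3% cross-validated accuracy. The 15-row test split above reached 100%, a figure that is much noisier with so few rows.

A small sketch that rebuilds the printed matrix and recomputes the error, adding per-class recall as an extra view:

```r
# Confusion matrix printed by boosting.cv above (rows = predicted, columns = observed).
cls <- c("setosa", "versicolor", "virginica")
cm <- matrix(c(50,  0,  0,
                0, 46,  6,
                0,  4, 44),
             nrow = 3, byrow = TRUE,
             dimnames = list(Predicted = cls, Observed = cls))

error  <- 1 - sum(diag(cm)) / sum(cm)   # misclassified rows / all rows
recall <- diag(cm) / colSums(cm)        # share of each observed class predicted correctly

round(error, 8)    # 0.06666667, the same value as $error above
round(recall, 2)   # setosa 1.00, versicolor 0.92, virginica 0.88
```

The per-class view shows that all the errors come from confusing versicolor and virginica, the two overlapping species.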

Source:

https://www.datatechnotes.com/2018/03/classification-with-adaboost-model-in-r.html

You might also like

- Divided States: Strategic Divisions in EU-Russia RelationsFrom EverandDivided States: Strategic Divisions in EU-Russia RelationsNo ratings yet

- Intro to R & TidyverseDocument17 pagesIntro to R & Tidyversevinay kumarNo ratings yet

- Pertemuan 8 (BAB 7)Document7 pagesPertemuan 8 (BAB 7)Rifa'i RaufNo ratings yet

- Clase 08Document14 pagesClase 08Yanely CanalesNo ratings yet

- PCA and FA CommandsDocument13 pagesPCA and FA CommandsNguyễn OanhNo ratings yet

- Kanseei Tahapt 2Document8 pagesKanseei Tahapt 2ryusrianammarNo ratings yet

- R ProgramsDocument30 pagesR ProgramsRanga SwamyNo ratings yet

- Practical Assignment 04Document15 pagesPractical Assignment 04SRIKANTH RAJKUMAR 2140869No ratings yet

- Data Science Comp 2Document13 pagesData Science Comp 2tobiasNo ratings yet

- Tugas MPS Variabel JurnalDocument5 pagesTugas MPS Variabel JurnalIrfan HermawanNo ratings yet

- DCA ScriptDocument5 pagesDCA ScriptSegundo Artidoro Guerrero CiezaNo ratings yet

- Practical 4Document9 pagesPractical 4YuvikaNo ratings yet

- R Practice 2Document3 pagesR Practice 2focuseuanNo ratings yet

- RSQLite TutorialDocument3 pagesRSQLite Tutorialzarcone7No ratings yet

- Panel 2Document26 pagesPanel 2tilfaniNo ratings yet

- Debarghya Das (Ba-1), 18021141033Document12 pagesDebarghya Das (Ba-1), 18021141033Rocking Heartbroker DebNo ratings yet

- 20BCE1205 Lab9Document9 pages20BCE1205 Lab9SHUBHAM OJHANo ratings yet

- Home ConstructionDocument8 pagesHome Constructionphatakpriya108No ratings yet

- Antlion bs2460Document3 pagesAntlion bs2460calvinleow1205No ratings yet

- Exercise-10..Study and Implementation of Data Transpose Operation.Document1 pageExercise-10..Study and Implementation of Data Transpose Operation.Sri RamNo ratings yet

- Config ManagerDocument14 pagesConfig Managergabryelmg30No ratings yet

- Cia DoeDocument2 pagesCia Doeshathriya.narayananNo ratings yet

- Latihan PDF 1,3Document10 pagesLatihan PDF 1,3Mohamad CarfineNo ratings yet

- Compiled CDocument4 pagesCompiled CGaurav SinghNo ratings yet

- Ensayo Abrotanella: Cargar Un Arbol FilogeneticoDocument12 pagesEnsayo Abrotanella: Cargar Un Arbol FilogeneticovshaliskoNo ratings yet

- ditk_ppDocument24 pagesditk_ppYna ForondaNo ratings yet

- Covid Data AnalysisDocument24 pagesCovid Data AnalysisBryan MedinaNo ratings yet

- Ex 10 - Decision Tree With Rpart and Fancy Plot and Cardio DataDocument4 pagesEx 10 - Decision Tree With Rpart and Fancy Plot and Cardio DataNopeNo ratings yet

- Transferencia 2023 ExatasDocument22 pagesTransferencia 2023 ExatasinejeldaryaNo ratings yet

- Tambo High School inventory receipt of technical scientific equipmentDocument11 pagesTambo High School inventory receipt of technical scientific equipmentSharikSalvoNo ratings yet

- How To Find Floating - Dangling Nets, Pins, IO Ports, and Instances Using Get - DB CommandsDocument4 pagesHow To Find Floating - Dangling Nets, Pins, IO Ports, and Instances Using Get - DB CommandsRuchy ShahNo ratings yet

- FUVEST23Document24 pagesFUVEST23Estaçao Coruja NewsNo ratings yet

- Transferencia 2023 HumanasDocument24 pagesTransferencia 2023 HumanasLucas SerafimNo ratings yet

- GuideToIRTinvarianceUsingMIRT (ANCHOR)Document10 pagesGuideToIRTinvarianceUsingMIRT (ANCHOR)Ampeh SarokNo ratings yet

- Cluster RDocument1 pageCluster Rmail.information0101No ratings yet

- Chemo Mortality AnalysisDocument5 pagesChemo Mortality AnalysisAyu HutamiNo ratings yet

- Output 9Document8 pagesOutput 9Juhi SinghNo ratings yet

- Important Libraies After R UpdateDocument3 pagesImportant Libraies After R UpdateMÉLIDA NÚÑEZNo ratings yet

- Bot ScannerDocument31 pagesBot ScannerRolando LewandoswkyNo ratings yet

- StaticsTestDocument6 pagesStaticsTestSOWMIA R 2248056No ratings yet

- Final Ep4Document47 pagesFinal Ep4Alvaro HuamanNo ratings yet

- Screenshot 2020-10-13 at 12.56.37 PMDocument1 pageScreenshot 2020-10-13 at 12.56.37 PMSamara SheltonNo ratings yet

- Coding Analisis SpasialDocument9 pagesCoding Analisis SpasialRochmanto_HaniNo ratings yet

- AGNES and SPECTRAL CLUSTERING IN R PDFDocument1 pageAGNES and SPECTRAL CLUSTERING IN R PDFSahas ParabNo ratings yet

- E Bapeda Lembaga-111218090041-60705873 PDFDocument918 pagesE Bapeda Lembaga-111218090041-60705873 PDFAgenx Rihast IsagengNo ratings yet

- SabDocument69 pagesSabpaulasabrina805No ratings yet

- C C Expand - Grid C: "Solucion" "DIAS" "Y"Document3 pagesC C Expand - Grid C: "Solucion" "DIAS" "Y"elsaunachiNo ratings yet

- FCC Bulletin 4.11.10Document2 pagesFCC Bulletin 4.11.10Dennis SandersNo ratings yet

- Examen-Regresion MiguelEsparzaDocument9 pagesExamen-Regresion MiguelEsparzamaesparza2307No ratings yet

- MPDFDocument131 pagesMPDFNqobile KhanyileNo ratings yet

- Factor-Hair-Revised: Salma Mohiuddin 27/08/2019 Setting Up The Working DirectoryyDocument37 pagesFactor-Hair-Revised: Salma Mohiuddin 27/08/2019 Setting Up The Working DirectoryysalmagmNo ratings yet

- Tarea 9 Estadistica Javier MorejonDocument23 pagesTarea 9 Estadistica Javier MorejonJumper777《No ratings yet

- R Code For Canonical Correlation AnalysisDocument10 pagesR Code For Canonical Correlation AnalysisJose Luis Jurado ZuritaNo ratings yet

- Cluster Analysis of Customer Spending DataDocument4 pagesCluster Analysis of Customer Spending DataVisalakshi VenkatNo ratings yet

- EM622 Data Analysis and Visualization Techniques For Decision-MakingDocument47 pagesEM622 Data Analysis and Visualization Techniques For Decision-MakingRidhi BNo ratings yet

- AIX Performance ScriptDocument5 pagesAIX Performance ScriptSathish PillaiNo ratings yet

- USACODocument94 pagesUSACOLyokoPotterNo ratings yet

- Spore Game Configuration RulesDocument13 pagesSpore Game Configuration RulesBrunoMedinaNo ratings yet

- DATA CLASSIFICATION USING SUPERVISED LEARNING (DATA MININGDocument31 pagesDATA CLASSIFICATION USING SUPERVISED LEARNING (DATA MININGFadhlurrohman HenriwanNo ratings yet

- Point Query & Tiles Over Kerla: Manish Modani: Ts Timeslice Fts Forecasted Time SliceDocument8 pagesPoint Query & Tiles Over Kerla: Manish Modani: Ts Timeslice Fts Forecasted Time Sliceuser0xNo ratings yet

- American Cows in Antarctica R. ByrdDocument28 pagesAmerican Cows in Antarctica R. ByrdBlaze CNo ratings yet

- Product Brand Management 427 v1Document484 pagesProduct Brand Management 427 v1Adii AdityaNo ratings yet

- List of NgosDocument97 pagesList of Ngosjaivikpatel11No ratings yet

- Verification and Validation in Computational SimulationDocument42 pagesVerification and Validation in Computational SimulationazminalNo ratings yet

- Levitator Ino - InoDocument2 pagesLevitator Ino - InoSUBHANKAR BAGNo ratings yet

- SPEC 2 - Module 1Document21 pagesSPEC 2 - Module 1Margie Anne ClaudNo ratings yet

- Cook's Illustrated 090Document36 pagesCook's Illustrated 090vicky610100% (3)

- 3D Solar System With Opengl and C#Document4 pages3D Solar System With Opengl and C#Shylaja GNo ratings yet

- 302340KWDocument22 pages302340KWValarmathiNo ratings yet

- VachanamruthaDocument5 pagesVachanamruthaypraviNo ratings yet

- Operation ManualDocument83 pagesOperation ManualAn Son100% (1)

- Why We Can't Stop Obsessing Over CelebritiesDocument2 pagesWhy We Can't Stop Obsessing Over CelebritiesJoseMa AralNo ratings yet

- Picture DescriptionDocument7 pagesPicture DescriptionAida Mustafina100% (3)

- HR Interview QuestionsDocument6 pagesHR Interview QuestionsnavneetjadonNo ratings yet

- Spent Caustic Recycle at Farleigh MillDocument9 pagesSpent Caustic Recycle at Farleigh MillyamakunNo ratings yet

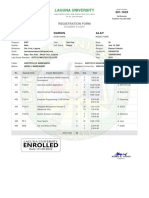

- Darius Registration Form 201 1623 2nd Semester A.Y. 2021 2022Document1 pageDarius Registration Form 201 1623 2nd Semester A.Y. 2021 2022Kristilla Anonuevo CardonaNo ratings yet

- FINANCE Company SlidersDocument10 pagesFINANCE Company SlidersKartik PanwarNo ratings yet

- From Verse Into A Prose, English Translations of Louis Labe (Gerard Sharpling)Document22 pagesFrom Verse Into A Prose, English Translations of Louis Labe (Gerard Sharpling)billypilgrim_sfeNo ratings yet

- Advanced Features and Troubleshooting ManualDocument138 pagesAdvanced Features and Troubleshooting ManualHugo Manuel Sánchez MartínezNo ratings yet

- Introduction To AIX Mirror Pools 201009Document11 pagesIntroduction To AIX Mirror Pools 201009Marcus BennettNo ratings yet

- Yu Gi Oh Card DetailDocument112 pagesYu Gi Oh Card DetailLandel SmithNo ratings yet

- Research Day AbstractsDocument287 pagesResearch Day AbstractsStoriesofsuperheroesNo ratings yet

- English AssignmentDocument79 pagesEnglish AssignmentAnime TubeNo ratings yet

- Xray Order FormDocument1 pageXray Order FormSN Malenadu CreationNo ratings yet

- Choose The Word Whose Main Stress Pattern Is Different From The Rest in Each of The Following QuestionsDocument8 pagesChoose The Word Whose Main Stress Pattern Is Different From The Rest in Each of The Following QuestionsKim Ngân NguyễnNo ratings yet

- Final MTech ProjectDocument30 pagesFinal MTech ProjectArunSharmaNo ratings yet

- Module #5 Formal Post-Lab ReportDocument10 pagesModule #5 Formal Post-Lab Reportaiden dunnNo ratings yet

- RuelliaDocument21 pagesRuelliabioandreyNo ratings yet

- 5054 On 2022 P22Document14 pages5054 On 2022 P22Raahin RahimNo ratings yet

- Ayushi HR DCXDocument40 pagesAyushi HR DCX1048 Adarsh SinghNo ratings yet