International Journal of Scientific Research in Mathematical and Statistical Sciences

Research Paper

Vol.6, Issue.6, pp.87-95, December (2019) E-ISSN: 2348-4519

The Derivation and Choice of Appropriate Test Statistic (Z, t, F and Chi-Square Test) in Research Methodology

Teshome Hailemeskel Abebe

Department of Economics, Ambo University, Ambo, Ethiopia

Author: teshome251990@gmail.com

Available online at: www.isroset.org

Received: 30/Nov/2019, Accepted: 15/Dec/2019, Online: 31/Dec/2019

Abstract- This paper aims to guide the choice of an appropriate test statistic in research methodology. Specifically, it explores the concept of the statistical hypothesis test, derives the test statistics, and identifies their application in research methodology. It also shows the basics of formulating and testing hypotheses using a test statistic, since choosing an appropriate test statistic is one of the most important tools of research. The common statistical tests identified are the Z-test, Student's t-test, F-test (as in ANOVA), and chi-square test. The z-test and t-test are used for testing the mean of a population or comparing the means of two continuous populations, while the F-test is used for comparing more than two means and for testing equality of variances. The chi-square test is used for testing independence and goodness of fit in categorical data, and for testing the population variance of a single sample. Therefore, choosing an appropriate test statistic gives valid results in hypothesis testing.

Keywords- Test Statistic, Z-test, Student’s t-test, F test (like ANOVA), Chi-square test, Research Methodology

I. INTRODUCTION

The application of statistical tests in scientific research has increased dramatically in recent years in almost every science. Despite this wide applicability, researchers often misunderstand how to choose an appropriate test statistic for a given research problem. Thus, this study gives direction on which test statistic is appropriate when conducting statistical hypothesis testing in research methodology.

The choice of an appropriate statistical test may depend on the type of data (continuous or categorical), i.e., whether a t-test, z-test, F-test or chi-square test should be used depends on the nature of the data. For example, the two-sample independent t-test and z-test are used if the two samples are independent, a paired z-test or t-test is used if the two samples are dependent, while the F-test can be applied to independent samples.

Therefore, testing hypotheses about the mean of a single population, comparing the means of two or more populations (for example with one-way ANOVA), testing the variance of a single population using the chi-square test, and testing two or several means and variances using the F-test are important topics in this paper.

Thus, the main objective of the study is to derive the appropriate test statistics and to give direction on when each test statistic is applicable in research methodology.

The paper is organized into four sections. Section I contains the introduction, Section II contains the derivation of the test statistics and their relationships, Section III contains the theoretical application of each test statistic in research methodology, and Section IV concludes the research work.

II. DERIVATION OF Z, t, F AND CHI-SQUARE TEST STATISTIC

Theorem 1: If we draw independent random samples $X_1, X_2, \ldots, X_n$ from a population, compute the mean $\bar{X}$, and repeat this process many times, then $\bar{X}$ is approximately normal. Since assumptions are part of the conditions used to deduce the sampling distribution of a statistic, the $t$, $\chi^2$ and $F$ distributions all depend on a normal "parent" population.

Chi-Square Distribution

The chi-square distribution is a theoretical distribution with wide application in statistical work. The term "chi-square" (denoted by the Greek letter $\chi^2$, where $\chi$ is pronounced "chi") is used to define this distribution. The chi-square theoretical distribution is continuous, but the $\chi^2$ statistic is obtained in a discrete manner, based on discrete differences between the observed and expected values. Chi-square ($\chi^2$) is the sum of independent squared normal random variables with mean $\mu = 0$ and variance $\sigma^2 = 1$ (i.e., standard normal random variables).
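The definition of chi-square as a sum of squared standard normal variables can be checked numerically. The following is a minimal sketch, not part of the original paper: the function names, the number of draws and the seed are illustrative assumptions. It relies on the standard facts that a chi-square variable with $k$ degrees of freedom has mean $k$ and variance $2k$.

```python
import random
import statistics


def chi_square_draw(k, rng):
    """Return one draw of a sum of k squared independent standard normals."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


def simulate_chi_square(k, draws=20000, seed=0):
    """Simulate chi-square(k) values; return (sample mean, sample variance).

    `draws` and `seed` are illustrative choices, not values from the paper.
    """
    rng = random.Random(seed)
    values = [chi_square_draw(k, rng) for _ in range(draws)]
    return statistics.fmean(values), statistics.variance(values)


if __name__ == "__main__":
    mean, var = simulate_chi_square(k=5)
    # Theory: the mean should be close to k = 5 and the variance close to 2k = 10.
    print(f"sample mean = {mean:.2f}, sample variance = {var:.2f}")
```

With a larger number of draws the simulated mean and variance should move closer to $k$ and $2k$, which is one informal way to see that the distribution depends only on the degrees of freedom.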

© 2019, IJSRMSS All Rights Reserved 87

Int. J. Sci. Res. in Mathematical and Statistical Sciences Vol. 6(6), Dec 2019, ISSN: 2348-4519

Suppose we pick from a normal distribution ̅ )

with mean ) and variance ) , that is ⁄

) . Then it turns out that be ∑ ̅)

independent standard normal variables ( )) and if )

)

the sum of independent standard random variable ⁄ )

(∑ = ) have a chi-square distribution with K degree of ̅ ) ̅ )

freedom given as follows: ⁄ ⁄

) ⁄ ) ⁄ )

̅) ) ⁄ )

∑

Based on the Central Limit Theorem, the “limit of the ⁄ )

distribution is normal (as ). So that the chi-square test

where is the degree of freedom

is given by

)

(1) A squared random variable equals the ratio of squared

standard normal variable divided by chi-squared with

degree of freedom.

The F Distribution

is the ratio of two independent chi-squared random

As , ) because ⁄ . In this

variables each divided by their respective degrees of freedom

case, squared root of both side results, the Student’s based

as given by: ̅

⁄ on normal distribution defined as: ⁄√ )

⁄

(2)

Since ’s depend on the normal distribution, the ⁄ ⁄

distribution also depends on normal distribution. The limiting Moreover, , then the ⁄ ⁄

distribution of as is ⁄ because as .

⁄ .

̅

For t-distribution, , where ⁄√

)

√ ⁄

Student’s 𝓽-Distribution )

The t-distribution is a probability distribution, which is and , then the test statistic:

frequently used to evaluate hypothesis regarding the mean of

continuous variables. Student’s t-distribution is quite similar (3)

√ ⁄

to normal distribution, but the exact shape of t-distribution

depends on sample size, i.e., as the sample size increases then

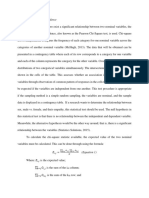

t-distribution approaches normal distribution. Unlike the Table 1: Summary of relationships

normal distribution, however, which has a standard deviation Distribution Definition Parent Limiting

of 1, the standard deviation of the t-distribution varies with an ∑ , Independent As

entity known as the degrees of freedom. Normal

⁄ )⁄ ⁄ ) Chi- As ,

Proof: Independent squared ⁄

̅ ̅ ) As ,

⁄√ ⁄ Normal

( )

⁄√ ∑ ̅) ⁄

Note: Let ), ,

̅ )

∑ ̅) ⁄ III. TEST STATISTIC APPROACH OF

HYPOTHESIS TESTING

̅ )

̅ ) ⁄ Hypotheses are predictions about the relationship among two

∑ ̅) ⁄ ∑ ̅) or more variables or groups based on a theory or previous

⁄ ) research [1]. Hence, hypothesis testing is the art of testing if

variation between two sampling distributions can be

explained through random chance or not. The three

approaches of hypothesis testing are test statistic, p-value and

confidence interval. In this paper, we focus only on the test statistic approach to hypothesis testing. A test statistic is a function of a random sample, and is therefore a random variable. When we compute the statistic for a given sample, we obtain an outcome of the test statistic. In order to perform a statistical test we need to know the distribution of the test statistic under the null hypothesis. This distribution depends largely on the assumptions made about the model. If the specification of the model includes the assumption of normality, then the appropriate statistical distribution is the normal distribution or any of the distributions associated with it, such as the chi-square, Student's t, or Snedecor's F.

A. z-tests (Large Sample Case)

A Z-test is any statistical test for which the distribution of the test statistic under the null hypothesis can be approximated by a normal distribution [2]. In this paper, the z-test is used for testing the significance of a single population mean and of the difference between two population means, if the sample is taken from a normal distribution with known variance or if the sample size is large enough to invoke the Central Limit Theorem (usually $n \ge 30$ is a good rule of thumb).

One Sample z-test for Mean

A one-sample z-test helps to determine whether the population mean $\mu$ is equal to a hypothesized value $\mu_0$ when the sample is taken from a normal distribution with known variance $\sigma^2$.

Recall Theorem 1. If $X_1, X_2, \ldots, X_n$ are random variables, then $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$. The mean of a sample from the population is not constant; rather, it varies depending on which sample is drawn from the population. Therefore, the sample mean is a random variable with its own distribution, called the sampling distribution.

Lemma 1. If $\bar{X}$ is the random variable of the sample mean of all the random samples of size $n$ from a population with expected value $E(X) = \mu$ and variance $Var(X) = \sigma^2$, then the expected value and variance of $\bar{X}$ are $E(\bar{X}) = \mu$ and $Var(\bar{X}) = \sigma^2/n$, respectively.

Proof: Consider all of the possible samples of size $n$ from a population with expected value $E(X) = \mu$ and variance $Var(X) = \sigma^2$. If a sample $X_1, X_2, \ldots, X_n$ is chosen, each $X_i$ comes from the same population, so each has the same expected value $E(X_i) = \mu$ and variance $Var(X_i) = \sigma^2$. Then

$$E(\bar{X}) = E\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{1}{n} E\left(\sum_{i=1}^{n} X_i\right) = \frac{1}{n}\sum_{i=1}^{n} E(X_i) = \frac{1}{n}(n\mu) = \mu$$

and, because the $X_i$ are independent,

$$Var(\bar{X}) = Var\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{1}{n^2} Var\left(\sum_{i=1}^{n} X_i\right) = \frac{1}{n^2}\sum_{i=1}^{n} Var(X_i) = \frac{1}{n^2}(n\sigma^2) = \frac{\sigma^2}{n}$$

The first step is to state the null hypothesis, which is a statement about a parameter of the population(s), labelled $H_0$, and the alternative hypothesis, which is a statement about a parameter of the population(s) that is opposite to the null hypothesis, labelled $H_1$. The null and alternative hypotheses (two-tail test) are given by $H_0: \mu = \mu_0$ against $H_1: \mu \ne \mu_0$.

Theorem 2: If $X_1, X_2, \ldots, X_n$ are normally distributed random variables with mean $\mu$ and variance $\sigma^2$, then the standardized random variable $Z$ (one-sample Z-test) has a standard normal distribution given by:

$$Z = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} \sim N(0, 1) \tag{4}$$

where $\bar{X}$ is the sample mean, $\mu_0$ is the hypothesized population mean under the null hypothesis, $\sigma$ is the population standard deviation, $n$ is the sample size, and $\sigma/\sqrt{n} = \sigma_{\bar{X}}$ is the standard error.

However, according to Joginder K. [3], if the population standard deviation is unknown but $n \ge 30$, then by the Central Limit Theorem the test statistic is given by:

$$Z = \frac{\bar{X} - \mu_0}{s/\sqrt{n}} \tag{5}$$

where $s = \sqrt{\dfrac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}}$.

Two-Sample z-test (When Variances are Unequal)

A two-sample test can be applied to investigate the significance of the difference between the means of two populations when the population variances are known and unequal, but both distributions are normal and the samples are independently drawn. Suppose we have independent random samples of size $n$ and $m$ from two normal populations having means $\mu_x$ and $\mu_y$ and known variances $\sigma_x^2$ and $\sigma_y^2$, respectively, and that we want to test the null hypothesis $\mu_x - \mu_y = \delta$, where $\delta$ is a given constant, against the alternative $\mu_x - \mu_y \ne \delta$. We can now derive a test statistic as follows.

Lemma 2. If $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_m$ are independent normally distributed random variables with $X_i \sim N(\mu_x, \sigma_x^2)$ and $Y_i \sim N(\mu_y, \sigma_y^2)$, then $\bar{X} - \bar{Y} \sim N\left(\mu_x - \mu_y, \frac{\sigma_x^2}{n} + \frac{\sigma_y^2}{m}\right)$.

Proof: By Lemma 1, $\bar{X} \sim N(\mu_x, \sigma_x^2/n)$ and $\bar{Y} \sim N(\mu_y, \sigma_y^2/m)$. Then

$$\bar{X} - \bar{Y} = \bar{X} + (-1)\bar{Y} \sim N\left(\mu_x + (-1)\mu_y, \frac{\sigma_x^2}{n} + (-1)^2 \frac{\sigma_y^2}{m}\right)$$

The appropriate test statistic for this test is given by:

$$Z = \frac{(\bar{X} - \bar{Y}) - \delta}{\sqrt{\dfrac{\sigma_x^2}{n} + \dfrac{\sigma_y^2}{m}}} \sim N(0, 1) \tag{6}$$

If we do not know the variances of both distributions, then we may use:

$$Z = \frac{(\bar{X} - \bar{Y}) - \delta}{\sqrt{\dfrac{s_x^2}{n} + \dfrac{s_y^2}{m}}} \tag{7}$$

where $s_x^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ and $s_y^2 = \frac{1}{m-1}\sum_{i=1}^{m} (Y_i - \bar{Y})^2$, provided $n$ and $m$ are large enough to invoke the Central Limit Theorem. Here $n$ is the sample size of one group (X) and $m$ is the sample size of the other group (Y); $\bar{X}$ is the sample mean of one group, calculated as $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$, and the sample mean of the other group is denoted by $\bar{Y}$ and can be estimated as $\bar{Y} = \frac{1}{m}\sum_{i=1}^{m} Y_i$.

Two-Sample z-test (When Variances are Equal) for Mean

This test can be applied to investigate the significance of the difference between the means of two populations when the population variances are known and equal, and both distributions are normal. The null and alternative hypotheses (two-tail test) are given by $H_0: \mu_x - \mu_y = \delta$ and $H_1: \mu_x - \mu_y \ne \delta$, respectively. Then the test statistic is:

$$Z = \frac{(\bar{X} - \bar{Y}) - \delta}{\sigma\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} \sim N(0, 1) \tag{8}$$

where the standard error of the difference is $\sigma\sqrt{\frac{1}{n} + \frac{1}{m}}$.

If we don't know the variance of both distributions, then we may use:

$$Z = \frac{(\bar{X} - \bar{Y}) - \delta}{s\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} \tag{9}$$

where $s$ is the sample estimate of the common standard deviation $\sigma$, provided $n$ and $m$ are large enough to invoke the Central Limit Theorem.

Paired Sample z Test

According to Banda Gerald [4], words like dependent, repeated, before and after, matched pairs, paired and so on are hints for dependent samples. Paired samples, also called dependent samples, are used for testing the population mean of paired differences. A paired z-test helps to determine whether the mean difference between randomly drawn samples of paired observations is significant. Statistically, it is equivalent to a one-sample z-test on the differences between the paired observations. The null and alternative hypotheses (two-tail test) are given by $H_0: \mu_d = d_0$ and $H_1: \mu_d \ne d_0$, respectively.

Theorem 3. If $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_n$ are normally distributed random variables, but $X_i$ and $Y_i$ are dependent, then the standardized random variable is:

$$Z = \frac{\bar{d} - d_0}{\sigma_d/\sqrt{n}} \sim N(0, 1) \tag{10}$$

where $d_i = X_i - Y_i$ is a pairwise difference, $\bar{d}$ is the sample mean of the differences between the first and second results of the participants in the study, given by $\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i$, $d_0$ is the mean value of the pairwise difference under the null hypothesis, and $\sigma_d$ is the population standard deviation of the pairwise difference. The basic reason is that $X_i$ and $Y_i$ are normal random variables, so $d_i = X_i - Y_i$ is also a normal random variable; thus $d_1, d_2, \ldots, d_n$ can be thought of as a sequence of random variables.

B. Student's t-test

Unfortunately, the z-test requires either that the population is normally distributed with a known variance, or that the sample size is large. When the sample size is small ($n < 30$), the value for the area under the normal curve is not accurate. Thus, instead of a z value, t values are more accurate for small sample sizes, since the t value depends on the number of cases in the sample, i.e., as the sample size changes, the t value changes [5]. However, the t-test needs the assumption that the sample values are drawn randomly and distributed normally (since the data are continuous). Therefore, the t-test is a useful technique for comparing the mean value of a group against some hypothesized mean (one-sample) or of two separate sets of numbers against each other (two-sample) in the case of small samples ($n < 30$) taken from a normal distribution of unknown variance.

A t-test is defined as a statistical test used for hypothesis testing in which the test statistic follows a Student's t-distribution under the assumption that the null hypothesis is true [6].

One-Sample t-test

Banda Gerald [4] suggested that a one-sample t-test can be used to compare the mean of a sample to a predefined value. Thus, we use the one-sample t-test to investigate the significance of the difference between an assumed population mean and a sample mean. The population characteristics are known from theory or are calculated from the population. Thus, a researcher can use the one-sample t-test to compare the mean of a sample with a hypothesized population mean to see if the sample is significantly different. The null and alternative hypotheses (two-tail test) are given by $H_0: \mu = \mu_0$ and $H_1: \mu \ne \mu_0$, respectively. Then the t-test statistic is:

$$t = \frac{\bar{X} - \mu_0}{s/\sqrt{n}} \sim t_{n-1} \tag{11}$$

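where $\bar{X}$ is the sample mean, $\mu_0$ is the population mean or hypothesized value, $n$ is the sample size and $s$ is the sample standard deviation, which is calculated as:

$$s = \sqrt{\frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}}$$

Independent Two Sample t-test (Variances Unknown but Equal)

A two-sample t-test is used to compare two sample means when the independent variable is nominal level data while the dependent variable is interval/ratio level data [7]. Thus, the independent samples t-test is used to compare two groups whose means are not dependent on one another. Two samples are independent if the sample values selected from one population are not related to the sample values selected from the other population. Thus, an independent sample t-test helps to investigate the significance of the difference between the means of two populations when the population variances are unknown but equal.

Assume two groups of independent normal samples $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_m$, distributed as $X_i \sim N(\mu_x, \sigma^2)$ and $Y_i \sim N(\mu_y, \sigma^2)$, respectively. Then the null and alternative hypotheses (two-tail test) are given by $H_0: \mu_x - \mu_y = \delta$ and $H_1: \mu_x - \mu_y \ne \delta$, respectively. If the sample sizes $n$ and $m$ are not large enough to invoke the Central Limit Theorem, then the test statistic is:

$$t = \frac{(\bar{X} - \bar{Y}) - \delta}{s_p\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} \sim t_{n+m-2} \tag{12}$$

where $n$ is the sample size of the first group (X), $m$ is the sample size of the second group (Y), $\bar{X}$ is the sample mean of the first group (X), $\bar{Y}$ is the sample mean of the second group (Y), and $s_p$ is the pooled standard deviation for both groups, which is calculated as follows:

$$s_p = \sqrt{\frac{(n-1)s_x^2 + (m-1)s_y^2}{n+m-2}} = \sqrt{\frac{\sum_{i=1}^{n} (X_i - \bar{X})^2 + \sum_{i=1}^{m} (Y_i - \bar{Y})^2}{n+m-2}}$$

where $s_x^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ and $s_y^2 = \frac{1}{m-1}\sum_{i=1}^{m} (Y_i - \bar{Y})^2$.

Let us derive the t-distribution for the two-sample t-test (variances unknown but equal).

Lemma 3. If $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_m$ are normally and independently distributed random variables with $X_i \sim N(\mu_x, \sigma^2)$ and $Y_i \sim N(\mu_y, \sigma^2)$, then $\bar{X} - \bar{Y} \sim N\left(\mu_x - \mu_y, \sigma^2\left(\frac{1}{n} + \frac{1}{m}\right)\right)$.

Proof: By Lemma 1, $\bar{X} \sim N(\mu_x, \sigma^2/n)$ and $\bar{Y} \sim N(\mu_y, \sigma^2/m)$. Then

$$\bar{X} - \bar{Y} = \bar{X} + (-1)\bar{Y} \sim N\left(\mu_x + (-1)\mu_y, \frac{\sigma^2}{n} + (-1)^2\frac{\sigma^2}{m}\right)$$

Lemma 4. If $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_m$ are normally and independently distributed random variables with $X_i \sim N(\mu_x, \sigma^2)$ and $Y_i \sim N(\mu_y, \sigma^2)$, then

$$\frac{(\bar{X} - \bar{Y}) - (\mu_x - \mu_y)}{\sigma\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} \sim N(0, 1)$$

Theorem 4. If $X_1, X_2, \ldots, X_n$ and $Y_1, Y_2, \ldots, Y_m$ are normally and independently distributed random variables with $X_i \sim N(\mu_x, \sigma^2)$ and $Y_i \sim N(\mu_y, \sigma^2)$, then the test statistic

$$T = \frac{(\bar{X} - \bar{Y}) - (\mu_x - \mu_y)}{s_p\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} \tag{13}$$

where $s_p = \sqrt{\dfrac{(n-1)s_x^2 + (m-1)s_y^2}{n+m-2}}$, $s_x = \sqrt{\dfrac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}}$ and $s_y = \sqrt{\dfrac{\sum_{i=1}^{m} (Y_i - \bar{Y})^2}{m-1}}$, has a t-distribution with $n+m-2$ degrees of freedom.

Proof:

$$T = \frac{(\bar{X} - \bar{Y}) - (\mu_x - \mu_y)}{s_p\sqrt{\dfrac{1}{n} + \dfrac{1}{m}}} = \frac{\dfrac{(\bar{X} - \bar{Y}) - (\mu_x - \mu_y)}{\sigma\sqrt{\frac{1}{n} + \frac{1}{m}}}}{\sqrt{\left(\dfrac{(n-1)s_x^2}{\sigma^2} + \dfrac{(m-1)s_y^2}{\sigma^2}\right)\Big/(n+m-2)}}$$

Let $Z = \dfrac{(\bar{X} - \bar{Y}) - (\mu_x - \mu_y)}{\sigma\sqrt{\frac{1}{n} + \frac{1}{m}}}$; by Lemma 4, $Z \sim N(0, 1)$. Let $U = \dfrac{(n-1)s_x^2}{\sigma^2} + \dfrac{(m-1)s_y^2}{\sigma^2}$. Then $U \sim \chi^2_{n+m-2}$, since $\dfrac{(n-1)s_x^2}{\sigma^2} \sim \chi^2_{n-1}$ and $\dfrac{(m-1)s_y^2}{\sigma^2} \sim \chi^2_{m-1}$ are independent. Therefore,

$$T = \frac{Z}{\sqrt{U/(n+m-2)}}$$

which has a t-distribution with $n+m-2$ degrees of freedom.

Independent Two Sample t-test (Variances Unknown but Unequal)

However, if the variances are unknown and unequal, then we use a t-test for two population means, given the null hypothesis of the independent sample t-test (two-tail test) $H_0: \mu_x - \mu_y = \delta$.

The test statistic is:

$$t = \frac{(\bar{X} - \bar{Y}) - \delta}{\sqrt{\dfrac{s_x^2}{n} + \dfrac{s_y^2}{m}}} \tag{14}$$

where $s_x^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ and $s_y^2 = \frac{1}{m-1}\sum_{i=1}^{m} (Y_i - \bar{Y})^2$.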
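To make the two independent-sample statistics concrete, the sketch below computes the pooled-variance statistic and the unequal-variance statistic from raw data. It is an illustration only: the function names, the `delta` parameter name for the hypothesized difference, and the sample values are assumptions introduced here, not taken from the paper.

```python
import math
import statistics


def pooled_t(x, y, delta=0.0):
    """Equal-variance two-sample t statistic and its degrees of freedom."""
    n, m = len(x), len(y)
    sx2 = statistics.variance(x)  # sample variance with divisor n - 1
    sy2 = statistics.variance(y)  # sample variance with divisor m - 1
    # Pooled standard deviation: weighted average of the two sample variances.
    sp = math.sqrt(((n - 1) * sx2 + (m - 1) * sy2) / (n + m - 2))
    diff = statistics.fmean(x) - statistics.fmean(y) - delta
    return diff / (sp * math.sqrt(1 / n + 1 / m)), n + m - 2


def unequal_variance_t(x, y, delta=0.0):
    """Two-sample t statistic that keeps the two sample variances separate."""
    n, m = len(x), len(y)
    sx2 = statistics.variance(x)
    sy2 = statistics.variance(y)
    diff = statistics.fmean(x) - statistics.fmean(y) - delta
    return diff / math.sqrt(sx2 / n + sy2 / m)


if __name__ == "__main__":
    # Hypothetical data for two small independent groups.
    group_x = [1.0, 2.0, 3.0, 4.0, 5.0]
    group_y = [2.0, 4.0, 6.0, 8.0, 10.0]
    t_pooled, df = pooled_t(group_x, group_y)
    t_unequal = unequal_variance_t(group_x, group_y)
    print(f"pooled t = {t_pooled:.4f} on {df} d.f., unequal-variance t = {t_unequal:.4f}")
```

When the two groups have the same size, the two statistics coincide numerically; they differ only when $n \ne m$. The sketch stops at the statistic and does not look up critical values, which would still have to be taken from a t table.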

Paired (Dependent) Sample t Test

Two samples are dependent (or consist of matched pairs) if the members of one sample can be used to determine the members of the other sample. A paired t-test is used to compare two population means when we have two samples in which observations in one sample can be paired with observations in the other sample under a Gaussian distribution [8, 9]. When two variables are paired, the difference of these two variables, $d_i = X_i - Y_i$, is treated as if it were a single sample. This test is appropriate for pre-post treatment responses. The null hypothesis is that the true mean difference of the two variables is $d_0$, as given by $H_0: \mu_d = d_0$.

Then the test statistic is:

$$t = \frac{\bar{d} - d_0}{s_d/\sqrt{n}} \sim t_{n-1} \tag{15}$$

where $\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i$, $s_d = \sqrt{\dfrac{\sum_{i=1}^{n} (d_i - \bar{d})^2}{n-1}}$, $d_0$ is the mean of the pairwise difference under the null hypothesis and $s_d$ is the sample standard deviation of the pairwise differences.

Proof: Let $X_i$ and $Y_i$ be normal random variables. Therefore, $d_i = X_i - Y_i$ is also a normal random variable. Then $d_1, d_2, \ldots, d_n$ can be thought of as a sequence of random variables, to which the one-sample t-test applies.

Effect Size

Both the independent and dependent sample t-tests give the researcher an indication of whether the difference between the two groups is statistically significant, but they do not indicate the size (magnitude) of the effect. Effect size explains the degree to which the two variables are associated with one another. Thus, effect size is the statistic that indicates the relative magnitude of the differences between means, or the amount of total variance in the dependent variable that is predictable from knowledge of the levels of the independent variable.

Effect Size of an Independent Sample t-test

An effect size is a standardized measure of the magnitude of an observed effect. Pearson's correlation coefficient $r$ is used as a common measure of effect size. The correlation coefficient measures the strength of the relationship between two variables. From the researcher's point of view, a correlation coefficient of 0 means there is no effect, and a value of 1 means that there is a perfect effect. The following formula is used to calculate the effect size:

$$r = \sqrt{\frac{t^2}{t^2 + df}} \tag{16}$$

where $t$ is the calculated value of the independent sample t-test and $df$ is the degrees of freedom of the test, which is determined by the sample size. A value of $r = 0.1$ refers to a small effect, $r = 0.3$ refers to a medium effect and $r = 0.5$ refers to a large effect.

Effect Size of Dependent Sample t-test

The most commonly used method for calculating the effect size of a dependent sample t-test is Eta Squared [10].

The formula for simplified Eta Squared is given by:

$$\text{Eta}^2 = \frac{t^2}{t^2 + (n-1)} \tag{17}$$

where $t$ is the calculated value of the dependent sample t-test and $n$ is the sample size. The calculated value of Eta Squared should be between 0 and 1. Values of 0.01, 0.06 and 0.14 are small, medium, and large effects, respectively.

C. Chi-Square Test Statistic

Karl Pearson in 1900 proposed the famous chi-squared test for comparing an observed frequency distribution to a theoretically assumed distribution, using a large-sample approximation with the chi-squared distribution. According to Pearson [11], the chi-square test is a non-parametric test used for testing the hypothesis of no association between two or more groups, populations or criteria (i.e., to check independence between two variables) and how likely it is that the observed distribution of data fits the distribution that is expected (i.e., to test goodness of fit) in categorical data. In this paper, we apply the chi-square test for testing population variance, independence and goodness of fit.

Chi-Square Test of Population Variance (One-sample test for variance)

The chi-square test can be used to judge whether a random sample has been drawn from a normal population (interval and ratio data) with mean ($\mu$) and specified variance ($\sigma^2$) [12]. Thus, it is used to compare the sample variance to a hypothesized variance $\sigma_0^2$ (i.e., it is a test for a single sample variance). Assume the data $X_1, X_2, \ldots, X_n$ are independent normal samples with $X_i \sim N(\mu, \sigma^2)$, and recall that $\sum_{i=1}^{n} Z_i^2 = \sum_{i=1}^{n} \dfrac{(X_i - \mu)^2}{\sigma^2} \sim \chi^2_n$ if $Z_i \sim N(0, 1)$. The null and alternative hypotheses (two-tail) are given by $H_0: \sigma^2 = \sigma_0^2$ and $H_1: \sigma^2 \ne \sigma_0^2$, respectively. Then the test statistic is:

$$\chi^2 = \sum_{i=1}^{n} Z_i^2 = \sum_{i=1}^{n} \frac{(X_i - \mu)^2}{\sigma_0^2}$$

However, we don't know $\mu$, so we use $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ as an estimate of $\mu$ and $s^2 = \dfrac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}$ as the sample variance.

Thus, the sampling distribution of the test statistic if $H_0$ is true is:

$$\chi^2 = \frac{(n-1)s^2}{\sigma_0^2} \sim \chi^2_{n-1} \tag{18}$$

The decision rule is to compare the obtained test statistic to the chi-squared distribution with $n-1$ degrees of freedom. If we construct the critical region of size $\alpha$ in the

two-tail case, our critical region of size $\alpha$ will be all values of $\chi^2$ such that either $\chi^2 \le \chi^2_{1-\alpha/2,\,n-1}$ or $\chi^2 \ge \chi^2_{\alpha/2,\,n-1}$, where $\chi^2_{p,\,n-1}$ denotes the value exceeded with probability $p$ under the chi-squared distribution with $n-1$ degrees of freedom.

Chi-Square Test of Independence

The chi-square test of independence is a non-parametric statistical test used for deciding whether two categorical (nominal) variables are associated or independent [13]. Let the two variables in the cross classification be X and Y; then the null hypothesis is $H_0$: X and Y are independent (no association), against the alternative $H_1$: X and Y are associated. The chi-square statistic used to conduct this test is the same as in the goodness of fit test, given by:

$$\chi^2 = \sum_{i=1}^{r}\sum_{j=1}^{c} \frac{(O_{ij} - E_{ij})^2}{E_{ij}} \sim \chi^2_{(r-1)(c-1)} \tag{19}$$

where $\chi^2$ is the test statistic that asymptotically approaches a chi-square distribution, and $O_{ij}$ is the observed frequency of the $i$th row and $j$th column, representing the number of respondents that take on each of the various combinations of values for the two variables. The expected frequency is

$$E_{ij} = \frac{\left(\sum_{j=1}^{c} O_{ij}\right)\left(\sum_{i=1}^{r} O_{ij}\right)}{N}$$

where $E_{ij}$ is the expected (theoretical) frequency of the $i$th row and $j$th column, $N$ is the total number of observations, $r$ is the number of rows in the contingency table, and $c$ is the number of columns in the contingency table.

If $\chi^2 > \chi^2_{\alpha,\,(r-1)(c-1)}$, then the null hypothesis is rejected and we conclude that a relationship exists between the variables. If $\chi^2 \le \chi^2_{\alpha,\,(r-1)(c-1)}$, the null hypothesis cannot be rejected and we conclude that there is insufficient evidence of a relationship between the variables.

Chi-Square Test for Goodness of Fit

A chi-square test for goodness of fit is used to compare the frequencies (counts) among multiple categories of nominal or ordinal level data for one sample. Moreover, a chi-square test for goodness of fit is used to compare the expected and observed values to determine how well the experimenter's predictions fit the data [14].

The null hypothesis ($H_0$) states that the population distribution of the variable is the same as the proposed distribution, against the alternative ($H_1$) that the distributions are different.

The chi-square statistic is defined as:

$$\chi^2 = \sum_{i}\sum_{j} \frac{(O_{ij} - E_{ij})^2}{E_{ij}} \tag{20}$$

Large values of this statistic lead to rejection of the null hypothesis, meaning the observed values are not equal to the theoretical (expected) values.

D. The F-test Statistic

F-tests are used when comparing statistical models that have been fit to a data set, to determine the models that best fit the population from which the data were sampled [15]. The F distribution is the distribution of the ratio of two independent variables, each having a chi-square distribution and each divided by its degrees of freedom [16]. The F distribution needs both parent populations to be normal, to have the same variance, and the samples to be independent. The F-test is applicable for testing equality of variances and testing equality of several means.

Testing for Equality of Variances

The chi-square test is used for testing a single population variance, while the F-test is used for testing the equality of variances of two independent populations (comparing two variances). Thus, it helps to make inferences about a population variance or the sampling distribution of the sample variance from a normal population, given the null hypothesis $H_0: \sigma_x^2 = \sigma_y^2$ against the alternative hypothesis $H_1: \sigma_x^2 \ne \sigma_y^2$. Suppose two independent random samples are drawn, one from each (normally distributed) population, as given by $X_i \sim N(\mu_x, \sigma_x^2)$ and $Y_i \sim N(\mu_y, \sigma_y^2)$. Moreover, let $s_x^2$ and $s_y^2$ represent the sample variances of the two different populations. If both populations are normal and the population variances $\sigma_x^2$ and $\sigma_y^2$ are equal, but unknown, then the sampling distribution of $s_x^2/s_y^2$ is called an F-distribution.

The first samples $X_1, X_2, \ldots, X_n$ are normally and independently distributed random variables, so

$$U = \frac{(n-1)s_x^2}{\sigma_x^2} = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{\sigma_x^2} \sim \chi^2_{n-1}$$

with $n-1$ d.f. The second samples $Y_1, Y_2, \ldots, Y_m$ are normally and independently distributed random variables, so

$$V = \frac{(m-1)s_y^2}{\sigma_y^2} = \frac{\sum_{i=1}^{m} (Y_i - \bar{Y})^2}{\sigma_y^2} \sim \chi^2_{m-1}$$

with $m-1$ d.f.

Assume $U$ and $V$ are two independent random variables and that the null hypothesis ($\sigma_x^2 = \sigma_y^2$) holds true. Then the F-test for population variances is given by:

$$F = \frac{U/(n-1)}{V/(m-1)} = \frac{s_x^2/\sigma_x^2}{s_y^2/\sigma_y^2} = \frac{s_x^2}{s_y^2} \sim F_{n-1,\,m-1} \tag{21}$$

where $s_x^2$ and $s_y^2$ are the sample variances of the samples from populations X and Y, $v_1 = n-1$ is the numerator degrees of freedom, and $v_2 = m-1$ is the denominator degrees of freedom.

Testing Equality of Several Means (ANOVA)

Analysis of variance (ANOVA) is a statistical technique used for comparing the means of more than two groups (usually at least three), given the assumptions of normality, independence and equality of the error variances. In one-way ANOVA, group means are compared by comparing the variability between groups with the variability within the groups. This is done by computing an F-statistic. The F-value is computed by dividing the mean sum of squares between the groups by the mean sum of squares within the groups [17].
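The ratio of between-group to within-group mean squares described above can be computed directly from grouped data. The following is a minimal sketch under stated assumptions: the function name and the example data are hypothetical, and the between-group and within-group degrees of freedom are taken as $k-1$ and $n-k$ for $k$ groups and $n$ observations in total.

```python
import statistics


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of observations."""
    k = len(groups)                      # number of groups
    n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-group sum of squares: group means around the grand mean.
    ssb = sum(len(g) * (statistics.fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations around their own group mean.
    ssw = sum((v - statistics.fmean(g)) ** 2 for g in groups for v in g)
    mssb = ssb / (k - 1)
    mssw = ssw / (n - k)
    return mssb / mssw, k - 1, n - k


if __name__ == "__main__":
    # Hypothetical observations for three groups.
    data = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
    f_value, df_between, df_within = one_way_anova_f(data)
    print(f"F = {f_value:.2f} with ({df_between}, {df_within}) degrees of freedom")
```

For these hypothetical data the between-group sum of squares is 26 and the within-group sum of squares is 6, so the sketch gives $F = 13$ with $(2, 6)$ degrees of freedom. The decision still requires comparing this value with the tabulated F value at the chosen significance level.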

A one-way analysis of variance (ANOVA) model is given by:

$$Y_{ij} = \mu + \tau_i + \varepsilon_{ij}$$

where $Y_{ij}$ is the $j$th observation for the $i$th group (e.g., income level), $\mu$ is a constant term, $\tau_i$ is the effect of the $i$th group (income level), and $\varepsilon_{ij} \sim N(0, \sigma^2)$.

The null hypothesis is given by $H_0: \mu_1 = \mu_2 = \cdots = \mu_k$ against $H_1: \mu_i \ne \mu_j$ for at least one pair $i \ne j$, where $\mu_i = \mu + \tau_i$ is the mean of the $i$th group.

The total variation of the data is partitioned into variation within levels (groups) and between levels (groups). Mathematically, with grand mean $\bar{Y} = \frac{1}{n}\sum_{i=1}^{k}\sum_{j=1}^{n_i} Y_{ij}$, the partition of the total variation is

$$\sum_{i=1}^{k}\sum_{j=1}^{n_i} (Y_{ij} - \bar{Y})^2 = \sum_{i=1}^{k} n_i(\bar{Y}_i - \bar{Y})^2 + \sum_{i=1}^{k}\sum_{j=1}^{n_i} (Y_{ij} - \bar{Y}_i)^2$$

where $TSS = \sum_{i}\sum_{j} (Y_{ij} - \bar{Y})^2$ is the sum of squared variation about the grand mean across all $n$ observations, $SSB = \sum_{i} n_i(\bar{Y}_i - \bar{Y})^2$ is the sum of squared deviations of each group mean about the grand mean, and $SSW = \sum_{i}\sum_{j} (Y_{ij} - \bar{Y}_i)^2$ is the sum of squared deviations of all observations within each group from that group mean, summed across all groups. Then the mean sum of squares between, $MSSB = \dfrac{\sum_{i} n_i(\bar{Y}_i - \bar{Y})^2}{k-1}$, represents the variation among the different samples, while the mean sum of squares within, $MSSW = \dfrac{\sum_{i}\sum_{j} (Y_{ij} - \bar{Y}_i)^2}{n-k}$, represents the variation within samples that is due to chance. Then the test statistic is the ratio of the group and error mean squares:

$$F = \frac{MSSB}{MSSW} \tag{22}$$

Table 2: One-Way ANOVA Table

| Source of Variation | Sum of squares | DF | Mean squares |
|---|---|---|---|
| Between (between group) | SSB | k-1 | MSSB = SSB/(k-1) |
| Within (error) | SSW | n-k | MSSW = SSW/(n-k) |
| Total | TSS | n-1 | |

Reject $H_0$ if the calculated $F$ is greater than the tabulated value ($F_{\alpha}(k-1, n-k)$), meaning the variability between groups is large compared to the variation within groups; fail to reject $H_0$ if the calculated $F$ is less than the tabulated value ($F_{\alpha}(k-1, n-k)$), meaning the variability between groups is negligible compared to the variation within groups.

IV. CONCLUSIONS

In this paper, the derivation and choice of an appropriate test statistic were reviewed, since applying the right statistical hypothesis test to the right data is the main role of statistical tools in the empirical research of every science. Specifically, the researcher applies the z-test, which is appropriate for testing the existence of a population mean difference in the case of a large sample size, while the t-test is used for testing mean values with independent and dependent small samples. Moreover, the F-test is used for testing several means and variances, while the chi-square test is used for testing a single variance, goodness of fit and independence. In general, the paper addresses the problem of identifying an appropriate test statistic for data analysis, which is the main problem of researchers who apply statistical tests in research methodology.

ACKNOWLEDGEMENT

The author thanks the anonymous referees for their valuable comments.

REFERENCES

[1] David J. Pittenger, "Hypothesis Testing as a Moral Choice", Ethics & Behavior, Vol. 11, Issue. 2, pp. 151-162, 2001, DOI: 10.1207/S15327019EB1102_3.

[2] Vinay Pandit, "A Study on Statistical Z Test to Analyses Behavioral Finance Using Psychological Theories", Journal of Research in Applied Mathematics, Vol. 2, Issue. 1, pp. 01-07, 2015, ISSN (Online): 2394-0743, ISSN (Print): 2394-0735.

[3] Joginder Kaur, "Techniques Used in Hypothesis Testing in Research Methodology: A Review", International Journal of Science and Research (IJSR), ISSN (Online): 2319-7064, 2013.

[4] Banda Gerald, "A Brief Review of Independent, Dependent and One Sample t-test", International Journal of Applied Mathematics and Theoretical Physics, Vol. 4, Issue. 2, pp. 50-54, 2018, doi: 10.11648/j.ijamtp.20180402.13.

[5] McDonald, J. H., "Handbook of Biological Statistics", 3rd ed., Sparky House Publishing, Baltimore, Maryland, 2008.

[6] J. P. Verma, "Data Analysis in Management with SPSS Software", Springer, India, DOI: 10.1007/978-81-322-0786-3_7, 2013.

[7] Philip E. Crewson, "Applied Statistics", 1st ed., United States Department of Justice, 2014.

[8] Kousar, J. B. and Azeez Ahmed, "The Importance of Statistical Tools in Research Work", International Journal of Scientific and Innovative Mathematical Research (IJSIMR), Vol. 3, Issue. 12, pp. 50-58, ISSN 2347-307X (Print) & ISSN 2347-3142 (Online), 2015.

[9] Tae Kyun Kim, "T test as a parametric statistic", Korean Society of Anesthesiologists, ISSN 2005-6419, eISSN 2005-7563, 2015.

[10] Pallant, J., "SPSS Survival Manual: A Step by Step Guide to Data Analysis Using SPSS for Windows (Version 15)", Sydney, Allen and Unwin, 2007.

[11] Pearson, K., "On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling", Philosophical Magazine, Vol. 50, pp. 157-175, 1900. Reprinted in K. Pearson (1956), pp. 339-357.

[12] Sureiman Onchiri, "Conceptual model on application of chi-square test in education and social sciences", Academic Journals, Educational Research and Reviews, Vol. 8, Issue. 15, pp. 1231-1241, DOI: 10.5897/ERR11.0305, ISSN 1990-3839, 2013.

[13] Alena Košťálová, "Reliability and Statistics in Transportation and Communication", In Proceedings of the 10th International Conference, Riga, Latvia, pp. 163-171, ISBN 978-9984-818-34-4, 2013.

[14] Sorana, D. B., Lorentz, J., Adriana, F. S., Radu, E. S. and Doru, C. P., "Pearson-Fisher Chi-Square Statistic Revisited", Journal of Information, Vol. 2, pp. 528-545, doi: 10.3390/info2030528, ISSN 2078-2489, 2011.

[15] Downward, L. B., Lukens, W. W. and Bridges, F., "A Variation of the F-test for Determining Statistical Relevance of Particular Parameters in EXAFS Fits", 13th International Conference on X-ray Absorption Fine Structure, pp. 129-131, 2006.

[16] Ling, M., "Compendium of Distributions: Beta, Binomial, Chi-Square, F, Gamma, Geometric, Poisson, Student's t, and Uniform", The Python Papers, Source Codes 1:4, 2009.

[17] Liang, J., "Testing the Mean for Business Data: Should One Use the Z-Test, T-Test, F-Test, The Chi-Square Test, Or The P-Value Method?", Journal of College Teaching and Learning, Vol. 3, Issue. 7, doi: 10.19030/tlc.v3i7.1704, 2011.

AUTHOR PROFILE

Mr. Teshome Hailemeskel Abebe works as a lecturer in the Department of Economics, Ambo University, Ambo, Ethiopia. He has 7 years of teaching experience. He has a B.A. degree in economics from Debre Markos University and an M.Sc. degree in Statistics (Econometrics) from Haramaya University. His area of interest is econometrics, especially financial and time series econometrics.
