Professional Documents

Culture Documents

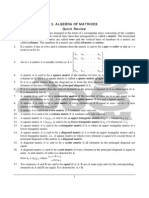

Matrix and Determinants Notes

Uploaded by

Yash Tiwari

Revision Notes on Matrices & Determinants

Two matrices are said to be equal if they have the same order and each element of

one is equal to the corresponding element of the other.

An m × n matrix A is said to be a square matrix if m = n, i.e. number of rows = number of columns.

In a square matrix the diagonal from left hand side upper corner to right hand side

lower corner is known as leading diagonal or principal diagonal.

The sum of the elements of a square matrix A lying along the principal diagonal is called the trace of A, i.e. tr(A). Thus if $A = [a_{ij}]_{n \times n}$, then $\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii} = a_{11} + a_{22} + \dots + a_{nn}$.

For a square matrix $A = [a_{ij}]_{n \times n}$, if all the elements other than those in the leading diagonal are zero, i.e. $a_{ij} = 0$ whenever $i \ne j$, then A is said to be a diagonal matrix.

A matrix $A = [a_{ij}]_{n \times n}$ is said to be a scalar matrix if $a_{ij} = 0$ for $i \ne j$ and $a_{ij} = m$ for $i = j$, where $m \ne 0$.

Properties of various types of matrices:

Given a square matrix $A = [a_{ij}]_{n \times n}$,

For an upper triangular matrix, $a_{ij} = 0$ for all $i > j$

For a lower triangular matrix, $a_{ij} = 0$ for all $i < j$

A diagonal matrix is both upper and lower triangular.

A triangular matrix $A = [a_{ij}]_{n \times n}$ is called strictly triangular if $a_{ii} = 0$ for all $1 \le i \le n$.

Transpose of a matrix and its properties:

If $A = [a_{ij}]_{m \times n}$ and the transpose of A is $A' = [b_{ij}]_{n \times m}$, then $b_{ij} = a_{ji}$ for all i, j.

(A')' = A

(A + B)' = A' + B', A and B being conformable matrices

(αA)' = αA', α being scalar

(AB)' = B'A', A and B being conformable for multiplication
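The reversal rule for the transpose of a product is easy to check numerically. The following is a minimal sketch in plain Python; the helper names `transpose` and `matmul` and the sample matrices are illustrative assumptions, not part of the original notes.

```python
def transpose(M):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(A, B):
    """Multiply an m x n matrix by an n x p matrix (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[1, 2, 3],
     [4, 5, 6]]      # order 2 x 3
B = [[1, 0],
     [2, -1],
     [0, 3]]         # order 3 x 2

lhs = transpose(matmul(A, B))               # (AB)'
rhs = matmul(transpose(B), transpose(A))    # B'A'

print(lhs)          # [[5, 14], [7, 13]]
print(lhs == rhs)   # True
```

Note that A'B' is also defined for these orders (it is 3 × 3), but it is not equal to (AB)', which is 2 × 2.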

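Properties of the conjugate of A, i.e. $\bar{A}$:

1) $\overline{(\bar{A})} = A$

2) $\overline{A + B} = \bar{A} + \bar{B}$

3) $\overline{\alpha A} = \bar{\alpha}\,\bar{A}$, where α is any number, real or complex

4) $\overline{AB} = \bar{A}\,\bar{B}$, where A and B are conformable for multiplication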

Properties of Transpose conjugate:

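The transpose conjugate of A is denoted by $A^\theta$.

If $A = [a_{ij}]_{m \times n}$ then $A^\theta = [b_{ji}]_{n \times m}$ where $b_{ji} = \bar{a}_{ij}$, i.e. the (j, i)th element of $A^\theta$ = the conjugate of the (i, j)th element of A.

1) $(A^\theta)^\theta = A$

2) $(A + B)^\theta = A^\theta + B^\theta$

3) $(kA)^\theta = \bar{k}\,A^\theta$, k being any number

4) $(AB)^\theta = B^\theta A^\theta$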
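The scalar and product rules for the transpose conjugate can be checked with Python's built-in complex numbers. This is a sketch only; `conj_transpose`, `matmul`, `scale` and the sample values are assumptions chosen for illustration (small integer parts keep the arithmetic exact).

```python
def conj_transpose(M):
    """Transpose conjugate: entry (j, i) is the conjugate of entry (i, j)."""
    return [[z.conjugate() for z in col] for col in zip(*M)]


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def scale(k, M):
    """Multiply every entry of M by the scalar k."""
    return [[k * z for z in row] for row in M]


A = [[1 + 2j, 3j],
     [2, 1 - 1j]]
B = [[1j, 1],
     [2 - 1j, 0]]
k = 2 + 3j

# Scalar rule: the scalar comes out conjugated.
scalar_rule = conj_transpose(scale(k, A)) == scale(k.conjugate(), conj_transpose(A))

# Product rule: the order of the factors is reversed.
product_rule = conj_transpose(matmul(A, B)) == matmul(conj_transpose(B), conj_transpose(A))

print(scalar_rule, product_rule)   # True True
```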

Addition of matrices:

1) Only matrices of the same order can be added or subtracted.

2) Addition of matrices is commutative as well as associative.

3) Cancellation laws hold well in case of addition.

4) The equation A + X = O has a unique solution in the set of all m × n matrices.

5) All the laws of ordinary algebra hold for the addition or subtraction of matrices and

their multiplication by scalar.

Matrix Multiplication:

1) Matrix multiplication may or may not be commutative, i.e. AB may or may not be equal to BA.

2) If AB = BA, then matrices A and B are called Commutative Matrices.

3) If AB = -BA, then matrices A and B are called Anti-Commutative Matrices.

4) Matrix multiplication is Associative

5) Matrix multiplication is Distributive over Matrix Addition.

6) Cancellation Laws need not hold good in case of matrix multiplication i.e., if AB = AC

then B may or may not be equal to C even if A ≠ 0.

7) AB = 0 i.e., Null Matrix, does not necessarily imply that either A or B is a null matrix.
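Points 1), 6) and 7) above can be seen with small 2 × 2 examples. The sketch below uses a hypothetical helper `matmul` and hand-picked matrices; none of these names come from the original notes.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
print(matmul(A, B))   # [[2, 1], [4, 3]]
print(matmul(B, A))   # [[3, 4], [1, 2]], so AB != BA

P = [[1, 0], [0, 0]]
Q = [[0, 0], [0, 1]]
Z = [[0, 0], [0, 0]]  # null matrix

# PQ is the null matrix although neither P nor Q is null.
print(matmul(P, Q) == Z)              # True

# PQ = PZ although Q != Z, so P cannot be cancelled.
print(matmul(P, Q) == matmul(P, Z))   # True
```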

Special Matrices

A square matrix $A = [a_{ij}]$ is said to be symmetric when $a_{ij} = a_{ji}$ for all i and j.

If $a_{ij} = -a_{ji}$ for all i and j, so that all the leading diagonal elements are zero, then the matrix is called a skew symmetric matrix.

A square matrix $A = [a_{ij}]$ is said to be a Hermitian matrix if $A^\theta = A$.

1) Every diagonal element of a Hermitian matrix is real.

2) A Hermitian matrix over the set of real numbers is actually a real symmetric matrix.

A square matrix $A = [a_{ij}]$ is said to be a skew-Hermitian matrix if $A^\theta = -A$.

1) If A is a skew-Hermitian matrix then the diagonal elements must be either purely imaginary or zero.

2) A skew-Hermitian matrix over the set of real numbers is actually a real skew-symmetric matrix.
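The two definitions translate directly into checks on the transpose conjugate. The following sketch assumes hypothetical helpers `conj_transpose`, `is_hermitian` and `is_skew_hermitian`, and two sample matrices H and S picked for illustration.

```python
def conj_transpose(M):
    """Transpose conjugate of a matrix given as a list of rows."""
    return [[z.conjugate() for z in col] for col in zip(*M)]


def is_hermitian(M):
    """True when the transpose conjugate of M equals M."""
    return conj_transpose(M) == M


def is_skew_hermitian(M):
    """True when the transpose conjugate of M equals -M."""
    return conj_transpose(M) == [[-z for z in row] for row in M]


H = [[2, 1 - 1j],
     [1 + 1j, 3]]
S = [[1j, 2 + 1j],
     [-2 + 1j, 0]]

print(is_hermitian(H), is_skew_hermitian(H))   # True False
print(is_hermitian(S), is_skew_hermitian(S))   # False True

# Diagonal of H is real; diagonal of S is purely imaginary or zero.
print([complex(H[i][i]).imag for i in range(2)])   # [0.0, 0.0]
print([complex(S[i][i]).real for i in range(2)])   # [0.0, 0.0]
```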

Any square matrix A of order n is said to be orthogonal if $AA' = A'A = I_n$.

A matrix such that $A^2 = I$ is called an involutory matrix.

Let A be a square matrix of order n. Then $A(\operatorname{adj} A) = |A|\,I_n = (\operatorname{adj} A)A$.

The adjoint of a square matrix of order 2 can be easily obtained by interchanging the

diagonal elements and changing the signs of off-diagonal (left hand side lower corner

to right hand side upper corner) elements.
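The order-2 shortcut can be written out in a few lines. This is a sketch under the assumption that a matrix is stored as a list of two rows; the names `adj2`, `det2` and `matmul` are illustrative.

```python
def adj2(M):
    """Adjoint of a 2 x 2 matrix: swap the diagonal, negate the off-diagonal."""
    (a, b), (c, d) = M
    return [[d, -b], [-c, a]]


def det2(M):
    """Determinant of a 2 x 2 matrix."""
    (a, b), (c, d) = M
    return a * d - b * c


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[2, 3],
     [1, 4]]

print(adj2(A))              # [[4, -3], [-1, 2]]
print(det2(A))              # 5
print(matmul(A, adj2(A)))   # [[5, 0], [0, 5]], i.e. |A| times the identity
print(matmul(adj2(A), A))   # [[5, 0], [0, 5]]
```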

A square matrix A of order n is said to be invertible if there exists a square matrix B of the same order such that $AB = I_n = BA$. This is possible exactly when A is non-singular, i.e. $|A| \ne 0$.

Elementary row/column operations:

The following three operations can be applied on rows or columns of a matrix:

1) Interchange of any two rows (columns)

2) Multiplying all elements of a row (column) of a matrix by a non-zero scalar. If the elements of the ith row (column) are multiplied by a non-zero scalar k, it will be denoted by $R_i \to R_i(k)$ [$C_i \to C_i(k)$] or $R_i \to kR_i$ [$C_i \to kC_i$].

3) Adding to the elements of a row (column), the corresponding elements of any other

row (column) multiplied by any scalar k.

Rank of a matrix:

A number r is called the rank of a matrix if:

1) Every square sub matrix of order (r + 1) or more is singular.

2) There exists at least one square sub matrix of order r which is non-singular.

It also equals the number of non-zero rows in the row echelon form of the matrix.

The rank of the null matrix is zero (some texts leave it undefined), and the rank of every non-null matrix is greater than or equal to 1.

Elementary transformations do not alter the rank of a matrix.
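Because elementary row operations preserve rank, the rank can be found by reducing the matrix to row echelon form and counting the non-zero rows. The function below is a minimal sketch using exact fractions; the name `rank` and the sample matrix are assumptions for illustration.

```python
from fractions import Fraction


def rank(M):
    """Count the pivot rows left after reduction to row echelon form."""
    rows = [[Fraction(x) for x in row] for row in M]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0  # index of the next pivot row
    for c in range(n_cols):
        # Look for a row at or below r with a non-zero entry in column c.
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        # Operation 1: interchange two rows.
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Operation 3: subtract a multiple of the pivot row from lower rows.
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r


M = [[1, 2, 3],
     [2, 4, 6],   # twice the first row
     [1, 0, 1]]
print(rank(M))    # 2
```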

Minors, Cofactors and Determinant:

The minor of the element in the ith row and jth column is the determinant obtained by deleting the ith row and the jth column.

The cofactor of this element is $(-1)^{i+j}$ (minor).

For a third-order determinant Δ with rows $(a_1, b_1, c_1)$, $(a_2, b_2, c_2)$ and $(a_3, b_3, c_3)$, we have $\Delta = a_1A_1 + b_1B_1 + c_1C_1$, where $A_1$, $B_1$ and $C_1$ are the cofactors of $a_1$, $b_1$ and $c_1$ respectively.

The determinant can be expanded along any row or column, i.e. $\Delta = a_2A_2 + b_2B_2 + c_2C_2$ or $\Delta = a_1A_1 + a_2A_2 + a_3A_3$ etc.

The following result holds true for determinants of any order:

$a_iA_j + b_iB_j + c_iC_j = \Delta$ if $i = j$, and $= 0$ if $i \ne j$.
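The expansion rule and the "alien cofactor" result can be checked with a short recursive implementation. This is a sketch, not an efficient method; `minor`, `det`, `cofactor` and the sample matrix are illustrative assumptions.

```python
def minor(M, i, j):
    """Sub matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]


def det(M):
    """Determinant by cofactor expansion along the first row."""
    if not M:
        return 1  # determinant of the empty matrix, used as the base case
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j))
               for j in range(len(M)))


def cofactor(M, i, j):
    """Cofactor of the element in row i, column j (0-based)."""
    return (-1) ** (i + j) * det(minor(M, i, j))


M = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]

print(det(M))   # 39

# Elements of row i combined with cofactors of row j:
# the sum is the determinant when i == j and zero otherwise.
for i in range(3):
    print([sum(M[i][k] * cofactor(M, j, k) for k in range(3))
           for j in range(3)])
# [39, 0, 0]
# [0, 39, 0]
# [0, 0, 39]
```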

Adjoint and Inverse of a matrix:

Let $A = [a_{ij}]$ be a square matrix of order n and let $C_{ij}$ be the cofactor of $a_{ij}$ in A. Then the transpose of the matrix of cofactors of elements of A is called the adjoint of A and is denoted by adj A.

The inverse of A is given by $A^{-1} = \frac{1}{|A|}\operatorname{adj} A$, provided $|A| \ne 0$.

1) Every invertible matrix possesses a unique inverse.

2) If A and B are invertible matrices of the same order, then AB is invertible and $(AB)^{-1} = B^{-1}A^{-1}$. This is also termed the reversal law.

3) In general, if A, B, C, ... are invertible matrices then $(ABC\dots)^{-1} = \dots C^{-1}B^{-1}A^{-1}$.

4) If A is an invertible square matrix, then $A^T$ is also invertible and $(A^T)^{-1} = (A^{-1})^T$.

5) If A is a non-singular square matrix of order n, then $|\operatorname{adj} A| = |A|^{n-1}$.

6) If A and B are non-singular square matrices of the same order, then adj(AB) = (adj B)(adj A).

7) If A is an invertible square matrix, then $\operatorname{adj}(A^T) = (\operatorname{adj} A)^T$.

8) If A is a non-singular square matrix of order n, then $\operatorname{adj}(\operatorname{adj} A) = |A|^{n-2}A$.
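The definition of the adjoint and the inverse formula can be turned into a small program. The sketch below uses exact fractions so that the checks are free of rounding; the helper names (`minor`, `det`, `adjoint`, `inverse`, `matmul`) and the sample matrix are assumptions made for illustration.

```python
from fractions import Fraction


def minor(M, i, j):
    """Sub matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]


def det(M):
    """Determinant by cofactor expansion along the first row."""
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j))
               for j in range(len(M)))


def adjoint(M):
    """Transpose of the matrix of cofactors."""
    n = len(M)
    cof = [[(-1) ** (i + j) * det(minor(M, i, j)) for j in range(n)]
           for i in range(n)]
    return [list(col) for col in zip(*cof)]


def inverse(M):
    """Inverse as adj(M) divided by |M|; M must be non-singular."""
    d = det(M)
    if d == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(x, d) for x in row] for row in adjoint(M)]


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]
n, d = len(A), det(A)
I = [[1 if i == j else 0 for j in range(n)] for i in range(n)]

print(matmul(A, adjoint(A)) == [[d * x for x in row] for row in I])  # True
print(det(adjoint(A)) == d ** (n - 1))                               # True
print(matmul(A, inverse(A)) == I)                                    # True
```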

Important Properties:

If the rows are changed into columns and the columns into rows, then the value of the determinant remains unaltered.

If any two rows (or columns) of a determinant are interchanged, the resulting

determinant is the negative of the original determinant.

If two rows (or two columns) in a determinant have corresponding elements that are

equal, the value of determinant is equal to zero.

If each element in a row (or column) of a determinant is written as the sum of two or

more terms then the determinant can be written as the sum of two or more

determinants.

If to each element of a line (row or column) of a determinant, some multiples of corresponding elements of one or more parallel lines are added, then the determinant remains unaltered.

If each element in any row (or any column) of determinant is zero, then the value of

determinant is equal to zero.

If a determinant D, regarded as a polynomial in x, vanishes for x = a, then (x - a) is a factor of D; in other words, if two rows (or two columns) become identical for x = a, then (x - a) is a factor of D.

In general, if r rows (or r columns) become identical when a is substituted for x, then $(x - a)^{r-1}$ is a factor of D.

The minor of an element of a determinant is again a determinant (of lesser order)

formed by excluding the row and column of the element.

The determinant can be evaluated by multiplying the elements of a single row or a

column with their respective co-factors and then adding them, i.e.

$\Delta = \sum_{i=1}^{m} a_{ij}C_{ij}$, j = 1, 2, ..., m (expansion along the jth column)

$= \sum_{j=1}^{m} a_{ij}C_{ij}$, i = 1, 2, ..., m (expansion along the ith row).

Sarrus Rule: This is the rule for evaluating the determinant of order 3. The process is as given below:

1. Write down the three rows of the determinant.

2. Rewrite the first two rows below them.

3. The three diagonals sloping down to the right give the three positive terms and the three diagonals sloping down to the left give the three negative terms.
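The three steps above can be sketched as a short function. The name `sarrus` and the sample determinant are illustrative assumptions; the rule applies to order 3 only.

```python
def sarrus(M):
    """Evaluate a third-order determinant by the Sarrus rule."""
    (a, b, c), (d, e, f), (g, h, i) = M
    # With rows 1 and 2 rewritten below row 3, the diagonals sloping
    # down to the right are (a, e, i), (d, h, c) and (g, b, f).
    positive = a * e * i + d * h * c + g * b * f
    # The diagonals sloping down to the left are (c, e, g), (f, h, a)
    # and (i, b, d).
    negative = c * e * g + f * h * a + i * b * d
    return positive - negative


M = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]
print(sarrus(M))   # 39
```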

Multiplication of two Determinants:

1. Two determinants can be multiplied together only if they are of same order.

2. Take the first row of the first determinant and multiply it successively with the 1st, 2nd and 3rd rows of the other determinant.

3. The three expressions thus obtained will be the elements of the 1st row of the resultant determinant. In a similar manner the elements of the 2nd and 3rd rows of the resultant determinant are obtained.
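The row-by-row procedure above can be sketched as follows. It works because the resulting array is A times the transpose of B, and transposing does not change a determinant. The names `det` and `row_by_row` and the sample determinants are assumptions for illustration.

```python
def det(M):
    """Determinant by cofactor expansion along the first row."""
    if not M:
        return 1
    return sum((-1) ** j * M[0][j]
               * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))


def row_by_row(A, B):
    """Entry (i, j) is row i of A multiplied term by term with row j of B."""
    return [[sum(x * y for x, y in zip(ra, rb)) for rb in B] for ra in A]


A = [[1, 2],
     [3, 4]]
B = [[0, 1],
     [5, 2]]

P = row_by_row(A, B)
print(P)                          # [[2, 9], [4, 23]]
print(det(A), det(B), det(P))     # -2 -5 10
```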

Symmetric determinant:

In a symmetric determinant, the elements situated at equal distance from the diagonal are equal both in magnitude and sign, i.e. $a_{ij} = a_{ji}$.

Skew- Symmetric determinant:

In a skew symmetric determinant, all the diagonal elements are zero and the

elements situated at equal distance from the diagonal are equal in magnitude but

opposite in sign. The value of a skew symmetric determinant of odd order is zero.
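The odd-order result follows from two properties of determinants: interchanging rows and columns leaves the value unchanged, and a common factor of a row can be taken outside. A short derivation, writing A for the underlying skew symmetric array of order n (the symbols A and n are introduced here for illustration):

```latex
\[
A^{T} = -A
\;\Longrightarrow\;
\det A = \det A^{T} = \det(-A) = (-1)^{n}\det A .
\]
\[
n \text{ odd}
\;\Longrightarrow\;
\det A = -\det A
\;\Longrightarrow\;
\det A = 0 .
\]
```

The factor $(-1)^{n}$ appears because $-1$ is taken out of each of the n rows.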

If the elements of a determinant are in cyclic arrangement, such a determinant is

termed as a Circulant determinant.

A system of equations AX = D is called a homogeneous system if D = O. Otherwise it is called a non-homogeneous system of equations.

If the system of equations has one or more solutions, then it is said to be a consistent

system of equations, otherwise it is an inconsistent system of equations.

Let A be the co-efficient matrix of the linear system:

ax + by = e &

cx + dy = f.

If det A ≠ 0, then the system has exactly one solution. The solution is:

$x = \dfrac{ed - bf}{ad - bc}$, $y = \dfrac{af - ec}{ad - bc}$.

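Let A be the co-efficient matrix of the linear system:

ax + by + cz = j,

dx + ey + fz = k, and

gx + hy + iz = l.

If det A ≠ 0, then the system has exactly one solution. The solution is:

$x = \Delta_1/\Delta$, $y = \Delta_2/\Delta$, $z = \Delta_3/\Delta$, where Δ = det A and $\Delta_1$, $\Delta_2$, $\Delta_3$ are obtained from Δ by replacing its first, second and third column respectively by the column of constants (j, k, l).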

We have the following two cases:

Case I. When Δ ≠ 0

In this case we have,

$x = \Delta_1/\Delta$, $y = \Delta_2/\Delta$, $z = \Delta_3/\Delta$

Hence unique values of x, y and z will be obtained.
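Case I can be sketched in code as follows. The function names `det3` and `cramer3` and the sample system are assumptions for illustration; exact fractions are used so that the quotients are not rounded.

```python
from fractions import Fraction


def det3(M):
    """Third-order determinant, expanded along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cramer3(A, rhs):
    """Solve a 3 x 3 system by Cramer's rule when the determinant is non-zero."""
    delta = det3(A)
    if delta == 0:
        raise ValueError("determinant is zero: Case I does not apply")
    solution = []
    for col in range(3):
        # Replace column `col` of A by the constants to form the numerator.
        replaced = [row[:col] + [r] + row[col + 1:]
                    for row, r in zip(A, rhs)]
        solution.append(Fraction(det3(replaced), delta))
    return tuple(solution)


# x + y + z = 6,  2y + 5z = -4,  2x + 5y - z = 27
A = [[1, 1, 1],
     [0, 2, 5],
     [2, 5, -1]]
rhs = [6, -4, 27]

print(det3(A))             # -21
print(cramer3(A, rhs))     # (Fraction(5, 1), Fraction(3, 1), Fraction(-2, 1))
```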

Case II: When Δ = 0

(a) When at least one of $\Delta_1$, $\Delta_2$ and $\Delta_3$ is non-zero, the system is inconsistent.

Let $\Delta_1 \ne 0$. Then the relation $\Delta_1 = x\Delta$ from Case I cannot be satisfied for any value of x, because Δ = 0 and $\Delta_1 \ne 0$; hence no value of x is possible in this case.

The same argument applies to $\Delta_2 = y\Delta$ when $\Delta_2 \ne 0$ and to $\Delta_3 = z\Delta$ when $\Delta_3 \ne 0$.

(b) When Δ = 0 and $\Delta_1 = \Delta_2 = \Delta_3 = 0$, the relations $\Delta_1 = x\Delta$, $\Delta_2 = y\Delta$ and $\Delta_3 = z\Delta$ hold for all values of x, y and z, so they impose no restriction. If the system is consistent, at most two of x, y, z are independent and the remaining unknowns depend on them, so the system has infinitely many solutions. This case can also be inconsistent (for example x + y + z = 1, x + y + z = 2, x + y + z = 3), so consistency must be checked from the equations themselves.

If A is a non-singular matrix, then the system of equations given by AX = D has a unique solution given by $X = A^{-1}D$.

If A is a singular matrix and (adj A) D = O, then the system of equations given by AX = D is either consistent with infinitely many solutions or inconsistent.

If A is a singular matrix and (adj A) D ≠ O, then the system of equations given by AX = D is inconsistent.

Let AX = O be a homogeneous system of n linear equations in n unknowns. If A is non-singular, then the system has a unique solution, namely the trivial solution X = O, and if A is singular, then the system has infinitely many solutions.

Suppose we have the following system:

$a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1$

$a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2$

$\vdots$

$a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m$

Then the system is consistent iff the coefficient matrix A and the augmented matrix (A|B) have the same rank. We then have the following cases:

Case 1: The system is consistent and m ≥ n

1. If r(A) = r(A|B) = n, then the system has a unique solution.

2. If r(A) = r(A|B) = k < n, then (n - k) unknowns are assigned arbitrary values.

Case 2: The system is consistent and m < n

1. If r(A) = r(A|B) = m, then (n - m) unknowns can be assigned arbitrary values.

2. If r(A) = r(A|B) = k < m, then (n - k) unknowns are assigned arbitrary values.

Note: here r denotes the rank.
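The rank test can be sketched in code by comparing the rank of A with the rank of the augmented matrix. The functions `rank` and `classify` and the sample systems below are illustrative assumptions; the rank is computed by row reduction with exact fractions.

```python
from fractions import Fraction


def rank(M):
    """Number of pivot rows after reduction to row echelon form."""
    rows = [[Fraction(x) for x in row] for row in M]
    r = 0  # index of the next pivot row
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def classify(A, b):
    """Classify the system AX = B from r(A) and r(A|B)."""
    n = len(A[0])  # number of unknowns
    augmented = [row + [bi] for row, bi in zip(A, b)]
    r_a, r_ab = rank(A), rank(augmented)
    if r_a != r_ab:
        return "inconsistent"
    if r_a == n:
        return "unique solution"
    return f"infinitely many solutions, {n - r_a} arbitrary unknown(s)"


print(classify([[1, 1], [1, -1]], [2, 0]))   # unique solution
print(classify([[1, 1], [2, 2]], [2, 4]))    # infinitely many solutions, 1 arbitrary unknown(s)
print(classify([[1, 1], [2, 2]], [2, 5]))    # inconsistent
```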
