
University of Sharjah - DBA Quantitative Business Research Methods II

Chapter 7: Factor Analysis

Contents

▪ Factor Analysis Assumptions

▪ Principal Component Analysis (PCA)

▪ Principal Axis Factoring (PAF)

▪ Rotation methods (i.e., Orthogonal and Oblique)

▪ Numerical, Ordinal, Nominal variables

▪ Categorical Principal Components Analysis (CATPCA)

▪ Reverse-coded variables (Appendix)

Factor Analysis

Objective: Factor analysis is a variable reduction technique where a large number of

variables (namely, columns of a data set; please see Table 1 below) can be summarized

into a smaller set of factors. Factor analysis can also be used to identify

interrelationships between variables in a data set.

Note: Factor analysis is based on correlation analysis.

Factor analysis draws on the assumption that all variables correlate to some degree. Hence, variables that express the same or similar concepts are likely to be highly correlated. The role of factor analysis is to identify factors, that is, groups of highly correlated variables.

Attention: Suppose we want to run a regression analysis after factor analysis. In that case, we do not enter the original variables into the regression model; we enter the factors (latent variables) instead.

Please note that factor analysis often (though not always) reduces multicollinearity (an advantage), but it also reduces $R^2$ (a disadvantage). Factor analysis is not recommended when the regression model's $R^2 < 0.5$.

Dr. Panagiotis Zervopoulos 1


To be confident that multicollinearity has been addressed after extracting factors from

the original variables, you can rerun the regression model using the factors as

independent variables instead of the original variables.
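As a sketch of that check, assuming the variance inflation factor (VIF) as the multicollinearity diagnostic (the text above does not name one): with only two predictors, each has VIF = 1 / (1 − r²), where r is their correlation. For two standardized variables, the principal component scores are (z1 + z2)/√2 and (z1 − z2)/√2, which are uncorrelated by construction, so their VIF is 1. The data and function names below are hypothetical; in SPSS, VIFs are reported by the regression's collinearity diagnostics.

```python
import math


def standardize(x):
    """Return z-scores using the sample standard deviation (n - 1)."""
    n = len(x)
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
    return [(v - mean) / sd for v in x]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors of a two-predictor regression."""
    r = pearson(x1, x2)
    return 1.0 / (1.0 - r * r)


# Hypothetical, strongly correlated predictors
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [1.2, 1.9, 3.1, 4.2, 4.8, 6.1, 7.0, 7.9]

z1, z2 = standardize(x1), standardize(x2)
pc1 = [(a + b) / math.sqrt(2) for a, b in zip(z1, z2)]  # first component scores
pc2 = [(a - b) / math.sqrt(2) for a, b in zip(z1, z2)]  # second component scores

print(round(vif_two_predictors(x1, x2), 1))    # large: severe multicollinearity
print(round(vif_two_predictors(pc1, pc2), 6))  # about 1: components are uncorrelated
```

PCA component scores remain uncorrelated after an orthogonal rotation such as Varimax, whereas scores from an oblique rotation such as Promax can be correlated, so their VIFs can exceed 1.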

Further reading on cases where factor analysis fails to reduce multicollinearity

Hadi, A. S., & Ling, R. F. (1998). Some cautionary notes on the use of principal components regression. The American Statistician, 52(1), 15–19.

Table 1. Sample questionnaire

Factors or latent variables: Q1 Q2 Q3

Variables V6 V7 V8 V9 V10 V11 V12 V13 V14 V15 V16 V17

R1 4 5 4 4 4 4 5 5 5 4 4 5

R2 5 5 5 5 5 5 5 5 5 4 5 4

R3 5 5 5 5 5 5 5 5 5 3 4 4

R4 5 5 5 5 5 5 5 5 5 4 5 4

R5 5 5 5 5 5 5 5 5 5 5 4 5

R6 4 5 5 5 4 5 5 5 5 5 5 5

R7 5 5 5 4 4 5 5 5 5 5 5 5

R8 4 4 4 4 4 4 4 4 4 4 4 5

R9 4 4 5 5 5 5 4 4 4 4 4 5

R10 4 4 4 5 5 5 5 5 4 5 5 5

R11 5 5 5 5 5 5 5 5 5 5 5 5

R12 5 5 5 4 4 4 4 5 5 5 5 5

R13 4 5 5 5 5 5 5 5 5 3 4 3

R14 5 5 5 5 5 5 5 5 5 3 5 5

R15 5 5 5 5 5 5 5 5 5 3 5 5

Note: Latent variables (or factors) are not directly observed; they are inferred from other variables that are observed.

Steps to apply Factor Analysis

Factor analysis is applied in the following steps (SPSS performs all of them):

1. Construct a correlation matrix for all variables

2. Extract the initial factors. This requires choosing an extraction method; the two basic methods for extracting factors are:

(a) the Principal Component Analysis (PCA)

(b) the Principal Axis Factoring (PAF)


3. Rotate the extracted factors to reach a final (optimal) solution. There are two families of factor-rotation methods:

(a) Orthogonal rotation (e.g., Varimax, Quartimax, Equamax).

Note: Orthogonal rotation methods assume that the factors are not correlated.

(b) Oblique rotation (e.g., Direct Oblimin, Promax).

Note: Oblique rotation methods allow the factors to be correlated.

Attention: In social science research, oblique rotation is preferred because factors are usually correlated.
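Step 1 can be illustrated outside SPSS with a minimal, standard-library Python sketch that builds the Pearson correlation matrix from the columns of a data set. The three columns are hypothetical answers, not one of the chapter's data sets, and the function names are ours.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(columns):
    """Return the k x k Pearson correlation matrix of k variables (columns)."""
    k = len(columns)
    return [[1.0 if i == j else pearson(columns[i], columns[j]) for j in range(k)]
            for i in range(k)]


# Hypothetical 5-point answers of six participants to three questions
v1 = [4, 5, 3, 5, 2, 4]
v2 = [4, 5, 2, 5, 1, 4]
v3 = [3, 1, 4, 2, 5, 3]

R = correlation_matrix([v1, v2, v3])
for row in R:
    print(" ".join(f"{r:6.3f}" for r in row))
```

Here v1 and v2 move together (a strong positive correlation), while v3 moves against them, so v1 and v2 would be candidates for the same factor.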

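Question: What is factor rotation, and why do we use it?

Answer: We rotate the factor axes so that the factors fit the actual variables better, i.e., so that each variable loads highly on as few factors as possible, which makes the factors easier to interpret (the figure above shows a two-factor analysis). Rotation does not change how much of each variable's variance the retained factors explain in total; it only redistributes that variance among the factors.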
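To make rotation concrete, here is a minimal Python sketch of Varimax for the special case of two factors, where the optimal rotation angle has a closed form (Kaiser's pairwise formula). It applies Kaiser normalization, which SPSS uses by default, but it is an illustration under these assumptions, not SPSS's implementation (with more than two factors, Varimax iterates over factor pairs). The loading matrix is hypothetical: a clean two-cluster structure turned by 0.5 radians.

```python
import math


def varimax_two_factors(loadings):
    """Varimax rotation (with Kaiser normalization) of a p x 2 loading matrix.

    Uses the closed-form rotation angle available when there are only two
    factors. Every row must have a non-zero communality.
    """
    p = len(loadings)
    # Kaiser normalization: compute the angle from rows scaled to unit length
    norms = [math.hypot(a, b) for a, b in loadings]
    scaled = [(a / h, b / h) for (a, b), h in zip(loadings, norms)]
    u = [a * a - b * b for a, b in scaled]
    v = [2 * a * b for a, b in scaled]
    A, B = sum(u), sum(v)
    C = sum(x * x - y * y for x, y in zip(u, v))
    D = 2 * sum(x * y for x, y in zip(u, v))
    # Angle that maximizes the varimax criterion
    phi = math.atan2(D - 2 * A * B / p, C - (A * A - B * B) / p) / 4
    c, s = math.cos(phi), math.sin(phi)
    # Rotate the original (unscaled) loadings by phi
    return [(a * c + b * s, -a * s + b * c) for a, b in loadings]


# Hypothetical unrotated loadings: a simple structure turned by 0.5 rad
simple = [(0.9, 0.0), (0.8, 0.0), (0.0, 0.85), (0.0, 0.7)]
angle = 0.5
unrotated = [(x * math.cos(angle) - y * math.sin(angle),
              x * math.sin(angle) + y * math.cos(angle)) for x, y in simple]

for before, after in zip(unrotated, varimax_two_factors(unrotated)):
    print([round(v, 3) for v in before], "->", [round(v, 3) for v in after])
```

Varimax turns the axes back by the same 0.5 radians, so each variable ends up loading on a single factor, while each variable's communality (the sum of its squared loadings) stays unchanged.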

Further Insight

▪ Principal Component Analysis (PCA) is appropriate if the sole purpose is to reduce the number of variables in the data set. In other words, we use PCA to obtain the minimum number of factors (groups of variables) needed to represent the original data set.

Attention: PCA assumes that there is no error in the data or the measurement. This is inconsistent with the social sciences, where measurement error exists.

Attention: The factors extracted using PCA have no theoretical support or validity of their own; they are justified only statistically.


Note: PCA is the most widely used factor extraction approach because it is less restrictive than other methods. However, there are many cases where PAF is preferred; in the social sciences, many scholars prefer PAF to PCA.

Note: If you select PCA, it is better to use an orthogonal rotation method, most commonly Varimax.

▪ Principal Axis Factoring (PAF) is appropriate if the objective is to identify

theoretically meaningful factors.

Attention: PAF allows for errors in the data or measurements.

Note: If you select PAF, it is better to use an oblique rotation, most commonly Promax. Note that Promax starts from an orthogonal (Varimax) solution and then relaxes the orthogonality constraint, allowing the factors to correlate.

Assumptions

Assumption #1: The variables should be continuous. However, it is common to use factor analysis with ordinal variables as well, and even with nominal variables, provided that only a few nominal variables are present in the data set. If a considerable number of nominal and/or ordinal variables is present, we can use Categorical Principal Components Analysis (CATPCA) instead.

Assumption #2: Sample size: $n \geq \max\{150 \text{ participants},\ 10 \times \text{number of variables}\}$

For instance, if the number of variables is 10, then $10 \times 10 = 100$. In that case, an adequate sample size for a reliable factor analysis is at least 150 (i.e., the maximum of 150 participants and 100).

Assumption #3: Normality. Each variable should be roughly normally distributed.

Note: Violations of this assumption do not distort the quality of the

factor analysis results. Factor analysis is fairly robust against

violations of the normality assumption.

Assumption #4: Linearity. Roughly linear relationships should be present between the

variables.

Note: This assumption comes from correlation analysis, which is the foundation of factor analysis.


Assumption #5: No extreme outliers. The presence of extreme observations can distort

the factor analysis results.

Note: Assumptions #1 and #2 are met by the study design, while assumptions #3, #4,

and #5 are tested using SPSS.
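Assumption #2's rule of thumb is a one-line computation; the minimal Python sketch below encodes it. The defaults 150 and 10 come from the rule above, and the function name is ours.

```python
def minimum_sample_size(num_variables, floor=150, per_variable=10):
    """Rule of thumb from Assumption #2: n >= max{150, 10 x number of variables}."""
    return max(floor, per_variable * num_variables)


for k in (5, 10, 15, 25):
    print(k, "variables ->", minimum_sample_size(k), "participants")
```

With 25 variables, for example, the ten-per-variable part of the rule takes over and the minimum becomes 250 participants.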

Factor Analysis – Principal Component Analysis (PCA)

Example: Data set #24 – Factor Analysis

Figure 1a. Data set #24


Figure 2a. Data set #24 – Factor analysis Assumptions

Figure 3a. Data set #24 – Factor analysis Assumptions


Figure 4a. Data set #24 – Factor analysis Assumptions

Figure 5a. Data set #24 – Factor analysis Assumptions – Boxplots / Outliers

Note: There are no extreme outliers (SPSS marks extreme outliers with asterisks in boxplots).
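SPSS boxplots mark as extreme (asterisks) the cases lying more than three box lengths (interquartile ranges, IQR) beyond the edges of the box. A minimal Python sketch of the same rule follows. The data are hypothetical, and because the sketch computes quartiles with `statistics.quantiles` (inclusive method) rather than the Tukey hinges SPSS uses for boxplots, borderline cases may be classified differently.

```python
import statistics


def extreme_outliers(values, k=3.0):
    """Return (index, value) pairs lying more than k * IQR beyond the quartiles.

    k = 3 mirrors the 'extreme' category of a boxplot; k = 1.5 gives the
    milder 'outlier' fences.
    """
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [(i, v) for i, v in enumerate(values) if v < low or v > high]


scores = [1, 2, 2, 3, 3, 3, 4, 4, 5, 40]  # hypothetical variable with one extreme value
print(extreme_outliers(scores))  # [(9, 40)]
```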


Figure 6a. Data set #24 – Factor analysis Assumptions – Normality

Note: As shown in the figure above, the normality assumption is violated; both the Kolmogorov-Smirnov and the Shapiro-Wilk tests confirm this. However, since the sample size exceeds 100 observations, we can rely on the Central Limit Theorem: the sampling distributions of the estimates are approximately normal, even though the data themselves are not. Moreover, factor analysis is fairly robust against violations of the normality assumption (see Assumption #3).

Tip: The sample size should be as large as possible and never fewer than 100 observations.

Figure 7a. Data set #24 – Factor analysis


Figure 8a. Data set #24 – Factor analysis

Figure 9a. Data set #24 – Factor analysis


Figure 10a. Data set #24 – Factor analysis / PCA

Figure 11a. Data set #24 – Factor analysis / PCA - Varimax

Note: Since we selected the Principal Component Analysis (PCA) method, the Varimax

orthogonal factor-rotation method is the most appropriate.


Figure 12a. Data set #24 – Factor analysis / PCA – Correlation coefficients cut-off

Note: We change the "Absolute value below" setting from .10 (the default) to .30, so that coefficients smaller than .30 in absolute value are hidden from the output. This simplifies the output and makes it easier to interpret. If you are concerned about the information hidden by this change, rerun the factor analysis with the default value and compare the results.

Figure 13a. Data set #24 – Factor analysis / PCA


Note: Since several of the correlations in the Correlation Matrix above (Figure 13a) are higher than 0.3 in absolute value, the data are suitable for factor analysis.

Note: If one or more variables have no correlation above 0.3 (in absolute value) with any other variable, it is better to remove them from the factor analysis, as they are not suitable.

How to remove a variable from the factor analysis: go back to Figure 8a and move the variable whose correlations with all the remaining variables are lower than 0.3 back to the left-hand box.
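The removal rule above can be sketched as a scan of the correlation matrix: a variable is flagged when none of its correlations with the other variables reaches 0.3 in absolute value. The matrix and the function name below are hypothetical.

```python
def weakly_correlated_variables(corr, names, threshold=0.3):
    """Return the names of variables whose absolute correlations with all
    other variables are below the threshold (candidates for removal)."""
    weak = []
    for i, name in enumerate(names):
        others = [abs(corr[i][j]) for j in range(len(names)) if j != i]
        if max(others) < threshold:
            weak.append(name)
    return weak


# Hypothetical correlation matrix for four questions
names = ["Q1", "Q2", "Q3", "Q4"]
corr = [
    [1.00, 0.62, 0.45, 0.12],
    [0.62, 1.00, 0.51, -0.08],
    [0.45, 0.51, 1.00, 0.21],
    [0.12, -0.08, 0.21, 1.00],
]
print(weakly_correlated_variables(corr, names))  # ['Q4']
```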

Figure 14a. Data set #24 – Factor analysis / PCA

Note: The Kaiser-Meyer-Olkin (KMO) measure and Bartlett's test provide information about the suitability of the data for factor analysis. They add to the information from the correlation matrix in Figure 13a about the factorability of the data.

Specifically, the KMO measure (ranging between 0 and 1) compares the size of the observed correlations with the size of the partial correlations. Values close to 1 indicate that the variables share a large proportion of common variance that factors could explain, so higher values are better. Generally, KMO values ≤ 0.5 are unacceptable, indicating that the variables are not appropriate for factor analysis, while KMO values ≥ 0.6 are regarded as suitable for factor analysis.

Please see Figure 16a for further details about the suitability of each individual variable for factor analysis.

Attention: If the overall KMO measure is ≤ 0.5, check the Anti-Image Correlation matrix before deciding against factor analysis; its diagonal values are the KMO measures of the individual variables (e.g., Figure 16a). There, you may find specific variables with Anti-Image Correlation (diagonal) scores ≤ 0.5, or even < 0.6 (Tip: variables with a KMO measure between 0.5 and 0.6 are still suitable for factor analysis, but weak). Variables with scores ≤ 0.5 are not suitable for factor analysis and can be removed from the set of variables used in the analysis.
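To show where these numbers come from, the sketch below computes the overall and per-variable KMO measures from a correlation matrix using the standard formula KMO = Σr²ᵢⱼ / (Σr²ᵢⱼ + Σp²ᵢⱼ) over all i ≠ j, where pᵢⱼ are the partial correlations obtained from the inverse correlation matrix (SPSS's off-diagonal anti-image correlations are their negatives). Restricting the sums to one variable's row gives that variable's KMO, i.e., the diagonal of the Anti-Image Correlation matrix. It is a standard-library Python sketch with a hypothetical matrix; the inversion uses plain Gauss-Jordan elimination.

```python
import math


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (e.g., perfectly correlated variables)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Return (overall KMO, list of per-variable KMO values)."""
    n = len(corr)
    inv = invert(corr)
    # Partial correlations: p_ij = -inv_ij / sqrt(inv_ii * inv_jj)
    partial = [[-inv[i][j] / math.sqrt(inv[i][i] * inv[j][j]) for j in range(n)]
               for i in range(n)]
    r2 = [[corr[i][j] ** 2 if i != j else 0.0 for j in range(n)] for i in range(n)]
    p2 = [[partial[i][j] ** 2 if i != j else 0.0 for j in range(n)] for i in range(n)]
    per_variable = [sum(r2[i]) / (sum(r2[i]) + sum(p2[i])) for i in range(n)]
    total_r2 = sum(map(sum, r2))
    total_p2 = sum(map(sum, p2))
    return total_r2 / (total_r2 + total_p2), per_variable


# Hypothetical correlation matrix: three variables, all pairwise r = 0.5
R = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
overall, individual = kmo(R)
print(round(overall, 4), [round(v, 4) for v in individual])  # 0.6923 [0.6923, 0.6923, 0.6923]
```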

Bartlett's test of sphericity (which tests whether the correlation matrix is an identity matrix, i.e., whether the variables are uncorrelated) also indicates how suitable the data are for factor analysis. If Bartlett's test is significant ($p < 0.05$), the data are suitable for factor analysis. If Bartlett's test is not significant ($p \geq 0.05$), the data are not suitable for factor analysis.

$H_0$: all correlations between the variables are equal to zero (the correlation matrix is an identity matrix)

$H_1$: at least one correlation between the variables is not equal to zero

Interpretation: Both the KMO measure and Bartlett's test of sphericity suggest that the data are suitable for factor analysis.
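Bartlett's statistic is χ² = −(n − 1 − (2p + 5)/6) · ln|R|, with p(p − 1)/2 degrees of freedom, where n is the number of observations, p the number of variables, and |R| the determinant of the correlation matrix. The standard-library Python sketch below computes it together with the chi-square p-value (through the regularized incomplete gamma function, in the style of Numerical Recipes) for a hypothetical matrix; with SciPy available, `scipy.stats.chi2.sf` would give the p-value directly.

```python
import math


def log_determinant(matrix):
    """ln det(R) for a positive-definite matrix, by Gaussian elimination."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    total = 0.0
    for k in range(n):
        if a[k][k] <= 0:
            raise ValueError("matrix is not positive definite")
        total += math.log(a[k][k])
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
    return total


def upper_gamma_regularized(a, x, eps=1e-15, max_iter=1000):
    """Q(a, x), the regularized upper incomplete gamma function."""
    if x <= 0:
        return 1.0
    log_prefactor = -x + a * math.log(x) - math.lgamma(a)
    if x < a + 1:
        # Series for the lower function P(a, x); then Q = 1 - P
        term = total = 1.0 / a
        denom = a
        for _ in range(max_iter):
            denom += 1
            term *= x / denom
            total += term
            if abs(term) < abs(total) * eps:
                break
        return 1.0 - total * math.exp(log_prefactor)
    # Continued fraction for Q(a, x) (modified Lentz method)
    tiny = 1e-300
    b = x + 1 - a
    c = 1 / tiny
    d = 1 / b
    h = d
    for i in range(1, max_iter):
        an = -i * (i - a)
        b += 2
        d = an * d + b
        if abs(d) < tiny:
            d = tiny
        c = b + an / c
        if abs(c) < tiny:
            c = tiny
        d = 1 / d
        delta = d * c
        h *= delta
        if abs(delta - 1) < eps:
            break
    return math.exp(log_prefactor) * h


def chi2_sf(x, df):
    """P(X > x) for a chi-square variable with df degrees of freedom."""
    return upper_gamma_regularized(df / 2, x / 2)


def bartlett_sphericity(corr, n_obs):
    """Bartlett's test of sphericity: returns (chi-square, df, p-value)."""
    p = len(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log_determinant(corr)
    df = p * (p - 1) // 2
    return chi2, df, chi2_sf(chi2, df)


# Hypothetical: three variables, all pairwise r = 0.5, n = 150 participants
R = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
chi2, df, p_value = bartlett_sphericity(R, 150)
print(round(chi2, 2), df, p_value < 0.05)  # significant: the data are factorable
```

For uncorrelated variables the correlation matrix is the identity, ln|R| = 0, the statistic is 0, and the p-value is 1, so $H_0$ cannot be rejected.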

Figure 15a. Data set #24 – Factor analysis / PCA

Figure 16a. Data set #24 – Factor analysis / PCA


Note: KMO measures for individual variables are found on the diagonal of the Anti-Image Correlation matrix above (see Figure 16a; highlighted values), and they should ideally be ≥ 0.6. However, given that the overall KMO measure (see Figure 14a) suggests that the variables are suitable for factor analysis, we could also include variables with diagonal scores between 0.5 and 0.6, even though they are regarded as weak.

Attention: Variables with low KMO scores (i.e., ≤ 0.5) are sometimes reverse coded. Hence, before removing a variable with a low KMO (diagonal) score, check whether it is reverse coded. Details about reverse coding are found in the appendix of this chapter.

Figure 17a. Data set #24 – Factor analysis / PCA


Figure 18a. Data set #24 – Factor analysis / PCA

Note: Communalities express the proportion of each variable's variance that can be explained by the factors. The first column, "Initial", shows each variable's variance explained when as many factors are extracted as there are variables.

Attention: The initial values all equal one because PCA analyzes the total variance of each (standardized) variable and treats no part of it as error; with as many components as variables, all of each variable's variance is reproduced.

Note: The right-hand column, "Extraction", presents each variable's variance explained by the extracted factors, which are fewer than the variables. As expected, the variance explained is now lower than in the "Initial" column.
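In code, a communality is simply the sum of a variable's squared loadings on the retained factors. The minimal Python sketch below uses a hypothetical loading matrix. Its second part illustrates why the "Initial" column of a PCA contains ones: with two standardized variables correlated at r, the two components have loadings √((1 + r)/2) and ±√((1 − r)/2), and their squares add up to 1 for each variable.

```python
import math


def communalities(loadings):
    """Communality of each variable: sum of its squared loadings on the retained factors."""
    return [sum(l * l for l in row) for row in loadings]


# Hypothetical loadings of four variables on two extracted components
loadings = [
    [0.60, 0.70],
    [0.90, 0.10],
    [0.20, 0.70],
    [0.50, 0.50],
]
h2 = communalities(loadings)
print([round(v, 2) for v in h2])  # [0.85, 0.82, 0.53, 0.5]

# All components of a two-variable PCA retained (r = 0.6): communalities equal 1
r = 0.6
full = [[math.sqrt((1 + r) / 2), math.sqrt((1 - r) / 2)],
        [math.sqrt((1 + r) / 2), -math.sqrt((1 - r) / 2)]]
print(communalities(full))  # both equal 1 up to floating-point rounding
```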

Figure 19a. Data set #24 – Factor analysis / PCA

Note: Eigenvalues show the amount of variance explained by each factor (component). The table above helps us decide how many factors should be retained: factors with Eigenvalues > 1.0 should be retained. In this example, we keep two factors from the original five variables. As shown in Figure 19a, these two factors explain 92% of the total variance.

We also find that after rotation (Orthogonal, Varimax; see the last set of columns on the right side of Figure 19a), the total explained variance is unchanged at 92%; rotation only redistributes it between the two factors.
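The Eigenvalue criterion and the % of variance column can be reproduced from a correlation matrix: in PCA, the eigenvalues of the correlation matrix sum to the number of variables p, so each component explains λ/p of the total variance. The sketch below is a dependency-free Python illustration using the cyclic Jacobi eigenvalue method (chosen only to avoid third-party libraries; `numpy.linalg.eigvalsh` would be the usual choice) on a hypothetical matrix.

```python
import math


def jacobi_eigenvalues(matrix, tol=1e-20, max_sweeps=100):
    """Eigenvalues of a symmetric matrix by the cyclic Jacobi method (descending)."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation that zeroes the off-diagonal element a[p][q]
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def kaiser_criterion(corr):
    """Return eigenvalues, number of factors with eigenvalue > 1, and cumulative % variance."""
    eigenvalues = jacobi_eigenvalues(corr)
    p = len(corr)  # trace of a correlation matrix = number of variables
    retained = sum(1 for e in eigenvalues if e > 1.0)
    cumulative_pct = 100 * sum(eigenvalues[:retained]) / p
    return eigenvalues, retained, cumulative_pct


R = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]  # hypothetical
eigenvalues, k, pct = kaiser_criterion(R)
print([round(e, 3) for e in eigenvalues], k, round(pct, 2))  # [2.0, 0.5, 0.5] 1 66.67
```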

Figure 20a. Data set #24 – Factor analysis / PCA (Scree Plot, with the inflection point marked)

Note: The Scree Plot is a graph of the Eigenvalues; it is another tool for deciding how many factors should be retained.

We retain the factors that lie before the last inflection point of the graph. The inflection point is where the graph begins to level off, so that subsequent components add little to the total variance.

Attention: It is common for the Eigenvalue criterion and the Scree Plot to lead to different results, in which case a subjective decision is needed.

Interpretation: Based on the Eigenvalue table above (see Figure 19a) and the Scree Plot (in Figure 20a, the last inflection point is at the third component, so the two factors before it could be retained), we retain two factors.


Figure 21a. Data set #24 – Factor analysis / PCA


Figure 22a. Data set #24 – Factor analysis / PCA

Interpretation: Based on the Rotated Component (or Factor) matrix (rotation method:

Orthogonal – Varimax), there are two factors. The first factor consists of the first three

variables (questions) shown in Figure 22a, and the second factor includes the last two

questions.

▪ Considering the questions in factor 1 (column #1), we could give a name to the

factor (latent variable), e.g., “Smoking freedom” or another related title.


▪ Also, taking into account the questions in factor 2 (column #2), the name for the

second factor could be: “Smoke in restaurants” or another related title.

Figure 23a. Data set #24 – Factor analysis / PCA

Note: Going back to the Data set in SPSS, two new columns are added: FAC1_1 and

FAC2_1 referring to factor #1 and factor #2, respectively.

Attention: If you decide to run a regression analysis using these survey data, you

should introduce the two factors (latent variables) in the regression model instead of

the five variables.


Figure 24a. Data set #24 – Factor analysis / PCA – Setting the number of factors (user-defined process)

Attention: If you want to set the number of factors yourself (e.g., you prefer a smaller number of factors in your analysis), follow the same pathway for running factor analysis in SPSS and select the Extraction option. As shown in Figure 24a, select “Fixed number of factors” instead of “Based on Eigenvalues”, set the number of factors to extract in the highlighted box, and then rerun the factor analysis.

Reporting: A Principal Components Analysis (PCA) was run on a five-question survey. The overall Kaiser-Meyer-Olkin (KMO) measure was 0.678, with individual KMO measures all greater than 0.5. Bartlett's test of sphericity was statistically significant ($p = 10^{-4}$), indicating that the data are suitable for factorization.

PCA indicated two factors with Eigenvalues greater than one, which were retained and together explained 92.02% of the total variance.


A Varimax orthogonal rotation was employed and yielded the following results:

Item                                                 Factor 1   Factor 2
I think people should have the right to smoke          .985
I think smoking is acceptable                          .983
I don't care if people smoke around me                 .939
I don't think people should smoke around food                      .939
I don't think people should smoke in restaurants                   .933

Factor Analysis – Principal Axis Factoring (PAF)

Another view of factor analysis

Suppose that the factors are correlated (given the content of the two factors extracted in the previous analysis, a relationship between them is plausible). Also, suppose that the data or measurements may contain errors.

We therefore apply Principal Axis Factoring (PAF) together with the Oblique (Promax) rotation method to Data set #24.

As shown below, the results are similar to those obtained from PCA with the Orthogonal (Varimax) rotation method. Specifically:


Figure 1b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)

Figure 2b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)


Figure 3b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)

Figure 3b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)


Figure 4b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)

Figure 5b. Data set #24 – Factor analysis Principal Axis Factoring (Promax)


Figure 6b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)

Figure 7b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)

Figure 8b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)


Figure 9b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)

Figure 10b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)

Figure 11b. Data set #24 – PCA (left-hand side) vs PAF (right-hand side)


Factor Analysis – Categorical Principal Component Analysis (CATPCA)

The Categorical Principal Component Analysis (CATPCA) or Nonlinear Principal

Components Analysis is an extension of the conventional Principal Component

Analysis for categorical (i.e., ordinal or nominal) variables.

Advantages of CATPCA over the standard PCA

▪ CATPCA deals both with linear and nonlinear relationships

▪ CATPCA is particularly useful for variables measured on a Likert-type scale

▪ CATPCA can jointly analyze numeric, ordinal, and nominal variables, while PCA

solutions can only be interpreted when all variables are considered numeric.

Note: When all variables in the data set are numeric and linearly related, PCA and CATPCA yield the same results.

Assumptions

Assumption #1: The analysis is based on positive integer data (i.e., ordinal or nominal,

including dichotomous, or both ordinal and nominal).

Example: Data set #25 – Factor Analysis

Background information: Data set #25 consists of 25 ordinal variables (i.e., questions)

measured on a 7-point scale (i.e., 1 – Strongly agree; 2 – Agree; 3 – Agree somewhat;

4 – Undecided; 5 – Disagree somewhat; 6 – Disagree; 7 – Strongly disagree).


Figure 1c. Data set #25 – Factor analysis - CATPCA

Figure 2c. Data set #25 – Factor analysis - CATPCA


Figure 3c. Data set #25 – Factor analysis – CATPCA

Figure 4c. Data set #25 – Factor analysis - CATPCA


Figure 5c. Data set #25 – Factor analysis – CATPCA

Figure 6c. Data set #25 – Factor analysis – CATPCA


Figure 7c. Data set #25 – Factor analysis – CATPCA (Orthogonal rotation: Varimax)

Figure 8c. Data set #25 – Factor analysis – CATPCA


Figure 8.1.c. Data set #25 – Factor analysis – CATPCA

Figure 9c. Data set #25 – Factor analysis – CATPCA


Figure 10c. Data set #25 – Factor analysis – CATPCA (Dimensions: 2)

Figure 11c. Data set #25 – Factor analysis - CATPCA

Note: We apply the Eigenvalue criterion, i.e., we retain the factors (called dimensions in the CATPCA context) with Eigenvalue > 1.0. As shown in the figure above, the first and second dimensions have Eigenvalues of 7.808 and 4.237, respectively, both considerably higher than 1.0.

Note: In the figure above, Cronbach's Alpha is a measure of internal consistency (details about this measure are provided in the Reliability Analysis chapter). Values (reliability coefficients) greater than 0.7 are considered acceptable.
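The classic Cronbach's Alpha formula, α = k/(k − 1) · (1 − Σs²ᵢ / s²ₜ), with k items, item variances s²ᵢ, and the variance s²ₜ of the total score, is sketched below in Python on hypothetical item scores. CATPCA reports an Alpha for each dimension computed from its own model, so this raw-score version illustrates the measure rather than reproducing the CATPCA output.

```python
def variance(x):
    """Sample variance (n - 1 denominator)."""
    n = len(x)
    mean = sum(x) / n
    return sum((v - mean) ** 2 for v in x) / (n - 1)


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item-score columns (one list per item)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical 5-point answers of six respondents to three items
items = [
    [4, 5, 3, 5, 2, 4],
    [4, 5, 2, 5, 1, 4],
    [5, 5, 3, 4, 2, 4],
]
alpha = cronbach_alpha(items)
print(round(alpha, 3), "acceptable" if alpha > 0.7 else "questionable")
```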

Interpretation: Both dimensions have relatively high internal consistency. Also, the two (user-defined) dimensions account for 48.18% of the variance of the original variables. Since the Eigenvalues of both dimensions are greater than 1.0 and the percentage of variance they explain is low, we can increase the number of dimensions to account for more of the variance.


Figure 12c. Data set #25 – Factor analysis - CATPCA

Note: We increase the number of dimensions to 3.

Figure 13c. Data set #25 – Factor analysis - CATPCA

Note: Given that the Eigenvalues of the three user-defined dimensions (or factors) are greater than 1.0 and that the % of variance they explain is considered low (i.e., 58.34%), we can further increase the number of dimensions.


Figure 14c. Data set #25 – Factor analysis – CATPCA (Eigenvalues & % of Variance explained)

Note: After a few trials with different numbers of dimensions, we decide that the optimal number is 8, which explains 78.3% of the total variance. For instance, when we requested a ninth dimension as well, the % of variance explained increased, but the Eigenvalue of the ninth dimension was lower than one, which is not acceptable.


Item   Dim. 1   Dim. 2   Dim. 3   Dim. 4   Dim. 5   Dim. 6   Dim. 7   Dim. 8

Q3 0.841 0.114 -0.026 0.094 0.128 0.062 0.161 0.061

Q6 0.815 0.150 -0.009 0.120 0.063 0.017 0.005 0.017

Q4 0.792 0.114 0.177 0.055 0.179 0.051 0.026 0.012

Q13 0.758 0.110 -0.033 0.063 0.133 0.132 0.166 0.143

Q5 0.744 0.081 0.126 -0.053 0.112 0.011 -0.254 0.026

Q12 0.686 0.007 0.032 -0.027 0.426 0.397 -0.066 0.005

Q9 0.597 -0.001 0.130 0.074 0.429 0.278 -0.169 -0.139

Q15 0.119 0.834 0.051 -0.084 0.080 0.271 0.195 -0.088

Q16 0.160 0.819 0.142 0.058 -0.020 -0.089 -0.185 0.090

Q14 0.196 0.800 0.022 -0.066 -0.081 0.277 0.207 -0.173

Q17 0.054 0.772 0.104 -0.025 -0.061 0.119 0.011 0.288

Q18 0.047 0.673 0.149 0.008 0.270 0.355 -0.195 0.225

Q24 0.051 0.011 0.872 -0.076 -0.121 -0.076 0.052 0.060

Q25 0.022 0.114 0.854 0.072 0.076 0.135 -0.027 0.113

Q23 0.135 0.209 0.790 0.084 0.073 -0.012 0.150 0.047

Q10 0.170 -0.094 0.066 0.899 -0.008 0.016 -0.056 -0.014

Q1 0.059 0.012 0.029 0.889 0.000 0.001 -0.097 0.000

Q11 -0.001 0.006 -0.045 0.827 0.085 -0.024 0.104 0.026

Q8 0.374 0.019 0.024 0.095 0.867 0.009 -0.023 -0.016

Q7 0.416 0.016 -0.032 -0.005 0.827 0.056 0.098 -0.018

Q19 0.144 0.349 0.069 -0.040 0.159 0.814 -0.136 0.047

Q2 0.263 0.332 -0.058 0.031 -0.062 0.792 0.169 -0.056

Q22 0.041 0.005 0.598 -0.010 0.038 0.047 0.677 0.072

Q21 -0.005 0.081 0.502 -0.116 0.026 -0.082 0.567 0.430

Q20 0.145 0.162 0.220 0.032 -0.050 0.002 0.096 0.868

Note: Loadings higher than 0.40 are highlighted

Figure 15c. CATPCA – Loadings (coefficients)

Note: Using CATPCA, we reduced the number of variables from 25 to 8. In the CATPCA context, the factors or dimensions are the new variables that will be entered into a regression model as explanatory (independent) variables.

The left-hand table in Figure 15c reports each variable's loading on each dimension. In the right-hand table, we highlight the loadings greater than 0.4, which are the most influential for each dimension; based on these values, we can name each dimension.
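The 0.40 highlighting rule can be sketched in Python using a few rows copied from the loading table above (Figure 15c); the function name is ours. The output shows that most variables exceed 0.40 on a single dimension, while some (here Q12 and Q21) cross-load on several dimensions, which calls for judgment when naming the dimensions.

```python
def dimensions_above(loadings, threshold=0.40):
    """Map each variable to the (1-based) dimensions where |loading| > threshold."""
    return {name: [d + 1 for d, value in enumerate(row) if abs(value) > threshold]
            for name, row in loadings.items()}


# A few rows copied from the CATPCA loading table (Figure 15c)
loadings = {
    "Q3":  [0.841, 0.114, -0.026, 0.094, 0.128, 0.062, 0.161, 0.061],
    "Q15": [0.119, 0.834, 0.051, -0.084, 0.080, 0.271, 0.195, -0.088],
    "Q24": [0.051, 0.011, 0.872, -0.076, -0.121, -0.076, 0.052, 0.060],
    "Q12": [0.686, 0.007, 0.032, -0.027, 0.426, 0.397, -0.066, 0.005],
    "Q21": [-0.005, 0.081, 0.502, -0.116, 0.026, -0.082, 0.567, 0.430],
}
for name, dims in dimensions_above(loadings).items():
    suffix = " (cross-loading)" if len(dims) > 1 else ""
    print(f"{name}: {dims}{suffix}")
```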

Further readings on CATPCA

Linting, M., & van der Kooij, A. (2012). Nonlinear principal components analysis with CATPCA: A tutorial. Journal of Personality Assessment, 94(1), 12–25. [see attached paper #12]


Kemalbay, G., & Korkmazoglu, O. B. (2014). Categorical principal component logistic

regression: A case study for housing loan approval. Procedia – Social and

Behavioral Sciences, 109, 730–736. [see attached paper #11]

Appendix

Reverse coded variables

Reverse coding commonly applies to survey items (i.e., questions) with “negative”

meaning. For instance,

a. When we try to validate the consistency of the survey participants’ answers, it is

common to rephrase a “positive” question in a “negative” way. Example:

1. I would like to have more quantitative methods courses in the DBA program

St. Disagree Disagree Neutral Agree St. Agree

1 2 3 4 5

2. I don’t believe that extra quantitative methods courses in the DBA program

would add any value to DBA candidates’ knowledge.

St. Disagree Disagree Neutral Agree St. Agree

1 2 3 4 5

Suppose a DBA candidate answers “5” to question #1 and “1” to question #2. Both answers convey the same meaning, so they are consistent; the scores, however, point in opposite directions. Therefore, if we used correlation analysis, the correlation coefficient between the two questions would be low (in fact, negative). To tackle this problem, we should reverse code question #2, so that its scale reads:

St. Disagree Disagree Neutral Agree St. Agree

5 4 3 2 1

After reverse coding, both answers and scores are consistent for both questions.


b. A survey item expresses a “negative” meaning, while most of the other items in the same survey have “positive” meanings. Consider the following questions:

1. I take responsibility for my decisions.

St. Disagree Disagree Neutral Agree St. Agree

1 2 3 4 5

2. I prefer to avoid difficulties.

St. Disagree Disagree Neutral Agree St. Agree

1 2 3 4 5

3. I have a clear plan for my future.

St. Disagree Disagree Neutral Agree St. Agree

1 2 3 4 5

If the interviewee is responsible for his/her decisions, doesn’t try to avoid

difficulties, and has a clear plan for his/her future, the answers provided could

be “5”, “1”, “5”.

For consistency of the answers, and if we want to use factor analysis to identify

any possible factors (latent variables), where high correlations matter, it’s

better to reverse code the scale for question #2. The reversed scale would read

as follows:

St. Disagree Disagree Neutral Agree St. Agree

5 4 3 2 1

The answers to the three questions would be: “5”, “5”, and “5”.
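Reverse coding is a simple transformation: on a scale from `low` to `high`, a score s becomes low + high − s (for the 1 to 5 scales above, 6 − s). The minimal Python sketch below, with hypothetical answers of five consistent respondents, shows how the correlation between a “positive” and a “negative” item flips from −1 to +1 after reverse coding. The function names are ours; for the 7-point scale of Data set #25, `low=1, high=7` would apply.

```python
import math


def reverse_code(scores, low=1, high=5):
    """Reverse-code a Likert item: on a 1-5 scale, 1<->5, 2<->4, and 3 stays 3."""
    return [low + high - s for s in scores]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical answers of five respondents to a "positive" and a "negative" item
item_positive = [5, 4, 2, 1, 3]
item_negative = [1, 2, 4, 5, 3]

print(round(pearson(item_positive, item_negative), 3))                # -1.0
print(round(pearson(item_positive, reverse_code(item_negative)), 3))  # 1.0
```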

Reverse coding in SPSS


Figure 1Ap. Data set #26

Figure 2Ap. Data set #26 - Coding


Figure 3Ap. Data set #26 – Reverse-Coding procedure

Figure 4Ap. Data set #26 – Reverse-Coding procedure


Figure 5Ap. Data set #26 – Reverse-Coding procedure

Figure 6Ap. Data set #26 – Reverse-Coding procedure


Figure 7Ap. Data set #26 – Reverse-Coding procedure

Figure 8Ap. Data set #26 – Reverse-Coding procedure


Figure 9Ap. Data set #26 – Reverse-Coding procedure

Figure 10Ap. Data set #26 – Reverse-Coding procedure


Figure 11Ap. Data set #26 – Reverse-Coding procedure


- Quantitative Asset Manage 102727754Document31 pagesQuantitative Asset Manage 102727754h_jacobson67% (3)

- 08 Factorial2 PDFDocument40 pages08 Factorial2 PDFOtono ExtranoNo ratings yet

- Introduction To EFA: Jennifer BrussowDocument30 pagesIntroduction To EFA: Jennifer BrussowEduard SondakhNo ratings yet

- 5 Root Cause Analysis Tools For More Effective ProblemDocument4 pages5 Root Cause Analysis Tools For More Effective ProblemAgus HariyantoNo ratings yet

- Makalah Statistika 2 Tentang MRA - MULTIPLE REGRESSION ANALYSIS (Data Primer)Document35 pagesMakalah Statistika 2 Tentang MRA - MULTIPLE REGRESSION ANALYSIS (Data Primer)JUAL MAKALAH MURAH100% (4)

- Program End Feedback - EmployerDocument3 pagesProgram End Feedback - EmployerRaghuvaranNo ratings yet

- Presentation, Analysis and Interpretation of DataDocument8 pagesPresentation, Analysis and Interpretation of DataEarl TamayoNo ratings yet

- 07-Functional Form and Functional AdequacyDocument16 pages07-Functional Form and Functional AdequacyKhumbulani MabhantiNo ratings yet

- Tally FinalDocument7 pagesTally FinalVany SyNo ratings yet

- Ordinal Logistic ModelDocument32 pagesOrdinal Logistic Modeljiregnataye7No ratings yet

- To PrankDocument55 pagesTo PrankJobelle Cariño ResuelloNo ratings yet

- Table of ContentDocument2 pagesTable of ContentJemie Y't OneyorNo ratings yet

- Business Research Method: Factor AnalysisDocument52 pagesBusiness Research Method: Factor Analysissubrays100% (1)

- Malhotra 19Document37 pagesMalhotra 19Anuth SiddharthNo ratings yet

- Chapter Nineteen: Factor AnalysisDocument37 pagesChapter Nineteen: Factor AnalysisRon ClarkeNo ratings yet

- Malhotra 19Document37 pagesMalhotra 19Sunia AhsanNo ratings yet

- Statistik RikoDocument5 pagesStatistik Rikoriko arisaputraNo ratings yet

- Lecture Note - Week 10Document22 pagesLecture Note - Week 10josephNo ratings yet

- Linear Regression Makes Several Key AssumptionsDocument5 pagesLinear Regression Makes Several Key AssumptionsVchair GuideNo ratings yet

- Survey ResultDocument16 pagesSurvey ResultMelybelle LaurelNo ratings yet

- Decision Science - NMIMSDocument8 pagesDecision Science - NMIMSlucky.idctechnologiesNo ratings yet

- The Following Slides Are Not Contractual in Nature and Are For Information Purposes Only As of June 2015Document44 pagesThe Following Slides Are Not Contractual in Nature and Are For Information Purposes Only As of June 2015khldHA100% (1)

- Ratio Analysis - Most Comprehensive Guide (Excel Based)Document246 pagesRatio Analysis - Most Comprehensive Guide (Excel Based)Abhiron BhattacharyaNo ratings yet

- Factor AnalysisDocument25 pagesFactor AnalysisAshwath HegdeNo ratings yet

- Data AnalysissDocument9 pagesData AnalysissJudy Ann Alejandrino EspinoNo ratings yet

- Factor AnalysisDocument45 pagesFactor AnalysisKaran SinghNo ratings yet

- ANDRADE-LESLIE JOY E.-Activity-9 - Validation-of-Scale-Based-InstrumentDocument2 pagesANDRADE-LESLIE JOY E.-Activity-9 - Validation-of-Scale-Based-InstrumentLESLIE JOY ANDRADENo ratings yet

- UEQ Data PKLDocument122 pagesUEQ Data PKLCheka Cakrecjwara Al KindiNo ratings yet

- Application of It in Logistics Activities: Survey of Efficiency and Impact AnalysisDocument6 pagesApplication of It in Logistics Activities: Survey of Efficiency and Impact AnalysisMalcolm ChristopherNo ratings yet

- Factor Analysis: © 2007 Prentice HallDocument33 pagesFactor Analysis: © 2007 Prentice Hallrakesh1983No ratings yet

- Facts About Factors: Paula Cocoma Megan Czasonis Mark Kritzman David TurkingtonDocument50 pagesFacts About Factors: Paula Cocoma Megan Czasonis Mark Kritzman David TurkingtontniravNo ratings yet

- BAIDocument5 pagesBAIArnand Gregory Dela CruzNo ratings yet

- Plantilla GRAFICA X-R AutomaticaDocument2 pagesPlantilla GRAFICA X-R AutomaticamERCYNo ratings yet

- Authentic Leadership of School Heads, Teacher Wellbeing, and Teamwork Attitudes As Constructs of Work Engagement: Structural Equation ModelingDocument3 pagesAuthentic Leadership of School Heads, Teacher Wellbeing, and Teamwork Attitudes As Constructs of Work Engagement: Structural Equation ModelingGeorgeLitoQuibolNo ratings yet

- Factor Analysis: Interdependence TechniqueDocument22 pagesFactor Analysis: Interdependence TechniqueAnu DeppNo ratings yet

- Activity 5 - Statistical Analysis and Design - Regression - CorrelationDocument29 pagesActivity 5 - Statistical Analysis and Design - Regression - CorrelationJericka Christine AlsonNo ratings yet

- Factor Analysis: Meaning of Underlying VariablesDocument8 pagesFactor Analysis: Meaning of Underlying VariablesKritika Jaiswal100% (1)

- Lab Manual Ds&BdalDocument100 pagesLab Manual Ds&BdalSEA110 Kshitij BhosaleNo ratings yet

- Present Value Annuity Tables Formula: PV (1-1 / (1 + I) ) / IDocument1 pagePresent Value Annuity Tables Formula: PV (1-1 / (1 + I) ) / IVarisha Nawaz100% (1)

- Active Learning - Creating Excitement in The Classroom - HandoutDocument262 pagesActive Learning - Creating Excitement in The Classroom - HandoutHelder Prado100% (1)

- 1st Quarter Diagnostic Test in Practical Research 2Document6 pages1st Quarter Diagnostic Test in Practical Research 2ivyNo ratings yet

- JMK (Jurnal Manajemen Dan Kewirausahaan) : JMK 5 (3) 2020, 173-182 P-ISSN 2477-3166 E-ISSN 2656-0771Document10 pagesJMK (Jurnal Manajemen Dan Kewirausahaan) : JMK 5 (3) 2020, 173-182 P-ISSN 2477-3166 E-ISSN 2656-0771Kinasih Rahma DeeaNo ratings yet

- Patton - All Reading ReflectionDocument14 pagesPatton - All Reading Reflectionapi-336333217No ratings yet

- Critiques of Mixed Methods Research StudiesDocument18 pagesCritiques of Mixed Methods Research Studiesmysales100% (1)

- Case:1 Isabelle's Research Dilema: Prepared By: Sujan KhaijuDocument5 pagesCase:1 Isabelle's Research Dilema: Prepared By: Sujan KhaijuSujan KhaijuNo ratings yet

- Defining and Assessing Organizational Culture - Jennifer Bellot PHD 2011Document26 pagesDefining and Assessing Organizational Culture - Jennifer Bellot PHD 2011Luis Elisur ArciaNo ratings yet

- Employer BrandingDocument26 pagesEmployer BrandingsujithasukumaranNo ratings yet

- Performance Assessment Guide: Leadership Cycle 1: Analyzing Data To Inform School Improvement and Promote EquityDocument31 pagesPerformance Assessment Guide: Leadership Cycle 1: Analyzing Data To Inform School Improvement and Promote Equityokapi1245No ratings yet

- Venn DiagramDocument2 pagesVenn DiagramVelley Jay Casawitan100% (9)

- LAS Module 1 PR2 Ver 2 SecuredDocument6 pagesLAS Module 1 PR2 Ver 2 SecuredKhaira PeraltaNo ratings yet

- Methods For The Synthesis of Qualitative Research A Critical Review PDFDocument11 pagesMethods For The Synthesis of Qualitative Research A Critical Review PDFAlejandro Riascos GuerreroNo ratings yet

- Curriculm RevisionDocument17 pagesCurriculm RevisionNirupama KsNo ratings yet

- Factor Rating MethodDocument18 pagesFactor Rating Methoddarshana14894888No ratings yet

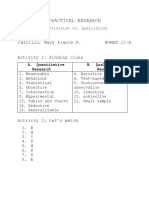

- Practical Research: Carrillo, Mary France P. HUMSS 12-A Activity 1: Finding CluesDocument5 pagesPractical Research: Carrillo, Mary France P. HUMSS 12-A Activity 1: Finding Cluesmary france carilloNo ratings yet

- Marketing at HCL Info SystemDocument68 pagesMarketing at HCL Info Systemcybercafec51No ratings yet

- USCP ModuleDocument69 pagesUSCP ModuleDennis Raymundo100% (1)

- Dabar IndiaDocument44 pagesDabar IndiaSachin Kumar SakriNo ratings yet

- Chapter 123 FinalDocument30 pagesChapter 123 FinalMaricar Oca de Leon0% (1)

- Cherry (1999) - The Female Horror Film Audience - Viewing Pleasures and Fan Practices PDFDocument285 pagesCherry (1999) - The Female Horror Film Audience - Viewing Pleasures and Fan Practices PDFmanuelNo ratings yet

- Consumers' Attitudes To Frozen Meat: Irlni Tzimitra - KalogianniDocument4 pagesConsumers' Attitudes To Frozen Meat: Irlni Tzimitra - KalogianniSiddharth Singh TomarNo ratings yet

- RESD2 Research Proposal 2Document7 pagesRESD2 Research Proposal 2Francis AbellarNo ratings yet

- MANASCI term3AY12-13Document6 pagesMANASCI term3AY12-13carlo21495No ratings yet

- Paradigms and Communication TheoryDocument14 pagesParadigms and Communication TheoryAdamImanNo ratings yet

- Grade Xii-Practical Research 2 First Quarter Prelim Examination (For Retakers Only)Document3 pagesGrade Xii-Practical Research 2 First Quarter Prelim Examination (For Retakers Only)Dabymalou Sapu-anNo ratings yet

- Research Methods and StatisticsDocument206 pagesResearch Methods and StatisticsProgress Sengera100% (1)

- Problem Set 5Document4 pagesProblem Set 5Luis CeballosNo ratings yet

- A New Age of Old AgeDocument15 pagesA New Age of Old AgeOlivera VukovicNo ratings yet