IOSR Journal of Computer Engineering (IOSR-JCE)

e-ISSN: 2278-0661,p-ISSN: 2278-8727, Volume 23, Issue 3, Ser. II (May – June 2021), PP 01-05

www.iosrjournals.org

Web Scraping with Python and Selenium

Sarah Fatima1, Shaik Luqmaan2, Nuha Abdul Rasheed3

1 (Department of Computer Science and Engineering, Muffakham Jah College of Engineering and Technology / Osmania University, India)

2 (Department of Computer Science and Engineering, Muffakham Jah College of Engineering and Technology / Osmania University, India)

3 (Department of Computer Science and Engineering, Muffakham Jah College of Engineering and Technology / Osmania University, India)

Abstract:

In this paper, we design a method for retrieving web information using Selenium and a Python script. Selenium is used to automate web browser interaction, and the Python script produces a block-based structure from the retrieved pages. Because web information is mostly unstructured, the proposed work helps to organize it and make it usable by various data analysis techniques. The paper also covers ways to persist and use data from websites for which APIs are not available.

Keywords: Web scraping; Selenium; Information Extraction; HTML Parsing; Web data retrieval.

---------------------------------------------------------------------------------------------------------------------------------------

Date of Submission: 02-06-2021 Date of Acceptance: 15-06-2021

---------------------------------------------------------------------------------------------------------------------------------------

I. Introduction

Web scraping is the process of extracting information from the World Wide Web (WWW) by writing automated scripts that query the desired web server, request data, and retrieve it using different parsing techniques [1]. Scraping helps transform unstructured HTML data into structured formats such as CSV files and spreadsheets. Because web data changes frequently, an easy-to-use language like Python, which accepts dynamic inputs, can be highly productive: the code is easily changed to keep up with the speed of web updates. Python libraries such as requests, pandas, csv, and Selenium's webdriver ease the process of fetching URLs and pulling information out of web pages, and of building scrapers that hop from one domain to another, gather information, and store it for later use. To automate web browser interaction, we use Selenium, an open-source tool with a single interface that can mimic human browsing behavior. In addition, numpy and pandas are used to process the data [2].

With this implementation, web data is transformed into structured blocks; the block-based structure is obtained by a Python script driving Selenium. The experimental work covers parsing the HTML code, installing Python and Selenium, Python scripting and interpretation, and structural extraction of web information. The evolving needs of internet and social media services call for a variety of techniques for extracting web data. Because web information is mostly unstructured, the proposed work helps to organize it and make it usable by various data analysis techniques.

Web Scraping and its applications:

Web scraping is the practice of gathering information automatically from a website using an application that simulates human web behavior. This is achieved by writing automated scripts that query the web server, request data, and transform it into structured formats such as CSV, spreadsheets, and JSON. The technique is widely used to persist data from websites for which APIs are not available. In practice, web scraping draws on a wide variety of programming techniques and technologies, such as data analysis, information retrieval, cyber security, and HTML parsing. Web scraping has applications across many domains, including:

● Collecting data from sites that do not provide an API. With web scraping, even a small, finite amount of data can be accessed via a Python script and stored in a database for further processing.

● Analyzing product data from social media platforms like Twitter and e-commerce sites like Amazon, i.e., big data and sentiment analysis.

● Using raw extracted data, such as text and images, to develop datasets and refine machine learning models.

● Observing customer sentiment by scraping customer feedback and reviews of different businesses, since visiting the various websites manually can be cumbersome.

DOI: 10.9790/0661-2303020105 www.iosrjournals.org

Scraping with Python and Selenium:

Web scraping often involves dealing with large amounts of data, and Python is one of the most favorable options for handling it: it has a relatively gentle learning curve and a vast set of libraries and frameworks such as NumPy, csv, and Selenium's webdriver. Using Selenium from Python has its benefits. Selenium is a browser automation framework, widely used for testing websites, that takes control of the browser and mimics actual human behavior through a web driver. With the majority of websites being JavaScript-heavy, Selenium provides an easy way to extract data: once the browser has rendered the page, its HTML can be queried by element id, XPath expressions, or CSS selectors.

II. Related Work

Before automated tools were available, people manually copied data from various websites and pasted it into a local file for analysis. Today, with technology advancing at a rapid pace, this manual method of extracting data is overwhelming and time-consuming. This is where web scraping is useful: it automates the data extraction process and transforms the data into the desired structured format.

Web Scraping Tools and Techniques

This section presents various tools and techniques used for web scraping. They were identified by searching the web or were already known to us through their popularity.

1 Scrapy

Scrapy is an open-source Python framework originally designed for web scraping; it also supports web crawling and extracting data via APIs. Data can be extracted with the built-in XPath or CSS selectors, or with external libraries such as BeautifulSoup and lxml. It also allows data to be stored in the cloud.

2 HtmlUnit

HtmlUnit is a headless browser written in Java and used for testing web applications. It supports commonly used browser functionality such as following links and filling out forms. It is also used for web scraping: because the objective is to mimic a “real” browser, HtmlUnit incorporates support for JavaScript, AJAX, and cookies.

3 Text pattern matching

A basic yet effective approach to extracting data from web pages is based on the UNIX grep command or on the regular-expression matching facilities of programming languages (for example, Perl or Python).
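As an illustration of text pattern matching, the following is a minimal sketch using Python's standard `re` module. The HTML snippet, the pattern, and the `extract_products` helper are made up for this example; real pages are rarely regular enough for this approach to be robust.

```python
import re

# Made-up page fragment; real markup is usually less regular than this.
PAGE = """
<div class="product"><span class="name">Keyboard</span><span class="price">$24.99</span></div>
<div class="product"><span class="name">Mouse</span><span class="price">$9.50</span></div>
"""

# One named group per field we want to pull out of each product block.
PRODUCT_RE = re.compile(
    r'<span class="name">(?P<name>[^<]+)</span>\s*'
    r'<span class="price">\$(?P<price>\d+\.\d{2})</span>'
)


def extract_products(html):
    """Return (name, price) pairs for every match in the given HTML text."""
    return [
        (match.group("name"), float(match.group("price")))
        for match in PRODUCT_RE.finditer(html)
    ]


products = extract_products(PAGE)
```

The pattern breaks as soon as the site reorders attributes or adds whitespace inside tags, which is one reason the parsing-based techniques listed next are usually preferred.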

4 HTTP programming

HTTP requests sent to the remote web server using socket programming can be used to retrieve both static and dynamic web pages.
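A rough sketch of HTTP programming over a raw socket is given below, using only the Python standard library. The function names and the `User-Agent` string are hypothetical. The sketch speaks plain HTTP on port 80 and does not handle HTTPS, redirects, or chunked transfer encoding, which a library such as requests would take care of.

```python
import socket


def build_get_request(host, path="/"):
    """Return the raw bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "User-Agent: example-scraper/0.1",  # hypothetical identifier
        "Accept: text/html",
        "Connection: close",  # ask the server to close after one response
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def split_response(raw):
    """Split a raw HTTP response into (status code, headers, body bytes)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status = int(status_line.split(" ", 2)[1])
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status, headers, body


def fetch(host, path="/", port=80, timeout=10.0):
    """Send the request over a TCP socket and read until the server closes."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return split_response(b"".join(chunks))
```

Calling `fetch("example.com")` would return the status code, a dictionary of response headers, and the undecoded body bytes.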

5 HTML parsing

Data of the same category are typically encoded into similar pages, generated from a database by a common script or template. In data mining, a wrapper is a program that detects such templates in a particular information source, extracts their content, and translates it into a relational form. HTML pages can also be parsed, and their content retrieved, using semi-structured data query languages such as XQuery and HTQL.
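To make the idea of a wrapper concrete, here is a small sketch built on `html.parser` from the Python standard library. It assumes a template that renders each record as a table row; the `TableWrapper` class and the sample data are illustrative only.

```python
from html.parser import HTMLParser


class TableWrapper(HTMLParser):
    """Turn the rows of an HTML table into tuples (a relational form)."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(tuple(self._row))
            self._row = None
            self._cell = None


def extract_rows(html):
    """Feed the HTML to the wrapper and return the collected tuples."""
    parser = TableWrapper()
    parser.feed(html)
    parser.close()
    return parser.rows


# Made-up page generated from a template: one <tr> per record.
TEMPLATE_PAGE = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Keyboard</td><td>24.99</td></tr>
  <tr><td>Mouse</td><td>9.50</td></tr>
</table>
"""

rows = extract_rows(TEMPLATE_PAGE)
```

Each tuple in `rows` corresponds to one record of the template, so the result can be written directly to a CSV file or a database table.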

6 DOM parsing

Browsers such as Mozilla or Internet Explorer parse web pages into a DOM tree, from which programs can retrieve parts of the pages. The resulting DOM tree can be queried using languages like XPath.
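The sketch below illustrates querying a parsed tree with XPath-style expressions. It uses `xml.etree.ElementTree` from the Python standard library as a stand-in for a browser DOM, so it assumes well-formed XHTML input and only the limited XPath subset that ElementTree supports. The markup is made up.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed XHTML; ElementTree cannot parse sloppy real-world HTML.
XHTML = """
<html>
  <body>
    <div id="listing">
      <ul>
        <li class="item"><a href="/p/1">Keyboard</a></li>
        <li class="item"><a href="/p/2">Mouse</a></li>
      </ul>
      <a class="next" href="/page/2">Next</a>
    </div>
  </body>
</html>
"""

root = ET.fromstring(XHTML)

# Every link inside a list item with class "item", anywhere in the tree.
item_links = root.findall(".//li[@class='item']/a")
names = [link.text for link in item_links]
hrefs = [link.get("href") for link in item_links]

# The pagination link, if the page has one.
next_link = root.find(".//a[@class='next']")
next_href = next_link.get("href") if next_link is not None else None
```

A real browser, or a tool that drives one, builds the tree from arbitrary HTML and supports full XPath, but the query style is the same.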

Web scraping using BeautifulSoup

The BeautifulSoup module is a Python library used to query and organize parsed HTML into data, using any of the parsers the module supports. BeautifulSoup can use the HTML parser included in Python's standard library, known as html.parser.

To get started, import BeautifulSoup and create a BeautifulSoup object, conventionally called ‘soup’. There are many ways to navigate the HTML using BeautifulSoup objects; among the most common are the find and find_all methods. Given a single tag name, find returns the first tag of that kind and find_all returns all of them. We can also provide a list of tag names, and the method will return anything matching any of them. Alternatively, we can specify attributes, such as id or class, and the method will return the tags matching that criterion.
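The calls described above might look as follows. This is a small sketch that assumes the third-party `beautifulsoup4` package is installed; the HTML snippet and variable names are made up.

```python
from bs4 import BeautifulSoup

# Made-up page fragment used only for this example.
HTML = """
<html><body>
  <h1 id="title">Laptops</h1>
  <ul>
    <li class="item">Model A</li>
    <li class="item sale">Model B</li>
  </ul>
  <p class="note">Prices exclude tax.</p>
</body></html>
"""

# Parse with the parser from Python's standard library.
soup = BeautifulSoup(HTML, "html.parser")

first_item = soup.find("li")           # first matching tag only
all_items = soup.find_all("li")        # every matching tag
mixed = soup.find_all(["h1", "p"])     # tags matching any name in the list
by_id = soup.find(id="title")          # lookup by id attribute
on_sale = soup.find_all(class_="sale")  # lookup by CSS class

item_names = [li.get_text() for li in all_items]
```

Note the trailing underscore in `class_`, which is needed because `class` is a reserved word in Python.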

III. Proposed Methodology and Implementation

The proposed work focuses on analyzing a web page and extracting the required visual blocks, which can be lists or unstructured tables, and storing these datasets in existing structured formats such as CSV, spreadsheets, or SQL databases using the respective Python libraries. Selenium web drivers are used to mimic human behavior and ease the extraction of large data sets and images. We have created a single script to perform the required scraping.

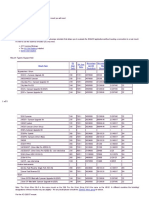

Fig.1. Web Scraping Timeline

Main tools used

1. Python (3.5).

2. Selenium library: for extracting text from a web page’s source code using element id, XPath expressions, or CSS selectors.

3. requests library: for handling the interaction with the web page using HTTP requests.

4. csv library: for storing extracted data.

5. Proxy and header rotation: generating random headers and obtaining free proxy IPs in order to avoid IP blocks.

Description of work

The research work is developed in Python, using HTML parsing, and runs on the Anaconda platform. The script is built on the Selenium library [5]. The site used for scraping contains instances of unstructured data, both with and without pagination. The experimental work consists of the following steps:

A. Installation of Python

B. Importing the Selenium web drivers and the requests and csv libraries

C. Execution of the script using Python

D. Persisting the generated structured data in the database.
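The paper does not name the database used in step D. As one possible sketch, the following persists scraped rows with `sqlite3` from the Python standard library; the table name, column names, and sample rows are assumptions made for this example.

```python
import sqlite3


def save_rows(conn, rows):
    """Insert (title, url) pairs, skipping URLs that are already stored."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS scraped_items (
            id    INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT NOT NULL,
            url   TEXT NOT NULL UNIQUE
        )
        """
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO scraped_items (title, url) VALUES (?, ?)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM scraped_items").fetchone()[0]


# In-memory database and made-up rows; the last row repeats the first URL.
conn = sqlite3.connect(":memory:")
sample_rows = [
    ("Keyboard", "https://example.com/p/1"),
    ("Mouse", "https://example.com/p/2"),
    ("Keyboard", "https://example.com/p/1"),
]
total = save_rows(conn, sample_rows)
```

The `UNIQUE` constraint combined with `INSERT OR IGNORE` keeps repeated runs of a scraper from storing the same page twice.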

Fig.2. Complete flow of web scraping

When the script is run, an instance of the Chrome web driver is started and the required output CSV files are initialized. The scraper then locates the data on the target page by element id or XPath and starts collecting it; the collected data is written to the output files by a CSV writer, under the corresponding headers. The script is designed to raise errors on a timeout or if the element id or XPath is missing.
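The authors' script is not reproduced in this copy, so the following is only a sketch of the flow described above, written against the Selenium 4 Python bindings. All function and parameter names (`scrape`, `table_id`, `next_xpath`, and so on) are hypothetical, and the page is assumed to hold its data in a table. Unlike the script described above, the sketch treats a missing "next" link as the end of pagination rather than as an error; a timeout while waiting for the element id still raises.

```python
import csv
import io


def write_rows(handle, header, rows):
    """Write a header row, then the scraped rows, to an open text file."""
    writer = csv.writer(handle)
    writer.writerow(header)
    writer.writerows(rows)


def scrape(start_url, table_id, next_xpath, out_path, header,
           max_pages=5, timeout_s=10):
    """Collect table rows page by page and store them in a CSV file."""
    # Imported lazily so write_rows() can be used without Selenium installed.
    from selenium import webdriver
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()  # assumes Chrome and a matching driver exist
    rows = []
    try:
        driver.get(start_url)
        for _ in range(max_pages):
            # Raises TimeoutException if the element id never appears.
            table = WebDriverWait(driver, timeout_s).until(
                EC.presence_of_element_located((By.ID, table_id))
            )
            for tr in table.find_elements(By.TAG_NAME, "tr"):
                cells = [td.text.strip()
                         for td in tr.find_elements(By.TAG_NAME, "td")]
                if cells:
                    rows.append(cells)
            try:
                next_link = driver.find_element(By.XPATH, next_xpath)
            except NoSuchElementException:
                break  # no "next" link: treat this as the last page
            driver.get(next_link.get_attribute("href"))
    finally:
        driver.quit()  # always release the browser

    with open(out_path, "w", newline="", encoding="utf-8") as handle:
        write_rows(handle, header, rows)
    return len(rows)


# The CSV step can be exercised on its own with an in-memory file.
buffer = io.StringIO()
write_rows(buffer, ["name", "price"], [["Keyboard", "24.99"], ["Mouse", "9.50"]])
csv_text = buffer.getvalue()
```

Following the link's `href` with `driver.get` instead of clicking it avoids reading a stale table while the next page is still loading.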

Fig.3. Initialization of selenium web driver

Fig.4. Extraction of data using element id

Fig.5. Extraction of next links using Xpath when paginated

The data files obtained from web scraping can further be used for machine learning and data analytics techniques.

IV. Discussion

When we start web scraping, we begin to appreciate the simple things that browsers do for us. The web browser is a very handy tool for generating and sending information packets from our computer and for interpreting the data we receive back from the server as text, images, etc. The internet includes many types of data sources that serve as a goldmine of interesting material. Unfortunately, the unstructured nature of the web makes it difficult to access or export this data. Modern browsers are excellent at showing visuals, displaying motion, and laying out websites in a pleasing style, but in most situations they do not offer a capability to export the data. Web scraping, instead of viewing the web page through the browser's interface, gathers the underlying data directly.

Nowadays many websites provide a service called an API, which gives access to their data, but most websites either do not provide an API or do not expose the functionality we require. In such cases, building a web scraper to gather the data can be handy.

Applications of Web Scraping:

● E-Commerce

● Finance

● Research

● Data Science

● Social Media

● Sales

V. Conclusion

Web scraping can be useful for gathering different types of data from websites, for either business or personal purposes, and there are many ways to do it. It is also necessary, however, to be mindful of the burden that a web scraper puts on the website, because irresponsible web scraping can have consequences. Consider running a script across the first 100 pages of a site: this would be an aggressive scraper, placing an unreasonably large strain on the website's servers and perhaps disrupting their operation. Web scraping also violates the terms and conditions of certain websites, and in such cases the website is likely to take action against the scraper.

Web Scraping Code of Conduct:

1. Do not unlawfully distribute downloaded materials.

2. Do not download copies of documents that are plainly not public.

3. Check whether the required data is already available.

4. Do not scrape aggressively; introduce delays in the scripts.

5. Check the applicable state laws before scraping any website.
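One way to introduce delays, as item 4 recommends, is sketched below. The `PoliteThrottle` class and its two-second default are assumptions made for illustration; a suitable interval depends on the site being scraped.

```python
import time


class PoliteThrottle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval_s=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock  # injectable so the class can be tested quickly
        self._sleep = sleep
        self._last = None    # time of the previous request, if any

    def wait(self):
        """Sleep if the previous request was too recent; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval_s - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept
```

In a scraping loop, `throttle.wait()` would be called immediately before each page request, so the first request goes out at once and later ones are spaced at least `min_interval_s` seconds apart.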

References

[1]. Anand V. Saurkar, Kedar G. Pathare, Shweta A. Gode, “An overview of web scraping techniques and tools,” International Journal on Future Revolution in Computer Science and Communication Engineering, April 2018.

[2]. Jiahao Wu, “Web Scraping using Python: Step by step guide,” ResearchGate publications, 2019.

[3]. Matthew Russell, “Using python for web scraping,” No Starch Press, 2012.

[4]. Ryan Mitchell, “Web scraping with Python,” O’Reilly Media, 2015.

[5]. Selenium Library: https://pypi.org/project/selenium/.

Sarah Fatima, et. al. “Web Scraping with Python and Selenium.” IOSR Journal of Computer Engineering

(IOSR-JCE), 23(3), 2021, pp. 01-05.
