Keras Tuner Configuration

Uploaded by anhtu12st

The document discusses Keras Tuner, which allows defining Keras models with tunable hyperparameters. It describes defining models with hyperparameters using functions or the HyperModel base class, available tuners for hyperparameter optimization, and how to define different types of hyperparameters including boolean, choice, float, integer, and conditional hyperparameters. Key methods for getting the best hyperparameters and models from the tuner are also outlined.

Configuration Keras Tuner

1. Define the model

- With function:

o Argument: hp, a keras_tuner.HyperParameters instance

- With HyperModel base class:

o Method: build(self, hp: keras_tuner.HyperParameters) -> Model

2. Tuners

Tuners available:

- RandomSearch

- GridSearch

- BayesianOptimization

- Hyperband

- Sklearn

Some important arguments:

- hypermodel: A HyperModel instance.

- objective: string | [string] | keras_tuner.Objective | [keras_tuner.Objective].

- max_trials: Optional integer, the total number of trials (model configurations) to test at most.

Some important methods:

- get_best_hyperparameters:

o best_hp = tuner.get_best_hyperparameters()[0]

o model = tuner.hypermodel.build(best_hp)

- get_best_models:

o tuner.get_best_models(num_models=1)

3. HyperParameters

hp = keras_tuner.HyperParameters()

Each hyperparameter defined with the methods below must have a unique name:

- hp.Boolean(name, default=False, parent_name=None, parent_values=None)

Choice between True and False.

- hp.Choice(name, values, ordered=None, default=None, parent_name=None, parent_values=None)

Choice of one value among a predefined set of possible values.

values: A list of possible values. Values must be int, float, str, or bool. All values must be of the same type.

- hp.Fixed(name, value, parent_name=None, parent_values=None)

Fixed, untunable value.

value: The value to use (can be any JSON-serializable Python type).

- hp.Float(name, min_value, max_value, step=None, sampling="linear", default=None, parent_name=None, parent_values=None)

Floating point value hyperparameter.

o min_value: Float, the lower bound of the range.

o max_value: Float, the upper bound of the range.

o step: Optional float, the distance between two consecutive samples in the range. If left unspecified, any value in the interval can be sampled. If sampling="linear", it is the minimum additive step between two samples. If sampling="log", it is the minimum multiplier between two samples.

o sampling: String. One of "linear", "log", "reverse_log". Defaults to "linear". Sampling always starts from a value in the range [0.0, 1.0); the sampling argument decides how that value is projected into the range [min_value, max_value]:

"linear": min_value + value * (max_value - min_value)

"log": min_value * (max_value / min_value) ^ value

"reverse_log": (max_value - min_value * ((max_value / min_value) ^ (1 - value) - 1))

- hp.Int(name, min_value, max_value, step=None, sampling="linear", default=None, parent_name=None, parent_values=None)

Integer hyperparameter.

o min_value: Integer, the lower limit of the range, inclusive.

o max_value: Integer, the upper limit of the range, inclusive.

o step: Optional integer, the distance between two consecutive samples in the range. If left unspecified, any integer in the interval can be sampled. If sampling="linear", it is the minimum additive step between two samples. If sampling="log", it is the minimum multiplier between two samples.

o sampling: String. One of "linear", "log", "reverse_log". Defaults to "linear". Sampling always starts from a value in the range [0.0, 1.0); the sampling argument decides how that value is projected into the range [min_value, max_value]:

"linear": min_value + value * (max_value - min_value)

"log": min_value * (max_value / min_value) ^ value

"reverse_log": (max_value - min_value * ((max_value / min_value) ^ (1 - value) - 1))

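- hp.conditional_scope(parent_name, parent_values)

Opens a scope for creating conditional hyperparameters, which are active only while the parent hyperparameter takes one of parent_values.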
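
The projection formulas listed for the sampling argument of hp.Float and hp.Int can be checked numerically. The sketch below is plain Python that transcribes those three formulas; the helper name project is hypothetical and is not part of the Keras Tuner API.

```python
def project(value, min_value, max_value, sampling="linear"):
    """Map value in [0.0, 1.0) into [min_value, max_value].

    Hypothetical helper that transcribes the formulas listed above.
    """
    if sampling == "linear":
        return min_value + value * (max_value - min_value)
    if sampling == "log":
        return min_value * (max_value / min_value) ** value
    if sampling == "reverse_log":
        return max_value - min_value * (
            (max_value / min_value) ** (1 - value) - 1
        )
    raise ValueError(f"Unknown sampling mode: {sampling!r}")


# The midpoint 0.5 lands in very different places for the range [1, 100].
linear_mid = project(0.5, 1.0, 100.0, "linear")        # 50.5
log_mid = project(0.5, 1.0, 100.0, "log")              # 10.0
reverse_mid = project(0.5, 1.0, 100.0, "reverse_log")  # 91.0
```

With "log", half of the samples fall between 1 and 10, which suits quantities such as learning rates; "reverse_log" mirrors this and concentrates samples near max_value.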

4. Highlighted examples

- Define the existence of a layer with hp.Boolean

- Define the choice of activation function with hp.Choice

- Define a tunable number of layers, giving each layer's hyperparameters a unique name

- Keep the model-building code separate from the hyperparameter definitions

You might also like

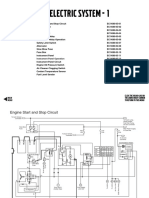

- Electric System - 1: Master Switch Battery RelayDocument18 pagesElectric System - 1: Master Switch Battery RelayMACHINERY101GEAR100% (9)

- Module 10 MCQ (Aviation Legislation) - 20170316-163430324Document5 pagesModule 10 MCQ (Aviation Legislation) - 20170316-163430324Jimmy HaddadNo ratings yet

- Designing, Evaluating, and Validating SOP TrainingDocument7 pagesDesigning, Evaluating, and Validating SOP Trainingramban11No ratings yet

- Unit 1 - Lab ProgramsDocument12 pagesUnit 1 - Lab Programsabisheik942No ratings yet

- Experiment 2 v2Document10 pagesExperiment 2 v2saeed wedyanNo ratings yet

- Shuffle (: New in Version 3.6. Changed in Version 3.9: Raises ADocument3 pagesShuffle (: New in Version 3.6. Changed in Version 3.9: Raises AwowomNo ratings yet

- DataFrame StatisticsDocument41 pagesDataFrame Statisticsrohan jhaNo ratings yet

- Exercise Sheet 6 SolutionDocument11 pagesExercise Sheet 6 SolutionSurya IyerNo ratings yet

- Python Solve CAT2Document17 pagesPython Solve CAT2Er Sachin ShandilyaNo ratings yet

- 00 Lab NotesDocument8 pages00 Lab Notesreddykavya2111No ratings yet

- Python: FinallyDocument10 pagesPython: FinallyMayur KashyapNo ratings yet

- Strings: Definition:: String Variable Name H E L L ODocument10 pagesStrings: Definition:: String Variable Name H E L L OSUGANYA NNo ratings yet

- Data Wrangling and PreprocessingDocument41 pagesData Wrangling and PreprocessingArchana BalikramNo ratings yet

- Day 4 AssignmentsDocument15 pagesDay 4 AssignmentslathaNo ratings yet

- TutorialDocument27 pagesTutorialxbsdNo ratings yet

- Static Utility Methods: Math ClassDocument4 pagesStatic Utility Methods: Math Classnirmala bogireddyNo ratings yet

- Python Basics: #This Is A Comment. #This Is Also A Comment. There Are No Multi-Line CommentsDocument19 pagesPython Basics: #This Is A Comment. #This Is Also A Comment. There Are No Multi-Line CommentsketulpatlNo ratings yet

- Random: - Generate Pseudo-Random NumbersDocument10 pagesRandom: - Generate Pseudo-Random NumbersNikita AgrawalNo ratings yet

- Art - Attacks.evasion - Zoo - Adversarial Robustness Toolbox 1.2.0 DocumentationDocument12 pagesArt - Attacks.evasion - Zoo - Adversarial Robustness Toolbox 1.2.0 DocumentationNida AmaliaNo ratings yet

- Intro To ForecastingDocument15 pagesIntro To ForecastingtrickledowntheroadNo ratings yet

- Experiment No 8Document7 pagesExperiment No 8Aman JainNo ratings yet

- EE2211 CheatSheetDocument15 pagesEE2211 CheatSheetAditiNo ratings yet

- Functions For Integers: Randrange RandrangeDocument138 pagesFunctions For Integers: Randrange Randrangelibin_paul_2No ratings yet

- ResamplingDocument7 pagesResamplingPower AgeNo ratings yet

- Sample Project Report StructureDocument5 pagesSample Project Report StructureRodrigo FarruguiaNo ratings yet

- JSQuery ProgrammingDocument25 pagesJSQuery ProgrammingStephen WoodardNo ratings yet

- Python CheatsheetDocument22 pagesPython Cheatsheettrget0001No ratings yet

- Problem 4 - Assignment 1Document3 pagesProblem 4 - Assignment 1Anand BharadwajNo ratings yet

- Data AnalysisDocument8 pagesData AnalysisTayar ElieNo ratings yet

- Informatics Practices Class 12 Cbse Notes Data HandlingDocument17 pagesInformatics Practices Class 12 Cbse Notes Data Handlingellastark0% (1)

- Expt 5Document20 pagesExpt 5Amisha SharmaNo ratings yet

- Architectures and Algorithms For DSP Systems (Crl702) : Centre For Applied Research in Electronics Iit DelhiDocument8 pagesArchitectures and Algorithms For DSP Systems (Crl702) : Centre For Applied Research in Electronics Iit DelhiRaj AryanNo ratings yet

- Implementing Custom Randomsearchcv: 'Red' 'Blue'Document1 pageImplementing Custom Randomsearchcv: 'Red' 'Blue'Tayub khan.ANo ratings yet

- Inpr Prelims CanvasDocument11 pagesInpr Prelims Canvasjayceelunaria7No ratings yet

- Homework 5: Fitting: Problem 1: Parity-Violating AsymmetryDocument7 pagesHomework 5: Fitting: Problem 1: Parity-Violating AsymmetryLevi GrantzNo ratings yet

- Bookkeeping Functions: RandomDocument16 pagesBookkeeping Functions: Randomlibin_paul_2No ratings yet

- Distributions DemoDocument28 pagesDistributions Demosid immanualNo ratings yet

- Utf 8''week4Document15 pagesUtf 8''week4devendra416No ratings yet

- Sensitivity Analyses: A Brief Tutorial With R Package Pse, Version 0.1.2Document14 pagesSensitivity Analyses: A Brief Tutorial With R Package Pse, Version 0.1.2Kolluru HemanthkumarNo ratings yet

- Mass Property FunctionsDocument2 pagesMass Property FunctionsatmelloNo ratings yet

- Eviews HelpDocument7 pagesEviews HelpjehanmoNo ratings yet

- SummaryDocument8 pagesSummaryMahmoud AdelNo ratings yet

- This Code Fragment Defines A Single Layer With Artificial Neurons, and It Expects Input VariablesDocument9 pagesThis Code Fragment Defines A Single Layer With Artificial Neurons, and It Expects Input Variablescrazzy 8No ratings yet

- This Code Fragment Defines A Single Layer With Artificial Neurons, and It Expects Input VariablesDocument9 pagesThis Code Fragment Defines A Single Layer With Artificial Neurons, and It Expects Input Variablescrazzy 8No ratings yet

- StringDocument35 pagesStringDaniel PioquintoNo ratings yet

- Autoencoder - MPL - Basic - Ipynb - Colaboratory PDFDocument21 pagesAutoencoder - MPL - Basic - Ipynb - Colaboratory PDFushasreeNo ratings yet

- You Will Follow The Same Logic As Lab 7 For Menu Driven Program To Ensure That The User Does Not Enter Wrong OptionsDocument2 pagesYou Will Follow The Same Logic As Lab 7 For Menu Driven Program To Ensure That The User Does Not Enter Wrong OptionsAdilMuhammadNo ratings yet

- Simple Guide To Optuna For Hyperparameters OptimizationTuningDocument29 pagesSimple Guide To Optuna For Hyperparameters OptimizationTuningRadha KilariNo ratings yet

- Func Shaper - User ManualDocument4 pagesFunc Shaper - User ManualErpel GruntzNo ratings yet

- Rabin-Karp Algorithm For Pattern Searching: ExamplesDocument5 pagesRabin-Karp Algorithm For Pattern Searching: ExamplesSHAMEEK PATHAKNo ratings yet

- Python Introduction 2019Document27 pagesPython Introduction 2019Daniel HockeyNo ratings yet

- Modul Praktikum SciPyDocument15 pagesModul Praktikum SciPyYobell Kevin SiburianNo ratings yet

- Chapter 6 - Mathematical Library MethodsDocument6 pagesChapter 6 - Mathematical Library Methods6a03aditisinghNo ratings yet

- String NotesDocument5 pagesString Notesmkiran888No ratings yet

- NUMPY Basics: Computation and File I/O Using ArraysDocument9 pagesNUMPY Basics: Computation and File I/O Using ArraysTushar GoelNo ratings yet

- 28-Execution Plan Optimization Techniques Stroffek KovarikDocument49 pages28-Execution Plan Optimization Techniques Stroffek KovariksreekanthkataNo ratings yet

- Python Programming Lectures.Document36 pagesPython Programming Lectures.SherazNo ratings yet

- Advance AI and ML LABDocument16 pagesAdvance AI and ML LABPriyanka PriyaNo ratings yet

- On TFIDF VectorizerDocument7 pagesOn TFIDF VectorizerBataan ShivaniNo ratings yet

- Session-5: Arithmetic Operations String OperationsDocument32 pagesSession-5: Arithmetic Operations String OperationsArun SaiNo ratings yet

- 3 Algorithm Analysis-2Document6 pages3 Algorithm Analysis-2Sherlen me PerochoNo ratings yet

- Numpy and PandasDocument11 pagesNumpy and PandasSuja MaryNo ratings yet

- Re-Thinking The Library PathfinderDocument13 pagesRe-Thinking The Library PathfinderMia YuliantariNo ratings yet

- Socio-Demographic Characteristics of Male Contraceptive Use in IndonesiaDocument6 pagesSocio-Demographic Characteristics of Male Contraceptive Use in IndonesiaBella ValensiaNo ratings yet

- PakikisamaDocument15 pagesPakikisamaJadsmon CaraballeNo ratings yet

- Trouble Shooting in Vacuum PumpDocument12 pagesTrouble Shooting in Vacuum Pumpj172No ratings yet

- Burkets Oral Medicine 13Th Edition Michael Glick Full ChapterDocument51 pagesBurkets Oral Medicine 13Th Edition Michael Glick Full Chapterdavid.drew550100% (3)

- SmartTrap Launcher ReceiverDocument4 pagesSmartTrap Launcher ReceiverKehinde AdebayoNo ratings yet

- Gas Turbine: Advanced Technology For Decentralized PowerDocument2 pagesGas Turbine: Advanced Technology For Decentralized PowerMarutisinh RajNo ratings yet

- A Convenient TruthDocument91 pagesA Convenient Truthamaleni22No ratings yet

- MSE250 Syllabus Fall2013Document4 pagesMSE250 Syllabus Fall2013AlekNo ratings yet

- Rap + Keb. Bahan. Hit 1Document173 pagesRap + Keb. Bahan. Hit 1ChairilSaniNo ratings yet

- The Little Book of BIMDocument37 pagesThe Little Book of BIMlinuxp97No ratings yet

- Adaptive Traffic Light Controller Using FPGADocument6 pagesAdaptive Traffic Light Controller Using FPGAshresthanageshNo ratings yet

- Be InspiredDocument50 pagesBe InspiredBenMaddieNo ratings yet

- 2019 Sleep Apnea Detection Based On Rician Modelling of Feature Variations in Multi Band EEGDocument9 pages2019 Sleep Apnea Detection Based On Rician Modelling of Feature Variations in Multi Band EEGYasrub SiddiquiNo ratings yet

- 3D Multizone Scaling Method of An NC Program For Sole Mould ManufacturingDocument5 pages3D Multizone Scaling Method of An NC Program For Sole Mould ManufacturingrajrythmsNo ratings yet

- LN - 10 - 47 - E-Learning Synoptic MeteorologyDocument30 pagesLN - 10 - 47 - E-Learning Synoptic MeteorologyPantulu MurtyNo ratings yet

- CBLM (Autosaved) - PREPARE SEAFOOD DISHES - CKDocument82 pagesCBLM (Autosaved) - PREPARE SEAFOOD DISHES - CKkopiko100% (5)

- Skimming Texts on GED RLADocument13 pagesSkimming Texts on GED RLAadkmelii06No ratings yet

- Eternalsun Spire - Whitepaper - Improving Uncertainty of Temperature Coefficients - v11 Hot Graphs TS SRDocument36 pagesEternalsun Spire - Whitepaper - Improving Uncertainty of Temperature Coefficients - v11 Hot Graphs TS SRShubham KumarNo ratings yet

- February 01, 2016 at 0731AMDocument5 pagesFebruary 01, 2016 at 0731AMJournal Star police documentsNo ratings yet

- Flowcharts PPT CADocument14 pagesFlowcharts PPT CAHarpreet KaurNo ratings yet

- UEW Referee Report Form - 67Document2 pagesUEW Referee Report Form - 67ambright mawunyoNo ratings yet

- Need Must Had Better Should Have To/have Got To Must Be Able To Can Used To Would Had To MustDocument5 pagesNeed Must Had Better Should Have To/have Got To Must Be Able To Can Used To Would Had To MustAlinaNo ratings yet

- Session-1: Unpacking Agenda 2030: 1.4. Categorization of 17 Sdgs Into 5PsDocument2 pagesSession-1: Unpacking Agenda 2030: 1.4. Categorization of 17 Sdgs Into 5PsPal GuptaNo ratings yet

- 1CH0 1H Rms 20220224Document31 pages1CH0 1H Rms 20220224Musyoka DanteNo ratings yet

- MTU16V4000DS2000 2000kW StandbyDocument4 pagesMTU16V4000DS2000 2000kW Standbyalfan nashNo ratings yet

- Auto-Tune 7.1.2 VST PC Notes: InstallationDocument4 pagesAuto-Tune 7.1.2 VST PC Notes: InstallationDeJa AustonNo ratings yet