Professional Documents

Culture Documents

22 - PDFsam - Escholarship UC Item 5qd0r4ws

22 - PDFsam - Escholarship UC Item 5qd0r4ws

Uploaded by

Mohammad0 ratings0% found this document useful (0 votes)

2 views1 pageOriginal Title

22_PDFsam_eScholarship UC item 5qd0r4ws

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

0 ratings0% found this document useful (0 votes)

2 views1 page22 - PDFsam - Escholarship UC Item 5qd0r4ws

22 - PDFsam - Escholarship UC Item 5qd0r4ws

Uploaded by

MohammadCopyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You are on page 1of 1

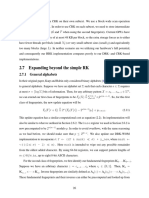

We provide an efficient implementation of a fully concurrent data structure (potentially an irregular workload itself) based on the per-thread assignment and per-warp processing approach.

6. By using the slab list, we implement a dynamic hash table for the GPU, the slab hash (Chapter 5).

7. We design a novel dynamic memory allocator, the SlabAlloc, to be used in the slab hash (Chapter 5).

As also summarized in Table 1.1, items 1 and 4 follow traditional per-thread assignment and per-thread processing. Items 2 and 3 follow per-warp assignment and per-warp processing. Items 5, 6, and 7 use per-thread assignment and per-warp processing (the WCWS strategy).
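To make "per-thread assignment and per-warp processing" concrete, the following is a minimal host-side C++ model of one 32-lane warp, not the implementation from this work. It assumes a simple task (searching one 32-element slab for a key); the names `warp_cooperative_search`, `WarpSearchResult`, `kWarpSize`, and the `pending` bitmask are hypothetical and introduced only for illustration. Each lane owns its own query, but the warp serves one lane's query per round, with every lane inspecting one slab element. On a device, the steps marked in the comments would typically map to the CUDA warp intrinsics `__ballot_sync`, `__ffs`, and `__shfl_sync`.

```cpp
#include <array>
#include <cstdint>

// Hypothetical host-side model of a single warp; all names are illustrative.
constexpr int kWarpSize = 32;

struct WarpSearchResult {
    std::array<int, kWarpSize> slot;  // slot[i]: slab index where lane i's key was found, or -1
    int rounds;                       // number of cooperative rounds the warp executed
};

// Per-thread assignment: lane i owns the query keys[i]; bit i of `pending`
// says whether that lane still has work.
// Per-warp processing: in each round the whole warp serves one lane's query.
WarpSearchResult warp_cooperative_search(const std::array<uint32_t, kWarpSize>& slab,
                                         const std::array<uint32_t, kWarpSize>& keys,
                                         uint32_t pending) {
    WarpSearchResult result{};
    result.slot.fill(-1);
    result.rounds = 0;

    while (pending != 0) {
        // Pick the lowest-numbered lane that still has work
        // (on a device: __ffs(__ballot_sync(mask, has_work)) - 1).
        int src = 0;
        while (((pending >> src) & 1u) == 0) ++src;

        // Broadcast that lane's key to every lane (on a device: __shfl_sync).
        const uint32_t key = keys[src];

        // Each lane compares its own slab element against the broadcast key;
        // the votes are gathered into one bitmask (on a device: __ballot_sync).
        uint32_t found = 0;
        for (int lane = 0; lane < kWarpSize; ++lane) {
            if (slab[lane] == key) found |= (1u << lane);
        }

        // The lowest matching lane, if any, gives the position of the key.
        if (found != 0) {
            int pos = 0;
            while (((found >> pos) & 1u) == 0) ++pos;
            result.slot[src] = pos;
        }

        // The served lane leaves the work queue.
        pending &= ~(1u << src);
        ++result.rounds;
    }
    return result;
}
```

In this model the number of rounds equals the number of lanes that had work, and each round touches a whole slab at once. On a GPU, such a slab access can be a single coalesced read by the warp, which is one plausible reason to prefer this pattern for irregular, per-thread workloads.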

1.2 Preliminaries: the Graphics Processing Unit

The Graphics Processing Unit (GPU) is a throughput-oriented programmable processor. It is designed to maximize overall throughput, even at the cost of higher latency for sequential operations. Throughout this section and this work, we focus on NVIDIA GPUs and CUDA as our parallel computing framework. More details can be found in the CUDA programming guide [78]. Lindholm et al. [63] and Nickolls et al. [76] also provide more details on GPU hardware and the GPU programming model, respectively.

1.2.1 CUDA Terminology

In CUDA, the CPU is referred to as the host and all available GPUs are devices. GPU programs (“kernels”) are launched from the host over a grid of numerous blocks (or thread-blocks); the GPU hardware maps blocks to available parallel cores (streaming multiprocessors (SMs)). The programmer has no control over the scheduling of blocks to SMs, so programs must not contain execution ordering assumptions. Each block typically consists of dozens to thousands of individual threads, which are arranged into 32-wide warps. CUDA v8.0 and all previous versions assume that all threads within a warp execute instructions in lockstep (i.e., physically parallel). Hence, if threads within a warp fetch different instructions to execute (e.g., by using branching statements), then those instructions are serialized: similar instructions are executed in a SIMD fashion and one
