Digital Communication

Uploaded by

shafignits_123


BE Semester- VI (EC) Question Bank

(Digital Communication)

All questions carry equal marks (10 marks)

Q.1 Write the advantages of digital communication over analog communication.

Q.2 Explain delta modulation and demodulation technique.

Q.3 Write a short note on PCM and explain the role of compander in PCM.

Q.4 Represent 100111010 using the following digital data formats:

(1) Polar RZ

(2) Bipolar NRZ

(3) AMI NRZ

(4) Split Phase Manchester

(5) On-Off

Q.5 What is ISI? Explain Nyquist’s first criterion for zero ISI.

Q.6 Explain the desirable properties of line codes. What is essential bandwidth?

Q.7 Find the PSD of on-off signalling and discuss the advantages and

disadvantages of on-off signalling.

Q.8 State and explain sampling theorem in detail. Also explain aliasing.

Q.9 A signal m(t) band-limited to 3 kHz is sampled at a rate 33.33% higher than

the Nyquist rate. The maximum acceptable error in the sample amplitude is

0.5% of the peak amplitude mp. The quantized samples are binary coded.

Find the minimum bandwidth of a channel required to transmit the encoded

binary signal. If 24 such signals are time-division multiplexed, determine the

minimum transmission bandwidth required to transmit the multiplexed signal.
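The arithmetic behind Q.9 can be sketched as follows. This is a minimal sketch, not a model answer: it assumes a uniform quantizer over (-mp, mp), so the worst-case error is mp/L for L levels, and it assumes a channel of bandwidth B Hz carries at most 2B bits per second. The function name `pcm_requirements` and its parameters are hypothetical, introduced only for illustration.

```python
from fractions import Fraction

def pcm_requirements(signal_bw_hz, oversample, max_error, channels=1):
    """Return (sampling rate, bits per sample, bit rate, minimum bandwidth)."""
    nyquist_rate = 2 * signal_bw_hz
    fs = nyquist_rate * (1 + oversample)
    # Assumed uniform quantizer over (-m_p, m_p) with L levels:
    # step = 2*m_p/L, so the worst-case error m_p/L must not exceed max_error*m_p.
    levels_needed = 1 / max_error
    # Binary coding: smallest number of bits n with 2**n >= levels_needed.
    bits = 0
    while 2 ** bits < levels_needed:
        bits += 1
    bit_rate = channels * bits * fs
    # Assumption: at most 2 bits/s can be sent per hertz of bandwidth.
    min_bw = bit_rate / 2
    return fs, bits, bit_rate, min_bw

# Exact fractions avoid rounding issues with 33.33% = 1/3 and 0.5% = 5/1000.
single = pcm_requirements(3000, Fraction(1, 3), Fraction(5, 1000))
tdm = pcm_requirements(3000, Fraction(1, 3), Fraction(5, 1000), channels=24)
print([float(v) for v in single])
print([float(v) for v in tdm])
```

Under these assumptions the single signal needs 8 bits per sample at 8 kHz, giving 64 kbit/s and a 32 kHz minimum bandwidth; 24 multiplexed signals need 768 kHz.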

Q.10 State and explain central limit theorem in detail.

Q.11 Explain : (1) Auto-correlation (2) Probability density function.

Q.12 Define CDF. Prove all the properties of CDF.

Q.13 (a) A binary source generates digits 1 and 0 randomly with equal probability.

Assign probabilities to the following events with respect to 10 digits

generated by the source : (1) there are exactly two 1s and eight 0s. (2) there

are at least four 0s.

(b) Consider an AWGN channel with 4-kHz bandwidth and the noise power

spectral density $\eta/2 = 10^{-12}$ W/Hz. The signal power required at the receiver

is 0.1 mW. Calculate the capacity of this channel.
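Both parts of Q.13 reduce to short calculations, sketched below. Part (a) assumes the 10 digits are independent, so the number of 1s is binomial with p = 1/2. Part (b) assumes the noise power in the band is N = ηB (twice the two-sided density times the bandwidth) and uses the Shannon capacity C = B log2(1 + S/N). The variable names are illustrative only.

```python
from math import comb, log2

# Part (a): 10 independent equiprobable binary digits.
n = 10
p_two_ones = comb(n, 2) / 2 ** n
p_at_least_four_zeros = sum(comb(n, k) for k in range(4, n + 1)) / 2 ** n

# Part (b): AWGN channel capacity.
bandwidth_hz = 4000
noise_psd = 1e-12          # eta/2 in W/Hz (two-sided density)
signal_power = 0.1e-3      # 0.1 mW at the receiver
noise_power = 2 * noise_psd * bandwidth_hz  # N = eta * B
capacity = bandwidth_hz * log2(1 + signal_power / noise_power)

print(p_two_ones, p_at_least_four_zeros)
print(round(capacity), "bit/s")
```

With these assumptions the probabilities are 45/1024 and 53/64, the signal-to-noise ratio is 12500, and the capacity is roughly 54.4 kbit/s.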

Q.14 Define : mean, moment and variance of a random variable X.

Q.15 Define entropy. Show that the entropy is maximum when all messages are

equiprobable.

Q.16 A source emits seven messages with probabilities 1/3, 1/3, 1/9, 1/9, 1/27, 1/27 and 1/27 respectively. Find the entropy of the source and a compact binary code, and also find the average length of the codeword. Determine the efficiency and redundancy of this code.

Q.17 Derive channel capacity of a binary symmetric channel.

Q.18 Discuss error-free communication over a noisy channel.

Q.19 A memoryless source emits six messages with probabilities 0.3, 0.25, 0.15,

0.12, 0.1 and 0.08. Find the 4-ary Huffman code. Determine its average word

length, the efficiency and the redundancy.
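One way to check a hand-built answer to Q.19 is to compute the codeword lengths of an r-ary Huffman code programmatically. The sketch below is an assumption-laden illustration: it pads the source with zero-probability dummy messages until the count has the form r + k(r - 1), then repeatedly merges the r least probable entries. The helper `huffman_lengths` is hypothetical, and efficiency is taken as H/L with the entropy H measured in r-ary units.

```python
import heapq
from math import log

def huffman_lengths(probs, r=2):
    """Return codeword lengths of an r-ary Huffman code for the given probabilities."""
    n = len(probs)
    # Pad with zero-probability dummies so the total count is r + k(r - 1).
    pad = 0
    while (n + pad - 1) % (r - 1) != 0:
        pad += 1
    # Heap entries: (probability, tie-breaker, indices of original messages).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heap += [(0.0, n + j, []) for j in range(pad)]
    heapq.heapify(heap)
    lengths = [0] * n
    counter = n + pad
    while len(heap) > 1:
        total, members = 0.0, []
        for _ in range(r):
            p, _tie, syms = heapq.heappop(heap)
            total += p
            members += syms
        # Every message under the merged node gains one code digit.
        for s in members:
            lengths[s] += 1
        heapq.heappush(heap, (total, counter, members))
        counter += 1
    return lengths

probs = [0.3, 0.25, 0.15, 0.12, 0.1, 0.08]
lengths = huffman_lengths(probs, r=4)
avg_len = sum(p * l for p, l in zip(probs, lengths))
entropy_4ary = -sum(p * log(p, 4) for p in probs)
efficiency = entropy_4ary / avg_len
redundancy = 1 - efficiency
print(lengths, avg_len, efficiency, redundancy)
```

For these probabilities the three most likely messages get one-digit codewords and the other three get two digits, so the average length is 1.3 4-ary digits and the efficiency comes out at about 0.93.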

Q.20 Construct a (7, 4) cyclic code using generator polynomial $g(x) = x^3 + x^2 + 1$.

Prepare a suitable decoding table. Decode the following received vectors: (a)

1101101 (b) 0101000.
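The construction and syndrome decoding in Q.20 can be sketched with polynomials over GF(2) stored as integers. The sketch assumes a systematic code (data bits first, parity bits last), assumes received vectors are written with the highest-degree coefficient on the left, and corrects single-bit errors only. The names `poly_mod`, `encode`, `decode` and `SYNDROME_TABLE` are hypothetical.

```python
G_POLY = 0b1101  # g(x) = x^3 + x^2 + 1, highest-degree coefficient first
N, K = 7, 4

def poly_mod(a, g=G_POLY):
    """Remainder of a(x) divided by g(x) over GF(2); polynomials are ints."""
    while a.bit_length() >= g.bit_length():
        a ^= g << (a.bit_length() - g.bit_length())
    return a

def encode(data):
    """Systematic codeword: x^(n-k) d(x) plus its remainder modulo g(x)."""
    shifted = data << (N - K)
    return shifted | poly_mod(shifted)

# Decoding table: syndrome of each single-bit error pattern x^i.
SYNDROME_TABLE = {poly_mod(1 << i): 1 << i for i in range(N)}

def decode(received):
    """Return (corrected codeword, data bits), assuming at most one bit error."""
    syndrome = poly_mod(received)
    corrected = received ^ SYNDROME_TABLE.get(syndrome, 0)
    return corrected, corrected >> (N - K)

for r in (0b1101101, 0b0101000):
    codeword, data = decode(r)
    print(f"{r:07b} -> codeword {codeword:07b}, data {data:04b}")
```

Under these conventions 1101101 has syndrome 101 and is corrected to 1100101 (data 1100), while 0101000 has syndrome 110 and is corrected to 1101000 (data 1101).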

Q.21 For a (6,3) code, the generator matrix G is

$$G = \begin{bmatrix} 1 & 0 & 0 & 1 & 0 & 1 \\ 0 & 1 & 0 & 0 & 1 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix}$$

For all eight possible data words, find the corresponding codewords and verify that this code is a single-error-correcting code.
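The codeword list for Q.21 can be generated and checked mechanically. The sketch below assumes the usual convention c = dG with arithmetic modulo 2, and uses the fact that for a linear code the minimum distance equals the smallest weight of a nonzero codeword; a code with minimum distance d_min corrects up to (d_min - 1)/2 errors, rounded down. The names `encode` and `codebook` are illustrative.

```python
from itertools import product

G = [
    [1, 0, 0, 1, 0, 1],
    [0, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 1, 0],
]

def encode(data, G):
    """Codeword c = dG over GF(2)."""
    n = len(G[0])
    return tuple(sum(d * row[j] for d, row in zip(data, G)) % 2 for j in range(n))

# All eight data words and their codewords.
codebook = {d: encode(d, G) for d in product((0, 1), repeat=len(G))}

# Minimum distance of a linear code = minimum weight of a nonzero codeword.
d_min = min(sum(c) for c in codebook.values() if any(c))
t = (d_min - 1) // 2

for d, c in codebook.items():
    print("".join(map(str, d)), "->", "".join(map(str, c)))
print("d_min =", d_min, "correctable errors t =", t)
```

The minimum distance comes out as 3, so t = 1, which is consistent with the single-error-correcting claim in the question.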

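Q.22 A binary channel matrix, with inputs x1, x2 as rows and outputs y1, y2 as columns, is given by

$$\begin{array}{c|cc} & y_1 & y_2 \\ \hline x_1 & 2/3 & 1/3 \\ x_2 & 1/10 & 9/10 \end{array}$$

with Px(x1) = 1/3 and Px(x2) = 2/3. Determine H(x), H(x|y), H(y), H(y|x) and I(x;y).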
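The quantities asked for in Q.22 can be checked numerically. The sketch below assumes the matrix entries are the transition probabilities P(y|x), with rows indexed by inputs (each row sums to 1), and measures all entropies in bits. It uses the identities I(x;y) = H(y) - H(y|x) and H(x|y) = H(x) - I(x;y); the variable names are illustrative.

```python
from math import log2

P_X = [1 / 3, 2 / 3]
P_Y_GIVEN_X = [
    [2 / 3, 1 / 3],    # row for input x1
    [1 / 10, 9 / 10],  # row for input x2
]

def entropy(dist):
    """Entropy in bits of a discrete distribution."""
    return -sum(p * log2(p) for p in dist if p > 0)

# Output distribution: P(y_j) = sum_i P(x_i) P(y_j | x_i).
p_y = [sum(P_X[i] * P_Y_GIVEN_X[i][j] for i in range(2)) for j in range(2)]

h_x = entropy(P_X)
h_y = entropy(p_y)
h_y_given_x = sum(P_X[i] * entropy(P_Y_GIVEN_X[i]) for i in range(2))
mutual_info = h_y - h_y_given_x
h_x_given_y = h_x - mutual_info

print(f"H(x)={h_x:.3f} H(y)={h_y:.3f} H(y|x)={h_y_given_x:.3f}")
print(f"I(x;y)={mutual_info:.3f} H(x|y)={h_x_given_y:.3f}")
```

With this reading of the matrix the output probabilities are 13/45 and 32/45, and the mutual information is about 0.249 bit per symbol.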

Q.23 “Hamming bound is a necessary but not sufficient condition for higher error correcting codes whereas it is a necessary and sufficient condition for single error correcting codes”. Justify.

Q.24 Describe the procedure of encoding and decoding of linear block codes.

Q.25 Explain the Viterbi decoding method for convolutional codes.

Q.26 Write a short note on burst error detecting and correcting codes.

Q.27 “When random variables are independent, they are uncorrelated. However

the fact that they are uncorrelated does not ensure that they are

independent”. Justify.

Q.28 Write short notes on : (a) Regenerative repeaters (b) Eye diagram and its

applications.

Q.29 Derive the expression for signal to quantization noise ratio in a PCM system.

Q.30 Explain QPSK with waveforms, constellation diagram and mathematical

representation.

Q.31 Answer the following :

(a) State the condition for orthogonality in case of signals.

(b) Define a Gaussian random process.

(c) What are the conditions for detecting and correcting errors up to ‘t’ in a

code word?

Q.32 State and explain Chebyshev’s inequality.

Q.33 Explain the matched filter and correlation receiver for optimum threshold

detection.

Q.34 Compare different types of signalling in terms of bit error probability for

optimum binary detection.

Q.35 Derive the equation for bit error probability for optimum binary receiver.

Q.36 Explain non-coherent detection of ASK with required block diagram.

Q.37 Describe the exchange between the SNR and the transmission bandwidth

using the channel capacity equation.

Q.38 Explain the concept of M-ary communication with suitable examples.

Q.39 Describe the carrier recovery and symbol synchronization in M-ary PSK

receiver.

Q.40 Write a short note on differentially coherent FSK detection.

You might also like

- Digital Communication Uq 2011Document30 pagesDigital Communication Uq 2011shankarNo ratings yet

- Multiple Choice Questions For Digital Signal ProcessingDocument128 pagesMultiple Choice Questions For Digital Signal Processingpriya100% (2)

- A G1002 Pages: 2: Answer Any Two Full Questions, Each Carries 15 MarksDocument2 pagesA G1002 Pages: 2: Answer Any Two Full Questions, Each Carries 15 MarksAdithyan JNo ratings yet

- Iii Ece I SemDocument45 pagesIii Ece I SemMacharla DevikaNo ratings yet

- 9A04601 Digital CommunicationsDocument4 pages9A04601 Digital Communicationsraju.kprr8862No ratings yet

- DC Assignments - 18-19Document4 pagesDC Assignments - 18-19Allanki Sanyasi RaoNo ratings yet

- JNTU Digital Communications QuestionsDocument8 pagesJNTU Digital Communications QuestionsAkbarSabNo ratings yet

- Question Bank - NewDocument5 pagesQuestion Bank - NewJeevanreddy MamillaNo ratings yet

- 13A04502 Digital Communication SystemsDocument2 pages13A04502 Digital Communication SystemsMallikarjuna Rao YamarthyNo ratings yet

- Advanced Digital Communication1 December 2011Document2 pagesAdvanced Digital Communication1 December 2011Sneha UpadhyayulaNo ratings yet

- ECE4313 Worksheet EndsemDocument5 pagesECE4313 Worksheet EndsemBereketeab ZinabuNo ratings yet

- Anna University Exams Nov Dec 2019 - Regulation 2017 Ec8501 Digital Communication Part B & Part C QuestionsDocument2 pagesAnna University Exams Nov Dec 2019 - Regulation 2017 Ec8501 Digital Communication Part B & Part C QuestionsMohanapriya.S 16301No ratings yet

- Question Paper Code:: (10×2 20 Marks)Document3 pagesQuestion Paper Code:: (10×2 20 Marks)krithikgokul selvam100% (1)

- Digital Communications (DCOM)Document4 pagesDigital Communications (DCOM)Mamotete MohohloNo ratings yet

- DC aDCghskjskksks FjsDocument4 pagesDC aDCghskjskksks FjsSaiteja GundapuNo ratings yet

- Digital CommunicationsgDocument8 pagesDigital Communicationsgksln100% (1)

- Department of Electronics and Communication Sb-Bit, Meerut Digital Communication (Eec-601) TUTORIAL-1 (Unit-5)Document5 pagesDepartment of Electronics and Communication Sb-Bit, Meerut Digital Communication (Eec-601) TUTORIAL-1 (Unit-5)gkhanna_3No ratings yet

- Digital Communication Exam QuestionsDocument63 pagesDigital Communication Exam QuestionsShubham KingeNo ratings yet

- Question Bank - DCDocument3 pagesQuestion Bank - DCDivya KarthikeyanNo ratings yet

- DCOM Question Bank - Final2Document4 pagesDCOM Question Bank - Final2Prisha SinghaniaNo ratings yet

- 2021 Dec. ECT305-ADocument3 pages2021 Dec. ECT305-ADinil DhananjayanNo ratings yet

- Ect305 Analog and Digital Communication, December 2022Document3 pagesEct305 Analog and Digital Communication, December 2022Dinil DhananjayanNo ratings yet

- Question Paper Code:: Reg. No.Document3 pagesQuestion Paper Code:: Reg. No.krithikgokul selvamNo ratings yet

- CRCDocument2 pagesCRCrachna009No ratings yet

- CU7102 Advanced Digital Communication Techniques Question BankDocument11 pagesCU7102 Advanced Digital Communication Techniques Question BankSuresh HakunamatataNo ratings yet

- Gujarat Technological University: InstructionsDocument26 pagesGujarat Technological University: Instructionsdilawar sumraNo ratings yet

- Dcom QuestionDocument3 pagesDcom Questionsushant sahooNo ratings yet

- DC Assignment 2019 PDFDocument2 pagesDC Assignment 2019 PDFRashmi VermaNo ratings yet

- List of Important QuestionsDocument6 pagesList of Important Questions4025 deeptika pNo ratings yet

- G.L.BAJAJ INSTITUTE TECHNOLOGY & MANAGEMENT DEPARTMENT ELECTRICAL & ELECTRONICS ENGINEERING TUTORIAL SHEETDocument2 pagesG.L.BAJAJ INSTITUTE TECHNOLOGY & MANAGEMENT DEPARTMENT ELECTRICAL & ELECTRONICS ENGINEERING TUTORIAL SHEETPriyanka DattaNo ratings yet

- Digital CommunicationDocument88 pagesDigital CommunicationMrs. Sahana K AdyanthayaNo ratings yet

- 3232 Question PaperDocument2 pages3232 Question PaperDASHRATH SINGHNo ratings yet

- Digital CommunicationsDocument11 pagesDigital Communicationsveeramaniks408No ratings yet

- Cs 307 Data CommunicationDocument4 pagesCs 307 Data CommunicationNithin vNo ratings yet

- Sixth Semester B.Tech. (Engineering) Degree Examination, December 2010Document2 pagesSixth Semester B.Tech. (Engineering) Degree Examination, December 2010Abhishek EkNo ratings yet

- Digital and Analog Communication Exam QuestionsDocument2 pagesDigital and Analog Communication Exam Questionsmehul03ecNo ratings yet

- Electronics and Communication Engineering VI SemesterDocument2 pagesElectronics and Communication Engineering VI SemesterRamNo ratings yet

- DC Question BankDocument6 pagesDC Question BankBha RathNo ratings yet

- 15A04502 Digital Communication Systems (1) - 1Document1 page15A04502 Digital Communication Systems (1) - 117BF1A04L7 kalyanNo ratings yet

- COMPUTER NETWORKS I QUESTION BANKDocument7 pagesCOMPUTER NETWORKS I QUESTION BANKrahupiNo ratings yet

- Question Paper Code:: Reg. No.Document2 pagesQuestion Paper Code:: Reg. No.vijaikirubaNo ratings yet

- Adc 1Document2 pagesAdc 1nithyajothiNo ratings yet

- T.E. (E & TC) : Q1) A) Explain With Help of Diagram The Formatting Steps For Textual, Analog andDocument4 pagesT.E. (E & TC) : Q1) A) Explain With Help of Diagram The Formatting Steps For Textual, Analog andaniruddhaphatak93No ratings yet

- DC Question BankDocument2 pagesDC Question BankAnanda HembramNo ratings yet

- Answer Any Two Full Questions, Each Carries 15 MarksDocument2 pagesAnswer Any Two Full Questions, Each Carries 15 MarksKrishnakumar K ANo ratings yet

- EC2311 Communication Engineering GuideDocument9 pagesEC2311 Communication Engineering GuideBharath RamanNo ratings yet

- APJ Abdul Kalam Tech University B.Tech Degree Exam Dec 2018 Information Theory & CodingDocument2 pagesAPJ Abdul Kalam Tech University B.Tech Degree Exam Dec 2018 Information Theory & CodingMkNo ratings yet

- DCDocument8 pagesDCharshit420No ratings yet

- 161001Document2 pages161001mehul03ecNo ratings yet

- 15A04502 Digital Communication Systems (2) - 1Document1 page15A04502 Digital Communication Systems (2) - 117BF1A04L7 kalyanNo ratings yet

- Course Material (AQP)Document2 pagesCourse Material (AQP)shijo monNo ratings yet

- rr322205 Communication SystemsDocument7 pagesrr322205 Communication SystemsSRINIVASA RAO GANTANo ratings yet

- QPDocument3 pagesQPAnonymous 9WJ7YeGNo ratings yet

- Time: 03 Hours Maximum Marks: 100 Instructions To CandidatesDocument2 pagesTime: 03 Hours Maximum Marks: 100 Instructions To CandidatesShravan G RamNo ratings yet

- Ec8501 DC Reg QPDocument2 pagesEc8501 DC Reg QPsaru priyaNo ratings yet

- QB EEE-5th Communication Theory RAVEEN YADAVDocument6 pagesQB EEE-5th Communication Theory RAVEEN YADAVPraveen YadavNo ratings yet

- DC1 PrepDocument2 pagesDC1 PrepPavankumar GopNo ratings yet

- FP Data CommDocument5 pagesFP Data CommExperza HillbertNo ratings yet

- Center For Continuing Education National Institute of TechnologyDocument1 pageCenter For Continuing Education National Institute of Technologyshafignits_123No ratings yet

- Question BankDocument3 pagesQuestion Bankshafignits_123No ratings yet

- Prof K V SubbarajuDocument26 pagesProf K V Subbarajushafignits_123No ratings yet

- WWW - Manaresults.co - In: Code No: R1631044Document2 pagesWWW - Manaresults.co - In: Code No: R1631044shafignits_123No ratings yet

- Certificate Last-Converted (2 Files Merged)Document85 pagesCertificate Last-Converted (2 Files Merged)shafignits_123No ratings yet

- Centre For Continuing Education: National Institute of Technology WarangalDocument1 pageCentre For Continuing Education: National Institute of Technology Warangalshafignits_123No ratings yet

- Center For Continuing Education National Institute of TechnologyDocument1 pageCenter For Continuing Education National Institute of Technologyshafignits_123No ratings yet

- Checklist 9o3PIS7Document1 pageChecklist 9o3PIS7shafignits_123No ratings yet

- Center For Continuing Education National Institute of TechnologyDocument1 pageCenter For Continuing Education National Institute of Technologyshafignits_123No ratings yet

- Ninth Class Model Paper (Ap) : Summative Assessment - IiDocument4 pagesNinth Class Model Paper (Ap) : Summative Assessment - Iishafignits_123No ratings yet

- Center For Continuing Eduction National Institute of TechnogyDocument1 pageCenter For Continuing Eduction National Institute of Technogyshafignits_123No ratings yet

- Application Form 07092020Document1 pageApplication Form 07092020shafignits_123No ratings yet

- Center For Continuing Education National Institute of TechnologyDocument1 pageCenter For Continuing Education National Institute of Technologyshafignits_123No ratings yet

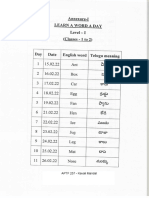

- 1,2 Classes-Learn A Word - Kavali BadiDocument2 pages1,2 Classes-Learn A Word - Kavali Badishafignits_123No ratings yet

- Ninth Class Model Paper (Ap) : Summative Assessment - Ii (2022)Document6 pagesNinth Class Model Paper (Ap) : Summative Assessment - Ii (2022)shafignits_123No ratings yet

- Ninth Class Model Paper (Ap) : Summative Assessment - Ii (2022)Document5 pagesNinth Class Model Paper (Ap) : Summative Assessment - Ii (2022)shafignits_123No ratings yet

- Ninth Class Model Paper (Ap) : Summative Assessment - Ii (2022)Document5 pagesNinth Class Model Paper (Ap) : Summative Assessment - Ii (2022)shafignits_123No ratings yet

- View AnswerDocument1 pageView Answershafignits_123No ratings yet

- Yogi Vemana University:: KadapaDocument16 pagesYogi Vemana University:: Kadapashafignits_123No ratings yet

- Yogi Vemana University, Kadapa-516005, A.P., India.: Appendix II-Table 2: Academic/Research ScoreDocument4 pagesYogi Vemana University, Kadapa-516005, A.P., India.: Appendix II-Table 2: Academic/Research Scoreshafignits_123No ratings yet

- Yogi Vemana University, Kadapa-516005, A.P., India.: Appendix II-Table 2: Academic/Research ScoreDocument4 pagesYogi Vemana University, Kadapa-516005, A.P., India.: Appendix II-Table 2: Academic/Research Scoreshafignits_123No ratings yet

- Yogi Vemana University:: KadapaDocument16 pagesYogi Vemana University:: Kadapashafignits_123No ratings yet

- Bocwwb Batch 4 Nominal RollDocument12 pagesBocwwb Batch 4 Nominal Rollshafignits_123No ratings yet

- Checklist for medical reimbursement claim submissionDocument1 pageChecklist for medical reimbursement claim submissionshafignits_123No ratings yet

- M.Tech Signal Processing Course DetailsDocument1 pageM.Tech Signal Processing Course Detailsshafignits_123No ratings yet

- View AnswerDocument1 pageView Answershafignits_123No ratings yet

- Speech Enhancement Using An Adaptive Wiener Filtering Approach M. A. Abd El-Fattah, M. I. Dessouky, S. M. Diab and F. E. Abd El-SamieDocument18 pagesSpeech Enhancement Using An Adaptive Wiener Filtering Approach M. A. Abd El-Fattah, M. I. Dessouky, S. M. Diab and F. E. Abd El-Samieshafignits_123No ratings yet

- AicDocument6 pagesAicSatwik JainNo ratings yet

- Amie FormDocument9 pagesAmie Formmakrand87No ratings yet

- AE SyllabusDocument3 pagesAE Syllabusprabhu2k8No ratings yet

- Onkyo TX-nr1010 SM PartsDocument202 pagesOnkyo TX-nr1010 SM PartsWalter CzyzyniewskiNo ratings yet

- Appnote VoceraDocument4 pagesAppnote Vocerajcy1978No ratings yet

- Assignment-Cn: Syeda Pakiza ImranDocument5 pagesAssignment-Cn: Syeda Pakiza Imrannauman tariqNo ratings yet

- Ae-4020 Service ManualDocument42 pagesAe-4020 Service ManualGabi GodoNo ratings yet

- Chapter 4 Tutorial StudentDocument4 pagesChapter 4 Tutorial StudentAzrif MoskamNo ratings yet

- Diagnostic Utilities TCP IPDocument1 pageDiagnostic Utilities TCP IPMir Farhan Ali AbediNo ratings yet

- Network SegregationDocument13 pagesNetwork SegregationsolbahiaNo ratings yet

- 3.1 Script - Rhel8 - BetaDocument4 pages3.1 Script - Rhel8 - BetaClatu RivasNo ratings yet

- UNIFUN Company ProfileDocument30 pagesUNIFUN Company ProfileConstantin GîlcaNo ratings yet

- Alcatel 7705 SAR-Hc DatasheetDocument3 pagesAlcatel 7705 SAR-Hc DatasheetEric WetterauerNo ratings yet

- Byrne, John: Advanced Signaling & Media Management - TelcobridgesDocument3 pagesByrne, John: Advanced Signaling & Media Management - TelcobridgesmsantacruzrNo ratings yet

- sx200 PDFDocument282 pagessx200 PDFidarNo ratings yet

- Huawei AC6005 Access Controllers Product Description PDFDocument48 pagesHuawei AC6005 Access Controllers Product Description PDFdexater007No ratings yet

- Cloud Security ChallengesDocument10 pagesCloud Security ChallengesAtul BarveNo ratings yet

- Analyzing Coverage With Propagation Delay-PD and Timing Advance - TA - GSM-WCDMA-LTEDocument20 pagesAnalyzing Coverage With Propagation Delay-PD and Timing Advance - TA - GSM-WCDMA-LTEAzmat GaadNo ratings yet

- Security Analysis As Software-Defined Security For SDN EnvironmentDocument6 pagesSecurity Analysis As Software-Defined Security For SDN EnvironmentForloopNo ratings yet

- Fabrication: Machine Setup in CAM: Learning ObjectivesDocument43 pagesFabrication: Machine Setup in CAM: Learning ObjectivesJohn ContrerasNo ratings yet

- ACFrOgADrA4dUBFymTmKRfSmh21uqQTorw8cNAb2SO32TbM5OlXyDt7NEwOU3AC0DhCebVN6yfJYF8ovy5as3X7Uh0-3gphEz jS67V0vcek4UTmJZ7XcPrsmaUoJLHxRRhy4wFvgkezkhnXY9ZK PDFDocument69 pagesACFrOgADrA4dUBFymTmKRfSmh21uqQTorw8cNAb2SO32TbM5OlXyDt7NEwOU3AC0DhCebVN6yfJYF8ovy5as3X7Uh0-3gphEz jS67V0vcek4UTmJZ7XcPrsmaUoJLHxRRhy4wFvgkezkhnXY9ZK PDFish kabir kumarNo ratings yet

- Multifunctional Ultrawideband AntennasDocument255 pagesMultifunctional Ultrawideband AntennasMarco Antonio Tg100% (2)

- Security+ Prep GuideDocument39 pagesSecurity+ Prep GuideavinashNo ratings yet

- Virtual Routing and Forwarding (VRF) Configuration HP SwitchDocument9 pagesVirtual Routing and Forwarding (VRF) Configuration HP SwitchAkhil Gupta100% (1)

- SUBSET-026-8 v340Document33 pagesSUBSET-026-8 v340Gonzalo CNNo ratings yet

- Cloud Security Platform and Threat Intelligence: Try Umbrella TodayDocument1 pageCloud Security Platform and Threat Intelligence: Try Umbrella TodayUmair AlamNo ratings yet

- Hytera-MD782G-Digital-Mobile-Radio-User-Manual-R8.5_engDocument35 pagesHytera-MD782G-Digital-Mobile-Radio-User-Manual-R8.5_engvincent amanteNo ratings yet

- Video & Voice Over IP Communications - A SIP-based Example: Barry AndrewsDocument23 pagesVideo & Voice Over IP Communications - A SIP-based Example: Barry AndrewsTejpal SinghNo ratings yet

- User Guide Intex - W300UAPDocument43 pagesUser Guide Intex - W300UAPmehul kothariNo ratings yet

- Selection and Ordering Data: V, 4 X I) I I I IDocument4 pagesSelection and Ordering Data: V, 4 X I) I I I ITuan Dang AnhNo ratings yet

- The Weather Channel - Wikipedia, The Free EncyclopediaDocument15 pagesThe Weather Channel - Wikipedia, The Free EncyclopediaThornNo ratings yet

- CCNA 1 Chapter 3 Exam AnswersDocument14 pagesCCNA 1 Chapter 3 Exam AnswersRazvan AlexandruNo ratings yet